什么是Sqoop?

Sqoop就是hadoop和mysql的一个中间介质 , 作用就是可以将hadoop中的数据传到mysql中 , 或将mysql中的数据导入到hadoop中

Sqoop的安装与配置

安装

详细代码

//解压安装

[root@hadoop soft]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@hadoop soft]# rm -rf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@hadoop soft]# ls

hadoop hive jdk mysql5.7 sqoop-1.4.7.bin__hadoop-2.6.0

[root@hadoop soft]# mv sqoop-1.4.7.bin__hadoop-2.6.0/ sqoop

[root@hadoop soft]# ls

hadoop hive jdk mysql5.7 sqoop

//配置环境变量

[root@hadoop sqoop]# vim /etc/profile.d/sqoop.sh

[root@hadoop sqoop]# cat /etc/profile.d/sqoop.sh

SQOOP_HOME=/usr/soft/sqoop

PATH=$PATH:$SQOOP_HOME/bin

CLASSPATH=$CLASSPATH:$SQOOP_HOME/lib

export SQOOP_HOME PATH CLASSPATH

[root@hadoop sqoop]# source /etc/profile.d/sqoop.sh

//修改配置文件

[root@hadoop sqoop]# cd conf

[root@hadoop conf]# ls

oraoop-site-template.xml sqoop-env-template.cmd sqoop-env-template.sh sqoop-site-template.xml sqoop-site.xml

[root@hadoop conf]# cp sqoop-env-template.sh sqoop-env.sh

[root@hadoop conf]# vim sqoop-env.sh

//下载jdbc驱动

[root@hadoop conf]# cd ..

[root@hadoop sqoop]# ls

bin CHANGELOG.txt conf ivy lib NOTICE.txt README.txt sqoop-patch-review.py src

build.xml COMPILING.txt docs ivy.xml LICENSE.txt pom-old.xml sqoop-1.4.7.jar sqoop-test-1.4.7.jar testdata

[root@hadoop sqoop]# cd lib

[root@hadoop lib]# ls

//这里能看到有mysql-connector-java-5.1.46.jar 即可

//验证Sqoop

[root@hadoop lib]# sqoop help

[root@hadoop lib]# sqoop list-databases --connect jdbc:mysql://localhost:3306/ --username root --password 1234

分步详解

- 解压

[root@hadoop soft]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

- 删除安装包

[root@hadoop soft]# rm -rf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

rm -rf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

- 改名

[root@hadoop soft]# mv sqoop-1.4.7.bin__hadoop-2.6.0/ sqoop

mv sqoop-1.4.7.bin__hadoop-2.6.0/ sqoop

- 环境配置

[root@hadoop sqoop]# vim /etc/profile.d/sqoop.sh

vim /etc/profile.d/sqoop.sh

//sqoop.sh内容

SQOOP_HOME=/usr/soft/sqoop

PATH=$PATH:$SQOOP_HOME/bin

CLASSPATH=$CLASSPATH:$SQOOP_HOME/lib

export SQOOP_HOME PATH CLASSPATH

- 配置完别忘了source一下

[root@hadoop sqoop]# source /etc/profile.d/sqoop.sh

source /etc/profile.d/sqoop.sh

修改配置文件

- 路径

cd /usr/soft/sqoop/conf

cd /usr/soft/sqoop/conf

- 复制配置文件

[root@hadoop conf]# cp sqoop-env-template.sh sqoop-env.sh

cp sqoop-env-template.sh sqoop-env.sh

- 修改配置文件

[root@hadoop conf]# vim sqoop-env.sh

vim sqoop-env.sh

//编辑的时候把#去掉(让代码生效)

sqoop依赖zookeeper,如果没有安装zookeeper可以先用默认的zookeeper

vim sqoop-env.sh

export HADOOP_COMMON_HOME=/usr/soft/hadoop

export HADOOP_MAPRED_HOME=/usr/soft/hadoop

export HIVE_HOME=/usr/soft/hive

//export ZOOKEEPER_HOME=/usr/soft/zookeeper

//export ZOOCFGDIR=/usr/soft/zookeeper

//export HBASE_HOME=/usr/soft/hbase

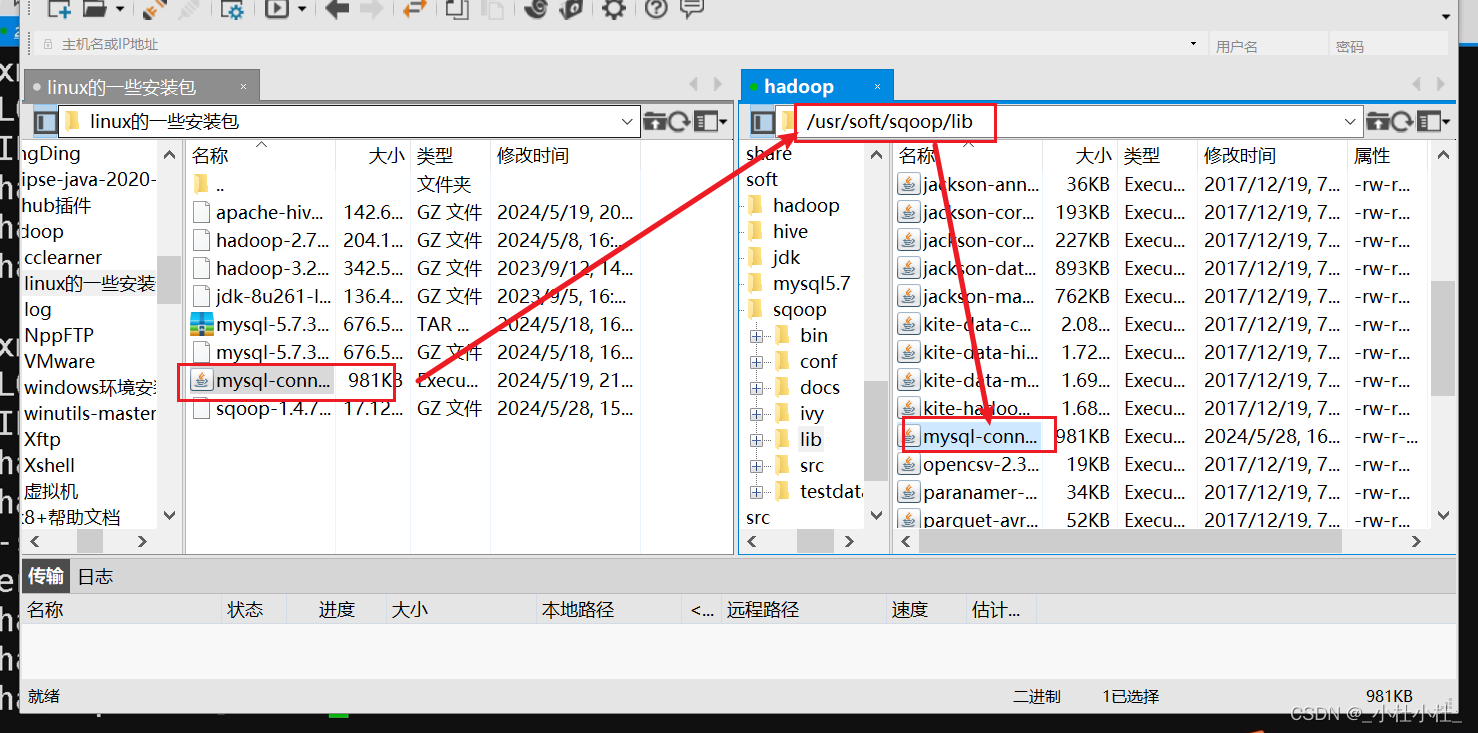

下载JDBC驱动

- 将jdbc驱动包上传到sqoop的lib目录下 :

测试

- 验证Sqoop(我们可以通过某一个command来验证sqoop配置是否正确:)

sqoop help

sqoop help

- 测试Sqoop是否能够成功连接数据库

//注意 : 要写自己的用户名和密码

sqoop list-databases --connect jdbc:mysql://localhost:3306/ --username root --password 1234

sqoop list-databases --connect jdbc:mysql://localhost:3306/ --username root --password 1234

- 出现如下测试成功

19/07/27 18:53:34 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

19/07/27 18:53:34 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

19/07/27 18:53:34 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hive_metadata

mysql

test

test2

尝试用sqoop导出数据到mysql中

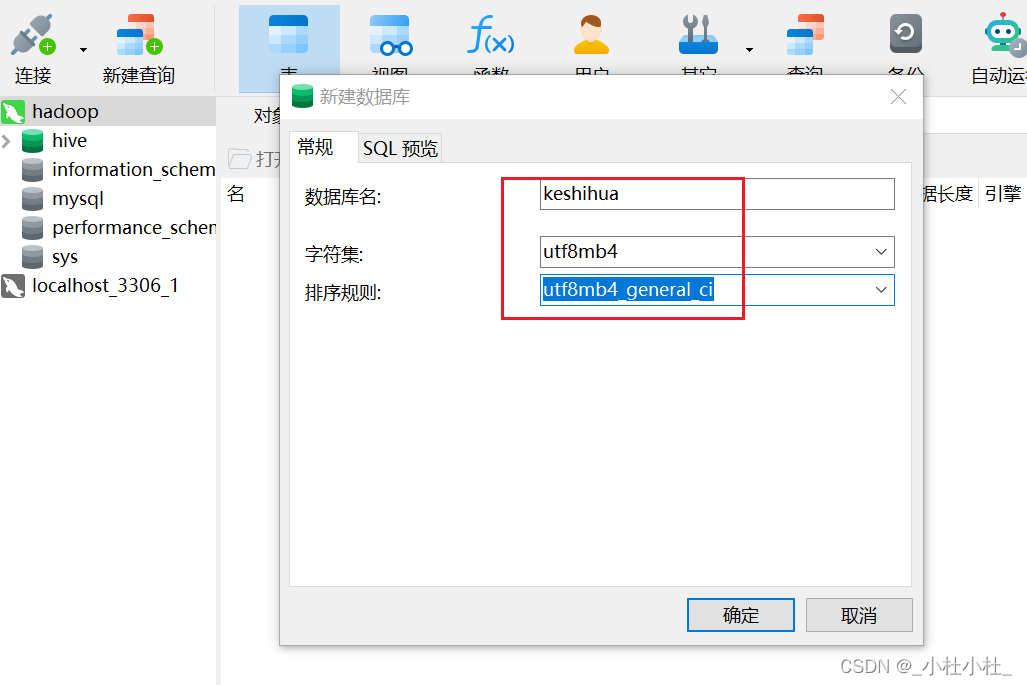

- 在mysql中创建一个新的数据库

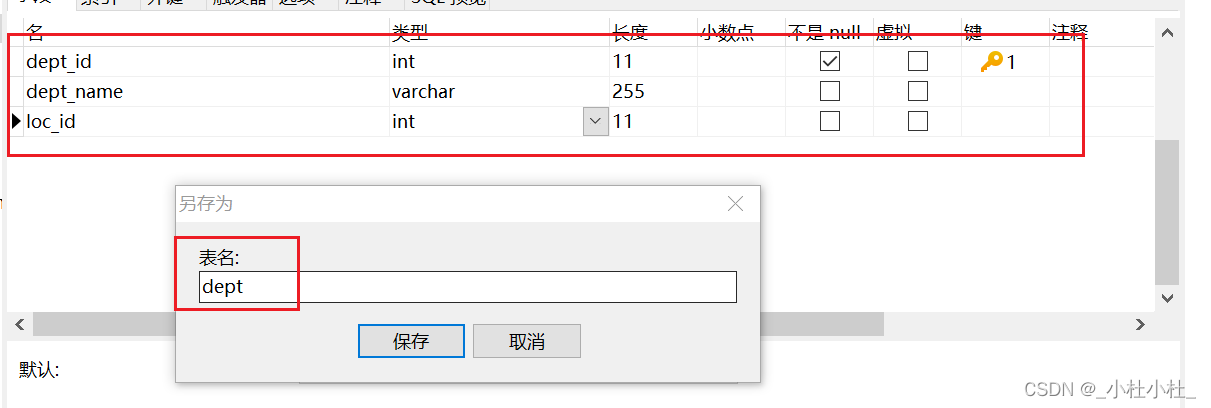

- 建表

- 数据准备

cd /usr/data

vim dept.txt

10 ACCOUNTING 1700

20 RESEARCH 1800

30 SALES 1900

40 OPENATIONS 1700

10 ACCOUNTING 1700

20 RESEARCH 1700

30 SALES 1900

40 OPENATIONS 1700

//上传到hdfs中

hdfs dfs -put dept.txt /user/hive/warehouse/dept

hdfs dfs -put dept.txt /user/hive/warehouse/dept

//打开hive

[root@hadoop ~]# hive

hive

//创建部门表

create external table if not exists default.dept( deptno int, dname string, loc int ) row format delimited fields terminated by '\t';

create external table if not exists default.dept(

deptno int,

dname string,

loc int

) row format delimited fields terminated by '\t';

//(从本地)导入数据到部门表中

load data local inpath '/user/data/dept.txt' into table default.dept;

load data local inpath '/user/data/dept.txt' into table default.dept;

//查询结果

select * from dept;

select * from dept;

//导入到本地的mysql中

bin/sqoop export \

--connect jdbc:mysql://localhost:3306/databaseName\

--username 用户名\

--password 密码\

--table tableName\

--fields-terminated-by "分隔方式" \

--export-dir hdfs中表的路径

bin/sqoop export \

--connect jdbc:mysql://localhost:3306/keshihua \

--username root \

--password 1234 \

--table dept \

--fields-terminated-by "\t" \

--export-dir /user/hive/warehouse/dept

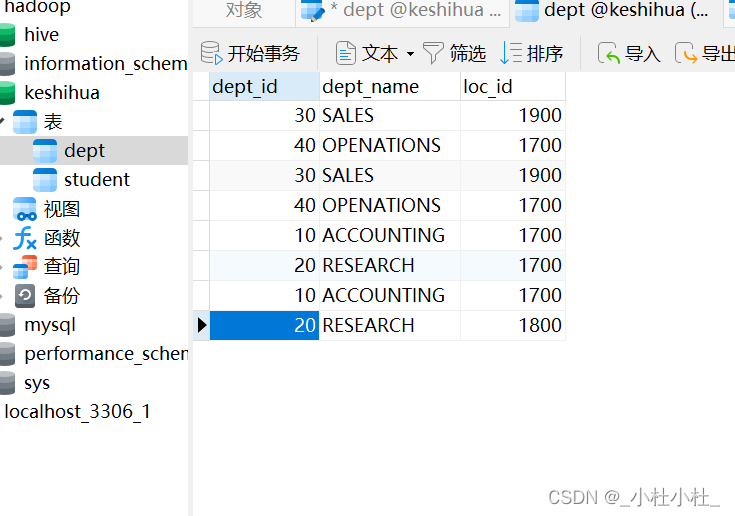

执行完 , 可以看到数据导入到mysql中了

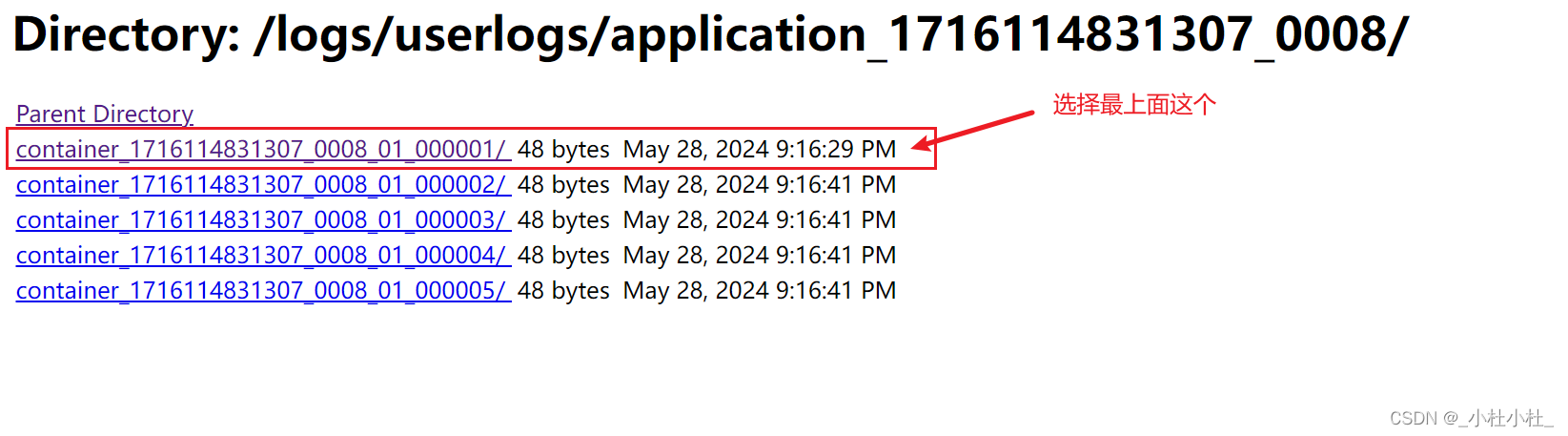

如果这里报错了

可以---->

主机IP号:50070/logs

---->到这个网址中 , 看自己的log日志(syslog)

hive建表和sqoop导入mysql的一些源代码

create external table if not exists default.salary

(age int,

gender string,

education string,

jobTitle string,

jobYears string,

salary decimal

) row format delimited fields terminated by ',';

sqoop export \

--connect jdbc:mysql://localhost:3306/keshihua \

--username root \

--password 1234 \

--table avgSalary \

--fields-terminated-by "/t" \

--export-dir /user/hive/warehouse/avgsalary

select avg(age) as avgAge,avg(jobYears) as avgJobYears,avg(salary) as avgSalary

from salary;

//相同年龄的平均工资

create table if not exists avgSalary(

age int comment '年龄',

avgSalary string comment '平均工资'

)comment '相同年龄的平均工资'

row format delimited fields terminated by '/t';

insert overwrite table avgSalary

select age,avg(salary) as avgSalary from salary

group by age;

sqoop export \

--connect jdbc:mysql://localhost:3306/keshihua \

--username root \

--password 1234 \

--table avgSalary \

--fields-terminated-by "/t" \

--export-dir /user/hive/warehouse/avgsalary \

--input-null-non-string '\\N'

//相同年限的平均工资

create table if not exists sameYearSalary(

jobYears string comment '工作年限',

avgSalary decimal comment '平均工资'

)comment '相同年限的平均工资'

row format delimited fields terminated by ',';

insert overwrite table sameYearSalary

select jobYears,avg(salary) as avgSalary from salary

group by jobYears;

sqoop export \

--connect jdbc:mysql://localhost:3306/keshihua \

--username root \

--password 1234 \

--table sameYearSalary \

--fields-terminated-by "," \

--export-dir /user/hive/warehouse/sameyearsalary \

--input-null-non-string '\\N'

//相同学历的平均工资

create table if not exists sameEducationSalary(

education string comment '学历',

avgSalary decimal comment '平均工资'

)comment '相同学历的平均工资'

row format delimited fields terminated by ',';

insert overwrite table sameEducationSalary

select education,avg(salary) as avgSalary from salary

group by education;

sqoop export \

--connect jdbc:mysql://localhost:3306/keshihua \

--username root \

--password 1234 \

--table sameEducationSalary \

--fields-terminated-by "," \

--export-dir /user/hive/warehouse/sameeducationsalary \

--input-null-non-string '\\N'

//相同性别的平均工资

create table if not exists sameGenderSalary(

gender string comment '性别',

avgSalary decimal comment '平均工资'

)comment '相同学历的平均工资'

row format delimited fields terminated by ',';

insert overwrite table sameGenderSalary

select gender,avg(salary) as avgSalary from salary

group by gender;

sqoop export \

--connect jdbc:mysql://localhost:3306/keshihua \

--username root \

--password 1234 \

--table sameGenderSalary \

--fields-terminated-by "," \

--export-dir /user/hive/warehouse/samegendersalary \

--input-null-non-string '\\N'

2484

2484

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?