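Update log: 2023.6.19, code optimization

I have been working on time series forecasting for two or three months now (with other things in between), going from learning Python to PyTorch, pandas, and other data-processing tools, and it has shown me how vast the ocean of knowledge is!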

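My learning has not been very systematic. Part of the reason may be paid content (not that paying for knowledge is bad, but there is so much of it that it is easy to waste money), and part of it is that I had not built a proper knowledge framework. I could not find good shared source code on GitHub for an LSTM project in PyTorch; there are many based on TensorFlow, but I was too lazy to spend time learning another framework, so I was stuck. Then I happened to see that the Informer model can be used for time series forecasting, so I went off to study Informer. For a beginner like me the code is a bit complex, and I could only skim it superficially (thanks to 羽星_s for sharing). I have to admire the people who proposed Self-Attention, and thanks to Haoyi Zhou's team for open-sourcing the project; the code is beautifully written!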

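After studying a lot of free material (many thanks to everyone who shares material for free), I wrote a simple LSTM implementation myself, using one of the datasets released by Haoyi Zhou's team (ETTm1). I decided to share it as a reference for others: never forget who dug the well, and the hand that gives a rose keeps its fragrance!

The code is very simple and not full-featured. Corrections are welcome, and I hope to exchange ideas with everyone!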

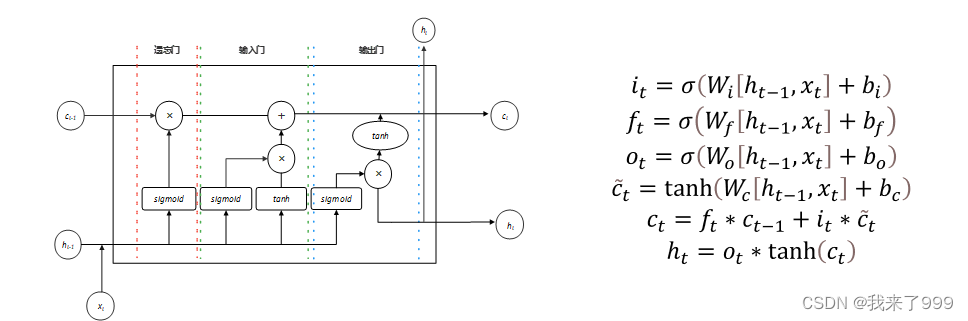

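As a beginner, I spent quite some time learning LSTMs before I could connect the model to the code. The key to mastering LSTM is being clear about the network's input and output shapes. I set `batch_first=True`, so the input is `input.shape = (batch_size, seq_len, input_dim)` and the output is `output.shape = (batch_size, seq_len, num_directions * hidden_size)`. In the figure below, a single LSTM cell has three inputs (`x_t`, `h_{t-1}`, `c_{t-1}`) but really only two outputs (`h_t` and `c_t`), because the "output" drawn in the figure is just `h_t`. In PyTorch terms, `output` stacks the top layer's `h_t` for every time step, so for a unidirectional LSTM `output[:, -1, :]` equals the last layer's final hidden state `h_n[-1]`. I think of the gates as weights: each gate is a vector of sigmoid values between 0 and 1 that sets how much of the old memory, the new candidate, and the cell state gets through. An LSTM has three gates (input, forget, output), while the GRU, another RNN variant, has only two (reset and update; I have not studied GRU). My source code also contains a GRU implementation, which I found on GitHub; I no longer remember who shared it, so I could not credit the source.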
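The shape rules above, and the "gates as weights" view, can be checked with a small sketch. It assumes the same sizes as the `Config` used later (7 input features, 32 time steps, hidden size 128, 2 layers, batch 64) with random inputs. The hand-computed cell step relies on PyTorch storing the four gate blocks of `weight_ih`/`weight_hh` in the order input, forget, cell candidate (g), output.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size, seq_len, input_dim, hidden_size, num_layers = 64, 32, 7, 128, 2

# 1) Shapes of nn.LSTM with batch_first=True
lstm = nn.LSTM(input_size=input_dim, hidden_size=hidden_size,
               num_layers=num_layers, batch_first=True)
x = torch.randn(batch_size, seq_len, input_dim)
output, (h_n, c_n) = lstm(x)
print(output.shape)  # torch.Size([64, 32, 128]) -> (batch, seq_len, num_directions * hidden_size)
print(h_n.shape)     # torch.Size([2, 64, 128])  -> (num_layers * num_directions, batch, hidden_size)

# Unidirectional LSTM: the last time step of `output` is the top layer's final h_t
last_step_matches = torch.allclose(output[:, -1, :], h_n[-1])

# 2) One cell step by hand: 3 inputs (x_t, h_prev, c_prev) -> 2 outputs (h_t, c_t)
cell = nn.LSTMCell(input_dim, hidden_size)
x_t = torch.randn(batch_size, input_dim)
h_prev = torch.randn(batch_size, hidden_size)
c_prev = torch.randn(batch_size, hidden_size)

with torch.no_grad():
    gates = (x_t @ cell.weight_ih.T + cell.bias_ih
             + h_prev @ cell.weight_hh.T + cell.bias_hh)
    i, f, g, o = gates.chunk(4, dim=1)
    i, f, o = torch.sigmoid(i), torch.sigmoid(f), torch.sigmoid(o)  # gate values in (0, 1)
    g = torch.tanh(g)              # candidate cell content
    c_t = f * c_prev + i * g       # forget gate weights old memory, input gate weights the candidate
    h_t = o * torch.tanh(c_t)      # output gate weights what is exposed as h_t
    h_ref, c_ref = cell(x_t, (h_prev, c_prev))

manual_matches = (torch.allclose(h_t, h_ref, atol=1e-5)
                  and torch.allclose(c_t, c_ref, atol=1e-5))
print(last_step_matches, manual_matches)
```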

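My LSTM code is very simple, and its final results are clearly worse than Informer's. In the future I will most likely use Informer for forecasting, though I may also keep studying LSTM. If you want to take LSTM further, I think you could combine it with ResNet-style residual connections or add an attention mechanism; there are some papers on attention for LSTMs (but very few of them release source code).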
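As one possible starting point for the attention idea, here is a minimal sketch of attention pooling over the LSTM outputs: instead of keeping only the last time step, a learned score is computed for every step, normalized with softmax over time, and the weighted sum of hidden states goes to the linear head. The class name `AttnLSTM` and the single-linear-layer scoring function are hypothetical choices for illustration, not taken from any particular paper.

```python
import torch
from torch import nn


class AttnLSTM(nn.Module):
    """Hypothetical LSTM + attention-pooling regressor (illustration only)."""

    def __init__(self, input_size, hidden_size, num_layers, num_classes):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
        self.score = nn.Linear(hidden_size, 1)        # one scalar score per time step
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        outputs, _ = self.lstm(x)                                # (batch, seq_len, hidden)
        weights = torch.softmax(self.score(outputs), dim=1)      # (batch, seq_len, 1), sums to 1 over time
        context = (weights * outputs).sum(dim=1)                 # (batch, hidden)
        return self.fc(context), weights.squeeze(-1)


torch.manual_seed(0)
model = AttnLSTM(input_size=7, hidden_size=128, num_layers=2, num_classes=1)
x = torch.randn(4, 32, 7)
pred, attn = model(x)
print(pred.shape, attn.shape)  # torch.Size([4, 1]) torch.Size([4, 32])
```

Because this variant also returns the attention weights, a training loop would unpack it as `outputs, _ = model(batch_x)`.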

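I have spent a lot of time on the model part of my project, and I no longer want to keep working on this LSTM; I want to move the project on to the next step. So I am writing this article to summarize that effort. The model still does not do true forecasting: unlike Informer, it does not predict beyond the available data. I have seen open-source code that does this for single-input, single-output forecasting using a `tolist`-based approach, but I did not study it further, and I do not know whether something similar works for multi-input, single-output. I have only implemented multi-input, single-output, not multi-input, multi-output (several bloggers mention this in their paid content, but I do not know whether they actually implemented it; I did not pay for it).

I hope this article helps you. If it does, please consider following me, thanks!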
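On the multi-output question: one common way to get a multi-step or multi-target model without feeding predictions back in is the "direct" approach, where each input window is paired with a whole block of future values and the output layer is widened to match. A minimal NumPy sketch, assuming the same row layout as the article's data (time along axis 0, OT in the last column); `make_windows`, `horizon`, and `target_cols` are hypothetical names.

```python
import numpy as np


def make_windows(data, seq_length, horizon=1, target_cols=(-1,)):
    """
    Pair each input window with the next `horizon` steps of the chosen columns.
    x: (num_windows, seq_length, num_features)
    y: (num_windows, horizon, len(target_cols))
    horizon=1, target_cols=(-1,)   -> multi-input, single-output
    target_cols=all feature columns -> multi-input, multi-output
    """
    cols = list(target_cols)
    x, y = [], []
    for i in range(len(data) - seq_length - horizon + 1):
        x.append(data[i:i + seq_length, :])
        y.append(data[i + seq_length:i + seq_length + horizon, cols])
    return np.array(x), np.array(y)


data = np.arange(50, dtype=float).reshape(10, 5)   # 10 time steps, 5 features
x, y = make_windows(data, seq_length=4, horizon=3)
print(x.shape, y.shape)    # (4, 4, 5) (4, 3, 1)
x2, y2 = make_windows(data, seq_length=4, horizon=2, target_cols=range(5))
print(x2.shape, y2.shape)  # (5, 4, 5) (5, 2, 5)
```

To train on these targets, `num_classes` would become `horizon * len(target_cols)` and `y` would be flattened with `y.reshape(len(y), -1)` so it matches the `(batch, num_classes)` output of the linear layer.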

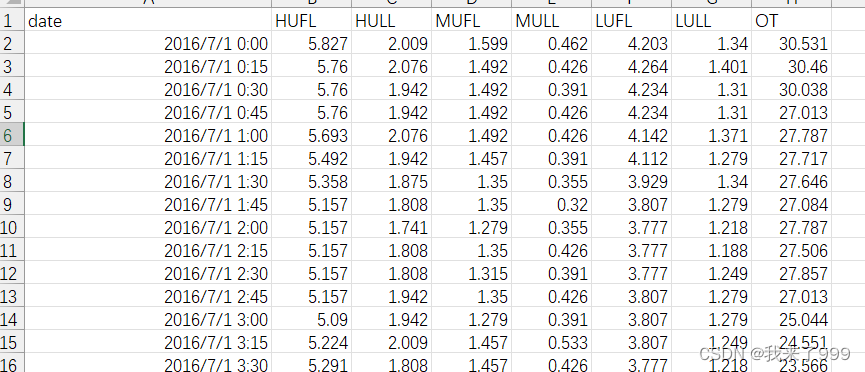

Dataset preview:

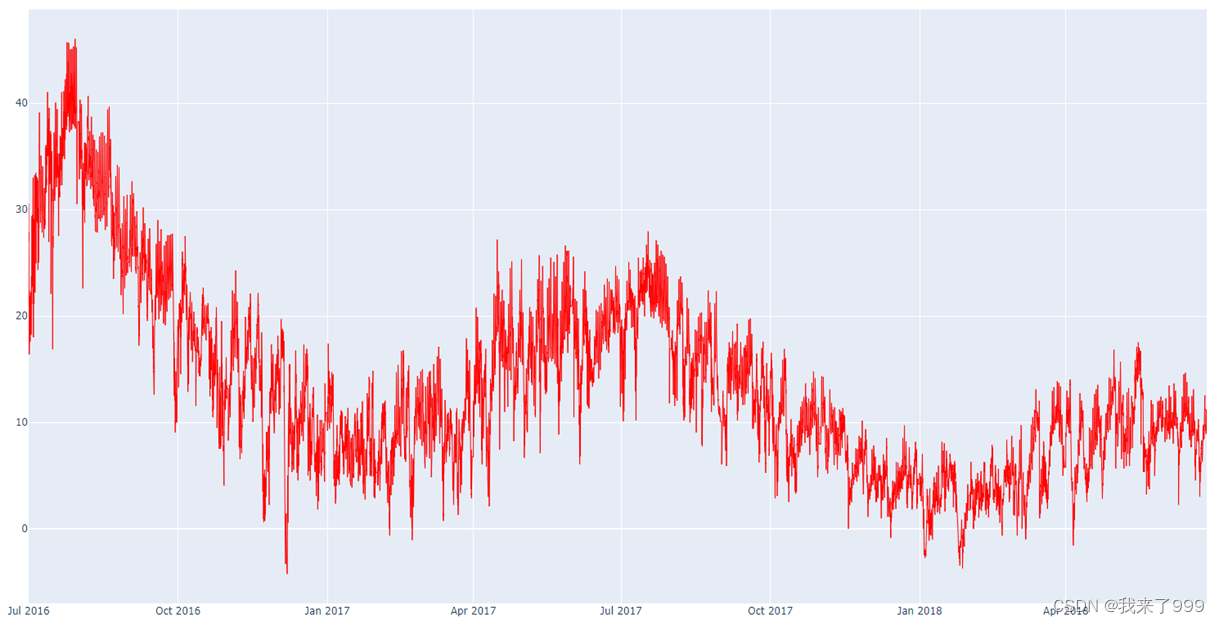

Plot of the OT column:

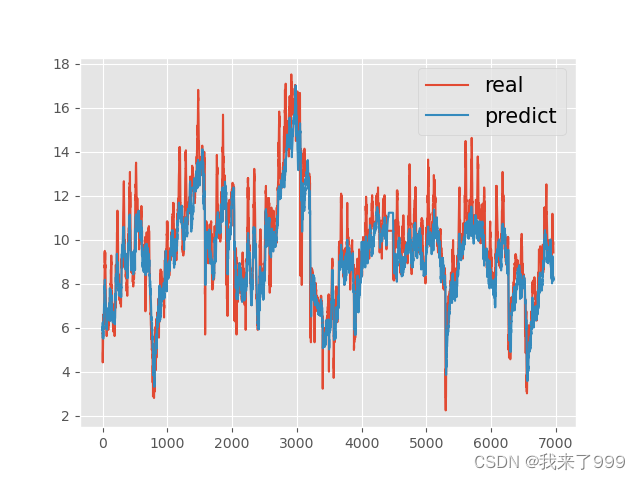

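Results:

The first figure shows the results on the entire training set.

The second figure shows the results on the part of the dataset I held out as the test set.

The complete code is below: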

```python
# Date: 2023/5/14 17:07

import os
import warnings

import numpy as np
import pandas as pd
import torch
import torch.utils.data as Data
import matplotlib.pyplot as plt
from torch import nn
from torch.autograd import Variable
from sklearn.preprocessing import MinMaxScaler, StandardScaler
from sklearn.metrics import mean_squared_error, mean_absolute_error
from tqdm import tqdm

# Work around the "duplicate OpenMP runtime" crash seen with some conda/MKL setups
os.environ['KMP_DUPLICATE_LIB_OK'] = 'TRUE'

# Note: this also hides PyTorch's shape-mismatch warning from MSELoss
warnings.filterwarnings("ignore")

torch.manual_seed(10)
plt.style.use('ggplot')
```

```python
class Config:
    data_path = './data/ETTm1.csv'
    seq_length = 32          # time steps per input window
    input_size = 7           # input features (all 7 columns, including OT)
    hidden_size = 128
    num_layers = 2
    num_classes = 1          # output dimension (predict OT only)
    learning_rate = 0.0001
    batch_size = 64
    n_iters = 100000         # total optimizer steps; converted to epochs later
    split_ratio = 0.8
    iter = 0
    t = 0
    model_train = True       # True: train; False: load the saved model and test it
    best_loss = 1000
    model_name = 'lstm'
    save_path = './LSTMModel/{}.pth'.format(model_name)


config = Config()
```

```python
def read_data(data_path):
    '''
    Read the raw CSV with 'date' as a DatetimeIndex.
    Returns all feature columns and the target column (the last column, OT).
    '''
    data = pd.read_csv(data_path, parse_dates=['date'], index_col=['date'])
    # Cast every column (HUFL, HULL, MUFL, MULL, LUFL, LULL, OT) to float
    data = data.astype(float)
    # Target = last column (OT, oil temperature)
    label = data.iloc[:, -1]
    return data, label
```

```python
def normalization(data, label):
    '''
    Standardize features and target (zero mean, unit variance).
    Returns the scaled arrays and the target scaler for inverse_transform.
    '''
    # mm_x = MinMaxScaler()   # alternative: scale to [0, 1]
    # mm_y = MinMaxScaler()
    mm_x = StandardScaler()
    mm_y = StandardScaler()
    # Caveat: fitting on the full series lets test-period statistics leak into training
    data = mm_x.fit_transform(data)                          # DataFrame -> ndarray (N, 7)
    label = mm_y.fit_transform(label.values.reshape(-1, 1))  # (N, 1)
    return data, label, mm_y
```
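Because the scalers above are fitted on the whole series before the train/test split, the test period's mean and variance leak into training. A minimal sketch of a leak-free variant, assuming a chronological split where the first `train_frac` of rows is training data; `fit_scalers_on_train` and `train_frac` are hypothetical names, and the split here is by rows rather than by windows.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler


def fit_scalers_on_train(data, train_frac=0.8):
    """
    data: (N, num_features) array with the target (OT) in the last column.
    Fit both scalers on the first train_frac of rows only, then transform everything.
    """
    n_train = int(len(data) * train_frac)
    mm_x = StandardScaler().fit(data[:n_train])
    mm_y = StandardScaler().fit(data[:n_train, -1:])  # 2-D slice keeps shape (n_train, 1)
    return mm_x.transform(data), mm_y


rng = np.random.default_rng(0)
raw = rng.normal(loc=10.0, scale=2.0, size=(1000, 7))   # synthetic stand-in data
scaled, mm_y = fit_scalers_on_train(raw)
print(scaled[:800].mean(axis=0).round(6))  # close to 0 on the training rows only
```

The test rows are transformed with the training statistics, so their mean and standard deviation will not be exactly 0 and 1, which is the point.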

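```python
def sliding_windows(data, seq_length):
    '''
    Build (input window, next-step target) pairs the LSTM can consume.
    x: (num_windows, seq_length, num_features)
    y: (num_windows,)  value of the last column (OT) right after each window
    '''
    x = []
    y = []
    # Every start index i whose next-step target exists: i + seq_length <= len(data) - 1
    for i in range(len(data) - seq_length):
        _x = data[i:(i + seq_length), :]   # seq_length consecutive rows, all features
        _y = data[i + seq_length, -1]      # OT value at the step right after the window
        x.append(_x)
        y.append(_y)
    x, y = np.array(x), np.array(y)        # convert the lists to NumPy arrays
    print('x.shape,y.shape:\n', x.shape, y.shape)
    return x, y
```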
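The loop builds one window at a time. A vectorized sketch with NumPy's `sliding_window_view` (NumPy 1.20 or later) builds the same kind of (window, next-step target) pairs without a Python loop, assuming the target is the last column at the step right after each window and keeping every window whose target exists (N - seq_length pairs for N rows). `sliding_windows_vec` is a hypothetical name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sliding_windows_vec(data, seq_length):
    """
    data: (N, num_features). Returns
    x: (N - seq_length, seq_length, num_features), y: (N - seq_length,)
    """
    # windows along axis 0: shape (N - seq_length + 1, num_features, seq_length)
    win = sliding_window_view(data, window_shape=seq_length, axis=0)
    x = win.transpose(0, 2, 1)[:-1]   # -> (..., seq_length, num_features); last window has no target
    y = data[seq_length:, -1]         # last column, one step after each window
    return x, y


data = np.arange(30, dtype=float).reshape(10, 3)
x, y = sliding_windows_vec(data, seq_length=4)
print(x.shape, y.shape)  # (6, 4, 3) (6,)
```

`x` is a read-only strided view; `torch.tensor(x, dtype=torch.float32)` copies it, so it can be passed on directly.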

```python
def data_split(x, y, split_ratio):
    '''
    Convert to torch tensors and split chronologically:
    first split_ratio of the windows -> train, last (0.9 - split_ratio) -> test
    (10% with split_ratio = 0.8); the windows in between are unused.
    '''
    train_size = int(len(y) * split_ratio)
    test_size = int(len(y) * (1 - split_ratio - 0.1))
    # y must be (N, 1) to match the model output; a (N,) target makes MSELoss
    # broadcast (B, 1) against (B,) into a (B, B) matrix
    y = np.asarray(y).reshape(-1, 1)
    # torch.autograd.Variable is deprecated; plain tensors support autograd
    x_data = torch.tensor(x, dtype=torch.float32)
    y_data = torch.tensor(y, dtype=torch.float32)
    x_train, y_train = x_data[:train_size], y_data[:train_size]
    x_test, y_test = x_data[-test_size:], y_data[-test_size:]
    print('x_train.shape,y_train.shape,x_test.shape,y_test.shape:\n',
          x_train.shape, y_train.shape, x_test.shape, y_test.shape)
    return x_data, y_data, x_train, y_train, x_test, y_test
```
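Why the target shape matters: the model returns `(batch_size, 1)`, and if the targets stay a 1-D `(batch_size,)` tensor, `nn.MSELoss` broadcasts the two into a `(batch_size, batch_size)` matrix and averages every prediction against every target. PyTorch emits a UserWarning for this, which `warnings.filterwarnings("ignore")` hides. A tiny check with made-up numbers:

```python
import torch
from torch import nn

criterion = nn.MSELoss()
pred = torch.tensor([[1.0], [2.0]])     # model-style output, shape (2, 1)
target_1d = torch.tensor([1.0, 2.0])    # shape (2,): broadcasts to (2, 2)
target_2d = target_1d.reshape(-1, 1)    # shape (2, 1): element-wise, as intended

wrong = criterion(pred, target_1d).item()   # mean of [[0, 1], [1, 0]]
right = criterion(pred, target_2d).item()   # perfect prediction
print(wrong, right)                          # 0.5 0.0
```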

```python
def data_generator(x_train, y_train, x_test, y_test, n_iters, batch_size):
    '''
    Wrap the tensors in DataLoaders and convert the iteration budget into epochs.
    '''
    # epochs = total optimizer steps / steps per epoch
    num_epochs = int(n_iters / (len(x_train) / batch_size))
    train_dataset = Data.TensorDataset(x_train, y_train)
    test_dataset = Data.TensorDataset(x_test, y_test)
    # Each window is a self-contained sample, so shuffling the training windows would also be valid
    train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                               batch_size=batch_size,
                                               shuffle=False)
    test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                              batch_size=batch_size,
                                              shuffle=False)
    return train_loader, test_loader, num_epochs
```

```python
data, label = read_data(config.data_path)
data, label, mm_y = normalization(data, label)
x, y = sliding_windows(data, config.seq_length)
x_data, y_data, x_train, y_train, x_test, y_test = data_split(x, y, config.split_ratio)
train_loader, test_loader, num_epochs = data_generator(x_train, y_train, x_test, y_test,
                                                       config.n_iters, config.batch_size)
```

```python
class LSTM2(nn.Module):

    def __init__(self, num_classes, input_size, hidden_size, num_layers, seq_length):
        '''
        Initialize the model by setting the number of layers and other parameters.
        '''
        super(LSTM2, self).__init__()
        self.num_classes = num_classes
        self.num_layers = num_layers
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.seq_length = seq_length
        self.lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size,
                            num_layers=num_layers, batch_first=True,
                            dropout=0.2, bidirectional=False)
        self.fc = nn.Linear(hidden_size, num_classes)

    def get_hidden(self, x):
        '''Zero initial states, shape (num_layers * num_directions, batch_size, hidden_size).'''
        # new_zeros puts the states on the same device and dtype as the input
        h_0 = x.new_zeros(self.num_layers, x.size(0), self.hidden_size)
        c_0 = x.new_zeros(self.num_layers, x.size(0), self.hidden_size)
        return (h_0, c_0)

    def forward(self, x):
        '''x: (batch_size, seq_length, input_size) -> (batch_size, num_classes)'''
        hidden = self.get_hidden(x)
        # outputs: (batch_size, seq_length, num_directions * hidden_size)
        outputs, hidden = self.lstm(x, hidden)
        # Keep only the last time step, then map hidden_size -> num_classes
        outputs = self.fc(outputs[:, -1, :])   # (batch_size, num_classes)
        return outputs
```
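Applying the linear head to every time step and then keeping `[:, -1, :]` gives the same result as applying it to the last step only, because `nn.Linear` acts on each time step independently; the last-step version simply skips `seq_length - 1` unneeded products and does not rely on `view(-1, seq_length, ...)`. A quick check with random stand-in tensors (sizes are arbitrary):

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size, seq_length, hidden_size, num_classes = 8, 32, 128, 1
outputs = torch.randn(batch_size, seq_length, hidden_size)  # stand-in for LSTM outputs
fc = nn.Linear(hidden_size, num_classes)

with torch.no_grad():
    # every step through fc, then keep the last step
    all_steps = fc(outputs.reshape(-1, hidden_size)).view(-1, seq_length, num_classes)[:, -1, :]
    # only the last step through fc
    last_only = fc(outputs[:, -1, :])

print(all_steps.shape, last_only.shape)  # torch.Size([8, 1]) torch.Size([8, 1])
same = torch.allclose(all_steps, last_only, atol=1e-6)
```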

```python
model = LSTM2(config.num_classes, config.input_size, config.hidden_size,
              config.num_layers, config.seq_length)
print(model)

criterion = torch.nn.MSELoss()    # mean-squared error for regression
optimizer = torch.optim.Adam(model.parameters(), lr=config.learning_rate)
```

```python
# Training
if config.model_train:
    t = config.t
    step = config.iter
    os.makedirs(os.path.dirname(config.save_path), exist_ok=True)  # make sure ./LSTMModel exists
    print('Start Training')
    for epoch in range(num_epochs):
        print('-----------training : {}/{} ----------'.format(t + 1, num_epochs))
        t += 1
        model.train()                      # re-enable dropout (model.eval() below turns it off)
        for i, (batch_x, batch_y) in enumerate(train_loader):
            outputs = model(batch_x)
            optimizer.zero_grad()          # clear the gradients
            loss = criterion(outputs, batch_y)
            loss.backward()                # backpropagation
            optimizer.step()
            step += 1
            if step % 100 == 0:
                print("iter: %d, loss: %1.5f" % (step, loss.item()))

        # Evaluate once per epoch and keep the checkpoint with the lowest test loss.
        # Note: selecting on the test set makes the final test score optimistic.
        model.eval()
        test_loss, n_samples = 0.0, 0
        with torch.no_grad():
            for batch_x, batch_y in tqdm(test_loader):
                y_test_pred = model(batch_x)
                # sample-weighted sum, so the result is the mean over the whole test set
                test_loss += criterion(y_test_pred, batch_y).item() * batch_x.size(0)
                n_samples += batch_x.size(0)
        test_loss /= n_samples
        if test_loss < config.best_loss:
            config.best_loss = test_loss
            torch.save(model.state_dict(), config.save_path)
    print('Finished Training')
else:
    print('Loading the saved model for testing:')
```
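Model selection should compare the loss over the whole evaluation set, not the loss of whichever batch happened to come last. A minimal sketch of a sample-weighted average; `evaluate` is a hypothetical helper and the toy data is random:

```python
import torch
from torch import nn
import torch.utils.data as Data


def evaluate(model, loader, criterion):
    """Mean loss over every sample in `loader` (criterion must use reduction='mean')."""
    model.eval()
    total, n = 0.0, 0
    with torch.no_grad():
        for batch_x, batch_y in loader:
            loss = criterion(model(batch_x), batch_y)
            total += loss.item() * batch_x.size(0)   # undo the per-batch mean
            n += batch_x.size(0)
    return total / n


torch.manual_seed(0)
x = torch.randn(100, 5)
y = torch.randn(100, 1)
model = nn.Linear(5, 1)
criterion = nn.MSELoss()
loader = Data.DataLoader(Data.TensorDataset(x, y), batch_size=32)  # last batch has 4 samples

val_loss = evaluate(model, loader, criterion)
with torch.no_grad():
    full_loss = criterion(model(x), y).item()
print(val_loss, full_loss)   # equal up to float rounding
```

Since `evaluate` switches the model to `eval()`, the next epoch's training loop needs `model.train()` again so dropout is active.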

```python
def result(x_data, y_data):
    model.load_state_dict(torch.load(config.save_path, map_location='cpu'))
    print('Model loaded successfully! Start testing:')
    model.eval()
    print('y_size:', y_data.shape)
    with torch.no_grad():
        train_predict = model(x_data)      # one forward pass over all windows
    print('output_size:', train_predict.shape)
    data_predict = train_predict.numpy()
    y_data_plot = y_data.numpy()
    print('y_size:', y_data_plot.shape)
    # -1 lets NumPy infer the length and 1 makes a single column: the (N, 1) layout
    # that the metrics, mm_y.inverse_transform, and the line plot all expect
    y_data_plot = np.reshape(y_data_plot, (-1, 1))
    print('y_data_plot.shape', y_data_plot.shape, 'data_predict.shape', data_predict.shape)
    # Metrics on the standardized scale
    print('MAE/RMSE')
    print(mean_absolute_error(y_data_plot, data_predict))
    print(np.sqrt(mean_squared_error(y_data_plot, data_predict)))
    # Back to original units for plotting
    data_predict = mm_y.inverse_transform(data_predict)
    y_data_plot = mm_y.inverse_transform(y_data_plot)
    plt.plot(y_data_plot)
    plt.plot(data_predict)
    plt.legend(('real', 'predict'), fontsize='15')
    # plt.savefig('plots/{}.png'.format(len(x_data)))  # save before show(), or the file is blank
    plt.show()


# result(x_data, y_data)
result(x_train, y_train)
result(x_test, y_test)
```
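The MAE/RMSE printed by `result` are on the standardized scale. To report them in the original units of OT, invert the scaling first; for a `StandardScaler`, errors in original units are exactly the standardized errors multiplied by the scaler's fitted standard deviation (`scale_`). A small sketch on synthetic values:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import mean_absolute_error, mean_squared_error

rng = np.random.default_rng(0)
target = rng.normal(loc=15.0, scale=8.0, size=(500, 1))   # synthetic values in original units
mm_y = StandardScaler().fit(target)

y_true_std = mm_y.transform(target)
y_pred_std = y_true_std + rng.normal(scale=0.1, size=y_true_std.shape)  # synthetic predictions

mae_std = mean_absolute_error(y_true_std, y_pred_std)
rmse_std = np.sqrt(mean_squared_error(y_true_std, y_pred_std))

y_true = mm_y.inverse_transform(y_true_std)
y_pred = mm_y.inverse_transform(y_pred_std)
mae = mean_absolute_error(y_true, y_pred)
rmse = np.sqrt(mean_squared_error(y_true, y_pred))

print('standardized MAE/RMSE:', mae_std, rmse_std)
print('original-unit MAE/RMSE:', mae, rmse)
```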

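My QQ: 1632401994

This code was copied out of my project files with some parts removed, so it may contain errors. You can download the full source from Baidu Netdisk and run it: link: https://pan.baidu.com/s/1Navs9PidgAsAtXYiLokmJw?pwd=lstm extraction code: lstm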
