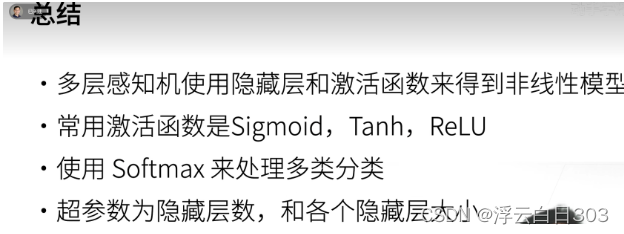

Multilayer Perceptron

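ReLU is there to supply non-linearity. Each hidden unit contributes one piecewise-linear "bend", and the weighted combination of many such units lets the network fit the curve you want more closely. Without an activation function, stacked linear layers collapse into a single linear map, so no amount of depth would capture curved (quadratic or higher-order) behaviour.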
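To make the role of the activation concrete, here is a minimal sketch. The tensor shapes and the names `X`, `W1`, `W2` are illustrative assumptions, not taken from the original post. It checks that two linear layers without an activation are equivalent to one linear layer, and that inserting ReLU breaks that equivalence.

```python
import torch

torch.manual_seed(0)

def relu(x):
    # ReLU keeps positive entries and replaces negative ones with 0: max(x, 0)
    return torch.max(x, torch.zeros_like(x))

# Illustrative shapes only (assumed for this sketch)
X = torch.randn(4, 3)
W1 = torch.randn(3, 5)
W2 = torch.randn(5, 2)

# No activation: (X W1) W2 is the same as X (W1 W2), i.e. a single linear layer
stacked_linear = (X @ W1) @ W2
single_linear = X @ (W1 @ W2)
collapses = torch.allclose(stacked_linear, single_linear, atol=1e-4)

# ReLU in between: the output is no longer that single linear map
with_relu = relu(X @ W1) @ W2
differs = not torch.allclose(with_relu, single_linear, atol=1e-4)

print(collapses, differs)
```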

Concise Implementation of the Multilayer Perceptron

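```python
## Multilayer perceptron: implementation from scratch
import torch
from torch import nn
from d2l import torch as d2l

# Load Fashion-MNIST as training and test iterators with the given batch size
batch = 256
train, test = d2l.load_data_fashion_mnist(batch)

# Perceptron with a single hidden layer
hide = 256    # number of hidden units
input = 784   # 28 x 28 flattened image (note: this name shadows the built-in input())
output = 10   # number of classes

# First-layer weights and bias
w1 = nn.Parameter(torch.randn(input, hide, requires_grad=True))
b1 = nn.Parameter(torch.zeros(hide, requires_grad=True))
w2 = nn.Parameter(torch.randn(
```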
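The listing above breaks off at the second weight matrix. The following is a hedged sketch of how a single-hidden-layer model of this kind could be completed; it is not the author's code. The shape of `w2`, the bias `b2`, the `0.01` scaling of the random weights, the `relu` and `net` helpers, and the random stand-in batch are all assumptions made for illustration. The names `num_inputs`, `num_hiddens`, and `num_outputs` correspond to `input`, `hide`, and `output` in the text.

```python
import torch
from torch import nn

# Sizes taken from the text: input=784, hide=256, output=10
num_inputs, num_hiddens, num_outputs = 784, 256, 10

# Assumed initialization: small random weights, zero biases
w1 = nn.Parameter(torch.randn(num_inputs, num_hiddens) * 0.01)
b1 = nn.Parameter(torch.zeros(num_hiddens))
w2 = nn.Parameter(torch.randn(num_hiddens, num_outputs) * 0.01)  # assumed shape
b2 = nn.Parameter(torch.zeros(num_outputs))                      # assumed
params = [w1, b1, w2, b2]

def relu(X):
    # Element-wise max(X, 0)
    return torch.max(X, torch.zeros_like(X))

def net(X):
    # Flatten each image to a 784-vector, apply hidden layer + ReLU, then output layer
    X = X.reshape(-1, num_inputs)
    H = relu(X @ w1 + b1)
    return H @ w2 + b2

# Random stand-in for one Fashion-MNIST batch (assumed, so the sketch needs no download)
X = torch.randn(8, 1, 28, 28)
y = torch.randint(0, num_outputs, (8,))

loss = nn.CrossEntropyLoss()
logits = net(X)
l = loss(logits, y)
l.backward()  # populates .grad on every parameter

print(logits.shape, l.item())
```

In an actual training run, `params` would be handed to an optimizer such as `torch.optim.SGD(params, lr=0.1)` (the learning rate here is likewise an assumption) and the batches would come from the `train` iterator.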

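This article shows how to implement a multilayer perceptron with a single hidden layer in PyTorch, focusing on how the ReLU activation function is applied, how the weights are initialized, and how the Sequential module can be used to build a concise network.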
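For the concise version, a minimal sketch using `nn.Sequential` might look like the following. The layer sizes follow the 784, 256, 10 values used in the text; the `init_weights` helper, the `std=0.01` value, and the random input batch are assumptions for illustration rather than the author's exact code.

```python
import torch
from torch import nn

# Flatten -> hidden linear layer -> ReLU -> output linear layer
net = nn.Sequential(
    nn.Flatten(),
    nn.Linear(784, 256),
    nn.ReLU(),
    nn.Linear(256, 10),
)

def init_weights(m):
    # Assumed initialization: normal weights with a small standard deviation
    if isinstance(m, nn.Linear):
        nn.init.normal_(m.weight, std=0.01)

net.apply(init_weights)

# Random stand-in for a batch of 28 x 28 grayscale images (assumed)
X = torch.randn(8, 1, 28, 28)
logits = net(X)
print(logits.shape)
```

Compared with the from-scratch version, the parameters, the flattening step, and the activation are all managed by built-in modules, so only the layer sizes need to be stated.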
