/*
 * alloc_pages(gfp_mask, order) requests 2^order contiguous page frames.
 */
#define alloc_pages(gfp_mask, order) \
        alloc_pages_node(numa_node_id(), gfp_mask, order)

#define numa_node_id() (cpu_to_node(raw_smp_processor_id()))

/* Returns the number of the node containing CPU 'cpu' */
static inline int cpu_to_node(int cpu)
{
    return cpu_2_node[cpu];
}

/*
 * Maps every CPU to the node it belongs to. __read_mostly is a kernel macro
 * that expands to a gcc section attribute; it is not a C keyword.
 */
int cpu_2_node[NR_CPUS] __read_mostly = { [0 ... NR_CPUS-1] = 0 };

/*
 * The page allocation function. It is fairly involved and touches many
 * other areas, process management in particular.
 */
static inline struct page *alloc_pages_node(int nid, gfp_t gfp_mask,
                                            unsigned int order)
{
    /*
     * Fail immediately if order >= MAX_ORDER. MAX_ORDER is the number of
     * block sizes the buddy allocator manages. Its value here is 11, so
     * valid orders run from 0 to 10 and the largest block is
     * 2^10 = 1024 pages, i.e. 4 MB with 4 KB pages. This limit comes up
     * repeatedly in the buddy algorithm.
     */
    if (unlikely(order >= MAX_ORDER))
        return NULL;

    /* Unknown node is current node */
    if (nid < 0)
        nid = numa_node_id();
    /*
     * numa_node_id() expands to cpu_to_node(raw_smp_processor_id()), and
     * cpu_to_node() simply returns cpu_2_node[cpu]. Every entry of
     * cpu_2_node is initialized to 0 with the GNU C range designator
     * [0 ... NR_CPUS-1], so the result here is 0 (assuming a single CPU
     * on a single node). Variables marked __read_mostly are grouped
     * together at link time, which is meant to improve access times on
     * multiprocessor systems.
     */

    /*
     * __alloc_pages() is the core of the buddy allocator. node_zonelists
     * is an array of struct zonelist, and gfp_zone() returns the index of
     * the zone the request prefers: ZONE_DMA (0), ZONE_NORMAL (1) or
     * ZONE_HIGHMEM (2) in this configuration. That index selects the
     * zonelist passed to __alloc_pages(), so gfp_mask decides which zone
     * is tried first: ZONE_DMA for 0, ZONE_NORMAL for 1, ZONE_HIGHMEM
     * for 2.
     *
     * NODE_DATA() is backed by the array below. MAX_NUMNODES is 1 here,
     * so only one node is defined:
     *
     *     struct pglist_data *node_data[MAX_NUMNODES] __read_mostly;
     */
    return __alloc_pages(gfp_mask, order,
        NODE_DATA(nid)->node_zonelists + gfp_zone(gfp_mask));
}

/*
 * This is the 'heart' of the zoned buddy allocator.
 */
struct page * fastcall __alloc_pages(gfp_t gfp_mask, unsigned int order,
                                     struct zonelist *zonelist)
{
    /* Nonzero if the kernel may block the current process while it waits for free page frames */
    const gfp_t wait = gfp_mask & __GFP_WAIT;
    /*
     * Why a double pointer? zonelist->zones is a NULL-terminated array of
     * struct zone pointers, so the cursor that walks it is a struct zone **.
     */
    struct zone **z;
    struct page *page;                  /* pointer to a page descriptor */
    /*
     * Used for page reclaim: current->reclaim_state points to one of these
     * when a task is running memory reclaim.
     */
    struct reclaim_state reclaim_state;
    struct task_struct *p = current;    /* p points to the current process */
    int do_retry;
    int alloc_flags;                    /* allocation flags */
    int did_some_progress;

    might_sleep_if(wait);   /* annotation: this function may sleep when wait is set */

    /*
     * should_fail_alloc_page() is the fault-injection hook. If it asks for
     * a failure, the allocation returns NULL at once; otherwise it proceeds.
     */
    if (should_fail_alloc_page(gfp_mask, order))
        return NULL;

restart:
    /* Start with z pointing at the first zone in the list */
    z = zonelist->zones;  /* the list of zones suitable for gfp_mask */

    /*
     * unlikely() hints to the compiler that the branch is rarely taken.
     * If the list holds no zone at all, return NULL; otherwise continue.
     */
    if (unlikely(*z == NULL)) {
        /* Should this ever happen?? */
        return NULL;
    }

    /* First attempt: take 2^order pages from the free lists, checked against the pages_low watermark */
    page = get_page_from_freelist(gfp_mask|__GFP_HARDWALL, order,
                zonelist, ALLOC_WMARK_LOW|ALLOC_CPUSET);
    /*
     * Prototype of get_page_from_freelist():
     * get_page_from_freelist(gfp_t gfp_mask, unsigned int order,
     *                        struct zonelist *zonelist, int alloc_flags)
     */
    if (page)
        goto got_pg;    /* the pages were obtained, so leave; otherwise carry on */

    /*
     * GFP_THISNODE (meaning __GFP_THISNODE, __GFP_NORETRY and
     * __GFP_NOWARN set) should not cause reclaim since the subsystem
     * (f.e. slab) using GFP_THISNODE may choose to trigger reclaim
     * using a larger set of nodes after it has established that the
     * allowed per node queues are empty and that nodes are
     * over allocated.
     *
     * #define GFP_THISNODE (__GFP_THISNODE | __GFP_NOWARN | __GFP_NORETRY)
     */
    /* On NUMA builds, a request with all GFP_THISNODE bits set jumps straight to nopage */
    if (NUMA_BUILD && (gfp_mask & GFP_THISNODE) == GFP_THISNODE)
        goto nopage;

    /* Wake kswapd for every zone in the list to start background reclaim (not analyzed further here) */
    for (z = zonelist->zones; *z; z++)
        wakeup_kswapd(*z, order);

/

1510 *

1511 * A zone is low on free memory, so wake its kswapd task to service it.

1512 *

1513 void wakeup_kswapd(struct zone *zone, int order)

1514 {

1515 pg_data_t *pgdat;

1516

1517 if (!populated_zone(zone)) /return !!(zone->present_pages) zone->present_pages是以页为单位的管理区的总大小,如果以页为单位的管理区的总大小为0,那么就直接结束退出/

1518 return;

1519

1520 pgdat = zone->zone_pgdat;

1521 if (zone_watermark_ok(zone, order, zone->pages_low, 0, 0))

1522 return;

1523 if (pgdat->kswapd_max_order < order)

1524 pgdat->kswapd_max_order = order;

1525 if (!cpuset_zone_allowed_hardwall(zone, GFP_KERNEL))

1526 return;

1527 if (!waitqueue_active(&pgdat->kswapd_wait))

1528 return;

1529 wake_up_interruptible(&pgdat->kswapd_wait);

1530 }

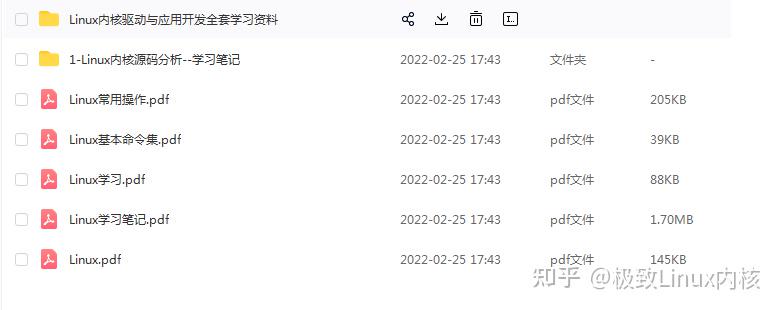

【文章福利】小编推荐自己的Linux内核技术交流群: 【977878001】整理一些个人觉得比较好得学习书籍、视频资料共享在群文件里面,有需要的可以自行添加哦!!!前100进群领取,额外赠送一份 价值699的内核资料包(含视频教程、电子书、实战项目及代码)

内核资料直通车:Linux内核源码技术学习路线+视频教程代码资料

学习直通车:Linux内核源码/内存调优/文件系统/进程管理/设备驱动/网络协议栈

/


    /*
     * OK, we're below the kswapd watermark and have kicked background
     * reclaim. Now things get more complex, so set up alloc_flags according
     * to how we want to proceed.
     *
     * The caller may dip into page reserves a bit more if the caller
     * cannot run direct reclaim, or if the caller has realtime scheduling
     * policy or is asking for __GFP_HIGH memory. GFP_ATOMIC requests will
     * set both ALLOC_HARDER (!wait) and ALLOC_HIGH (__GFP_HIGH).
     */
    alloc_flags = ALLOC_WMARK_MIN;
    /*
     * #define ALLOC_NO_WATERMARKS 0x01   // don't check watermarks at all
     * #define ALLOC_WMARK_MIN     0x02   // use pages_min watermark
     * #define ALLOC_WMARK_LOW     0x04   // use pages_low watermark
     * #define ALLOC_WMARK_HIGH    0x08   // use pages_high watermark
     * #define ALLOC_HARDER        0x10   // try to alloc harder
     * #define ALLOC_HIGH          0x20   // __GFP_HIGH set
     * #define ALLOC_CPUSET        0x40   // check for correct cpuset
     */
    if ((unlikely(rt_task(p)) && !in_interrupt()) || !wait)
        alloc_flags |= ALLOC_HARDER;
    if (gfp_mask & __GFP_HIGH)
        alloc_flags |= ALLOC_HIGH;
    if (wait)
        alloc_flags |= ALLOC_CPUSET;

    /*
     * Go through the zonelist again. Let __GFP_HIGH and allocations
     * coming from realtime tasks go deeper into reserves.
     *
     * This is the last chance, in general, before the goto nopage.
     * Ignore cpuset if GFP_ATOMIC (!wait) rather than fail alloc.
     * See also cpuset_zone_allowed() comment in kernel/cpuset.c.
     */
    /* kswapd has only just been woken; retry against the lower pages_min watermark with the flags set above */
    page = get_page_from_freelist(gfp_mask, order, zonelist, alloc_flags);
    if (page)
        goto got_pg;    /* allocation succeeded, return the pages */

    /* This allocation should allow future memory freeing. */


rebalance:
    /*
     * #define PF_MEMALLOC 0x00000800   // Allocating memory
     * TIF_MEMDIE = 16
     *
     * #define test_thread_flag(flag) \
     *         test_ti_thread_flag(current_thread_info(), flag)
     *
     * static inline int test_ti_thread_flag(struct thread_info *ti, int flag)
     * {
     *     return test_bit(flag, &ti->flags);
     * }
     */
    if (((p->flags & PF_MEMALLOC) || unlikely(test_thread_flag(TIF_MEMDIE)))
            && !in_interrupt()) {
        if (!(gfp_mask & __GFP_NOMEMALLOC)) {
nofail_alloc:
            /* go through the zonelist yet again, ignoring mins */
            page = get_page_from_freelist(gfp_mask, order,
                zonelist, ALLOC_NO_WATERMARKS);
            if (page)
                goto got_pg;
            if (gfp_mask & __GFP_NOFAIL) {
                congestion_wait(WRITE, HZ/50);
                goto nofail_alloc;
            }
        }
        goto nopage;
    }

    /* Atomic allocations - we can't balance anything */
    if (!wait)          /* an atomic request cannot sleep, so it fails here with no free page */
        goto nopage;

    cond_resched();


    /* We now go into synchronous reclaim: the caller reclaims pages itself */
    cpuset_memory_pressure_bump();
    p->flags |= PF_MEMALLOC;
    reclaim_state.reclaimed_slab = 0;
    p->reclaim_state = &reclaim_state;

    did_some_progress = try_to_free_pages(zonelist->zones, order, gfp_mask);

    p->reclaim_state = NULL;
    p->flags &= ~PF_MEMALLOC;

    cond_resched();

    if (likely(did_some_progress)) {
        page = get_page_from_freelist(gfp_mask, order,
                    zonelist, alloc_flags);
        if (page)
            goto got_pg;
    } else if ((gfp_mask & __GFP_FS) && !(gfp_mask & __GFP_NORETRY)) {
        /*
         * With __GFP_FS clear, the kernel may not perform operations that
         * depend on the filesystem. __GFP_NORETRY means the allocation is
         * attempted only once. This branch therefore runs only when
         * filesystem operations are allowed and retrying is permitted.
         */
        /*
         * Go through the zonelist yet one more time, keep
         * very high watermark here, this is only to catch
         * a parallel oom killing, we must fail if we're still
         * under heavy pressure.
         */
        page = get_page_from_freelist(gfp_mask|__GFP_HARDWALL, order,
                    zonelist, ALLOC_WMARK_HIGH|ALLOC_CPUSET);
        if (page)
            goto got_pg;

        /* The OOM killer will not help higher order allocs so fail */
        if (order > PAGE_ALLOC_COSTLY_ORDER)
            goto nopage;
        /*
         * PAGE_ALLOC_COSTLY_ORDER is the order at which allocations are deemed
         * costly to service. That is between allocation orders which should
         * coalesce naturally under reasonable reclaim pressure and those which
         * will not.
         *
         * #define PAGE_ALLOC_COSTLY_ORDER 3
         */
        out_of_memory(zonelist, gfp_mask, order);
        goto restart;
    }


    /*
     * Don't let big-order allocations loop unless the caller explicitly
     * requests that. Wait for some write requests to complete then retry.
     *
     * In this implementation, __GFP_REPEAT means __GFP_NOFAIL for order
     * <= 3, but that may not be true in other implementations.
     */
    do_retry = 0;
    if (!(gfp_mask & __GFP_NORETRY)) {
        if ((order <= PAGE_ALLOC_COSTLY_ORDER) ||
                    (gfp_mask & __GFP_REPEAT))
            do_retry = 1;
        if (gfp_mask & __GFP_NOFAIL)
            do_retry = 1;
    }
    if (do_retry) {
        congestion_wait(WRITE, HZ/50);
        goto rebalance;
    }

nopage:
    if (!(gfp_mask & __GFP_NOWARN) && printk_ratelimit()) {
        printk(KERN_WARNING "%s: page allocation failure."
            " order:%d, mode:0x%x\n",
            p->comm, order, gfp_mask);
        dump_stack();
        /*
         * The architecture-independent dump_stack generator:
         *
         * void dump_stack(void)
         * {
         *     unsigned long stack;
         *
         *     show_trace(current, NULL, &stack);
         * }
         *
         * void show_trace(struct task_struct *task, struct pt_regs *regs,
         *                 unsigned long *stack)
         * {
         *     show_trace_log_lvl(task, regs, stack, "");
         * }
         */
        show_mem();     /* no free pages: print a breakdown of memory usage */
    }
got_pg:
    return page;
}


EXPORT_SYMBOL(__alloc_pages);
