dash

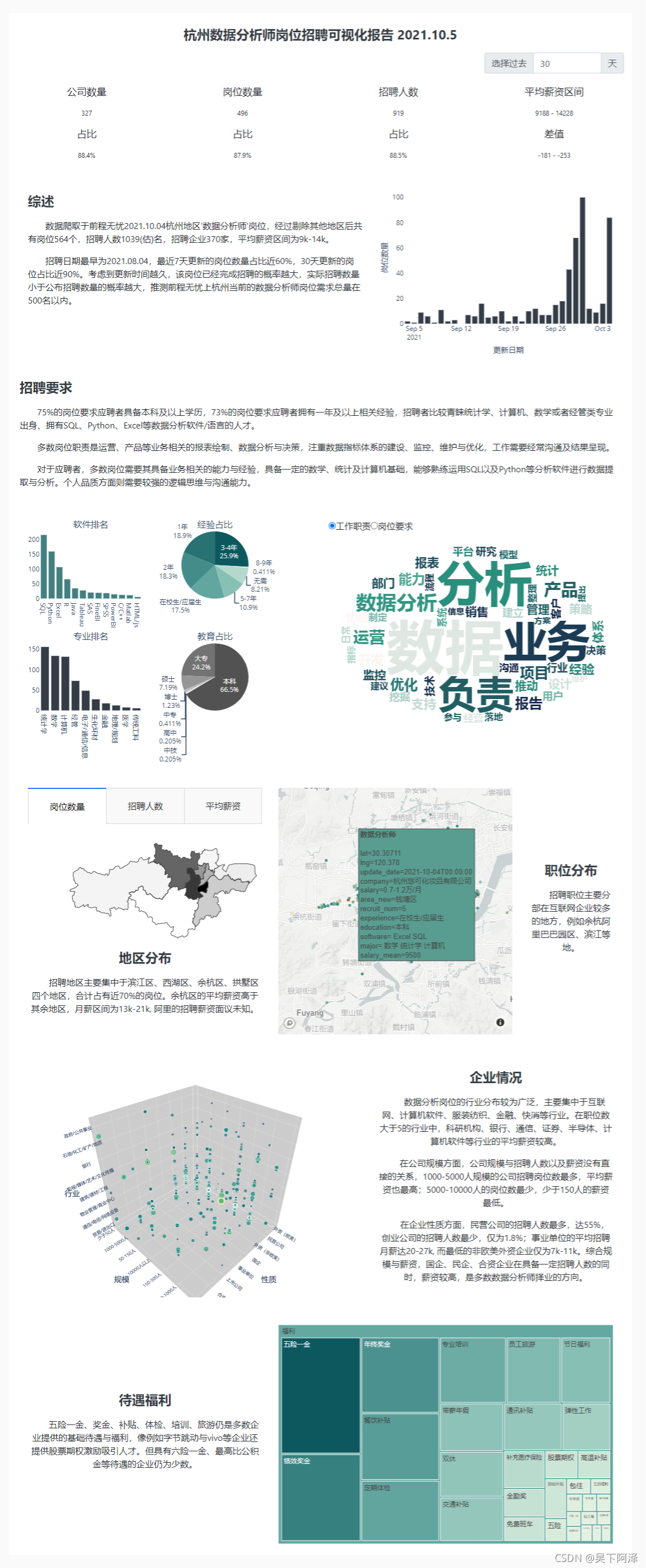

dash不同于flask或者Django,具备少量的前端知识即可制作可视化网页,下图为本人制作的数据分析师岗位招聘情况可视化网页。

文末附网盘下载经过清洗后的数据与可视化网页的代码。

交互

选择右上角的天数即可交互查看过去n天的招聘情况,如下图所示,选择查看过去30天的招聘数据,可见所有数据均发生了改变。过去30天招聘的公司数为招聘总数的88.4%,岗位数量87.9%,招聘人数88.5%, 平均薪资略有下降,直方图、词云图等有所变化。

除了天数,还可以对于一些细节进行交互,如下图切换工作职责与岗位要求词云图

切换各区的岗位数量、招聘人数、平均薪资三个不同指标对比

数据

数据爬取自10.4日前程无忧数据分析师职位,爬虫代码就不放了,没有太多的反爬措施,爬取岗位详情页时使用代理IP即可。

详细的步骤就不说明了,直接上代码吧

代码

import dash

from dash import html

from dash import dcc

import dash_bootstrap_components as dbc

from dash.dependencies import Input, Output

import dash

import plotly.express as px

import plotly.graph_objects as go

from plotly.subplots import make_subplots

import json

import matplotlib.pyplot as plt

from wordcloud import WordCloud

import base64

import pandas as pd

import numpy as np

from datetime import timedelta

import jieba

import os

import palettable.cmocean.sequential as pcs

from PIL import Image

plt.rcParams['font.sans-serif'] = ['SimHei']

plt.rcParams['axes.unicode_minus'] = False

data = pd.read_excel(r"data20211007.xlsx", engine='openpyxl').drop('Unnamed: 0', axis=1)

with open(r"杭州市.json", encoding='utf-8-sig') as f:

hangzhou_geo = json.load(f)

# 请替换自己的mapbox token,如果没有可以使用无需token的地图,请查看下文的job_map回调函数中的注释

token = 'pk.eyJ1I*******************************qPYHEMPJapWg'

app = dash.Dash(

__name__,

suppress_callback_exceptions=True

)

app.layout = html.Div([

html.Br(),

dbc.Container(

[

html.P('杭州数据分析师岗位招聘可视化报告 2021.10.5',

style={"font-size": "24px", 'text-align': 'center', "padding-top": "20px",

"color": "rgb(53, 60, 70)", "font-weight": "bold", }),

dbc.Row(

dbc.Col(

dbc.InputGroup([

dbc.InputGroupAddon("选择过去", addon_type="prepend"),

dbc.Input(id='last_days',

type="number",

value=(data['update_date'].max() - data['update_date'].min()).days,

min=1,

max=(data['update_date'].max() - data['update_date'].min()).days,

step=1,

),

dbc.InputGroupAddon("天", addon_type="append"),

]), width={'size': 3, 'offset': 9}

),),

# 指标:公司总数、岗位总数、招聘人员总数、平均薪资区间及其占比、变化

dbc.Row([

dbc.Col([

html.P('公司数量', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='company_num', style={"font-size": "12px", 'text-align': 'center'}),

html.P('占比', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='company_num_proportion', style={"font-size": "12px", 'text-align': 'center'})

], width=3

),

dbc.Col([

html.P('岗位数量', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='job_num', style={"font-size": "12px", 'text-align': 'center'}),

html.P('占比', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='job_num_proportion', style={"font-size": "12px", 'text-align': 'center'}),

], width=3

),

dbc.Col([

html.P('招聘人数', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='recruitment_num', style={"font-size": "12px", 'text-align': 'center'}),

html.P('占比', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='recruitment_num_proportion', style={"font-size": "12px", 'text-align': 'center'}),

], width=3

),

dbc.Col([

html.P('平均薪资区间', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='avg_salary_range', style={"font-size": "12px", 'text-align': 'center'}),

html.P('差值', style={"font-size": "18px", 'text-align': 'center'}),

html.P(id='avg_salary_range_change', style={"font-size": "12px", 'text-align': 'center'}),

], width=3

),

], style={"padding-top": "20px", "padding-bottom": "20px"}),

# 简述、日期频数图

dbc.Row([

dbc.Col([

html.P('综述', style={"font-size": "24px", "color": "rgb(53, 60, 70)", "font-weight": "bold"}),

dcc.Markdown(

"""

数据爬取于前程无忧2021.10.04杭州地区'数据分析师'岗位,经过剔除其他地区后共有岗位564个,招聘人数1039(估)名,招聘企业370家,平均薪资区间为9k-14k。

招聘日期最早为2021.08.04,最近7天更新的岗位数量占比近60%,30天更新的岗位占比近90%。考虑到更新时间越久,该岗位已经完成招聘的概率越大,实际招聘数量小于公布招聘数量的概率越大,推测前程无忧上杭州当前的数据分析师岗位需求总量在500名以内。

"""

)

], width=7

),

dbc.Col([

dcc.Graph(id='update_job_num'),

], width=5

),

], style={"padding-left": "20px", "padding-right": "20px",

"padding-top": "20px", "padding-bottom": "20px"}),

# 软件、专业、教育、经验要求、工作职责、岗位要求总结

dbc.Row([

html.P('招聘要求', style={"font-size": "24px", "color": "rgb(53, 60, 70)", "font-weight": "bold"}),

dcc.Markdown(

"""

75%的岗位要求应聘者具备本科及以上学历,73%的岗位要求应聘者拥有一年及以上相关经验,招聘者比较青睐统计学、计算机、数学或者经管类专业出身、拥有SQL、Python、Excel等数据分析软件/语言的人才。

多数岗位职责是运营、产品等业务相关的报表绘制、数据分析与决策,注重数据指标体系的建设、监控、维护与优化,工作需要经常沟通及结果呈现。

对于应聘者,多数岗位需要其具备业务相关的能力与经验,具备一定的数学、统计及计算机基础,能够熟练运用SQL以及Python等分析软件进行数据提取与分析。个人品质方面则需要较强的逻辑思维与沟通能力。

""")

], style={"padding-left": "20px", "padding-right": "20px",

"padding-top": "20px", "padding-bottom": "20px"}),

# 软件、专业、教育、经验要求、工作职责、岗位要求画图

dbc.Row([

dbc.Col([

dcc.Graph(id='software_major_edu_experience'),

], width=6),

dbc.Col([

dcc.RadioItems(

id="RadioItems_word_cloud",

options=[

{'label': '工作职责', 'value': 'duty'},

{'label': '岗位要求', 'value': 'requirement'},

],

value='duty',

labelStyle={'display': 'inline-block'}

),

html.Img(id='wordcloud'),

], width=6),

], style={"padding-left": "20px", "padding-right": "20px",

"padding-top": "20px", "padding-bottom": "20px"}),

# 区地图、职位分布散点地图

dbc.Row([

dbc.Col([

dcc.Tabs(id="tabs_map", value='tabs_job_num_map',

children=[

dcc.Tab(label='岗位数量', value='tabs_job_num_map'),

dcc.Tab(label='招聘人数', value='tabs_recruit_num_map'),

dcc.Tab(label='平均薪资', value='tabs_avg_salary_map'),

]),

dcc.Graph(id='district_map', style={"height": "50%"}),

html.P('地区分布', style={"font-size": "24px", "color": "rgb(53, 60, 70)", "font-weight": "bold",

"text-align": "center"}),

dcc.Markdown(

"""

招聘地区主要集中于滨江区、西湖区、余杭区、拱墅区四个地区,合计占有近70%的岗位。余杭区的平均薪资高于其余地区,月薪区间为13k-21k, 阿里的招聘薪资面议未知。

""", style={"display": "flex", "flex-direction": "column",

"justify-content": "center", "text-align": "center"})

], width=5),

dbc.Col([

dcc.Graph(id='job_map'),

], width=5),

dbc.Col([

html.P('职位分布', style={"font-size": "24px", "color": "rgb(53, 60, 70)", "font-weight": "bold"}),

dcc.Markdown("""

招聘职位主要分部在互联网企业较多的地方,例如余杭阿里巴巴园区、滨江等地。

""", )

], width=2, style={"display": "flex", "flex-direction": "column",

"justify-content": "center", "text-align": "center"}),

], style={"padding-left": "20px", "padding-right": "20px",

"padding-top": "20px", "padding-bottom": "20px"}),

# 企业情况

dbc.Row([

dbc.Col([

dcc.Graph(id='company'),

], width=7),

dbc.Col([

html.P('企业情况', style={"font-size": "24px", "color": "rgb(53, 60, 70)", "font-weight": "bold"}),

dcc.Markdown(

"""

数据分析岗位的行业分布较为广泛,主要集中于互联网、计算机软件、服装纺织、金融、快消等行业。在职位数大于5的行业中,科研机构、银行、通信、证券、半导体、计算机软件等行业的平均薪资较高。

在公司规模方面,公司规模与招聘人数以及薪资没有直接的关系,1000-5000人规模的公司招聘岗位数最多,平均薪资也最高;5000-10000人的岗位数最少,少于150人的薪资最低。

在企业性质方面,民营公司的招聘人数最多,达55%,创业公司的招聘人数最少,仅为1.8%;事业单位的平均招聘月薪达20-27k, 而最低的非欧美外资企业仅为7k-11k。综合规模与薪资,国企、民企、合资企业在具备一定招聘人数的同时,薪资较高,是多数数据分析师择业的方向。

"""

)

], width=5, style={"display": "flex", "flex-direction": "column",

"justify-content": "center", "text-align": "center"}),

], style={"padding-left": "20px", "padding-right": "20px",

"padding-top": "20px", "padding-bottom": "20px"}),

# 员工待遇与福利

dbc.Row([

dbc.Col([

html.P(

"待遇福利", style={"font-size": "24px", "color": "rgb(53, 60, 70)", "font-weight": "bold"}

),

dcc.Markdown(

"""

五险一金、奖金、补贴、体检、培训、旅游仍是多数企业提供的基础待遇与福利,像例如字节跳动与vivo等企业还提供股票期权激励吸引人才。但具有六险一金、最高比公积金等待遇的企业仍为少数。

"""

)

], width=5, style={"display": "flex", "flex-direction": "column",

"justify-content": "center", "text-align": "center"}),

dbc.Col([

dcc.Graph(id='welfare'),

], width=7),

], style={"padding-left": "20px", "padding-right": "20px",

"padding-top": "20px", "padding-bottom": "20px"}),

], style={'background-color': 'rgb(255,255,255)', 'border-radius': '4px'}

),

html.Br(),

], style={'background-color': 'rgb(250,250,250)'}

)

@app.callback([

Output('company_num', 'children'),

Output('job_num', 'children'),

Output('recruitment_num', 'children'),

Output('avg_salary_range', 'children'),

Output('company_num_proportion', 'children'),

Output('job_num_proportion', 'children'),

Output('recruitment_num_proportion', 'children'),

Output('avg_salary_range_change', 'children')

],

Input('last_days', 'value'))

def indicator(selected_day):

"""

指标回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

company_num = filtered_data.company.nunique()

job_num = filtered_data.shape[0]

recruit_num = filtered_data.recruit_num_new.sum()

avg_salary_low = (filtered_data.salary_low * filtered_data.recruit_num_new).sum() / filtered_data.recruit_num_new.sum()

avg_salary_high = (filtered_data.salary_high * filtered_data.recruit_num_new).sum() / filtered_data.recruit_num_new.sum()

avg_salary_range = str(int(round(avg_salary_low))) + ' - ' + str(int(round(avg_salary_high)))

company_total_num = data.company.nunique()

company_num_proportion = str(round(company_num / company_total_num, 3)*100) + '%'

job_total_num = data.shape[0]

job_num_proportion = str(round(job_num / job_total_num, 3)*100) + '%'

recruit_total_num = data.recruit_num_new.sum()

recruit_num_proportion = str(round(recruit_num / recruit_total_num, 3)*100) + '%'

avg_salary_total_low = (data.salary_low * data.recruit_num_new).sum() / data.recruit_num_new.sum()

avg_salary_total_high = (data.salary_high * data.recruit_num_new).sum() / data.recruit_num_new.sum()

avg_salary_low_diff = avg_salary_low - avg_salary_total_low

avg_salary_high_diff = avg_salary_high - avg_salary_total_high

if avg_salary_low_diff > 0:

avg_salary_low_diff = '+' + str(int(round(avg_salary_low_diff)))

elif avg_salary_low_diff == 0:

avg_salary_low_diff = '0'

else:

avg_salary_low_diff = str(int(round(avg_salary_low_diff)))

if avg_salary_high_diff > 0:

avg_salary_high_diff = '+' + str(int(round(avg_salary_high_diff)))

elif avg_salary_high_diff == 0:

avg_salary_high_diff = '0'

else:

avg_salary_high_diff = str(int(round(avg_salary_high_diff)))

avg_salary_range_change = avg_salary_low_diff + ' - ' + avg_salary_high_diff

return company_num, job_num, recruit_num, avg_salary_range, company_num_proportion, job_num_proportion, \

recruit_num_proportion, avg_salary_range_change

@app.callback(

Output('update_job_num', 'figure'),

Input('last_days', 'value'))

def update_num_graph(selected_day):

"""

日期岗位频数图回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

date_count = filtered_data.groupby("update_date").update_date.count()

fig = go.Figure()

fig.add_trace(go.Bar(

x=date_count.index,

y=date_count.values,

name='更新日期',

marker=dict(

color='rgb(53, 60, 70)',

)

))

fig.update_xaxes(title_text="更新日期",

showgrid=False,

showline=False,

zeroline=False,

)

fig.update_yaxes(title_text="岗位数量",

showgrid=False,

showline=False,

zeroline=False,

)

fig.update_layout(

height=300,

margin=dict(l=0, r=0, t=0, b=0),

plot_bgcolor='white',

coloraxis_showscale=False

)

return fig

def district_map(df_area, color):

"""

choropleth_mapbox地图绘制函数

"""

fig = px.choropleth_mapbox(

df_area,

geojson=hangzhou_geo,

color=color,

locations="区",

featureidkey="properties.name",

mapbox_style="white-bg",

color_continuous_scale='greys',

center={"lat": 30.2, "lon": 119.6},

zoom=7,

height=300,

hover_data=df_area.columns,

)

fig.update_layout(

margin={"r": 0, "t": 0, "l": 0, "b": 0},

coloraxis_showscale=False,

)

return fig

@app.callback(

Output('district_map', 'figure'),

[Input('last_days', 'value'),

Input('tabs_map', 'value')])

def tab_district_map(selected_day, tab):

"""

区地区tab回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

df_area = (

filtered_data

.assign(salary_total_low=lambda x: x['salary_low'] * x['recruit_num_new'])

.assign(salary_total_high=lambda x: x['salary_high'] * x['recruit_num_new'])

.groupby('area_new')

.agg({

'recruit_num_new': ['count', 'sum'],

'salary_total_low': 'sum',

'salary_total_high': 'sum',

})

.assign(salary_low=lambda x: x[('salary_total_low', 'sum')] / x[('recruit_num_new', 'sum')])

.assign(salary_high=lambda x: x[('salary_total_high', 'sum')] / x[('recruit_num_new', 'sum')])

.assign(salary_mean=lambda x: (x['salary_high'] + x['salary_low']) / 2)

.reset_index()

)

df_area.columns = ['区', '职位数', '招聘人数', 'salary_total_low', 'salary_total_high', 'salary_low',

'salary_high', 'salary_mean']

df_area.drop(['salary_total_high', 'salary_total_low'], axis=1, inplace=True)

df_area[['招聘人数', 'salary_low', 'salary_high', 'salary_mean']] = df_area[['招聘人数', 'salary_low', 'salary_high',

'salary_mean']].round().astype("int")

if tab == 'tabs_job_num_map':

color = '职位数'

elif tab == 'tabs_recruit_num_map':

color = '招聘人数'

elif tab == 'tabs_avg_salary_map':

color = 'salary_mean'

fig = district_map(df_area, color)

return fig

@app.callback(

Output('job_map', 'figure'),

Input('last_days', 'value'))

def job_map(selected_day):

"""

工作分布散点地图回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

fig = px.scatter_mapbox(filtered_data,

lat='lat',

lon='lng',

hover_name='name',

hover_data=['update_date', 'company', 'salary', 'area_new', 'recruit_num', 'experience',

'education', 'software', 'major'],

color='salary_mean',

color_continuous_scale='geyser',

zoom=9)

fig.update_layout(mapbox_style="light",

# mapbox_style="open-street-map" # 没有注册mapbox的话,可以使用该地图类型

mapbox_accesstoken=token,

coloraxis_showscale=False,

margin={'r': 0, 't': 0, 'l': 0, 'b': 0}

)

return fig

@app.callback(

Output('software_major_edu_experience', 'figure'),

Input('last_days', 'value'))

def software_major_edu_experience_graph(selected_day):

"""

招聘需求回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

majors = ['数学', '统计学', '计算机', '电子/通信/信息', '金融', '经管', '地理/规划', '传统工科', '生化环材', '医学']

data_major_count = filtered_data[majors].sum().sort_values(ascending=False)

software_list = ['C/C++', 'Excel', 'HTML/js', 'Java', 'Matlab', 'SQL', 'PowerBI', 'Python', 'R', 'SAS', 'SPSS',

'Tableau', 'FineBI']

data_software_count = filtered_data[software_list].sum().sort_values(ascending=False)

specs = [[{'type': 'xy'}, {'type': 'domain'}], [{'type': 'xy'}, {'type': 'domain'}]]

fig = make_subplots(rows=2, cols=2, specs=specs, subplot_titles=('软件排名', '经验占比', '专业排名', '教育占比'))

fig.add_trace(

go.Bar(

x=data_software_count.index,

y=data_software_count.values,

marker=dict(

color='rgb(67, 127, 127)'

)

), 1, 1)

fig.add_trace(

go.Bar(

x=data_major_count.index,

y=data_major_count.values,

marker=dict(

color='rgb(53, 60, 70)'

)

), 2, 1)

fig.add_trace(

go.Pie(

labels=[i.replace("经验", "") for i in filtered_data.experience.value_counts().index],

values=filtered_data.experience.value_counts().tolist(),

textinfo='percent+label',

marker=dict(colors=px.colors.sequential.Mint[::-1]),

name='经验', ), 1, 2)

fig.add_trace(

go.Pie(

labels=filtered_data.education.value_counts().index,

values=filtered_data.education.value_counts().tolist(),

textinfo='percent+label',

marker=dict(colors=px.colors.sequential.Greys[-3::-1]),

name='教育', ), 2, 2)

fig.update_xaxes(

showgrid=False,

showline=False,

zeroline=False,

)

fig.update_yaxes(

showgrid=False,

showline=False,

zeroline=False,

)

fig.update_layout(

showlegend=False,

plot_bgcolor='white',

margin=dict(r=0, b=0, l=0, t=20),

)

return fig

@app.callback(

Output('company', 'figure'),

Input('last_days', 'value'))

def company(selected_day):

"""

企业情况3D散点图回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

fig = px.scatter_3d(filtered_data.loc[filtered_data.salary_mean.notnull()],

x='company_type', y='company_size', z='company_industry',

size='salary_mean', color='salary_mean',

color_continuous_scale='aggrnyl',

hover_data=['update_date', 'company', 'salary', 'area_new', 'recruit_num', 'experience',

'education', 'software', 'major'],

)

fig.update_layout(

coloraxis_showscale=False,

margin=dict(r=10, b=10, l=10, t=10),

scene=dict(

xaxis=dict(title='性质', tickfont=dict(size=10), backgroundcolor="rgb(204, 204, 204)", gridwidth=0.5),

yaxis=dict(title='规模', tickfont=dict(size=10), backgroundcolor="rgb(204, 204, 204)", gridwidth=0.5),

zaxis=dict(title='行业', tickfont=dict(size=10), backgroundcolor="rgb(204, 204, 204)", gridwidth=0.5)

),

)

return fig

@app.callback(

Output('welfare', 'figure'),

Input('last_days', 'value'))

def welfare(selected_day):

"""

工作福利词云图回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

welfare_text = ' '.join(filtered_data.welfare.dropna().tolist())

welfare_text = (

welfare_text

.replace("包住宿", "包住")

.replace("国定双休", "双休")

.replace("周末双休", "双休")

.replace("做五休二", "双休")

.replace("缴纳五险", "五险")

)

df_welfare = pd.DataFrame(welfare_text.split(" ") )[0].value_counts().to_frame().reset_index()

df_welfare.columns = ['福利', 'num']

fig = px.treemap(df_welfare.query("num>=5"),

path=[px.Constant("福利"), '福利'], values='num',

color='num',

color_continuous_scale='Mint',

)

fig.update_layout(

plot_bgcolor='white',

height=400,

margin=dict(l=0, r=0, t=0, b=0),

coloraxis_showscale=False

)

return fig

def word_cloud(selected_day, filtered_data, feature):

"""

分词以及制作词云图,制作词云图后保存至本地图片,然后加载为背景图片,不可交互。

"""

path = '.\词云图文件夹'

if not os.path.exists(path):

os.makedirs(path)

image_src = '.\词云图文件夹\{}词云图{}.png'.format(feature, selected_day)

if not os.path.exists(image_src):

text = ''.join(filtered_data.loc[filtered_data[feature].notnull()][feature].tolist())

jieba.suggest_freq('机器学习', True)

jieba.suggest_freq('统计分析', True)

stop_words_list = []

for filename in os.listdir(r"./stopwords"):

with open(r"./stopwords/{}".format(filename), encoding='utf-8') as f:

stop_words = f.read()

stop_words_list = stop_words_list + stop_words.split('\n')

words = jieba.cut(text)

words_list = []

for word in words:

if word in stop_words_list:

continue

else:

words_list.append(word)

top100words = pd.DataFrame(words_list)[0].value_counts().head(50)

top100words_dict = {k: v for k, v in zip(top100words.index, top100words.tolist())}

mask = np.array(Image.open(r'data.png'))

wc = WordCloud(

font_path='C:/Windows/Fonts/msyhbd.ttc', # 显示中文需要加载本地中文字体

background_color='white',

colormap=pcs.Tempo_3.mpl_colormap,

mask=mask,

width=540,

height=360)

wc.generate_from_frequencies(top100words_dict)

wc.to_file(image_src)

encoded_image = base64.b64encode(open(image_src, 'rb').read())

src = 'data:image/png;base64,{}'.format(encoded_image.decode())

# 使用px.imshow绘图,在网页端交互后会报错但无碍输出

# image = Image.open(image_src)

# fig = px.imshow(image)

# 使用ploty scatter绘制,词之间会遮挡

# from wordcloud import WordCloud, STOPWORDS

# wc = WordCloud(stopwords=set(STOPWORDS),

# max_words=200,

# max_font_size=100)

# wc.generate_from_frequencies(top100words_dict)

#

# word_list = []

# freq_list = []

# fontsize_list = []

# position_list = []

# orientation_list = []

# color_list = []

#

# for (word, freq), fontsize, position, orientation, color in wc.layout_:

# word_list.append(word)

# freq_list.append(freq)

# fontsize_list.append(fontsize)

# position_list.append(position)

# orientation_list.append(orientation)

# color_list.append(color)

#

# # get the positions

# x = []

# y = []

# for i in position_list:

# x.append(i[0])

# y.append(i[1])

#

# # get the relative occurence frequencies

# new_freq_list = []

# for i in freq_list:

# new_freq_list.append(i * 100)

#

# trace = go.Scatter(x=x,

# y=y,

# textfont=dict(size=new_freq_list,

# color=color_list),

# hoverinfo='text',

# hovertext=['{0}{1}'.format(w, f) for w, f in zip(word_list, freq_list)],

# mode='text',

# text=word_list

# )

#

# layout = go.Layout({'xaxis': {'showgrid': False, 'showticklabels': False, 'zeroline': False},

# 'yaxis': {'showgrid': False, 'showticklabels': False, 'zeroline': False}})

#

# fig = go.Figure(data=[trace], layout=layout)

return src

@app.callback(

Output('wordcloud', 'src'),

[Input('last_days', 'value'),

Input('RadioItems_word_cloud', 'value')])

def radioitems_wordcloud(selected_day, item):

"""

词云图回调函数

"""

last_n_days = data.update_date.max() + timedelta(days=-selected_day)

filtered_data = data.loc[data.update_date >= last_n_days]

if item == 'duty':

feature = 'duty'

elif item == 'requirement':

feature = 'requirement'

src = word_cloud(selected_day, filtered_data, feature)

return src

if __name__ == '__main__':

app.run_server(debug=True, port=3000)

有一些需要注意的地方,dash是基于ploty开发的,ploty散点地图加载比较慢,而且有时不一定能够加载出来。所以有精力的朋友可以尝试使用echarts,具体可以参考https://www.cnblogs.com/feffery/p/14386458.html。

数据集与代码下载:

链接:https://pan.baidu.com/s/1pJBR6f7vj5te3ymoNvHYQQ

提取码:ukeh

码字不易,若对您有所帮助,望能关注收藏点赞,谢谢!

454

454

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?