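On April 25, 2025, GPUStack released v0.6. It ships built-in MindIE inference for the Ascend 910B (910B1 through 910B4) and 310P3 chips and adds support for the 310P. That caught my interest, so I set out right away to try it.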

Official documentation: https://docs.gpustack.ai/latest/installation/ascend-cann/online-installation/

Models currently supported by GPUStack's Ascend MindIE inference engine: https://www.hiascend.com/document/detail/zh/mindie/100/whatismindie/mindie_what_0003.html

Deploying GPUStack

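For the full setup, see my earlier post: Deploying the cluster management software GPUStack on Kunpeng + Ascend: building a two-node cluster from two servers [hands-on, detailed pitfalls edition]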

Create and start the container:

```shell
docker run -d --name gpustack \
  --restart=unless-stopped \
  --device /dev/davinci0 \
  --device /dev/davinci1 \
  --device /dev/davinci_manager \
  --device /dev/devmm_svm \
  --device /dev/hisi_hdc \
  -v /usr/local/dcmi:/usr/local/dcmi \
  -v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \
  -v /usr/local/Ascend/driver/lib64/:/usr/local/Ascend/driver/lib64/ \
  -v /usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info \
  -v /etc/ascend_install.info:/etc/ascend_install.info \
  --network=host \
  --ipc=host \
  -v gpustack-data:/var/lib/gpustack \
  gpustack/gpustack:latest-npu-310p
```
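The command above hardcodes two chips, `/dev/davinci0` and `/dev/davinci1`. On a host with a different number of 310P chips, the per-chip `--device` flags can be generated instead. The sketch below is a hypothetical helper (`npu_device_flags` is not part of GPUStack or Docker); it assumes the chip nodes follow the `/dev/davinciN` naming used in the command and leaves `davinci_manager`, `devmm_svm` and `hisi_hdc` to be mapped explicitly.

```shell
# Hypothetical helper (not part of GPUStack or Docker): print one "--device" flag
# per numbered NPU chip node found under the given directory (default: /dev).
# Only davinci0, davinci1, ... match the glob; davinci_manager is NOT included,
# so it still needs its own explicit --device line.
npu_device_flags() {
  local dev_dir="${1:-/dev}"
  local node
  for node in "$dev_dir"/davinci[0-9]*; do
    [ -e "$node" ] || continue   # the glob matched nothing: print no flags
    printf -- '--device %s ' "$node"
  done
}
```

For example, the two `--device /dev/davinciN` lines could be replaced with `$(npu_device_flags)` in the `docker run` command. Running `npu-smi info` on the host first shows which chips the driver actually sees.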

Deploying DeepSeek-R1

(1) After logging in, go to Models, search for "deepseek", select the deepseek-ai/DeepSeek-R1-Distill-Qwen-7B model, set the backend to Ascend MindIE, and click Save.

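Once the download finished, the model failed at startup. After some digging, I found that the bundled Ascend MindIE is version 1.0.0, which has not yet been adapted for DeepSeek-R1!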

Here is the error log:

```text
2025-04-27 06:42:05,038 [ERROR] model.py:39 - [Model] >>> Exception:call aclnnInplaceZero failed, detail:EZ9999: Inner Error!
EZ9999: [PID: 43453] 2025-04-27-06:42:05.032.406 Parse dynamic kernel config fail.
TraceBack (most recent call last):
AclOpKernelInit failed opType
ZerosLike ADD_TO_LAUNCHER_LIST_AICORE failed.
[ERROR] 2025-04-27-06:42:05 (PID:43453, Device:0, RankID:-1) ERR01100 OPS call acl api failed
Traceback (most recent call last):
  File "/usr/local/lib/python3.11/dist-packages/model_wrapper/model.py", line 37, in initialize
    return self.python_model.initialize(config)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/dist-packages/model_wrapper/standard_model.py", line 146, in initialize
    self.generator = Generator(
                     ^^^^^^^^^^
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/text_generator/generator.py", line 119, in __init__
    self.warm_up(max_prefill_tokens, max_seq_len, max_input_len, max_iter_times, inference_mode)
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/text_generator/generator.py", line 303, in warm_up
    raise e
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/text_generator/generator.py", line 296, in warm_up
    self._generate_inputs_warm_up_backend(input_metadata, inference_mode, dummy=True)
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/text_generator/generator.py", line 378, in _generate_inputs_warm_up_backend
    self.generator_backend.warm_up(model_inputs, inference_mode=inference_mode)
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/text_generator/adapter/generator_torch.py", line 198, in warm_up
    super().warm_up(model_inputs)
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/text_generator/adapter/generator_backend.py", line 170, in warm_up
    _ = self.forward(model_inputs, **kwargs)
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/utils/decorators/time_decorator.py", line 38, in wrapper
    return func(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/text_generator/adapter/generator_torch.py", line 153, in forward
    logits = self.model_wrapper.forward(model_inputs, self.cache_pool.npu_cache, **kwargs)
             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/modeling/model_wrapper/atb/atb_model_wrapper.py", line 89, in forward
    logits = self.forward_tensor(
             ^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/lib/python3.11/dist-packages/mindie_llm/modeling/model_wrapper/atb/atb_model_wrapper.py", line 116, in forward_tensor
    logits = self.model_runner.forward(
             ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/Ascend/atb-models/atb_llm/runner/model_runner.py", line 193, in forward
    return self.model.forward(**kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/Ascend/atb-models/atb_llm/models/base/flash_causal_lm.py", line 452, in forward
    self.init_ascend_weight()
  File "/usr/local/Ascend/atb-models/atb_llm/models/qwen2/flash_causal_qwen2.py", line 150, in init_ascend_weight
    weight_wrapper = self.get_weights()
                     ^^^^^^^^^^^^^^^^^^
  File "/usr/local/Ascend/atb-models/atb_llm/models/qwen2/flash_causal_qwen2.py", line 132, in get_weights
    weight_wrapper = WeightWrapper(self.soc_info, self.tp_rank, attn_wrapper, mlp_wrapper)
                     ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/usr/local/Ascend/atb-models/atb_llm/utils/data/weight_wrapper.py", line 49, in __init__
    self.placeholder = torch.zeros(1, dtype=torch.float16, device="npu")
                       ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
RuntimeError: call aclnnInplaceZero failed, detail:EZ9999: Inner Error!
EZ9999: [PID: 43453] 2025-04-27-06:42:05.032.406 Parse dynamic kernel config fail.
TraceBack (most recent call last):
AclOpKernelInit failed opType
ZerosLike ADD_TO_LAUNCHER_LIST_AICORE failed.
[ERROR] 2025-04-27-06:42:05 (PID:43453, Device:0, RankID:-1) ERR01100 OPS call acl api failed
2025-04-27 06:42:05,042 [ERROR] model.py:42 - [Model] >>> return initialize error result: {'status': 'error', 'npuBlockNum': '0', 'cpuBlockNum': '0'}
[2025-04-27 06:42:05.146668+00:00] [43225] [43226] [server] [WARN] [llm_daemon.cpp:64] : [Daemon] received exit signal[17]
[2025-04-27 06:42:05.146771+00:00] [43225] [43226] [server] [INFO] [llm_daemon.cpp:69] : Daemon wait pid with 43453, status 9
[2025-04-27 06:42:05.146776+00:00] [43225] [43226] [server] [ERROR] [llm_daemon.cpp:74] : ERR: Daemon wait pid with 43453 exit, Please check the service log or python log.
[ERROR] TBE(43892,python3):2025-04-27-06:42:05.253.179 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE(43893,python3):2025-04-27-06:42:05.253.179 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE(43891,python3):2025-04-27-06:42:05.253.179 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE(43890,python3):2025-04-27-06:42:05.253.179 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE(43888,python3):2025-04-27-06:42:05.253.207 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE(43887,python3):2025-04-27-06:42:05.253.222 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE(43889,python3):2025-04-27-06:42:05.253.262 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE(43886,python3):2025-04-27-06:42:05.253.290 [../../../../../../latest/python/site-packages/tbe/common/repository_manager/utils/repository_manager_log.py:30][log] [../../../../../../latest/python/site-packages/tbe/common/repository_manager/route.py:65][repository_manager] Subprocess[task_distribute] raise error[]
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
[ERROR] TBE Subprocess[task_distribute] raise error[], main process disappeared!
/usr/lib/python3.11/multiprocessing/resource_tracker.py:254: UserWarning: resource_tracker: There appear to be 30 leaked semaphore objects to clean up at shutdown
  warnings.warn('resource_tracker: There appear to be %d '
Daemon is killing...
[2025-04-27 06:42:10.147021][43225][localhost.localdomain][system][stop][endpoint][success]
[2025-04-27 06:42:10.147044][43225][localhost.localdomain][system][stop][mindie server][success]
```
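A log this long buries the cause. As a rough triage aid, a hypothetical filter like the one below keeps only the distinct lines that carry CANN error codes (`EZxxxx`, `ERRxxxxx`) or a Python exception message. The function name and the patterns are assumptions chosen from this particular log, not anything MindIE or GPUStack provides.

```shell
# Hypothetical helper: reduce a MindIE/CANN failure log to its distinct key lines.
# Reads the file named in $1, or stdin when no argument is given ("-" means stdin to grep).
# Patterns: CANN "EZ" codes, "ERR" + 5-digit codes, and lines starting with "<Name>Error:".
key_errors() {
  grep -E 'EZ[0-9]{4}|ERR[0-9]{5}|^[A-Za-z]+Error:' "${1:--}" |
    awk '!seen[$0]++'   # drop repeated lines, keeping the first occurrence in order
}
```

Run it as `key_errors model.log`, or pipe the log into it. Applied to the log above, it leaves four lines: the failing `aclnnInplaceZero` call, the "Parse dynamic kernel config fail." message, the `ERR01100` line and the final `RuntimeError`.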

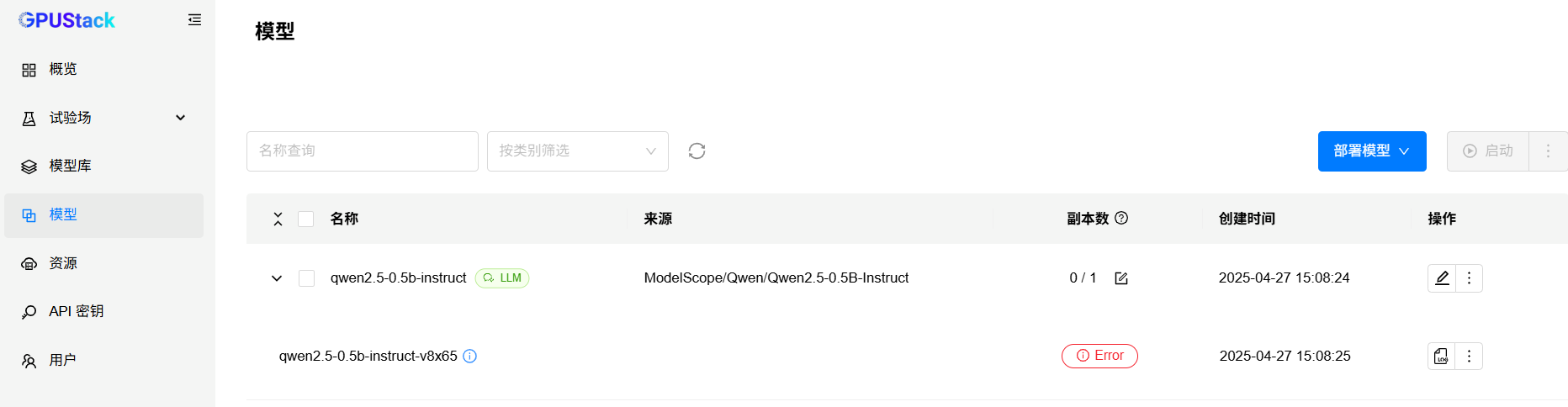

Deploying the Qwen2.5 models

The Qwen2.5 series does appear on the supported list, so I tried it next.

That failed too, which was honestly exasperating: the error log was identical to the one above.

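Then I found the problem: the precision of the model weights has to be changed as well. BF16 is not supported, so it must be switched to FP16.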
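The post does not show how the precision was changed. A common approach, and an assumption here, is to edit the `torch_dtype` field in the model's `config.json` from `bfloat16` to `float16`, since that field is where Hugging Face style checkpoints such as Qwen2.5 declare their precision. The helper below is a hypothetical sketch of that edit (the name `bf16_to_fp16_config` is illustrative, and GNU `sed -i` is assumed); it backs up the file first and does not convert the safetensors weight files themselves.

```shell
# Hypothetical helper: switch a Hugging Face style config.json from BF16 to FP16.
# Assumes precision is declared by the "torch_dtype" key; weight files are untouched.
# Requires GNU sed (for in-place editing with -i and -E extended regexes).
bf16_to_fp16_config() {
  local cfg="$1"
  [ -f "$cfg" ] || { echo "config not found: $cfg" >&2; return 1; }
  cp -- "$cfg" "$cfg.bak"   # keep a backup of the original config
  # Rewrite only the value of "torch_dtype", preserving the key and its spacing.
  sed -i -E 's/("torch_dtype"[[:space:]]*:[[:space:]]*)"bfloat16"/\1"float16"/' "$cfg"
}
```

Point it at the `config.json` inside the downloaded model directory (the exact path depends on how your GPUStack model cache is set up), then restart the model instance so MindIE reloads the config.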

This time it ran successfully!

Testing the chat

Small models pretty much all have this problem, so I switched back to testing larger models. Qwen2.5-7B-Instruct works normally.

DeepSeek-R1-Distill-Qwen-7B: the answers are somewhat muddled. Larger models should be fine.

DeepSeek-R1-Distill-Qwen-14B: tested fine.
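To repeat these chat tests from the command line instead of the web UI, the model can be queried through GPUStack's OpenAI-compatible API. This is a sketch under assumptions: the `/v1-openai/chat/completions` path comes from GPUStack's OpenAI-compatible API documentation and should be checked against your installed version, and `GPUSTACK_URL`, `GPUSTACK_API_KEY` and the model name are placeholders for your own server address, an API key created in the GPUStack UI, and the model name shown in its model list. The helper names `chat_payload` and `chat` are hypothetical.

```shell
# Hypothetical helpers for sending one chat message to a model served by GPUStack.
# The prompt is inserted into the JSON verbatim, so it must not contain
# double quotes or backslashes (no JSON escaping is done here).
chat_payload() {
  local model="$1" prompt="$2"
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}' "$model" "$prompt"
}

# GPUSTACK_URL defaults to http://localhost (assumed server address and port);
# GPUSTACK_API_KEY must be set, otherwise the expansion aborts with an error.
chat() {
  curl -sS "${GPUSTACK_URL:-http://localhost}/v1-openai/chat/completions" \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer ${GPUSTACK_API_KEY:?set GPUSTACK_API_KEY}" \
    -d "$(chat_payload "$1" "$2")"
}
```

For example, after `export GPUSTACK_API_KEY=<your key>`, running `chat qwen2.5-7b-instruct "Introduce yourself in one sentence."` returns the raw JSON response, where the reply text sits in `choices[0].message.content`.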
