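(1) The goal of this post is to sort the queries in the Sogou user query log by their total (summed) count and pick out the hottest ones. The dataset is described below: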

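The columns are tab-separated: access time\tuser ID\t[query term]\trank of this URL in the returned results\torder of the user's click\tURL clicked by the user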
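The jobs in this post read /Key_out, whose lines their mappers parse as query<TAB>count. The step that produces those counts is not shown. The sketch below is a rough, in-memory illustration of that missing step, under an assumption that is not the author's code: it takes the bracketed query term from the third tab-separated field and sums its occurrences. QueryCountSketch, extractQuery and countQueries are hypothetical names. Only the first three fields are used, so the sketch does not depend on how the remaining columns are separated.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class QueryCountSketch {

    // Returns the query term (third tab-separated field) without its surrounding [ ],
    // or null if the line has fewer than three fields or the query is empty.
    static String extractQuery(String line) {
        String[] fields = line.split("\t");
        if (fields.length < 3) {
            return null;
        }
        String q = fields[2].trim();
        if (q.length() >= 2 && q.startsWith("[") && q.endsWith("]")) {
            q = q.substring(1, q.length() - 1);
        }
        return q.isEmpty() ? null : q;
    }

    // Sums occurrences per query, i.e. what an upstream word-count style job would produce.
    static Map<String, Integer> countQueries(List<String> lines) {
        Map<String, Integer> counts = new HashMap<>();
        for (String line : lines) {
            String q = extractQuery(line);
            if (q != null) {
                counts.merge(q, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "00:00:00\t1001\t[hadoop]\t1\t1\thttp://example.com/a",
                "00:00:05\t1002\t[hadoop]\t3\t2\thttp://example.com/b",
                "00:00:09\t1003\t[spark]\t2\t1\thttp://example.com/c");
        // Print "query<TAB>count", the line format the TopK mappers parse
        countQueries(lines).forEach((q, n) -> System.out.println(q + "\t" + n));
    }
}
```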

(2) Three implementations are presented below.

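(2.1) The first approach uses a TreeMap together with an overridden cleanup() method. I find it the simplest of the three, but it has some drawbacks, which are discussed after the code. The job reads /Key_out, and the mapper parses each line as query<TAB>count. Here is the code: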

    package com.sougou;

    import java.io.IOException;
    import java.util.TreeMap;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class TopK {

        public static final int K = 100;

        public static class KMap extends Mapper<LongWritable, Text, IntWritable, Text> {

            // count -> query, holding at most K entries for this map task.
            // Queries with the same count overwrite each other (see the drawbacks below).
            private final TreeMap<Integer, String> map = new TreeMap<Integer, String>();

            @Override
            public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
                String line = value.toString();
                if (line.trim().length() > 0 && line.indexOf("\t") != -1) {
                    // Each input line is "query\tcount"
                    String[] arr = line.split("\t", 2);
                    String name = arr[0];
                    int num;
                    try {
                        num = Integer.parseInt(arr[1].trim());
                    } catch (NumberFormatException e) {
                        return; // skip lines whose count is not a number
                    }
                    map.put(num, name);
                    if (map.size() > K) {
                        map.remove(map.firstKey()); // evict the smallest count
                    }
                }
            }

            @Override
            protected void cleanup(Context context) throws IOException, InterruptedException {
                // Runs once after all input of this task: emit the local top K
                for (Integer num : map.keySet()) {
                    context.write(new IntWritable(num), new Text(map.get(num)));
                }
            }
        }

        public static class KReduce extends Reducer<IntWritable, Text, IntWritable, Text> {

            private final TreeMap<Integer, String> map = new TreeMap<Integer, String>();

            @Override
            public void reduce(IntWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
                // Only the first query for each count is kept
                map.put(key.get(), values.iterator().next().toString());
                if (map.size() > K) {
                    map.remove(map.firstKey());
                }
            }

            @Override
            protected void cleanup(Context context) throws IOException, InterruptedException {
                // Emits in ascending key order, which a combiner's output must also follow
                for (Integer num : map.keySet()) {
                    context.write(new IntWritable(num), new Text(map.get(num)));
                }
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Custom key that Hadoop itself does not read; the paths below rely on
            // the hostname "master" resolving to the NameNode
            conf.set("master", "10.1.18.201:9000");
            // Input and output directories (paths given on the command line take precedence)
            String[] ioArgs = args.length > 0 ? args
                    : new String[] { "hdfs://master:9000/Key_out", "hdfs://master:9000/top_out" };
            String[] otherArgs = new GenericOptionsParser(conf, ioArgs).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: <in> <out>");
                System.exit(2);
            }
            // Create the job
            Job job = Job.getInstance(conf, "top K");
            job.setJarByClass(TopK.class);
            // Mapper, combiner and reducer classes
            job.setMapperClass(KMap.class);
            job.setCombinerClass(KReduce.class);
            job.setReducerClass(KReduce.class);
            job.setNumReduceTasks(1);
            // Output types (also used for the map output here)
            job.setOutputKeyClass(IntWritable.class);
            job.setOutputValueClass(Text.class);
            // Splits the input into InputSplits and provides the RecordReader
            job.setInputFormatClass(TextInputFormat.class);
            // Provides the RecordWriter that writes the output
            job.setOutputFormatClass(TextOutputFormat.class);
            // Input and output directories
            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

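The drawbacks: if there are several reducers, each one runs in its own container, i.e. its own JVM, and only sees its own partition, so each cleanup() emits only a local top K rather than the global one, and those partial results still have to be merged. If there is only one reducer, all data has to pass through that single task, which becomes a bottleneck and may even run into OOM when the data volume is very large. Finally, because the TreeMap is keyed by count, queries with the same count overwrite each other and only one of them survives.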
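Two of these drawbacks can be worked around without changing the overall idea. The plain-Java sketch below (no Hadoop; TieSafeTopK, topK and merge are hypothetical names) maps each count to a list of queries, so equal counts no longer overwrite each other. It then merges several local top-K results, for example one per reducer, into a global top K by running the same selection again. In a real job that merge would be a second single-reducer pass or a client-side step; that wiring is not shown.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class TieSafeTopK {

    // Keeps the k queries with the largest counts. Each count maps to a list of
    // queries, so equal counts no longer overwrite each other.
    static TreeMap<Integer, List<String>> topK(Map<String, Integer> counts, int k) {
        TreeMap<Integer, List<String>> top = new TreeMap<>();
        int size = 0; // number of queries currently held
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            top.computeIfAbsent(e.getValue(), c -> new ArrayList<>()).add(e.getKey());
            size++;
            if (size > k) {
                // Evict one query with the smallest count
                Map.Entry<Integer, List<String>> lowest = top.firstEntry();
                List<String> queries = lowest.getValue();
                queries.remove(queries.size() - 1);
                if (queries.isEmpty()) {
                    top.remove(lowest.getKey());
                }
                size--;
            }
        }
        return top;
    }

    // Merges local top-k results (e.g. one per reducer) into the global top k.
    // Assumes each query appears in only one local result.
    static TreeMap<Integer, List<String>> merge(List<TreeMap<Integer, List<String>>> locals, int k) {
        Map<String, Integer> all = new LinkedHashMap<>();
        for (TreeMap<Integer, List<String>> local : locals) {
            for (Map.Entry<Integer, List<String>> e : local.entrySet()) {
                for (String query : e.getValue()) {
                    all.put(query, e.getKey());
                }
            }
        }
        return topK(all, k);
    }

    public static void main(String[] args) {
        Map<String, Integer> part1 = new LinkedHashMap<>();
        part1.put("a", 5);
        part1.put("c", 3);
        part1.put("d", 1);
        Map<String, Integer> part2 = new LinkedHashMap<>();
        part2.put("b", 5);
        part2.put("e", 4);
        TreeMap<Integer, List<String>> global =
                merge(Arrays.asList(topK(part1, 2), topK(part2, 2)), 3);
        // Print from the largest count down
        global.descendingMap().forEach((count, queries) ->
                queries.forEach(q -> System.out.println(q + "\t" + count)));
    }
}
```

When several queries tie exactly at the K-th position, this sketch keeps an arbitrary subset of them so that the result never exceeds K entries.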

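(2.2) The second approach overrides the Writable's sort order. The map output key is MyIntWritable, an IntWritable subclass whose compareTo() is negated, so the shuffle delivers counts to the reducer in descending order and the reducer only has to emit the first K records it receives.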

    package com.sougou;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class TopN_2 {

        public static final int K = 3;

        // IntWritable with reversed ordering, so the shuffle sorts counts in descending order
        public static class MyIntWritable extends IntWritable {

            public MyIntWritable() {
            }

            public MyIntWritable(int value) {
                super(value);
            }

            @Override
            public int compareTo(IntWritable o) {
                return -super.compareTo(o); // IntWritable sorts ascending by default; negate for descending
            }
        }

        public static class MyMapper extends Mapper<LongWritable, Text, MyIntWritable, Text> {

            @Override
            protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
                String line = value.toString();
                if (line.trim().length() > 0 && line.indexOf("\t") != -1) {
                    // Each input line is "query\tcount"; emit (count, query)
                    String[] arr = line.split("\t", 2);
                    int score;
                    try {
                        score = Integer.parseInt(arr[1].trim());
                    } catch (NumberFormatException e) {
                        return; // skip lines whose count is not a number
                    }
                    context.write(new MyIntWritable(score), new Text(arr[0]));
                }
            }
        }

        public static class MyReducer extends Reducer<MyIntWritable, Text, Text, MyIntWritable> {

            // Records emitted so far. Keys arrive in descending order and the job uses
            // a single reducer, so the first K records are the global top K.
            private int num = 0;

            @Override
            protected void reduce(MyIntWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
                for (Text text : values) {
                    if (num >= K) {
                        return; // K records already written
                    }
                    context.write(text, key);
                    num++;
                }
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Custom key that Hadoop itself does not read
            conf.set("master", "10.1.18.201:9000");
            // Input and output directories (paths given on the command line take precedence)
            String[] ioArgs = args.length > 0 ? args
                    : new String[] { "hdfs://master:9000/Key_out", "hdfs://master:9000/top_out" };
            String[] otherArgs = new GenericOptionsParser(conf, ioArgs).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: <in> <out>");
                System.exit(2);
            }
            // For a local test run:
            // conf.set("mapreduce.framework.name", "local");
            // conf.set("fs.defaultFS", "file:///");
            Job job = Job.getInstance(conf, "top N");
            // job.setJar("/Users/f7689781/Desktop/MyMapReduce.jar");
            job.setJarByClass(TopN_2.class);
            job.setMapperClass(MyMapper.class);
            job.setReducerClass(MyReducer.class);
            // The "first K records" logic in MyReducer only holds with a single reducer
            job.setNumReduceTasks(1);
            job.setMapOutputKeyClass(MyIntWritable.class);
            job.setMapOutputValueClass(Text.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(MyIntWritable.class);
            // Delete an existing output directory before submitting. deleteOnExit() would only
            // delete it when the FileSystem is closed, after the job has already failed.
            Path outPath = new Path(otherArgs[1]);
            FileSystem fileSystem = outPath.getFileSystem(conf);
            if (fileSystem.exists(outPath)) {
                fileSystem.delete(outPath, true);
            }
            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, outPath);
            // exit(status): a non-zero status means the JVM terminated abnormally
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
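The reducer can simply stop after K records because the shuffle sorts keys with MyIntWritable's negated compareTo(), so the largest counts arrive first. The plain-Java simulation below (hypothetical class DescendingShuffleSketch, no Hadoop) groups query<TAB>count lines in a TreeMap ordered by Comparator.reverseOrder() and then emits records the way MyReducer does. It models a single reducer only. In a real reduce() call, the order of queries that share a count is not guaranteed.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class DescendingShuffleSketch {

    // Groups "query\tcount" lines by count in descending key order (the effect of
    // MyIntWritable's negated compareTo), then emits the first k records like MyReducer.
    static List<String> topK(List<String> lines, int k) {
        TreeMap<Integer, List<String>> groups = new TreeMap<>(Comparator.<Integer>reverseOrder());
        for (String line : lines) {
            String[] arr = line.split("\t", 2);
            if (arr.length < 2) {
                continue; // no tab: skipped, as in the mapper
            }
            try {
                int count = Integer.parseInt(arr[1].trim());
                groups.computeIfAbsent(count, c -> new ArrayList<>()).add(arr[0]);
            } catch (NumberFormatException e) {
                // skip lines whose count is not a number
            }
        }
        List<String> out = new ArrayList<>();
        // Iterate from the largest count down, like one reduce() call per key
        for (Map.Entry<Integer, List<String>> e : groups.entrySet()) {
            for (String query : e.getValue()) {
                if (out.size() >= k) {
                    return out;
                }
                out.add(query + "\t" + e.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("spark\t7", "hadoop\t12", "hive\t7", "flink\t2");
        topK(lines, 3).forEach(System.out::println);
    }
}
```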

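(2.3) The third approach sorts directly inside the ReduceTask. This example works on a JSON movie-rating file (rating.json) rather than the query log. The mapper groups the records by movie, and each reduce() call copies that movie's records into a list, sorts them by rate in descending order and writes the first 20.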

    import java.io.File;
    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.Collections;
    import java.util.Comparator;
    import java.util.List;

    import org.apache.commons.io.FileUtils;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import com.alibaba.fastjson.JSON;

    // MovieBean (not shown in this post) must be a Writable with getMovie(), getRate()
    // and a set(MovieBean) method that copies all fields from another instance.
    public class TopN1 {

        public static class MapTask extends Mapper<LongWritable, Text, Text, MovieBean> {

            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                MovieBean movieBean;
                try {
                    // Each input line is one JSON rating record
                    movieBean = JSON.parseObject(value.toString(), MovieBean.class);
                } catch (Exception e) {
                    return; // skip lines that are not valid JSON
                }
                if (movieBean == null || movieBean.getMovie() == null) {
                    return;
                }
                // Group by movie
                context.write(new Text(movieBean.getMovie()), movieBean);
            }
        }

        public static class ReduceTask extends Reducer<Text, MovieBean, MovieBean, NullWritable> {

            @Override
            protected void reduce(Text movieId, Iterable<MovieBean> movieBeans, Context context)
                    throws IOException, InterruptedException {
                List<MovieBean> list = new ArrayList<>();
                for (MovieBean movieBean : movieBeans) {
                    // Hadoop reuses one MovieBean instance while iterating, so each value must be
                    // copied; adding movieBean itself would fill the list with the same object
                    MovieBean movieBean2 = new MovieBean();
                    movieBean2.set(movieBean);
                    list.add(movieBean2);
                }
                // Sort by rate, highest first
                Collections.sort(list, new Comparator<MovieBean>() {
                    @Override
                    public int compare(MovieBean o1, MovieBean o2) {
                        return Integer.compare(o2.getRate(), o1.getRate());
                    }
                });
                // Write at most the top 20 records for this movie
                for (int i = 0; i < Math.min(20, list.size()); i++) {
                    context.write(list.get(i), NullWritable.get());
                }
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, "topN1");
            // Mapper, reducer and the jar to submit
            job.setMapperClass(MapTask.class);
            job.setReducerClass(ReduceTask.class);
            job.setJarByClass(TopN1.class);
            // Map output and final output types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(MovieBean.class);
            job.setOutputKeyClass(MovieBean.class);
            job.setOutputValueClass(NullWritable.class);
            // Input file and output directory
            FileInputFormat.addInputPath(job, new Path("E:/data/rating.json"));
            FileOutputFormat.setOutputPath(job, new Path("E:\\data\\out\\topN1"));
            // Delete the output directory if it already exists
            File file = new File("E:\\data\\out\\topN1");
            if (file.exists()) {
                FileUtils.deleteDirectory(file);
            }
            // Submit the job and wait for it to finish
            boolean completion = job.waitForCompletion(true);
            System.out.println(completion ? "Nice work!!!" : "Go fix your bugs!!");
        }
    }
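The copy in ReduceTask is needed because Hadoop reuses one value object while iterating over Iterable<MovieBean>, refilling it with each record. The plain-Java sketch below imitates that behaviour with a hypothetical Rating class standing in for MovieBean, whose source is not shown. Collecting the shared instance directly yields the last value repeated. Copying first, then sorting by rate in descending order, gives a correct top N. ObjectReuseSketch, reusingIterable, withoutCopy and topN are illustrative names only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;

public class ObjectReuseSketch {

    // Hypothetical stand-in for MovieBean: a mutable holder for one rating.
    static class Rating {
        int rate;

        // Plays the role of MovieBean.set(other): copy all fields into a new object.
        Rating copy() {
            Rating r = new Rating();
            r.rate = this.rate;
            return r;
        }
    }

    // Imitates the values passed to reduce(): the same Rating instance is
    // refilled and returned on every call to next().
    static Iterable<Rating> reusingIterable(List<Integer> rates) {
        Rating shared = new Rating();
        return () -> new Iterator<Rating>() {
            private int i = 0;

            @Override
            public boolean hasNext() {
                return i < rates.size();
            }

            @Override
            public Rating next() {
                if (!hasNext()) {
                    throw new NoSuchElementException();
                }
                shared.rate = rates.get(i++);
                return shared;
            }
        };
    }

    // Collects the values without copying: every element is the shared instance.
    static List<Integer> withoutCopy(Iterable<Rating> values) {
        List<Rating> list = new ArrayList<>();
        for (Rating r : values) {
            list.add(r);
        }
        List<Integer> out = new ArrayList<>();
        for (Rating r : list) {
            out.add(r.rate);
        }
        return out;
    }

    // Copies each value, sorts by rate (highest first) and keeps the first n.
    static List<Integer> topN(Iterable<Rating> values, int n) {
        List<Rating> list = new ArrayList<>();
        for (Rating r : values) {
            list.add(r.copy());
        }
        list.sort((a, b) -> Integer.compare(b.rate, a.rate));
        List<Integer> out = new ArrayList<>();
        for (int i = 0; i < Math.min(n, list.size()); i++) {
            out.add(list.get(i).rate);
        }
        return out;
    }

    public static void main(String[] args) {
        List<Integer> rates = Arrays.asList(5, 3, 4);
        System.out.println(withoutCopy(reusingIterable(rates))); // [4, 4, 4]
        System.out.println(topN(reusingIterable(rates), 2));     // [5, 4]
    }
}
```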

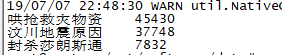

最后的结果如下:
