WrenAI + Ollama Local Deployment: Querying a Database in Natural Language

Author: coder_fang

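WrenAI is an open-source and fairly mature text-to-SQL AI tool that can be deployed with local Ollama models. This article records the configuration that finally ran correctly for the author after several failed attempts; it differs slightly from what the official site provides.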

Environment: WrenAI 0.27.0, Ollama 0.12.5, Docker Desktop on Windows 11, 3080 Ti GPU

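- Install Docker Desktop and Ollama. The models needed in this article are qwen2.5:14b and nomic-embed-text:latest; download them with `ollama pull`. Note that the config.yaml listed later references ollama_chat/qwen2.5-coder:14b, so pull whichever model name you actually put in config.yaml.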
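Before starting the containers it can help to confirm that Ollama really has both models. The sketch below is one way to do that, assuming Ollama is listening on its default port 11434 on the local machine and using its `/api/tags` endpoint to list installed models. The helper names `normalize`, `missing_models`, `fetch_installed`, and `report` are made up for this illustration and are not part of WrenAI or Ollama.

```python
import json
import urllib.request

# Models named in this article; adjust to whatever config.yaml references.
REQUIRED = ["qwen2.5:14b", "nomic-embed-text:latest"]


def normalize(name: str) -> str:
    """Ollama treats a model name without a tag as '<name>:latest'."""
    return name if ":" in name else f"{name}:latest"


def missing_models(required, installed):
    """Return the required models that do not appear in the installed list."""
    have = {normalize(n) for n in installed}
    return [m for m in required if normalize(m) not in have]


def fetch_installed(base_url: str = "http://localhost:11434"):
    """Ask a running Ollama server which models it has (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]


def report(base_url: str = "http://localhost:11434") -> None:
    """Print an `ollama pull` hint for every model that is still missing."""
    for model in missing_models(REQUIRED, fetch_installed(base_url)):
        print(f"missing: run `ollama pull {model}`")
```

With Ollama running, calling `report()` prints nothing when both models are present. The comparison logic is kept separate from the network call so it can be checked without a server.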

-

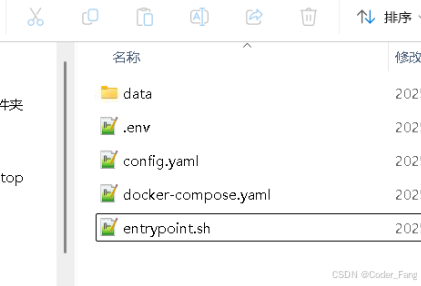

创建wren工作目录,目录结构如图:

- .env

COMPOSE_PROJECT_NAME=wrenai

PLATFORM=linux/amd64

PROJECT_DIR=.

# service port

WREN_ENGINE_PORT=8080

WREN_ENGINE_SQL_PORT=7432

WREN_AI_SERVICE_PORT=5555

WREN_UI_PORT=3000

IBIS_SERVER_PORT=8000

WREN_UI_ENDPOINT=http://wren-ui:${WREN_UI_PORT}

# ai service settings

QDRANT_HOST=qdrant

SHOULD_FORCE_DEPLOY=1

EMBEDDER_OLLAMA_URL=192.168.1.37

EMBEDDING_MODEL=nomic-embed-text

# vendor keys

OPENAI_API_KEY=

# version

# CHANGE THIS TO THE LATEST VERSION

WREN_PRODUCT_VERSION=0.27.0

WREN_ENGINE_VERSION=0.18.3

WREN_AI_SERVICE_VERSION=0.27.1

IBIS_SERVER_VERSION=0.18.3

WREN_UI_VERSION=0.31.1

WREN_BOOTSTRAP_VERSION=0.1.5

# user id (uuid v4)

USER_UUID=

# for other services

POSTHOG_API_KEY=phc_nhF32aj4xHXOZb0oqr2cn4Oy9uiWzz6CCP4KZmRq9aE

POSTHOG_HOST=https://app.posthog.com

TELEMETRY_ENABLED=true

# this is for telemetry to know the model, i think ai-service might be able to provide a endpoint to get the information

GENERATION_MODEL=gpt-4o-mini

LANGFUSE_SECRET_KEY=

LANGFUSE_PUBLIC_KEY=

# the port exposes to the host

# OPTIONAL: change the port if you have a conflict

HOST_PORT=3000

AI_SERVICE_FORWARD_PORT=5555

# Wren UI

EXPERIMENTAL_ENGINE_RUST_VERSION=false

# Wren Engine

# OPTIONAL: set if you want to use local storage for the Wren Engine

LOCAL_STORAGE=.
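The `WREN_UI_ENDPOINT` line relies on `${WREN_UI_PORT}` being substituted when the file is read. As a rough illustration of that substitution, here is a minimal sketch of a .env parser. It is a simplification written for this article, not Docker Compose's actual implementation: it only handles the plain `${NAME}` form and only looks at values defined earlier in the same file. The names `parse_env` and `SAMPLE` are hypothetical.

```python
import re

# Matches the plain ${NAME} form only (no defaults such as ${NAME:-x}).
_VAR = re.compile(r"\$\{(\w+)\}")


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Replace ${NAME} with a value defined earlier in the file (empty if unknown).
        values[key.strip()] = _VAR.sub(
            lambda m: values.get(m.group(1), ""), value.strip()
        )
    return values


# A few lines taken from the .env listing above.
SAMPLE = """
# service port
WREN_UI_PORT=3000
WREN_UI_ENDPOINT=http://wren-ui:${WREN_UI_PORT}
USER_UUID=
"""

env = parse_env(SAMPLE)
print(env["WREN_UI_ENDPOINT"])  # http://wren-ui:3000
```

If the `${` and `WREN_UI_PORT}` parts end up on separate lines, as can happen when copying from a web page, the endpoint no longer resolves to a valid URL, so it is worth checking that line after pasting.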

- config.yaml

type: llm
provider: litellm_llm
models:
  - api_base: http://host.docker.internal:11434 # if you are using mac/windows, don't change this; if you are using linux, please search "Run Ollama in docker container" in this page: https://docs.getwren.ai/oss/installation/custom_llm#running-wren-ai-with-your-custom-llm-embedder
    model: ollama_chat/qwen2.5-coder:14b # ollama_chat/<ollama_model_name>
    timeout: 600
    alias: default
    kwargs:
      n: 1
      temperature: 0

---
type: embedder
provider: litellm_embedder
models:
  - model: ollama/nomic-embed-text # put your ollama embedder model name here, ollama/<ollama_model_name>
    api_base: http://host.docker.internal:11434 # if you are using mac/windows, don't change this; if you are using linux, please search "Run Ollama in docker container" in this page: https://docs.getwren.ai/oss/installation/custom_llm#running-wren-ai-with-your-custom-llm-embedder
    timeout: 600
    alias: default

---
type: engine
provider: wren_ui
endpoint: http://wren-ui:3000

---
type: engine
provider: wren_ibis
endpoint: http://ibis-server:8000

---
type: document_store
provider: qdrant
location: http://qdrant:6333
embedding_model_dim: 768
timeout: 120
recreate_index: true

---
type: pipeline
pipes:
  - name: db_schema_indexing
    embedder: litellm_embedder.default
    document_store: qdrant
  - name: historical_question_indexing
    embedder: litellm_embedder.default
    document_store: qdrant
  - name: table_description_indexing
    embedder: litellm_embedder.default
    document_store: qdrant
  - name: db_schema_retrieval
    llm: litellm_llm.default
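Each pipe refers to its model as `<provider>.<alias>` (for example `litellm_llm.default`) and to its document store by provider name, so a typo in any of those strings is an easy mistake to make. The following is a minimal consistency check, assuming the YAML documents have already been loaded into Python dictionaries. It only compares names; how WrenAI itself resolves these references is not covered by this article, and `defined_aliases`, `check_pipeline`, and `DOCS` are hypothetical names for this sketch.

```python
def defined_aliases(docs):
    """Collect '<provider>.<alias>' names from the llm and embedder documents."""
    names = set()
    for doc in docs:
        if doc.get("type") in ("llm", "embedder"):
            for model in doc.get("models", []):
                if "alias" in model:
                    names.add(f"{doc['provider']}.{model['alias']}")
    return names


def check_pipeline(docs):
    """Return human-readable problems found in the pipeline documents."""
    aliases = defined_aliases(docs)
    stores = {d["provider"] for d in docs if d.get("type") == "document_store"}
    problems = []
    for doc in docs:
        if doc.get("type") != "pipeline":
            continue
        for pipe in doc.get("pipes", []):
            for key in ("llm", "embedder"):
                if key in pipe and pipe[key] not in aliases:
                    problems.append(f"{pipe['name']}: unknown {key} '{pipe[key]}'")
            store = pipe.get("document_store")
            if store is not None and store not in stores:
                problems.append(f"{pipe['name']}: unknown document_store '{store}'")
    return problems


# A trimmed, hand-written mirror of the config.yaml above.
DOCS = [
    {"type": "llm", "provider": "litellm_llm",
     "models": [{"model": "ollama_chat/qwen2.5-coder:14b", "alias": "default"}]},
    {"type": "embedder", "provider": "litellm_embedder",
     "models": [{"model": "ollama/nomic-embed-text", "alias": "default"}]},
    {"type": "document_store", "provider": "qdrant", "embedding_model_dim": 768},
    {"type": "pipeline", "pipes": [
        {"name": "db_schema_indexing",
         "embedder": "litellm_embedder.default", "document_store": "qdrant"},
        {"name": "db_schema_retrieval", "llm": "litellm_llm.default"},
    ]},
]

print(check_pipeline(DOCS))  # []
```

With PyYAML installed, `list(yaml.safe_load_all(f))` on an open config.yaml yields a list of dictionaries in this shape, which can be passed to `check_pipeline` directly.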
