TensorFlow学习笔记02:使用tf.data读取和保存数据文件

使用tf.data读取和写入数据文件

准备加州房价数据集并将其标准化:

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

# 获取房价数据

housing = fetch_california_housing()

# 划分训练集,验证集和测试集

x_train_all, x_test, y_train_all, y_test = train_test_split(housing.data, housing.target)

x_train, x_valid, y_train, y_valid = train_test_split(x_train_all, y_train_all)

print(x_train.shape, y_train.shape)

print(x_valid.shape, y_valid.shape)

print(x_test.shape, y_test.shape)

# 进行数据标准化

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(x_train)

x_valid_scaled = scaler.transform(x_valid)

x_test_scaled = scaler.transform(x_test)

读取和写入csv文件

写入csv文件

将数据集写入到csv文件的代码比较简单,只要使用正常的文件读写即可:

-

定义函数

save_to_csv,将数据写入csv文件中import numpy as np def save_to_csv(output_dir, data, name_prefix, header=None, n_parts=10): """ 将数据存储到多个csv文件中 :param output_dir: 存储csv文件的目录 :param data: 数据 :param name_prefix: 'train','valid'或'test' :param header: csv文件第一行标签 :param n_parts: csv文件个数 :return: csv文件路径列表 """ filename_format = os.path.join(output_dir, "{}_{:05d}-of-{:05d}.csv") filenames = [] # 生成数据集均分n_parts份的索引,将每一部分存入一个csv文件 for file_idx, row_indices in enumerate(np.array_split(np.arange(len(data)), n_parts)): # 生成csv文件名 csv_filename = filename_format.format(name_prefix, file_idx, n_parts) filenames.append(csv_filename) # 写入文件 with open(csv_filename, "wt", encoding="utf-8") as f: if header is not None: # 写入文件头 f.write(header + "\n") for row_index in row_indices: # 写入文件内容 f.write(",".join([repr(col) for col in data[row_index]])) f.write('\n') return filenames -

调用

save_to_csv,将train,valid,test数据分别存入多个csv文件中import os # 创建存储csv文件的目录 output_dir = "data/generate_csv" if not os.path.exists(output_dir): os.makedirs(output_dir) # 准备数据 train_data = np.c_[x_train_scaled, y_train] valid_data = np.c_[x_valid_scaled, y_valid] test_data = np.c_[x_test_scaled, y_test] # 准备header header_cols = housing.feature_names + ["MidianHouseValue"] header_str = ",".join(header_cols) # 将train,valid,test数据存入csv文件 train_filenames = save_to_csv(output_dir, train_data, "train", header_str, n_parts=20) valid_filenames = save_to_csv(output_dir, valid_data, "valid", header_str, n_parts=10) test_filenames = save_to_csv(output_dir, test_data, "test", header_str, n_parts=10) -

查看生成的csv文件名:

import pprint print("train filenames:") pprint.pprint(train_filenames) print("valid filenames:") pprint.pprint(valid_filenames) print("test filenames:") pprint.pprint(test_filenames)输出如下:

train filenames: ['data/generate_csv/train_00000-of-00020', 'data/generate_csv/train_00001-of-00020', ..., 'data/generate_csv/train_00019-of-00020'] valid filenames: ['data/generate_csv/valid_00000-of-00010', 'data/generate_csv/valid_00001-of-00010', ..., 'data/generate_csv/valid_00009-of-00010'] test filenames: ['data/generate_csv/test_00000-of-00010', 'data/generate_csv/test_00001-of-00010', ..., 'data/generate_csv/test_00009-of-00010']

读取csv文件

读取csv数据文件的步骤如下:

-

使用

tf.data.Dataset.list_files(file_pattern)获取csv文件名列表filename_dataset = tf.data.Dataset.list_files(train_filenames) for filename in filename_dataset: print(filename)输出如下:

tf.Tensor(b'data/generate_csv/train_00019-of-00020.csv', shape=(), dtype=string) tf.Tensor(b'data/generate_csv/train_00004-of-00020.csv', shape=(), dtype=string) ... tf.Tensor(b'data/generate_csv/train_00006-of-00020.csv', shape=(), dtype=string) -

使用

tf.data.TextLineDataset(filename)将csv文件内容转为TextLineDataset,注意使用skip()跳过csv文件的headerdataset = filename_dataset.interleave(lambda filename: tf.data.TextLineDataset(filename).skip(1)) # 使用skip跳过header for line in dataset.take(15): print(line.numpy())输出如下:

b'0.801544314532886,0.27216142415910205,-0.11624392696666119,-0.2023115137272354,-0.5430515742518128,-0.021039615516440048,-0.5897620622908205,-0.08241845654707416,3.226' b'0.4853051504718848,-0.8492418886278699,-0.06530126513877861,-0.023379656040017353,1.4974350551260218,-0.07790657783453239,-0.9023632702857819,0.7814514907892068,2.956' ... b'1.1990412250459561,-0.04823952235146133,0.7491221281727167,0.1308828788491473,-0.060375323994361546,-0.02954897439374466,-0.5524365449182886,0.03243130523751367,5.00001' -

使用

tf.io.decode_csv(records, record_defaults)解析一行csv文件内容使用

tf.io.decode_csv(records, record_defaults)函数可以解析一行csv文件内容,其中record_defaults字典存储各字段的默认值.sample_str = '1,2,3,4,5' record_defaults = [tf.constant(0, dtype=tf.int32), 0, np.nan, "hello", tf.constant([]) ] parsed_fields = tf.io.decode_csv(sample_str, record_defaults) print(parsed_fields)输出如下:

[<tf.Tensor: shape=(), dtype=int32, numpy=1>, <tf.Tensor: shape=(), dtype=int32, numpy=2>, <tf.Tensor: shape=(), dtype=float32, numpy=3.0>, <tf.Tensor: shape=(), dtype=string, numpy=b'4'>, <tf.Tensor: shape=(), dtype=float32, numpy=5.0>]解析csv数据过程如下:

def parse_csv_line(line, n_fields=9): defaults = [tf.constant(np.nan)] * n_fields parsed_fields = tf.io.decode_csv(line, record_defaults=defaults) x = tf.stack(parsed_fields[0:-1]) y = tf.stack(parsed_fields[-1:]) return x, y dataset = dataset.map(lambda line: parse_csv_line(line, n_fields=9)) # 输出一条数据 print(next(iter(dataset.take(1))))输出如下

(<tf.Tensor: shape=(8,), dtype=float32, numpy= array([-1.119975 , -1.3298433 , 0.14190045, 0.4658137 , -0.10301778, -0.10744184, -0.7950524 , 1.5304717 ], dtype=float32)>, <tf.Tensor: shape=(1,), dtype=float32, numpy=array([0.66], dtype=float32)>)

读取和保存TFRecord文件

TFRecord基础API

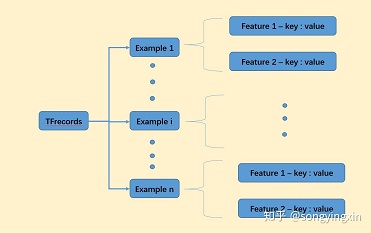

TFRecord文件是一种二进制文件,其结构如下:

-

每条数据由一个

tf.Example对象表示 -

每个

Example对象的features属性为一个tf.train.Features对象,其内容为{"key": tf.train.Feature}字典,每个映射表示一个字段,tf.train.Feature字段可以是以下三种类的实例之一:tf.train.BytesList: 存储string,byte类型的Tensor.tf.train.FloatList: 存储float,double类型的Tensor.tf.train.Int64List: 存储bool,enum,int32,uint32,int64,uint64类型的Tensor.

Example对象的创建和序列化

下面代码演示Example对象的创建和序列化:

-

创建三种

Fature对象:# tf.train.BytesList favorite_books = [name.encode('utf-8') for name in ["machine learning", "cc150"]] favorite_books_bytelist = tf.train.BytesList(value = favorite_books) print("favorite_books_bytelist:") print(favorite_books_bytelist) # tf.train.FloatList hours_floatlist = tf.train.FloatList(value = [15.5, 9.5, 7.0, 8.0]) print("hours_floatlist:") print(hours_floatlist) # tf.train.Int64List age_int64list = tf.train.Int64List(value = [42]) print("age_int64list:") print(age_int64list)输出如下:

favorite_books_bytelist: value: "machine learning" value: "cc150" hours_floatlist: value: 15.5 value: 9.5 value: 7.0 value: 8.0 age_int64list: value: 42 -

创建

Fatures对象:features = tf.train.Features( feature = { "favorite_books": tf.train.Feature(bytes_list = favorite_books_bytelist), "hours": tf.train.Feature(float_list = hours_floatlist), "age": tf.train.Feature(int64_list = age_int64list), } ) print(features)输出如下:

feature { key: "age" value { int64_list { value: 42 } } } feature { key: "favorite_books" value { bytes_list { value: "machine learning" value: "cc150" } } } feature { key: "hours" value { float_list { value: 15.5 value: 9.5 value: 7.0 value: 8.0 } } } -

创建

tf.train.Example对象:example = tf.train.Example(features=features) print(example)输出如下:

features { feature { key: "age" value { int64_list { value: 42 } } } feature { key: "favorite_books" value { bytes_list { value: "machine learning" value: "cc150" } } } feature { key: "hours" value { float_list { value: 15.5 value: 9.5 value: 7.0 value: 8.0 } } } } -

使用

Example对象的SerializeToString()方法将其序列化为字节流:serialized_example = example.SerializeToString() print(serialized_example)输出如下:

b'\n\\\n-\n\x0efavorite_books\x12\x1b\n\x19\n\x10machine learning\n\x05cc150\n\x0c\n\x03age\x12\x05\x1a\x03\n\x01*\n\x1d\n\x05hours\x12\x14\x12\x12\n\x10\x00\x00xA\x00\x00\x18A\x00\x00\xe0@\x00\x00\x00A'

TFRecord文件的读写

-

使用

tf.io.TFRecordWriter对象的write()方法可以将字节流写入TFRecord文件:filename = "test.tfrecords" with tf.io.TFRecordWriter(filename) as writer: for i in range(3): # 将同样的三个Example对象写入TFRecords文件 writer.write(serialized_example)通过传入

tf.io.TFRecordOptions对象可以控制TFRecords文件的压缩类型:filename = "test.tfrecords.zip" options = tf.io.TFRecordOptions(compression_type = "GZIP") with tf.io.TFRecordWriter(filename, options) as writer: for i in range(3): # 将同样的三个Example对象写入TFRecords文件 writer.write(serialized_example) -

使用

tf.data.TFRecordDataset将TFRecords文件读取为TFRecordDataset对象,其内容为每个Example对象序列化后的字节流:dataset = tf.data.TFRecordDataset(["test.tfrecords.zip",], compression_type= "GZIP") for serialized_example_tensor in dataset: print(serialized_example_tensor)输出如下:

tf.Tensor(b'\n\\\n\x1d\n\x05hours\x12\x14\x12\x12\n\x10\x00\x00xA\x00\x00\x18A\x00\x00\xe0@\x00\x00\x00A\n-\n\x0efavorite_books\x12\x1b\n\x19\n\x10machine learning\n\x05cc150\n\x0c\n\x03age\x12\x05\x1a\x03\n\x01*', shape=(), dtype=string) tf.Tensor(b'\n\\\n\x1d\n\x05hours\x12\x14\x12\x12\n\x10\x00\x00xA\x00\x00\x18A\x00\x00\xe0@\x00\x00\x00A\n-\n\x0efavorite_books\x12\x1b\n\x19\n\x10machine learning\n\x05cc150\n\x0c\n\x03age\x12\x05\x1a\x03\n\x01*', shape=(), dtype=string) tf.Tensor(b'\n\\\n\x1d\n\x05hours\x12\x14\x12\x12\n\x10\x00\x00xA\x00\x00\x18A\x00\x00\xe0@\x00\x00\x00A\n-\n\x0efavorite_books\x12\x1b\n\x19\n\x10machine learning\n\x05cc150\n\x0c\n\x03age\x12\x05\x1a\x03\n\x01*', shape=(), dtype=string) -

使用

tf.io.parse_single_example(serialized_example_tensor, feature_description)方法将序列化后的字节流解析为Tensor字典,其中feature_description字典参数指定各字段类型.# feature_description指定各字段类型 feature_description = { "favorite_books": tf.io.VarLenFeature(dtype = tf.string), "hours": tf.io.VarLenFeature(dtype = tf.float32), "age": tf.io.FixedLenFeature([], dtype = tf.int64), } # 读取TfRecord文件 dataset = tf.data.TFRecordDataset([filename]) for serialized_example_tensor in dataset: # 将序列化后的字节流解析为字典 example = tf.io.parse_single_example(serialized_example_tensor, feature_description) print(example)输出如下:

{'favorite_books': <tensorflow.python.framework.sparse_tensor.SparseTensor object at 0x7fad1f7632e8>, 'hours': <tensorflow.python.framework.sparse_tensor.SparseTensor object at 0x7fad1f763fd0>, 'age': <tf.Tensor: shape=(), dtype=int64, numpy=42>} {'favorite_books': <tensorflow.python.framework.sparse_tensor.SparseTensor object at 0x7fad1f763f98>, 'hours': <tensorflow.python.framework.sparse_tensor.SparseTensor object at 0x7fad1f7639b0>, 'age': <tf.Tensor: shape=(), dtype=int64, numpy=42>} {'favorite_books': <tensorflow.python.framework.sparse_tensor.SparseTensor object at 0x7fad1f763fd0>, 'hours': <tensorflow.python.framework.sparse_tensor.SparseTensor object at 0x7fad1f7632e8>, 'age': <tf.Tensor: shape=(), dtype=int64, numpy=42>}

写入TFRecord文件

将数据集拆分为两个feature,分别是input_features和label

input_features_train = tf.data.Dataset.from_tensor_slices(x_train_scaled)

label_train = tf.data.Dataset.from_tensor_slices(y_train)

dataset_train = tf.data.Dataset.zip((input_features_train, label_train))

-

序列化一条数据的代码如下:

def serialize_example(x, y): """Converts x, y to tf.train.Example and serialize""" input_feautres = tf.train.FloatList(value = x) label = tf.train.FloatList(value = y) features = tf.train.Features( feature = { "input_features": tf.train.Feature(float_list = input_feautres), "label": tf.train.Feature(float_list = label) } ) example = tf.train.Example(features = features) return example.SerializeToString() -

将数据集写入TFRecord文件的代码如下:

def save_to_tfrecords(base_filename, dataset, n_shards, steps_per_shard, compression_type = None): options = tf.io.TFRecordOptions(compression_type = compression_type) all_filenames = [] for shard_id in range(n_shards): filename = '{}_{:05d}-of-{:05d}'.format(base_filename, shard_id, n_shards) with tf.io.TFRecordWriter(filename, options) as writer: for x_batch, y_batch in dataset.take(steps_per_shard): for x_example, y_example in zip(x_batch, y_batch): writer.write(serialize_example(x_example, y_example)) all_filenames.append(filename_fullpath) return all_filenames

读取TFRecord文件

-

解析一条字节流的代码如下:

feature_description = { "input_features": tf.io.FixedLenFeature([8], dtype=tf.float32), "label": tf.io.FixedLenFeature([1], dtype=tf.float32) } def parse_example(serialized_example): example = tf.io.parse_single_example(serialized_example, feature_description) return example["input_features"], example["label"] -

读取TFRecord文件的代码如下:

def tfrecords_reader_dataset(filenames, batch_size=32, compression_type=None): dataset = tf.data.Dataset.list_files(filenames) dataset = dataset.repeat() dataset = dataset.interleave(lambda filename: tf.data.TFRecordDataset(filename, compression_type=compression_type)) dataset.shuffle() dataset = dataset.map(parse_example,) dataset = dataset.batch(batch_size) return dataset

4887

4887

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?