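To take full advantage of Python's data-analysis capabilities on Flink, you need to install PyFlink, just as you would install PySpark for Spark.

First, activate the previously created virtual environment pyspark_env:

    conda activate pyspark_env

PyFlink can be installed from PyPI (be patient, the download and installation take a while):

    pip install apache-flink==1.16.3

Note: starting with Flink 1.11, PyFlink jobs can run on Windows, so you can also develop and debug PyFlink jobs there. Reference: Installation (环境安装) | Apache Flink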
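To confirm that the install succeeded, one option is to ask Python which version of the distribution is present. The following is a minimal sketch using only the standard library. The helper name `installed_version` is illustrative and not part of PyFlink; `apache-flink` is the distribution name from the pip command above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "apache-flink" is the PyPI distribution name used in the pip command.
    found = installed_version("apache-flink")
    print("apache-flink version:", found if found else "not installed")
```

Run it inside the activated pyspark_env environment so that it inspects the same interpreter that pip installed into.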

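A Flink program consists of three parts: Source, Transformation, and Sink.

Source: where the data comes from, for example readTextFile() or socketTextStream(). Sources include local collections, files, network sockets, streaming sources such as message queues or distributed logs, and custom sources.

Transformation: how the data is transformed, using operators such as map() and flatMap().

Sink: where the results go. Results can be sent to HDFS, text files, MySQL, Elasticsearch, and so on, for example with the writeAsText() operator.

The operator names above are the Java API spellings. PyFlink uses snake_case equivalents such as read_text_file() and flat_map(), as in the scripts in this article.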
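The three roles can be pictured with a plain-Python analogy before touching the Flink API. This is only an illustration built on generators: the function names `source`, `transformation`, and `sink` are made up for this sketch and nothing here is a PyFlink call.

```python
from typing import Callable, Iterable, Iterator, List


def source(lines: Iterable[str]) -> Iterator[str]:
    """Source: emit records one at a time (here, from a local collection)."""
    yield from lines


def transformation(records: Iterable[str], fn: Callable[[str], str]) -> Iterator[str]:
    """Transformation: apply fn to every record, like a map() operator."""
    for record in records:
        yield fn(record)


def sink(records: Iterable[str]) -> List[str]:
    """Sink: consume the stream; this sketch collects instead of writing out."""
    return list(records)


if __name__ == "__main__":
    result = sink(transformation(source(["flink", "spark"]), str.upper))
    print(result)
```

As in Flink, nothing flows until the sink pulls records through the chain; the generators only describe the pipeline.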

简单打印HDFS文件内容

from pyflink.datastream import StreamExecutionEnvironment

import os

def printdata(input):

os.environ["HADOOP_CLASSPATH"] = os.popen("hadoop classpath").read()

env = StreamExecutionEnvironment.get_execution_environment()

env.set_parallelism(1)

data_stream = env.read_text_file(input) #source

data_stream.print() #sink

env.execute()

if __name__ == '__main__':

input = "hdfs://master:9000/input/flinkwords.txt"

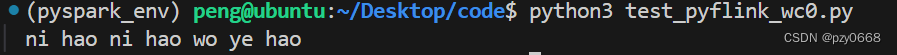

printdata(input)虚拟环境中直接 python3 test_pyflink_wc0.py 执行结果如下:
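The scripts in this article set HADOOP_CLASSPATH through os.popen("hadoop classpath"), which yields an empty string when the hadoop command is missing. A more defensive variant might look like the sketch below. The helper names `hadoop_classpath` and `export_hadoop_classpath` are hypothetical, and the sketch assumes the `hadoop` executable is expected on PATH.

```python
import os
import shutil
import subprocess
from typing import Optional


def hadoop_classpath(command: str = "hadoop") -> Optional[str]:
    """Return the output of `<command> classpath`, or None if unavailable."""
    if shutil.which(command) is None:
        return None  # the command is not on PATH
    proc = subprocess.run([command, "classpath"], capture_output=True, text=True)
    if proc.returncode != 0:
        return None  # the command ran but reported an error
    return proc.stdout.strip()  # drop the trailing newline


def export_hadoop_classpath(command: str = "hadoop") -> bool:
    """Set HADOOP_CLASSPATH in this process; return False if it could not be found."""
    classpath = hadoop_classpath(command)
    if classpath is None:
        return False
    os.environ["HADOOP_CLASSPATH"] = classpath
    return True
```

Calling export_hadoop_classpath() before creating the execution environment, and stopping early when it returns False, makes a missing Hadoop installation visible up front.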

To print a local file instead, change the scheme of input to file:///.
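If the local path is relative or contains spaces, building the file:/// URI by hand is error-prone. A small sketch using the standard library's pathlib follows; the helper name `to_file_uri` is illustrative.

```python
from pathlib import Path


def to_file_uri(local_path: str) -> str:
    """Convert a local path to a file:// URI (relative paths are resolved first)."""
    return Path(local_path).resolve().as_uri()


if __name__ == "__main__":
    # The printed URI depends on the current working directory.
    print(to_file_uri("flinkwords.txt"))
```

The returned string can then be passed as input in place of the hdfs:// path.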

单词词频统计,批处理方式

from pyflink.datastream import StreamExecutionEnvironment, RuntimeExecutionMode

from pyflink.common import Types

import os

def printdata(input):

os.environ["HADOOP_CLASSPATH"] = os.popen("hadoop classpath").read()

env = StreamExecutionEnvironment.get_execution_environment()

env.set_runtime_mode(RuntimeExecutionMode.BATCH)

env.set_parallelism(1)

ds = env.read_text_file(input) #source

def split(line):

yield from line.split()

ds = ds.flat_map(split) \

.map(lambda i: (i, 1), output_type=Types.TUPLE([Types.STRING(), Types.INT()])) \

.key_by(lambda i:i[0]) \

.reduce(lambda i, j: (i[0], i[1] + j[1])) #transformation

ds.print() #sink

env.execute()

if __name__ == '__main__':

input = "hdfs://master:9000/input/flinkwords.txt"

printdata(input)

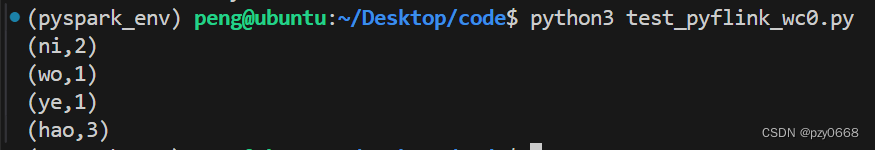

在虚拟环境中,执行结果如下:
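To sanity-check the job's output on a small input, it can help to have a plain-Python reference of what the flat_map, map, key_by, and reduce chain computes. The sketch below is not Flink code and the function names are made up. It also illustrates why the runtime mode matters: in BATCH mode the keyed reduce emits one final total per word, whereas in STREAMING mode it emits an updated count for every incoming word.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def final_counts(lines: Iterable[str]) -> Dict[str, int]:
    """One total per word, like the keyed reduce in BATCH mode."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.split())  # same whitespace tokenization as the job
    return dict(counts)


def rolling_counts(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """One updated (word, count) per input word, like the reduce in STREAMING mode."""
    counts: Dict[str, int] = {}
    updates: List[Tuple[str, int]] = []
    for line in lines:
        for word in line.split():
            counts[word] = counts.get(word, 0) + 1
            updates.append((word, counts[word]))
    return updates


if __name__ == "__main__":
    sample = ["a b a", "b a"]
    print(final_counts(sample))
    print(rolling_counts(sample))
```

Comparing final_counts on a local copy of the input with the printed job output is a quick way to spot tokenization surprises.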

系统自带的例子

稍微改下:

from pyflink.common import WatermarkStrategy, Encoder, Types

from pyflink.datastream import StreamExecutionEnvironment, RuntimeExecutionMode

from pyflink.datastream.connectors.file_system import (FileSource, StreamFormat, FileSink,

OutputFileConfig, RollingPolicy)

import os

word_count_data = ["To be, or not to be,--that is the question:--",

"Whether 'tis nobler in the mind to suffer",

"The slings and arrows of outrageous fortune",

"Or to take arms against a sea of troubles,",

"And by opposing end them?--To die,--to sleep,--",

"No more; and by a sleep to say we end",

"The heartache, and the thousand natural shocks",

"That flesh is heir to,--'tis a consummation",

"Devoutly to be wish'd. To die,--to sleep;--",

"To sleep! perchance to dream:--ay, there's the rub;",

"For in that sleep of death what dreams may come,",

"When we have shuffled off this mortal coil,",

"Must give us pause: there's the respect",

"That makes calamity of so long life;",

"For who would bear the whips and scorns of time,",

"The oppressor's wrong, the proud man's contumely,",

"The pangs of despis'd love, the law's delay,",

"The insolence of office, and the spurns",

"That patient merit of the unworthy takes,",

"When he himself might his quietus make",

"With a bare bodkin? who would these fardels bear,",

"To grunt and sweat under a weary life,",

"But that the dread of something after death,--",

"The undiscover'd country, from whose bourn",

"No traveller returns,--puzzles the will,",

"And makes us rather bear those ills we have",

"Than fly to others that we know not of?",

"Thus conscience does make cowards of us all;",

"And thus the native hue of resolution",

"Is sicklied o'er with the pale cast of thought;",

"And enterprises of great pith and moment,",

"With this regard, their currents turn awry,",

"And lose the name of action.--Soft you now!",

"The fair Ophelia!--Nymph, in thy orisons",

"Be all my sins remember'd."]

def word_count(input_path, output_path):

#创建执行环境对象

os.environ["HADOOP_CLASSPATH"] = os.popen("hadoop classpath").read()

env = StreamExecutionEnvironment.get_execution_environment()

env.set_runtime_mode(RuntimeExecutionMode.BATCH)

# write all the data to one file

env.set_parallelism(1)

# define the source

if input_path is not None:

ds = env.from_source(

source=FileSource.for_record_stream_format(StreamFormat.text_line_format(),

input_path)

.process_static_file_set().build(),

watermark_strategy=WatermarkStrategy.for_monotonous_timestamps(),

source_name="file_source"

)

else:

print("Executing word_count example with default input data set.")

print("Use --input to specify file input.")

ds = env.from_collection(word_count_data)

def split(line):

yield from line.split()

# compute word count

ds = ds.flat_map(split) \

.map(lambda i: (i, 1), output_type=Types.TUPLE([Types.STRING(), Types.INT()])) \

.key_by(lambda i: i[0]) \

.reduce(lambda i, j: (i[0], i[1] + j[1]))

# define the sink

if output_path is not None:

ds.sink_to(

sink=FileSink.for_row_format(

base_path=output_path,

encoder=Encoder.simple_string_encoder())

.with_output_file_config(

OutputFileConfig.builder()

.with_part_prefix("prefix")

.with_part_suffix(".ext")

.build())

.with_rolling_policy(RollingPolicy.default_rolling_policy())

.build()

)

else:

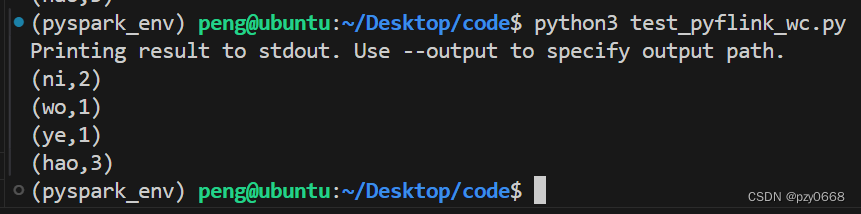

print("Printing result to stdout. Use --output to specify output path.")

ds.print()

# submit for execution

env.execute()

if __name__ == '__main__':

input = "hdfs://master:9000/input/flinkwords.txt"

output = ""

word_count(input, None)

结果如下:
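Because the example splits lines on whitespace only, tokens such as "be," and "be", or "To" and "to", are counted as different words. If that is not wanted, a normalizing tokenizer could be swapped in for split. The sketch below is one possible version, not part of the bundled example: the name `split_words` and the regular expression are assumptions about what should count as a word.

```python
import re
from typing import Iterator

# Assumption: a "word" is a run of ASCII letters and apostrophes.
WORD_RE = re.compile(r"[a-z']+")


def split_words(line: str) -> Iterator[str]:
    """Yield lower-cased words with surrounding punctuation removed."""
    for token in WORD_RE.findall(line.lower()):
        word = token.strip("'")  # drop leading/trailing apostrophes, keep inner ones
        if word:
            yield word


if __name__ == "__main__":
    print(list(split_words("To be, or not to be,--that is the question:--")))
```

Like split, this is a generator function taking one line, so it should be usable as ds.flat_map(split_words); that substitution has not been run against a Flink cluster here.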

That completes the simple examples. There is also the Table API approach, which is left for later exploration.
