目录

一、安装torchserve

直接参考官方:

二、生成mar文件

1、编写handler代码

# -*- coding: utf-8 -*-

import pickle

import numpy as np

from ts.torch_handler.base_handler import BaseHandler

import torch

import torchvision

import time

import torch.nn.functional as F

def xywh2xyxy(x):

# Convert nx4 boxes from [x, y, w, h] to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right

y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)

y[:, 0] = x[:, 0] - x[:, 2] / 2 # top left x

y[:, 1] = x[:, 1] - x[:, 3] / 2 # top left y

y[:, 2] = x[:, 0] + x[:, 2] / 2 # bottom right x

y[:, 3] = x[:, 1] + x[:, 3] / 2 # bottom right y

return y

class ModelHandler(BaseHandler):

def __init__(self):

super().__init__()

self._context = None

self.initialized = False

self.explain = False

self.target = 0

def preprocess(self, data):

# (b, 3, 640, 640)

print(type(data))

data = pickle.loads(data[0].get("data"))

preprocessed_data = data['img']

print(preprocessed_data.shape)

params = data['params']

print(params)

self.params = params

self.conf_thres = params['conf_thres']

self.iou_thres = params['iou_thres']

preprocessed_data = torch.from_numpy(preprocessed_data).cuda()

preprocessed_data = preprocessed_data / 255.

# (3, 640, 640)

#preprocessed_data = preprocessed_data.permute(2, 0, 1)

# (1, 3, 640, 640)

#preprocessed_data = preprocessed_data.unsqueeze(0)

preprocessed_data = preprocessed_data.type(torch.cuda.FloatTensor)

return preprocessed_data

def inference(self, model_input, *args, **kwargs):

# Do some inference call to engine here and return output

with torch.no_grad():

pred = self.model.forward(model_input)[0]

print('pred shape ', pred.shape)

return pred

def postprocess(self, inference_output):

postprocess_output = inference_output

#postprocess_output = [i for i in inference_output]

pred = self.non_max_suppression(postprocess_output, conf_thres=self.conf_thres, iou_thres=self.iou_thres)

pred = [p.tolist() for p in pred]

print('post pred shape ', np.array(pred).shape)

return [pred]

def non_max_suppression(self, prediction,

conf_thres=0.25,

iou_thres=0.45,

classes=None,

agnostic=False,

multi_label=False,

labels=(),

max_det=300):

"""Non-Maximum Suppression (NMS) on inference results to reject overlapping bounding boxes

Returns:

list of detections, on (n,6) tensor per image [xyxy, conf, cls]

"""

bs = prediction.shape[0] # batch size

nc = prediction.shape[2] - 5 # number of classes

xc = prediction[..., 4] > conf_thres # candidates

# Checks

assert 0 <= conf_thres <= 1, f'Invalid Confidence threshold {conf_thres}, valid values are between 0.0 and 1.0'

assert 0 <= iou_thres <= 1, f'Invalid IoU {iou_thres}, valid values are between 0.0 and 1.0'

# Settings

# min_wh = 2 # (pixels) minimum box width and height

max_wh = 7680 # (pixels) maximum box width and height

max_nms = 30000 # maximum number of boxes into torchvision.ops.nms()

time_limit = 0.1 + 0.03 * bs # seconds to quit after

redundant = True # require redundant detections

multi_label &= nc > 1 # multiple labels per box (adds 0.5ms/img)

merge = False # use merge-NMS

t = time.time()

output = [torch.zeros((0, 6), device=prediction.device)] * bs

for xi, x in enumerate(prediction): # image index, image inference

# Apply constraints

# x[((x[..., 2:4] < min_wh) | (x[..., 2:4] > max_wh)).any(1), 4] = 0 # width-height

x = x[xc[xi]] # confidence

# Cat apriori labels if autolabelling

if labels and len(labels[xi]):

lb = labels[xi]

v = torch.zeros((len(lb), nc + 5), device=x.device)

v[:, :4] = lb[:, 1:5] # box

v[:, 4] = 1.0 # conf

v[range(len(lb)), lb[:, 0].long() + 5] = 1.0 # cls

x = torch.cat((x, v), 0)

# If none remain process next image

if not x.shape[0]:

continue

# Compute conf

x[:, 5:] *= x[:, 4:5] # conf = obj_conf * cls_conf

# Box (center x, center y, width, height) to (x1, y1, x2, y2)

box = xywh2xyxy(x[:, :4])

# Detections matrix nx6 (xyxy, conf, cls)

if multi_label:

i, j = (x[:, 5:] > conf_thres).nonzero(as_tuple=False).T

x = torch.cat((box[i], x[i, j + 5, None], j[:, None].float()), 1)

else: # best class only

conf, j = x[:, 5:].max(1, keepdim=True)

x = torch.cat((box, conf, j.float()), 1)[conf.view(-1) > conf_thres]

# Filter by class

if classes is not None:

x = x[(x[:, 5:6] == torch.tensor(classes, device=x.device)).any(1)]

# Apply finite constraint

# if not torch.isfinite(x).all():

# x = x[torch.isfinite(x).all(1)]

# Check shape

n = x.shape[0] # number of boxes

if not n: # no boxes

continue

elif n > max_nms: # excess boxes

x = x[x[:, 4].argsort(descending=True)[:max_nms]] # sort by confidence

# Batched NMS

c = x[:, 5:6] * (0 if agnostic else max_wh) # classes

boxes, scores = x[:, :4] + c, x[:, 4] # boxes (offset by class), scores

i = torchvision.ops.nms(boxes, scores, iou_thres) # NMS

if i.shape[0] > max_det: # limit detections

i = i[:max_det]

if merge and (1 < n < 3E3): # Merge NMS (boxes merged using weighted mean)

# update boxes as boxes(i,4) = weights(i,n) * boxes(n,4)

iou = box_iou(boxes[i], boxes) > iou_thres # iou matrix

weights = iou * scores[None] # box weights

x[i, :4] = torch.mm(weights, x[:, :4]).float() / weights.sum(1, keepdim=True) # merged boxes

if redundant:

i = i[iou.sum(1) > 1] # require redundancy

output[xi] = x[i]

if (time.time() - t) > time_limit:

LOGGER.warning(f'WARNING: NMS time limit {time_limit:.3f}s exceeded')

break # time limit exceeded

return output

def handle(self, data, context):

model_input = self.preprocess(data)

model_output = self.inference(model_input)

res = self.postprocess(model_output)

return res

if __name__ == '__main__':

handler = ModelHandler()

handler.initialize()

x = torch.randn(640,640,3)

x = handler.preprocess(x)

x = handle.inference(x)

x = handler.postprocess(x)

print(x)

主要就是写前处理、推理过程、后处理。

这里的前处理部分,输入数据是4维的,需要在访问模型服务是将img转成对应格式,也可以在前处理部分进行维度转换相关操作,两种方式都可以。

后处理部分,主要就是非极大值抑制,直接用的yolov5中的代码。

编写完成后,可以转成mar文件然后测试,一开始可能会有不少问题,我这里加了一些打印调试用的,如果能直接调试handler文件就好了,暂时没找到方法。

2、生成mar文件

a、先将yolov5的模型转成torchscript

这个比较简单,参考官方文档就行,有一个地方注意一下,转gpu的话,需要设置--device参数,默认是转cpu版本。

也可以不转torchscript模型,这种方式暂时没有尝试。

b、转mar文件

torch-model-archiver --model-name yolov5 --version 1.0 --serialized-file ../detection/yolov5/yolov5s.torchscript --export-path ../detection/yolov5/ --handler torch_serve/ts/torch_handler/yolov5_handler.py -f三、测试

1、测试代码

通过grpc方式:

# -*- coding: utf-8 -*-

import json

import pickle

import cv2

import numpy as np

import time

from PIL import Image, ImageDraw, ImageFont

from torchserve_grpc_client import *

stub = get_inference_stub()

def letterbox(im, new_shape=(640, 640), color=(114, 114, 114), auto=False, scaleFill=False, scaleup=True, stride=32):

# Resize and pad image while meeting stride-multiple constraints

shape = im.shape[:2] # current shape [height, width]

if isinstance(new_shape, int):

new_shape = (new_shape, new_shape)

# Scale ratio (new / old)

r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

if not scaleup: # only scale down, do not scale up (for better val mAP)

r = min(r, 1.0)

# Compute padding

ratio = r, r # width, height ratios

new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))

dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1] # wh padding

if auto: # minimum rectangle

dw, dh = np.mod(dw, stride), np.mod(dh, stride) # wh padding

elif scaleFill: # stretch

dw, dh = 0.0, 0.0

new_unpad = (new_shape[1], new_shape[0])

ratio = new_shape[1] / shape[1], new_shape[0] / shape[0] # width, height ratios

dw /= 2 # divide padding into 2 sides

dh /= 2

if shape[::-1] != new_unpad: # resize

im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)

top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))

left, right = int(round(dw - 0.1)), int(round(dw + 0.1))

im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color) # add border

return im, ratio, (dw, dh)

def get_data(img_file):

img0 = cv2.imread(img_file)

img = letterbox(img0, new_shape=(640, 640))[0]

img = img.transpose((2, 0, 1))[::-1] # to chw rgb

img = img[np.newaxis, ...]

print(img.shape)

return img0, img

def scale_coords(img1_shape, coords, img0_shape, ratio_pad=None):

# Rescale coords (xyxy) from img1_shape to img0_shape

if ratio_pad is None: # calculate from img0_shape

gain = min(img1_shape[0] / img0_shape[0], img1_shape[1] / img0_shape[1]) # gain = old / new

pad = (img1_shape[1] - img0_shape[1] * gain) / 2, (img1_shape[0] - img0_shape[0] * gain) / 2 # wh padding

else:

gain = ratio_pad[0][0]

pad = ratio_pad[1]

coords[:, [0, 2]] -= pad[0] # x padding

coords[:, [1, 3]] -= pad[1] # y padding

coords[:, :4] /= gain

clip_coords(coords, img0_shape)

return coords

def clip_coords(boxes, shape):

# Clip bounding xyxy bounding boxes to image shape (height, width)

#if isinstance(boxes, torch.Tensor): # faster individually

# boxes[:, 0].clamp_(0, shape[1]) # x1

# boxes[:, 1].clamp_(0, shape[0]) # y1

# boxes[:, 2].clamp_(0, shape[1]) # x2

# boxes[:, 3].clamp_(0, shape[0]) # y2

#else: # np.array (faster grouped)

boxes[:, [0, 2]] = boxes[:, [0, 2]].clip(0, shape[1]) # x1, x2

boxes[:, [1, 3]] = boxes[:, [1, 3]].clip(0, shape[0]) # y1, y2

def post_process(pred, img0, img):

print(pred.shape)

det = pred[0] # one image

if det is not None and len(det):

# Rescale boxes from imgsz to im0 size

det[:, :4] = scale_coords(img.shape[2:], det[:, :4], img0.shape).round()

return det

def draw_img(x, img, color=None, label=None, line_thickness=None):

# Plots one bounding box on image img

tl = line_thickness or round(0.002 * (img.shape[0] + img.shape[1]) / 2) + 1 # line/font thickness

color = color or [random.randint(0, 255) for _ in range(3)]

c1, c2 = (int(x[0]), int(x[1])), (int(x[2]), int(x[3]))

cv2.rectangle(img, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)

if label:

tf = max(tl - 1, 1) # font thickness

t_size = cv2.getTextSize(label, 0, fontScale=tl / 3, thickness=tf)[0]

c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3

cv2.rectangle(img, c1, c2, color, -1, cv2.LINE_AA) # filled

cv2.putText(img, label, (c1[0], c1[1] - 2), 0, tl / 3, [225, 255, 255], thickness=tf, lineType=cv2.LINE_AA)

#font_size = 20

#img_pil = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

#font = ImageFont.truetype('./ukai.ttc', size=font_size)

#draw = ImageDraw.Draw(img_pil)

#draw.text((c1[0], max(c1[1], 0)), label, (255, 0, 0), font)

#img = cv2.cvtColor(np.asarray(img_pil), cv2.COLOR_RGB2BGR)

return img

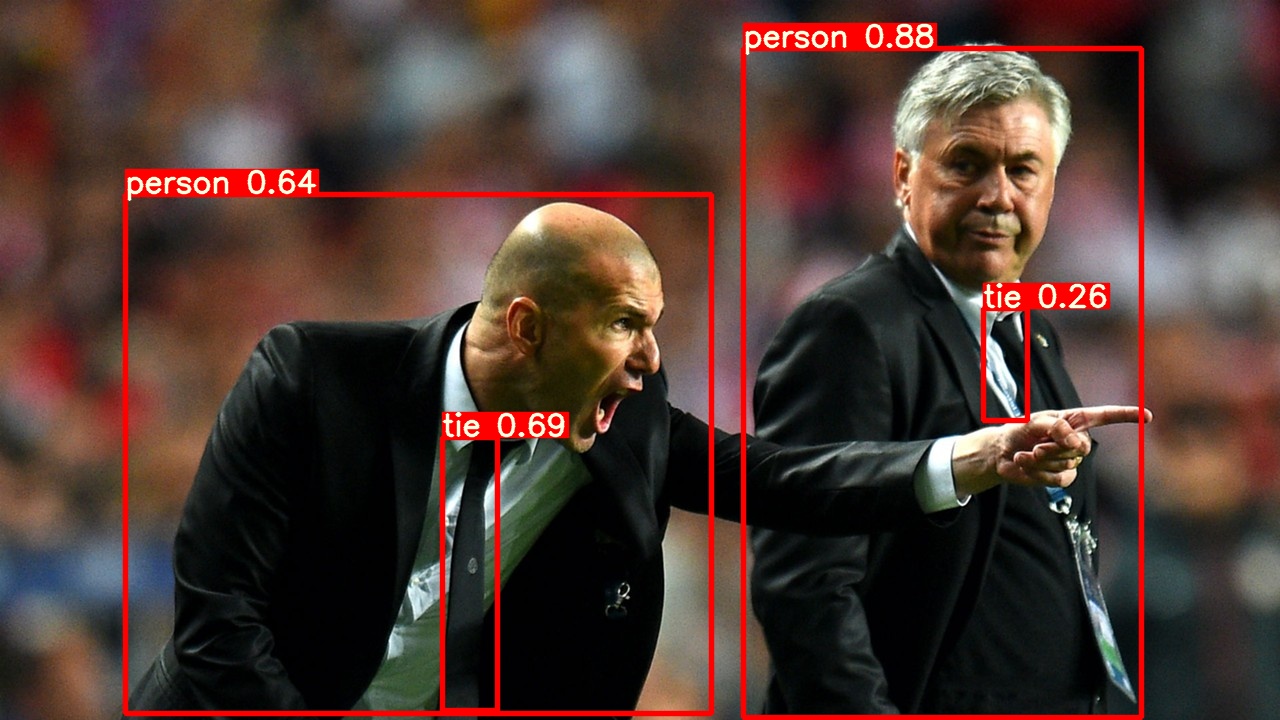

img_file = '/home/mi/workspace/projects/detection/yolov5/data/images/zidane.jpg'

img0, img = get_data(img_file)

data = {'img': img, 'params': {'conf_thres': 0.25, 'iou_thres': 0.45}}

data = pickle.dumps(data)

pred = infer(stub, "yolov5", data)

res = json.loads(pred)

res = np.array(res)

print(res.shape)

res = post_process(res, img0, img)

print(res)

names = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

'hair drier', 'toothbrush'] # class names

for x1, y1, x2, y2, conf, cls in reversed(res):

#label = '%s %.2f' % (names[int(cls)], conf)

label = names[int(cls)]

img0 = draw_img([x1, y1, x2, y2], img0, label='{} {:.2f}'.format(label, conf), color=(0,0,255))

cv2.imwrite('res_{}.jpg'.format(''), img0)

2、启动torchserve

torchserve --start --ncs --model-store ../detection/yolov5/ --models yolov5.mar --ts-config torch_serve/docker/config.properties3、测试结果

代码放在ts_scripts目录中,可以直接运行。

结果:

和pytorch模型结果做比较:

可以发现两者结果一样,如果不放心,可以比较二者输出的检测结果,我看了一下,大概小数点后5、6位有差异,对我们来说没有任何影响。

可以发现两者结果一样,如果不放心,可以比较二者输出的检测结果,我看了一下,大概小数点后5、6位有差异,对我们来说没有任何影响。

这里需要注意一点,就是图片resize的方式,对比时要保持一致,具体参考yolov5中的letterbox方法。

四、其他问题

在测试过程中可能遇到的一些问题

1、请求/响应超过最大值

io.grpc.StatusRuntimeException: RESOURCE_EXHAUSTED: gRPC message exceeds maximum size 6553500: 10838210

修改config.properties中的max_request_size,max_response_size,在启动服务时通过--ts-config传入。

inference_address=http://0.0.0.0:8080

management_address=http://0.0.0.0:8081

metrics_address=http://0.0.0.0:8082

number_of_netty_threads=32

job_queue_size=1000

model_store=/home/model-server/model-store

workflow_store=/home/model-server/wf-store

max_request_size=65535000

max_response_size=65535000

grpc_inference_port = 7070

grpc_management_port = 70712、received message larger than max (56680420 vs. 4194304)

修改grpc接收消息最大值:

options = [('grpc.max_receive_message_length', 200 * 1024 * 1024)]

channel = grpc.insecure_channel('localhost:7070', options=options)

stub = inference_pb2_grpc.InferenceAPIsServiceStub(channel)

3005

3005

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?