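Overview

TaskSetManager schedules all the tasks within a single TaskSet. It keeps track of each task, retries a task that fails (up to a limited number of times), and uses delay scheduling to perform locality-aware scheduling for its TaskSet. TaskSetManager has two main methods:

resourceOffer(): decides whether the TaskSet wants to run one of its tasks on a given node.

statusUpdate(): notifies the TaskSetManager that the state of one of its tasks has changed.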
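Delay scheduling, which resourceOffer() relies on, can be modelled in a few lines. The sketch below is a simplified, standalone model rather than Spark's code. It makes two assumptions. First, every locality level uses one fixed wait; Spark's default spark.locality.wait is 3s. Second, every level always has pending tasks; Spark also skips levels that have none. The names DelaySchedulingSketch, State, allowedLevel and onLaunch are hypothetical. They loosely mirror getAllowedLocalityLevel, currentLocalityIndex and lastLaunchTime.

```scala
// Simplified model of delay scheduling (a sketch, not Spark's implementation).
// Assumptions: one fixed wait for every level, and every level always has
// pending tasks (Spark also skips levels that have none).
object DelaySchedulingSketch {
  // Locality levels from most to least local (NO_PREF is left out here).
  val levels: Vector[String] = Vector("PROCESS_LOCAL", "NODE_LOCAL", "RACK_LOCAL", "ANY")

  final class State(waitMs: Long, startMs: Long) {
    var currentIndex: Int = 0          // plays the role of currentLocalityIndex
    var lastLaunchTime: Long = startMs // plays the role of lastLaunchTime

    // Relax the allowed level by one step for every full wait that has
    // elapsed without a launch at the current level.
    def allowedLevel(nowMs: Long): String = {
      while (currentIndex < levels.size - 1 && nowMs - lastLaunchTime >= waitMs) {
        lastLaunchTime += waitMs
        currentIndex += 1
      }
      levels(currentIndex)
    }

    // A launch resets the timer and moves back to the level it was launched at.
    def onLaunch(level: String, nowMs: Long): Unit = {
      require(levels.contains(level), s"unknown level $level")
      currentIndex = levels.indexOf(level)
      lastLaunchTime = nowMs
    }
  }
}
```

Each step adds exactly one wait to lastLaunchTime instead of resetting it to the current time. As a result, a long idle gap can relax several levels in a single call.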

Adding all tasks to the pending lists

Related member variables

```scala
// Set of pending tasks for each executor. These collections are actually
// treated as stacks, in which new tasks are added to the end of the
// ArrayBuffer and removed from the end. This makes it faster to detect
// tasks that repeatedly fail because whenever a task failed, it is put
// back at the head of the stack. These collections may contain duplicates
// for two reasons:
// (1): Tasks are only removed lazily; when a task is launched, it remains
// in all the pending lists except the one that it was launched from.
// (2): Tasks may be re-added to these lists multiple times as a result
// of failures.
// Duplicates are handled in dequeueTaskFromList, which ensures that a
// task hasn't already started running before launching it.
private val pendingTasksForExecutor = new HashMap[String, ArrayBuffer[Int]]

// Set of pending tasks for each host. Similar to pendingTasksForExecutor,
// but at host level.
private val pendingTasksForHost = new HashMap[String, ArrayBuffer[Int]]

// Set of pending tasks for each rack -- similar to the above.
private val pendingTasksForRack = new HashMap[String, ArrayBuffer[Int]]

// Set containing pending tasks with no locality preferences.
private[scheduler] var pendingTasksWithNoPrefs = new ArrayBuffer[Int]

// Set containing all pending tasks (also used as a stack, as above).
private val allPendingTasks = new ArrayBuffer[Int]

// Tasks that can be speculated. Since these will be a small fraction of total
// tasks, we'll just hold them in a HashSet.
private[scheduler] val speculatableTasks = new HashSet[Int]
```

TaskSetManager adds all tasks to the pending lists during initialization

```scala
// Add all our tasks to the pending lists. We do this in reverse order
// of task index so that tasks with low indices get launched first.
for (i <- (0 until numTasks).reverse) {
  addPendingTask(i)
}
```

Adding a task to the matching pending lists based on its preferred locations

```scala
/** Add a task to all the pending-task lists that it should be on. */
private[spark] def addPendingTask(index: Int) {
  for (loc <- tasks(index).preferredLocations) {
    loc match {
      // ExecutorCacheTaskLocation: add the task to pendingTasksForExecutor
      case e: ExecutorCacheTaskLocation =>
        pendingTasksForExecutor.getOrElseUpdate(e.executorId, new ArrayBuffer) += index
      // HDFSCacheTaskLocation: add the task to pendingTasksForExecutor
      // for every executor currently alive on that host
      case e: HDFSCacheTaskLocation =>
        val exe = sched.getExecutorsAliveOnHost(loc.host)
        exe match {
          case Some(set) =>
            for (e <- set) {
              pendingTasksForExecutor.getOrElseUpdate(e, new ArrayBuffer) += index
            }
            logInfo(s"Pending task $index has a cached location at ${e.host} " +
              ", where there are executors " + set.mkString(","))
          case None => logDebug(s"Pending task $index has a cached location at ${e.host} " +
              ", but there are no executors alive there.")
        }
      case _ =>
    }
    // Whatever the location type, record the task under the location's host
    pendingTasksForHost.getOrElseUpdate(loc.host, new ArrayBuffer) += index
    // Whatever the location type, also record it under that host's rack (if known)
    for (rack <- sched.getRackForHost(loc.host)) {
      pendingTasksForRack.getOrElseUpdate(rack, new ArrayBuffer) += index
    }
  }

  // A task with no preferred locations goes into pendingTasksWithNoPrefs
  if (tasks(index).preferredLocations == Nil) {
    pendingTasksWithNoPrefs += index
  }

  // Every task, whatever its preferences, goes into allPendingTasks
  allPendingTasks += index // No point scanning this whole list to find the old task there
}
```

TaskLocation

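Overview

TaskLocation indicates where a task should run. A location is either a host or a (host, executorId) pair. In the latter case, the task is preferably launched on that executorId. If that executor does not exist, the scheduler falls back to other executors on the same host.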

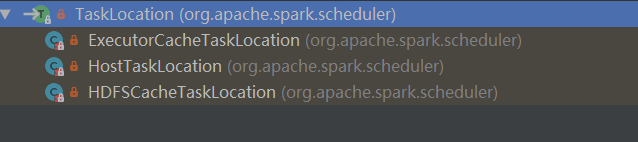

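TaskLocation class hierarchy

ExecutorCacheTaskLocation: the preferred location is a (host, executorId) pair.

HostTaskLocation: the preferred location is a host.

HDFSCacheTaskLocation: the preferred location is a host on which the data is cached by HDFS.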
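The following Scala code is a standalone, simplified mirror of this hierarchy. It is not Spark's source: the real classes are private[spark] and carry more members, such as their toString formats. The helper candidateExecutors and its aliveExecutors map are hypothetical. They only illustrate the rule stated above: use the preferred executor if it is alive, otherwise use any executor on the same host.

```scala
// Standalone, simplified mirror of the TaskLocation hierarchy (not Spark's source).
object TaskLocationSketch {
  sealed trait TaskLocation { def host: String }

  // Preferred location is a (host, executorId) pair.
  final case class ExecutorCacheTaskLocation(host: String, executorId: String) extends TaskLocation
  // Preferred location is just a host.
  final case class HostTaskLocation(host: String) extends TaskLocation
  // Preferred location is a host on which HDFS caches the data.
  final case class HDFSCacheTaskLocation(host: String) extends TaskLocation

  // Hypothetical helper: which executors could run a task with this preference?
  // aliveExecutors maps each host to the executor ids currently alive on it.
  def candidateExecutors(loc: TaskLocation, aliveExecutors: Map[String, Seq[String]]): Seq[String] = {
    val onHost = aliveExecutors.getOrElse(loc.host, Seq.empty)
    loc match {
      // The preferred executor is alive: use exactly that one.
      case ExecutorCacheTaskLocation(_, execId) if onHost.contains(execId) => Seq(execId)
      // Otherwise (or for host-only preferences) fall back to any executor on the host.
      case _ => onHost
    }
  }
}
```

Because the trait is sealed, a match over TaskLocation values is checked for exhaustiveness. This makes it easy to see that addPendingTask treats HostTaskLocation through its catch-all case.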

Taking a task from the pending lists

The dequeueTask() method

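Given an (execId, host) pair, dequeueTask() takes one pending task from the pending lists. It returns the task's index, its locality level, and whether the task is speculative.

The pending lists are searched in this order: pendingTasksForExecutor, pendingTasksForHost, pendingTasksWithNoPrefs, pendingTasksForRack, and finally allPendingTasks. Each list after the executor list is searched only if maxLocality allows its level. If none of them yields a task, dequeueSpeculativeTask() is tried last.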
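A small standalone model illustrates the search order. This is a sketch, not Spark's implementation. Locality levels are plain strings ordered like TaskLocality (PROCESS_LOCAL < NODE_LOCAL < NO_PREF < RACK_LOCAL < ANY), and blacklisting and speculative tasks are ignored. A hypothetical launched set stands in for copiesRunning/successful and marks a task as taken once it is dequeued. As in the quoted code, a hit in the no-preference list is reported as PROCESS_LOCAL.

```scala
import scala.collection.mutable

// Simplified model of dequeueTask's search order (a sketch, not Spark's code).
// Blacklisting and speculative tasks are ignored.
object DequeueOrderSketch {
  // Ordered like TaskLocality: a smaller index means "more local".
  val order: Vector[String] = Vector("PROCESS_LOCAL", "NODE_LOCAL", "NO_PREF", "RACK_LOCAL", "ANY")

  // Same meaning as TaskLocality.isAllowed(constraint, condition).
  def isAllowed(constraint: String, condition: String): Boolean =
    order.indexOf(condition) <= order.indexOf(constraint)

  final class PendingLists {
    val forExecutor = mutable.ArrayBuffer.empty[Int] // the offering executor's list
    val forHost = mutable.ArrayBuffer.empty[Int]     // the offering host's list
    val noPrefs = mutable.ArrayBuffer.empty[Int]
    val forRack = mutable.ArrayBuffer.empty[Int]     // the offering host's rack's list
    val all = mutable.ArrayBuffer.empty[Int]
    val launched = mutable.Set.empty[Int]            // hypothetical stand-in for copiesRunning/successful

    // Pop from the tail, lazily dropping entries that were already launched.
    private def pop(list: mutable.ArrayBuffer[Int]): Option[Int] = {
      while (list.nonEmpty) {
        val index = list.remove(list.size - 1)
        if (!launched(index)) {
          launched += index
          return Some(index)
        }
      }
      None
    }

    def dequeue(maxLocality: String): Option[(Int, String)] = {
      // (level that gates the list, the list, locality reported on a hit)
      val steps = Seq(
        ("PROCESS_LOCAL", forExecutor, "PROCESS_LOCAL"),
        ("NODE_LOCAL", forHost, "NODE_LOCAL"),
        ("NO_PREF", noPrefs, "PROCESS_LOCAL"), // no-pref hits are reported as PROCESS_LOCAL
        ("RACK_LOCAL", forRack, "RACK_LOCAL"),
        ("ANY", all, "ANY"))
      // The iterator is lazy, so lists after the first hit are never touched.
      steps.iterator
        .filter { case (gate, _, _) => isAllowed(maxLocality, gate) }
        .map { case (_, list, reported) => pop(list).map(index => (index, reported)) }
        .collectFirst { case Some(hit) => hit }
    }
  }
}
```

Placing the no-preference list between NODE_LOCAL and RACK_LOCAL matches the comment in the source: no-pref tasks are tried before going off-node to the rack, to reduce cross-rack traffic.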

```scala
/**
 * Dequeue a pending task for a given node and return its index and locality level.
 * Only search for tasks matching the given locality constraint.
 *
 * @return An option containing (task index within the task set, locality, is speculative?)
 */
private def dequeueTask(execId: String, host: String, maxLocality: TaskLocality.Value)
  : Option[(Int, TaskLocality.Value, Boolean)] =
{
  for (index <- dequeueTaskFromList(execId, host, getPendingTasksForExecutor(execId))) {
    return Some((index, TaskLocality.PROCESS_LOCAL, false))
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.NODE_LOCAL)) {
    for (index <- dequeueTaskFromList(execId, host, getPendingTasksForHost(host))) {
      return Some((index, TaskLocality.NODE_LOCAL, false))
    }
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.NO_PREF)) {
    // Look for noPref tasks after NODE_LOCAL for minimize cross-rack traffic
    for (index <- dequeueTaskFromList(execId, host, pendingTasksWithNoPrefs)) {
      return Some((index, TaskLocality.PROCESS_LOCAL, false))
    }
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.RACK_LOCAL)) {
    for {
      rack <- sched.getRackForHost(host)
      index <- dequeueTaskFromList(execId, host, getPendingTasksForRack(rack))
    } {
      return Some((index, TaskLocality.RACK_LOCAL, false))
    }
  }

  if (TaskLocality.isAllowed(maxLocality, TaskLocality.ANY)) {
    for (index <- dequeueTaskFromList(execId, host, allPendingTasks)) {
      return Some((index, TaskLocality.ANY, false))
    }
  }

  // find a speculative task if all others tasks have been scheduled
  dequeueSpeculativeTask(execId, host, maxLocality).map {
    case (taskIndex, allowedLocality) => (taskIndex, allowedLocality, true)}
}
```

The dequeueTaskFromList() method

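Given an (execId, host) pair, this method takes a task from the given pending list and returns its index. The list is scanned from the tail. A task blacklisted on this executor or node is skipped but left in the list. Any other entry is removed, and it is returned only if no copy of the task is running and the task has not already succeeded.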
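The following standalone sketch reproduces the scan on a single list, so the lazy clean-up and the reverse-order initialization are easy to try. It is a simplification, not Spark's code. The predicates blacklisted, running and succeeded are hypothetical stand-ins for isTaskBlacklistedOnExecOrNode, copiesRunning(index) > 0 and successful(index). The function initialList mimics the reverse-order loop that TaskSetManager runs during initialization.

```scala
import scala.collection.mutable.ArrayBuffer

// Minimal sketch of dequeueTaskFromList on a single list (not Spark's code).
object DequeueFromListSketch {
  def dequeueFromList(
      list: ArrayBuffer[Int],
      blacklisted: Int => Boolean, // stand-in for isTaskBlacklistedOnExecOrNode
      running: Int => Boolean,     // stand-in for copiesRunning(index) > 0
      succeeded: Int => Boolean    // stand-in for successful(index)
  ): Option[Int] = {
    var offset = list.size
    while (offset > 0) {
      offset -= 1
      val index = list(offset)
      if (!blacklisted(index)) {
        list.remove(offset) // blacklisted entries stay for other executors
        if (!running(index) && !succeeded(index)) return Some(index)
        // otherwise the stale entry has just been dropped (lazy clean-up)
      }
    }
    None
  }

  // Push indices in reverse so that popping from the tail yields index 0 first.
  def initialList(numTasks: Int): ArrayBuffer[Int] = {
    val list = ArrayBuffer.empty[Int]
    for (i <- (0 until numTasks).reverse) list += i
    list
  }
}
```

Because indices are pushed in reverse, initialList(4) holds [3, 2, 1, 0], and the first call returns task 0. A task that is blacklisted on the offering executor stays in the list, so a later offer from a different executor can still pick it up.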

```scala
/**
 * Dequeue a pending task from the given list and return its index.
 * Return None if the list is empty.
 * This method also cleans up any tasks in the list that have already
 * been launched, since we want that to happen lazily.
 */
private def dequeueTaskFromList(
    execId: String,
    host: String,
    list: ArrayBuffer[Int]): Option[Int] = {
  var indexOffset = list.size
  while (indexOffset > 0) {
    indexOffset -= 1
    val index = list(indexOffset)
    if (!isTaskBlacklistedOnExecOrNode(index, execId, host)) {
      // This should almost always be list.trimEnd(1) to remove tail
      list.remove(indexOffset)
      if (copiesRunning(index) == 0 && !successful(index)) {
        return Some(index)
      }
    }
  }
  None
}
```

The resourceOffer() method

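Given a resource offer for (execId, host), this method picks a task from the pending lists via dequeueTask() and returns a TaskDescription for it. It returns None in three cases: the TaskSet is a zombie, the offered node or executor is blacklisted for this TaskSet, or no suitable task is found.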
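The way resourceOffer() chooses the level it searches at can be isolated in a few lines. This is a simplified sketch, not Spark's code. Levels are strings ordered like TaskLocality, and the delayLevel parameter is a hypothetical stand-in for the result of getAllowedLocalityLevel(curTime).

```scala
// Simplified model of the locality decision at the top of resourceOffer
// (a sketch, not Spark's code). Levels are strings ordered like TaskLocality.
object OfferLocalitySketch {
  val order: Vector[String] = Vector("PROCESS_LOCAL", "NODE_LOCAL", "NO_PREF", "RACK_LOCAL", "ANY")

  // delayLevel is a hypothetical stand-in for getAllowedLocalityLevel(curTime).
  def allowedLocality(maxLocality: String, delayLevel: String): String =
    if (maxLocality == "NO_PREF") maxLocality // NO_PREF offers skip delay scheduling
    else if (order.indexOf(delayLevel) > order.indexOf(maxLocality)) maxLocality // never search farther than the offer allows
    else delayLevel

  // Only offers made with a real maxLocality move currentLocalityIndex/lastLaunchTime.
  def updatesDelayState(maxLocality: String): Boolean = maxLocality != "NO_PREF"
}
```

An offer made with NO_PREF bypasses delay scheduling entirely. It also leaves currentLocalityIndex and lastLaunchTime untouched, which is why the code checks maxLocality != TaskLocality.NO_PREF in two places.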

```scala
/**
 * Respond to an offer of a single executor from the scheduler by finding a task
 *
 * NOTE: this function is either called with a maxLocality which
 * would be adjusted by delay scheduling algorithm or it will be with a special
 * NO_PREF locality which will be not modified
 *
 * @param execId the executor Id of the offered resource
 * @param host  the host Id of the offered resource
 * @param maxLocality the maximum locality we want to schedule the tasks at
 */
@throws[TaskNotSerializableException]
def resourceOffer(
    execId: String,
    host: String,
    maxLocality: TaskLocality.TaskLocality)
  : Option[TaskDescription] =
{
  val offerBlacklisted = taskSetBlacklistHelperOpt.exists { blacklist =>
    blacklist.isNodeBlacklistedForTaskSet(host) ||
      blacklist.isExecutorBlacklistedForTaskSet(execId)
  }
  if (!isZombie && !offerBlacklisted) {
    val curTime = clock.getTimeMillis()

    // Work out the maximum locality level we are allowed to search at
    var allowedLocality = maxLocality

    if (maxLocality != TaskLocality.NO_PREF) {
      allowedLocality = getAllowedLocalityLevel(curTime)
      if (allowedLocality > maxLocality) {
        // We're not allowed to search for farther-away tasks
        allowedLocality = maxLocality
      }
    }

    // dequeueTask() takes a task from the pending lists for this (execId, host)
    // within allowedLocality and returns its index and taskLocality
    dequeueTask(execId, host, allowedLocality).map { case ((index, taskLocality, speculative)) =>
      // Found a task; do some bookkeeping and return a task description
      // Look up the Task by its index
      val task = tasks(index)
      val taskId = sched.newTaskId()
      // Do various bookkeeping
      copiesRunning(index) += 1
      val attemptNum = taskAttempts(index).size
      // Wrap this attempt's metadata in a TaskInfo
      val info = new TaskInfo(taskId, index, attemptNum, curTime,
        execId, host, taskLocality, speculative)
      taskInfos(taskId) = info
      taskAttempts(index) = info :: taskAttempts(index)
      // Update our locality level for delay scheduling
      // NO_PREF will not affect the variables related to delay scheduling
      if (maxLocality != TaskLocality.NO_PREF) {
        currentLocalityIndex = getLocalityIndex(taskLocality)
        lastLaunchTime = curTime
      }
      // Serialize and return the task
      val serializedTask: ByteBuffer = try {
        ser.serialize(task)
      } catch {
        // If the task cannot be serialized, then there's no point to re-attempt the task,
        // as it will always fail. So just abort the whole task-set.
        case NonFatal(e) =>
          val msg = s"Failed to serialize task $taskId, not attempting to retry it."
          logError(msg, e)
          abort(s"$msg Exception during serialization: $e")
          throw new TaskNotSerializableException(e)
      }
      if (serializedTask.limit() > TaskSetManager.TASK_SIZE_TO_WARN_KB * 1024 &&
        !emittedTaskSizeWarning) {
        emittedTaskSizeWarning = true
        logWarning(s"Stage ${task.stageId} contains a task of very large size " +
          s"(${serializedTask.limit() / 1024} KB). The maximum recommended task size is " +
          s"${TaskSetManager.TASK_SIZE_TO_WARN_KB} KB.")
      }
      // Record the task in the set of running tasks
      addRunningTask(taskId)

      // We used to log the time it takes to serialize the task, but task size is already
      // a good proxy to task serialization time.
      // val timeTaken = clock.getTime() - startTime
      // Log that the task is starting
      val taskName = s"task ${info.id} in stage ${taskSet.id}"
      logInfo(s"Starting $taskName (TID $taskId, $host, executor ${info.executorId}, " +
        s"partition ${task.partitionId}, $taskLocality, ${serializedTask.limit()} bytes)")

      sched.dagScheduler.taskStarted(task, info)
      // Return the TaskDescription
      new TaskDescription(
        taskId,
        attemptNum,
        execId,
        taskName,
        index,
        task.partitionId,
        addedFiles,
        addedJars,
        task.localProperties,
        serializedTask)
    }
  } else {
    None
  }
}
```

Running a Spark job prints task-start log lines like the following. These lines lack the executor field that the code above prints, which suggests they were produced by an older Spark version:

```
2019-06-29 15:32:35 [ INFO] [dispatcher-event-loop-0] spark.scheduler.TaskSetManager:58 Starting task 0.0 in stage 0.0 (TID 0, hadoop16, partition 0,RACK_LOCAL, 90674 bytes)
2019-06-29 15:32:35 [ INFO] [dispatcher-event-loop-0] spark.scheduler.TaskSetManager:58 Starting task 1.0 in stage 0.0 (TID 1, hadoop2, partition 1,RACK_LOCAL, 90674 bytes)
2019-06-29 15:32:35 [ INFO] [dispatcher-event-loop-0] spark.scheduler.TaskSetManager:58 Starting task 2.0 in stage 0.0 (TID 2, hadoop11, partition 2,RACK_LOCAL, 90674 bytes)
```

How TaskSetManager handles a successfully completed task

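The method marks the task as successful and notifies the DAGScheduler that the task has ended. There is one exception. If another attempt of the same task has already succeeded and this attempt was killed because of it, this attempt's result is discarded and it is handled as a killed task. Otherwise, any other attempts of the task that are still running are killed.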
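The bookkeeping can be condensed into a standalone model. It is a simplified sketch rather than Spark's code. It omits result-size accounting, speculation statistics, logging, partition-completion propagation and the DAGScheduler callback. The killTask parameter is a hypothetical callback standing in for sched.backend.killTask, and the returned strings are illustrative labels only.

```scala
import scala.collection.mutable

// Simplified, standalone model of handleSuccessfulTask's bookkeeping (not Spark's code).
// killTask is a hypothetical callback standing in for sched.backend.killTask.
object SuccessSketch {
  final class Attempt(val tid: Long, var running: Boolean)

  final class TaskSetState(numTasks: Int, killTask: Long => Unit) {
    val successful: Array[Boolean] = Array.fill(numTasks)(false)
    val attempts = mutable.Map.empty[Int, List[Attempt]] // task index -> its attempts
    val killedByOtherAttempt = mutable.Set.empty[Long]
    var tasksSuccessful = 0
    var isZombie = false

    def launch(tid: Long, index: Int): Unit =
      attempts(index) = new Attempt(tid, running = true) :: attempts.getOrElse(index, Nil)

    def handleSuccess(tid: Long, index: Int): String = {
      // A late success from an attempt we already killed is treated as killed.
      if (successful(index) && killedByOtherAttempt.contains(tid)) return "KILLED"
      val mine = attempts.getOrElse(index, Nil)
      mine.filter(_.tid == tid).foreach(a => a.running = false) // like markFinished
      // Kill every other attempt of this task that is still running.
      for (other <- mine if other.running) {
        killedByOtherAttempt += other.tid
        killTask(other.tid)
      }
      if (!successful(index)) {
        successful(index) = true
        tasksSuccessful += 1
        if (tasksSuccessful == numTasks) isZombie = true // nothing left to launch
        "FINISHED"
      } else "IGNORED" // this task already succeeded via another attempt
    }
  }
}
```

Recording killed attempts in killedByOtherAttempt is what lets a late success from a speculative copy be recognized and handled as a kill, rather than being counted twice.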

```scala
/**
 * Marks a task as successful and notifies the DAGScheduler that the task has ended.
 */
def handleSuccessfulTask(tid: Long, result: DirectTaskResult[_]): Unit = {
  val info = taskInfos(tid)
  val index = info.index
  // Check if any other attempt succeeded before this and this attempt has not been handled
  if (successful(index) && killedByOtherAttempt.contains(tid)) {
    // Undo the effect on calculatedTasks and totalResultSize made earlier when
    // checking if can fetch more results
    calculatedTasks -= 1
    val resultSizeAcc = result.accumUpdates.find(a =>
      a.name == Some(InternalAccumulator.RESULT_SIZE))
    if (resultSizeAcc.isDefined) {
      totalResultSize -= resultSizeAcc.get.asInstanceOf[LongAccumulator].value
    }

    // Handle this task as a killed task
    handleFailedTask(tid, TaskState.KILLED,
      TaskKilled("Finish but did not commit due to another attempt succeeded"))
    return
  }

  info.markFinished(TaskState.FINISHED, clock.getTimeMillis())
  if (speculationEnabled) {
    successfulTaskDurations.insert(info.duration)
  }
  removeRunningTask(tid)

  // Kill any other attempts for the same task (since those are unnecessary now that one
  // attempt completed successfully).
  for (attemptInfo <- taskAttempts(index) if attemptInfo.running) {
    logInfo(s"Killing attempt ${attemptInfo.attemptNumber} for task ${attemptInfo.id} " +
      s"in stage ${taskSet.id} (TID ${attemptInfo.taskId}) on ${attemptInfo.host} " +
      s"as the attempt ${info.attemptNumber} succeeded on ${info.host}")
    killedByOtherAttempt += attemptInfo.taskId
    sched.backend.killTask(
      attemptInfo.taskId,
      attemptInfo.executorId,
      interruptThread = true,
      reason = "another attempt succeeded")
  }
  if (!successful(index)) {
    tasksSuccessful += 1
    logInfo(s"Finished task ${info.id} in stage ${taskSet.id} (TID ${info.taskId}) in" +
      s" ${info.duration} ms on ${info.host} (executor ${info.executorId})" +
      s" ($tasksSuccessful/$numTasks)")
    // Mark successful and stop if all the tasks have succeeded.
    successful(index) = true
    if (tasksSuccessful == numTasks) {
      isZombie = true
    }
  } else {
    logInfo("Ignoring task-finished event for " + info.id + " in stage " + taskSet.id +
      " because task " + index + " has already completed successfully")
  }
  // There may be multiple tasksets for this stage -- we let all of them know that the partition
  // was completed. This may result in some of the tasksets getting completed.
  sched.markPartitionCompletedInAllTaskSets(stageId, tasks(index).partitionId, info)
  // This method is called by "TaskSchedulerImpl.handleSuccessfulTask" which holds the
  // "TaskSchedulerImpl" lock until exiting. To avoid the SPARK-7655 issue, we should not
  // "deserialize" the value when holding a lock to avoid blocking other threads. So we call
  // "result.value()" in "TaskResultGetter.enqueueSuccessfulTask" before reaching here.
  // Note: "result.value()" only deserializes the value when it's called at the first time, so
  // here "result.value()" just returns the value and won't block other threads.
  sched.dagScheduler.taskEnded(tasks(index), Success, result.value(), result.accumUpdates, info)
  maybeFinishTaskSet()
}
```

Running a Spark job prints typical task-finished log lines like the following:

```
2019-06-29 15:33:10 [ INFO] [task-result-getter-0] spark.scheduler.TaskSetManager:58 Finished task 29.0 in stage 0.0 (TID 29) in 34821 ms on hadoop20 (1/320)
2019-06-29 15:33:10 [ INFO] [task-result-getter-1] spark.scheduler.TaskSetManager:58 Finished task 21.0 in stage 0.0 (TID 21) in 34837 ms on hadoop20 (2/320)
2019-06-29 15:33:10 [ INFO] [task-result-getter-2] spark.scheduler.TaskSetManager:58 Finished task 13.0 in stage 0.0 (TID 13) in 34860 ms on hadoop20 (3/320)
```
