Machine Learning(7)Neural network —— Perceptrons

Chenjing Ding

2018/02/21

| notation | meaning |

|---|---|

| g(x) | activate function |

| xn x n | the n-th input vector (simplified as xi x i when n is not specified) |

| xni x n i | the i-th entry of xn x n (simplified as xi x i when n is not specified) |

| N | the number of input vectors |

| K | the number of classes |

| tn t n | a vector with K dimensional with k-th entry 1 only when the n-th input vector belongs to k-th class, tn = (0,0,…1…0) |

| yj(x) y j ( x ) | the output of j-th output neural |

| y(x) y ( x ) | a output vector of input vector x; y(x)=(y1(x)...yK(x)) y ( x ) = ( y 1 ( x ) . . . y K ( x ) ) |

| Wτ+1ji W j i τ + 1 | the ( τ+1 τ + 1 )-th update of weight Wji W j i |

| Wτji W j i τ | the τ τ -th update of weight Wji W j i |

| ∂E(W)∂W(m)ij ∂ E ( W ) ∂ W i j ( m ) | the gradient of m-th layer weight |

| li l i | the number of neural in i-th layer |

| W(mn)ji W j i ( m n ) | the weight between layer m and n |

1. two layers perceptron

1.1 construction

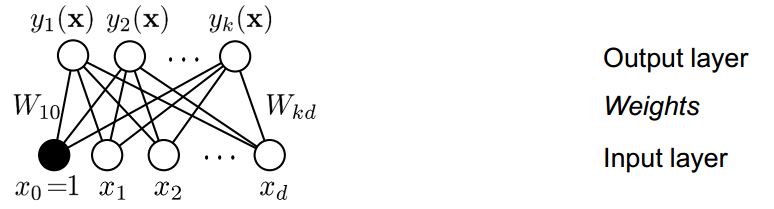

2 layers refers to output layer and input layer; the basic construction of 2 layers perceptron is as followed:

figure1 the construction of 2 layers perceptron

input layer:

d neural, d is the dimensional of an input vector x; The input layer can applied with non-linear basic functions

ϕ(x)

ϕ

(

x

)

.

Weights:

Wji

W

j

i

, j is the index of neural in output layer;i is the index of neural in input layer;

Output layer:

There are k classes, so there are k output functions. The output layer can applied with activate function g(x).

1.2 Learning: How to get Wji W j i

Gradient descent with sequential updating can be used to minimize the error function E(W) to adjust weights.

step1: set up an error function E(W);

if we use L2 loss,

step2: calculate ∂En(W)∂Wji ∂ E n ( W ) ∂ W j i ;

step3: sequential updating, η η is the learning rate;

Thus, perceptron learning corresponds to Gradient Descent of a quadratic error function.

- effor function more details:

- sequential updating and delta rule:

- Gradient descent

1.3 properties of 2 layers perceptron

it can only represent the linear function since

yj(x)=∑i=0dWji∗xi or ∑i=0dWji∗ϕ(xi) y j ( x ) = ∑ i = 0 d W j i ∗ x i o r ∑ i = 0 d W j i ∗ ϕ ( x i )the discriminant boundary is always linear in input space x or input space ϕ(x) ϕ ( x ) when input layer applied with ϕ(x) ϕ ( x ) , to be specific, the boundary can be a line, a plane and can not be a curve and so on. However, multi layers perceptron with hidden units can represent any continuous functions. ⇒ ⇒ 2. multi layers perceptronϕ(x) ϕ ( x ) and g(x) g ( x ) are given before; They are fixed functions.

There is always bias item in the linear discriminant function; (y = ax+b,b is the bias item and it has nothing to do with input x), thus the input layer always have d+1 input neural and the x0 x 0 is always 1, in a result y=ax1+bx0,x1=x,d=1 y = a x 1 + b x 0 , x 1 = x , d = 1 ;

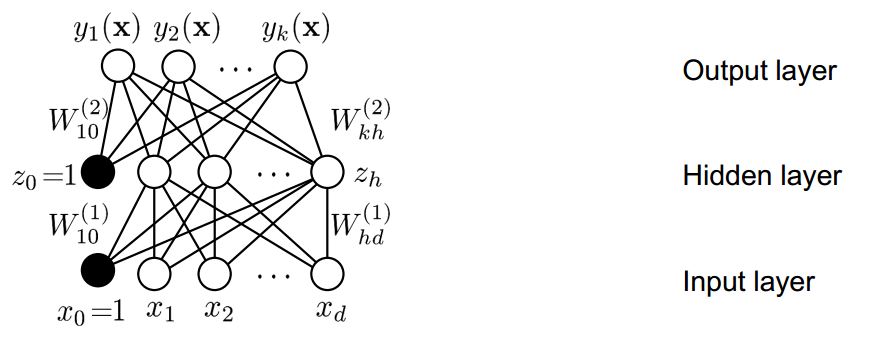

2 multi layers perceptron

There are some hidden layers between input layer and output layer.

For example, perceptron with one hidden layer as followed,

figure2 the construction of multi layers perceptron

output:

In 1.2 we know how to learn the weight of 2 layers perceptron. As the same way, for multi layers, we also need to find the error function and using Gradient Decent to update all weights, but computing the gradient is more complex. So here are 2 main steps:

step1: computing the gradient

⇒

⇒

2.1Backpropogation

step2: adjusting the weight in the direction of gradient, same as 1.2 step3, we well later focus on some optimization techniques to improve the performance

⇒

⇒

Machine Learning(7)Neural network–optimization techniques

2.1 Backpropagation

2.1.1 How to use backpropagation

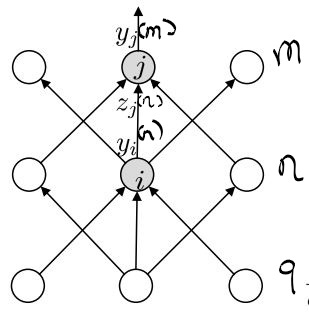

figure3 the construction of multi layers perceptron

if the id of layer is m, n and q form top to bottom, the number of neural in each layer is lm,ln l m , l n and lq l q ; between 2 layers, the above layer is always the output layer with index of neural j and similarly, the bottom layer is always the input layer with i;

Our goal is to obtain the gradient of

∂E(W)∂Wji(mn)

∂

E

(

W

)

∂

W

j

i

(

m

n

)

:

2.1.2 Why use backpropagation with reverse-mode differentiation

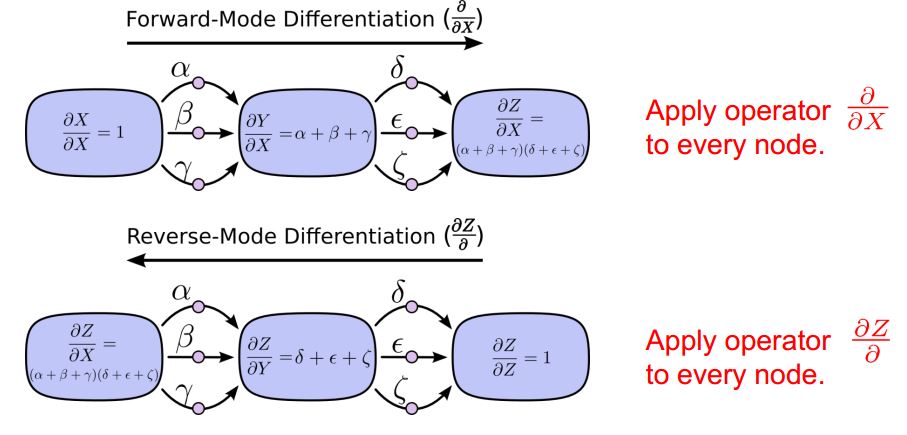

For all adjacent layers m and n, There are 2 ways to calculate ∂E(W)∂W(mn)ij ∂ E ( W ) ∂ W i j ( m n ) . To simplify, suppose we want to calculate ∂Z∂X ∂ Z ∂ X , one way is to apply operator ∂∂X ∂ ∂ X to every node, which is called Forward-Mode Differentiation. The other way is to apply operator ∂Z∂ ∂ Z ∂ called reverse-mode differentiation;

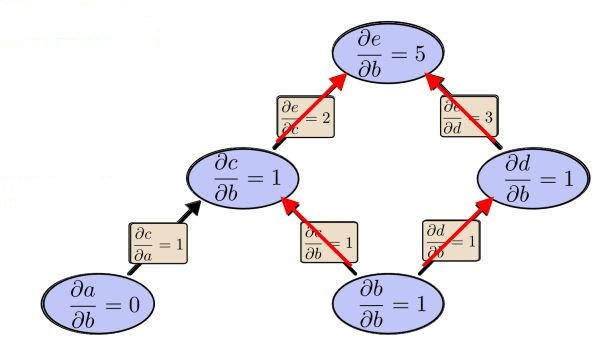

*figure4 computation graph* *1: Forward - Mode - Differentiate*

*figure4 computation graph* *1: Forward - Mode - Differentiate*

*figure5 Forward - Mode - Differentiate computation graph*

*figure5 Forward - Mode - Differentiate computation graph*

Forward-mode-differentiate apply operator

∂∂b

∂

∂

b

to every node, in our cases, the operator is

∂∂y(m)j

∂

∂

y

j

(

m

)

if the goal is to obtain

∂E(W)∂W(m m−1)jmi

∂

E

(

W

)

∂

W

j

m

i

(

m

m

−

1

)

;the id of first layer down is 0;

thus we need to visit every layer only to get ∂E(W)∂W(10)j1i ∂ E ( W ) ∂ W j 1 i ( 10 ) , when it comes to ∂E(W)∂W(10)j1i+1 ∂ E ( W ) ∂ W j 1 i + 1 ( 10 ) ,we need to visit every layer again!

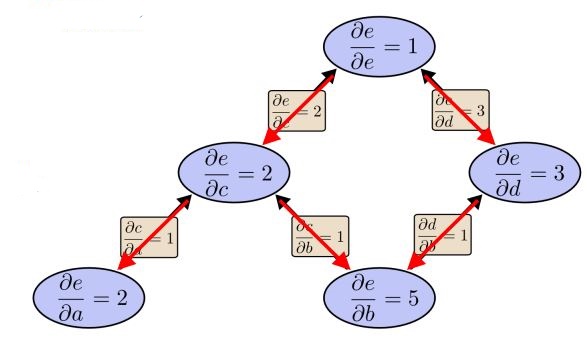

2: reverse-mode differentiation

figure6 Reverse-mode differentiation computation graph

From the graph above, only one pass we know

∂e∂

∂

e

∂

to all nodes. It is more efficient than Forward-mode-differentiate.

Reverse-mode differentiation apply

∂e∂

∂

e

∂

to every node, in our case, it is

∂E(W)∂

∂

E

(

W

)

∂

;That is to say,

∂E(W)∂y(q−1)jq−1,∂E(W)∂y(q−2)jq−2...∂E(W)∂y(1)j1

∂

E

(

W

)

∂

y

j

q

−

1

(

q

−

1

)

,

∂

E

(

W

)

∂

y

j

q

−

2

(

q

−

2

)

.

.

.

∂

E

(

W

)

∂

y

j

1

(

1

)

are calculated in order.

Then

∂E(W)∂W(q−1,q−2)jq−1iq−1,∂E(W)∂W(q−2,q−3)jq−2,iq−2...∂E(W)∂W(1,0)j1i1

∂

E

(

W

)

∂

W

j

q

−

1

i

q

−

1

(

q

−

1

,

q

−

2

)

,

∂

E

(

W

)

∂

W

j

q

−

2

,

i

q

−

2

(

q

−

2

,

q

−

3

)

.

.

.

∂

E

(

W

)

∂

W

j

1

i

1

(

1

,

0

)

are also obtained ; As mentioned above,

im

i

m

is the id of neural in m-th layer when this layer is input layer,

im

i

m

can be 0 to

lm

l

m

;

jm

j

m

is in similar way.

From all above, Reverse-mode differentiation can compute all derivatives in one single pass, that is why we use Back-propagation with reverse-mode differentiation;

Next topic will introduce some optimization techniques and how to implement these ideas with python.

316

316

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?