- 从网站中或得指定字段

import requests

import pandas as pd

from bs4 import BeautifulSoup

import time

from multiprocessing import Pool

class Downloader():

def __init__(self):

self.server = 'https://www.uniprot.org/uniprot/'

self.file = r"uniprots_input.csv"

#self.url = 'https://www.uniprot.org/uniprot/XXX#family_and_domains'

self.url = 'https://www.uniprot.org/uniprot/XXX'

#网站链接

self.uniprots = []

self.headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:71.0) Gecko/20100101 Firefox/71.0',

'Upgrade-Insecure-Requests': '1'}

self.outpath_txt = r"uniprots_output.csv"

def get_uni(self):

df = pd.read_csv(self.file)

# print(df)

# print("***************")

# print(df.columns)

self.uniprots.extend(df['Uniports'])

def get_contents(self, uniprot):

target = self.url.replace('XXX', uniprot)

s = requests.session()

rep = s.get(url=target, verify=False, headers=self.headers, timeout=30)

rep.raise_for_status()

soup = BeautifulSoup(rep.text, 'html.parser')

#families = soup.select('#family_and_domains > div.annotation > a')

families = soup.select('#content-gene')

fam = [i.get_text() for i in families]

if fam != []:

fam.extend([uniprot])

else:

families = soup.select('#content-gene')

fam = [i.get_text() for i in families]

fam.extend([uniprot])

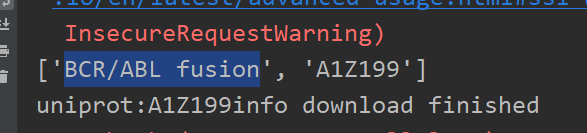

print(fam)

return fam

#写入文件

def write_txt(self, family):

with open(self.outpath_txt, 'a') as f:

f.write(str(family) + '\n')

def trans_csv(self):

df = pd.read_csv(self.file)

families = {}

with open(self.outpath_txt, 'r') as f:

text = f.readlines()

for f_u in text:

f_u = f_u.replace('\n', '').split(',')

families.update({f_u[len(f_u) - 1]: '|'.join(f_u[0:(len(f_u) - 1)])})

uni_left = [uni for uni in list(df['Uniports']) if uni not in families.keys()]

for u in uni_left:

families.update({u: None})

df['Family'] = df['Uniports'].map(lambda x: families[x])

df.to_csv(self.file)

if __name__ == "__main__":

dl = Downloader()

dl.get_uni()

pool = Pool(processes=8)

for uniprot in dl.uniprots:

try:

pool.apply_async(func=dl.write_txt, args=(dl.get_contents(uniprot),))

print("uniprot:" + uniprot + "info download finished")

time.sleep(0.5)

except:

print(uniprot + "download fail")

pool.close()

pool.join()

dl.trans_csv()

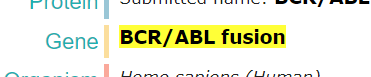

unpiortID-A1Z199

获得对应的基因号码

注解:python3.7

参考文献:https://blog.csdn.net/recher_He1107/article/details/103888869

3万+

3万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?