SwinTransfomer在发布后,赢得了广泛关注,本人也曾用该模型进行实验,发现该模型的确有较好的结果。这里主要结合论文和代码中的网络结构进行详细解析。

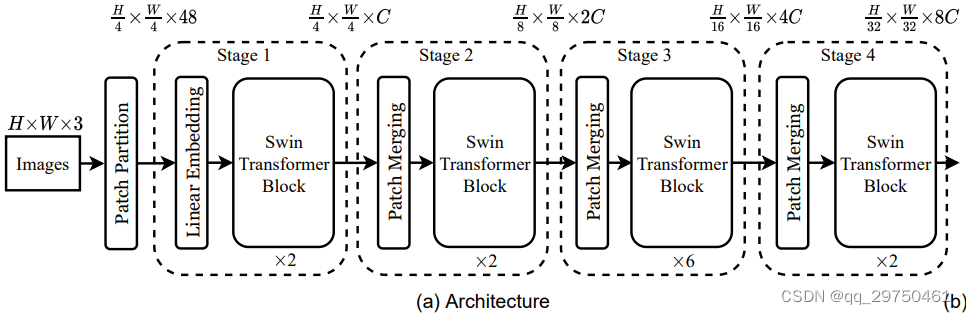

论文中的网络结构

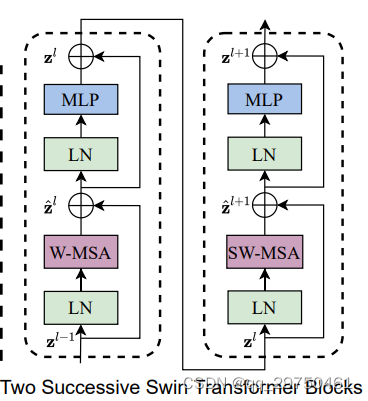

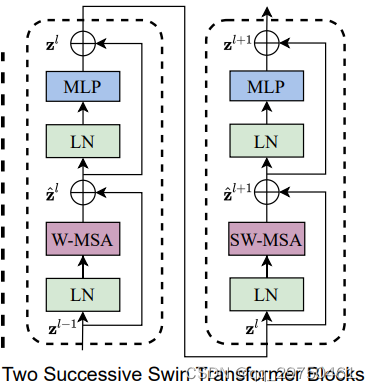

其中SwinTransformer Block的结构为,即为一个标准W-MSA和SW-MSA的级联:

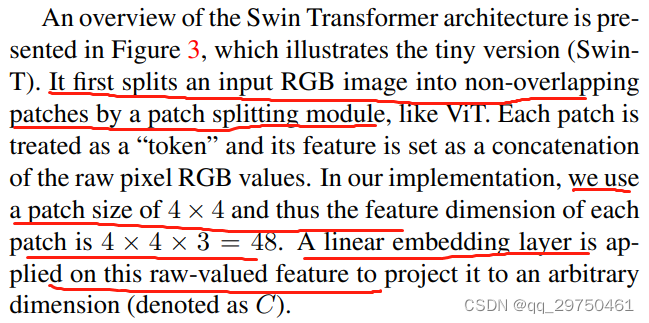

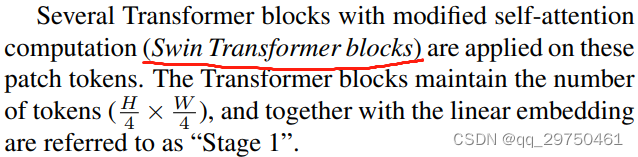

网络结构的细节

关于网络整体的细节,在文中的第三部分有非常详细的说明,这里先把文章中的相关内容截下,然后再结合代码详细展开叙述。

1 关于网络中的输入图像预处理部分,该部分对应的网络整体架构图如下面的截图。

所对应的代码如下:

class PatchEmbed(nn.Module):

r""" Image to Patch Embedding

Args:

img_size (int): Image size. Default: 224.

patch_size (int): Patch token size. Default: 4.

in_chans (int): Number of input image channels. Default: 3.

embed_dim (int): Number of linear projection output channels. Default: 96.

norm_layer (nn.Module, optional): Normalization layer. Default: None

"""

def __init__(self, img_size=224, patch_size=4, in_chans=3, embed_dim=96, norm_layer=None):

super().__init__()

img_size = to_2tuple(img_size)

patch_size = to_2tuple(patch_size)

patches_resolution = [img_size[0] // patch_size[0], img_size[1] // patch_size[1]]

self.img_size = img_size

self.patch_size = patch_size

self.patches_resolution = patches_resolution

self.num_patches = patches_resolution[0] * patches_resolution[1]

self.in_chans = in_chans

self.embed_dim = embed_dim

self.proj = nn.Conv2d(in_chans, embed_dim, kernel_size=patch_size, stride=patch_size)

if norm_layer is not None:

self.norm = norm_layer(embed_dim)

else:

self.norm = None

def forward(self, x):

B, C, H, W = x.shape

# FIXME look at relaxing size constraints

assert H == self.img_size[0] and W == self.img_size[1], \

f"Input image size ({H}*{W}) doesn't match model ({self.img_size[0]}*{self.img_size[1]})."

x = self.proj(x).flatten(2).transpose(1, 2) # B Ph*Pw C

if self.norm is not None:

x = self.norm(x)

return x

即代码中在输入端使用了 4 x 4 且步长为4的卷积,则假设原输入为224 x 224,经过此过程后,图像变为56 x 56的大小,此过程没有用非线性层,所以文中说的是将图像分为 4 x 4 的小图像块,然后进行线性变换,得到图像的嵌入特征向量。

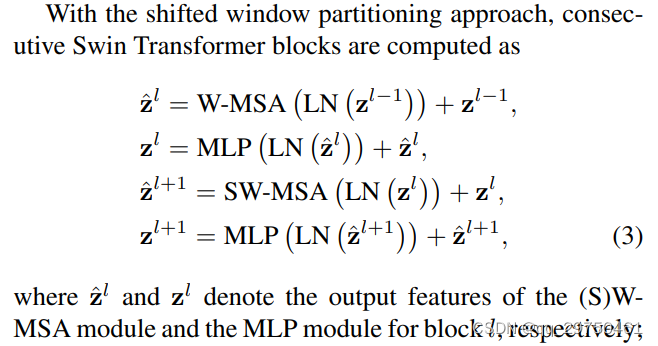

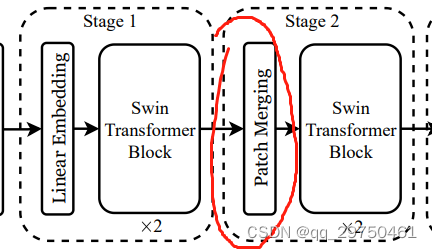

2 SwinTransformerBlock

论文中给出的 SwinTransformer模块,在图的标注部分,说的非常明确,这个是两个SwinTransformer Block的连接,其中不同点在于前一个阶段是 W-MSA模块,而后一个是 SW-MSA模块,则可以看出,SwinTransfomer Block包含了这两种模块,关于SwinBlock的说明,在后续的文章内容中也有说明。但是如果不细读文章或者细看代码,很可能会认为在单个SwinBlock中包含了这两个部分,其实不是的,这是两个级联的SwinBlock。

接下来结合代码来看一下:(BasicLayer方法中的构建SwinBlock的部分,大家可以看到,其实W-MSA和SW-MSA是交替出现的,具体通过shift_size这个变量进行选择)

# build blocks

self.blocks = nn.ModuleList([

SwinTransformerBlock(dim=dim, input_resolution=input_resolution,

num_heads=num_heads, window_size=window_size,

shift_size=0 if (i % 2 == 0) else window_size // 2,

mlp_ratio=mlp_ratio,

qkv_bias=qkv_bias, qk_scale=qk_scale,

drop=drop, attn_drop=attn_drop,

drop_path=drop_path[i] if isinstance(drop_path, list) else drop_path,

norm_layer=norm_layer,

fused_window_process=fused_window_process)

for i in range(depth)])

SwinTransformerBlock方法(这个应该是本论文中的重点部分之一了):

class SwinTransformerBlock(nn.Module):

r""" Swin Transformer Block.

Args:

dim (int): Number of input channels.

input_resolution (tuple[int]): Input resulotion.

num_heads (int): Number of attention heads.

window_size (int): Window size.

shift_size (int): Shift size for SW-MSA.

mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set.

drop (float, optional): Dropout rate. Default: 0.0

attn_drop (float, optional): Attention dropout rate. Default: 0.0

drop_path (float, optional): Stochastic depth rate. Default: 0.0

act_layer (nn.Module, optional): Activation layer. Default: nn.GELU

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

fused_window_process (bool, optional): If True, use one kernel to fused window shift & window partition for acceleration, similar for the reversed part. Default: False

"""

def __init__(self, dim, input_resolution, num_heads, window_size=7, shift_size=0,

mlp_ratio=4., qkv_bias=True, qk_scale=None, drop=0., attn_drop=0., drop_path=0.,

act_layer=nn.GELU, norm_layer=nn.LayerNorm,

fused_window_process=False):

super().__init__()

self.dim = dim

self.input_resolution = input_resolution

self.num_heads = num_heads

self.window_size = window_size

self.shift_size = shift_size

self.mlp_ratio = mlp_ratio

if min(self.input_resolution) <= self.window_size:

# if window size is larger than input resolution, we don't partition windows

self.shift_size = 0

self.window_size = min(self.input_resolution)

assert 0 <= self.shift_size < self.window_size, "shift_size must in 0-window_size"

self.norm1 = norm_layer(dim)

self.attn = WindowAttention(

dim, window_size=to_2tuple(self.window_size), num_heads=num_heads,

qkv_bias=qkv_bias, qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

self.norm2 = norm_layer(dim)

mlp_hidden_dim = int(dim * mlp_ratio)

self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, act_layer=act_layer, drop=drop)

if self.shift_size > 0:

# calculate attention mask for SW-MSA

H, W = self.input_resolution

img_mask = torch.zeros((1, H, W, 1)) # 1 H W 1

h_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

w_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

cnt = 0

for h in h_slices:

for w in w_slices:

#相当于将 img_mask分了9块

img_mask[:, h, w, :] = cnt

cnt += 1

#num_windows*1, window_size, window_size, 1

mask_windows = window_partition(img_mask, self.window_size) # nW, window_size, window_size, 1

mask_windows = mask_windows.view(-1, self.window_size * self.window_size)

#(num_windows,window_size*window_size)

# ->(num_windows,1,window_size*window_size)-(num_windows,window_size*window_size,1)

#->(num_windows,window_size*window_size,window_size*window_size)

attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)

attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

else:

attn_mask = None

self.register_buffer("attn_mask", attn_mask)

self.fused_window_process = fused_window_process

def forward(self, x):

H, W = self.input_resolution

B, L, C = x.shape

assert L == H * W, "input feature has wrong size"

shortcut = x

x = self.norm1(x)

x = x.view(B, H, W, C)

# cyclic shift

if self.shift_size > 0:

if not self.fused_window_process:

shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))

# partition windows

x_windows = window_partition(shifted_x, self.window_size) # nW*B, window_size, window_size, C

else:

x_windows = WindowProcess.apply(x, B, H, W, C, -self.shift_size, self.window_size)

else:

shifted_x = x

# partition windows

x_windows = window_partition(shifted_x, self.window_size) # nW*B, window_size, window_size, C

x_windows = x_windows.view(-1, self.window_size * self.window_size, C) # nW*B, window_size*window_size, C

# W-MSA/SW-MSA

attn_windows = self.attn(x_windows, mask=self.attn_mask) # nW*B, window_size*window_size, C

# merge windows

attn_windows = attn_windows.view(-1, self.window_size, self.window_size, C)

# reverse cyclic shift

if self.shift_size > 0:

if not self.fused_window_process:

shifted_x = window_reverse(attn_windows, self.window_size, H, W) # B H' W' C

x = torch.roll(shifted_x, shifts=(self.shift_size, self.shift_size), dims=(1, 2))

else:

x = WindowProcessReverse.apply(attn_windows, B, H, W, C, self.shift_size, self.window_size)

else:

shifted_x = window_reverse(attn_windows, self.window_size, H, W) # B H' W' C

x = shifted_x

x = x.view(B, H * W, C)

x = shortcut + self.drop_path(x)

# FFN

x = x + self.drop_path(self.mlp(self.norm2(x)))

return x

其实大家先看该方法中的 forward 函数,可以大致推导出SwinTranformer的计算流程,其实是和论文描述一致的:

我们需要关注的是,在该部分到底是如何进行 W-MSA和SW-MSA过程的。

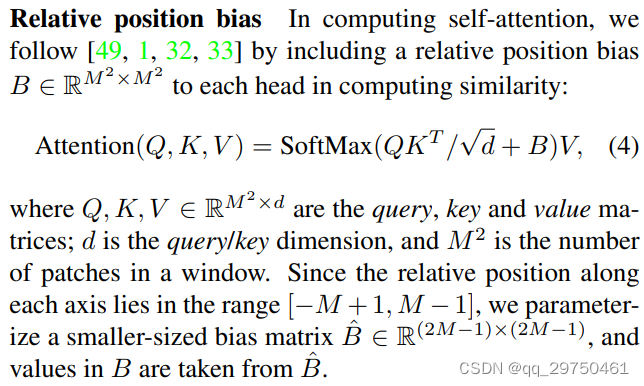

首先看该方法中的 WindowAttention 方法,当然这个是多头注意力机制模块(有点类似于分组卷积),此外这段代码中包含了论文中提到 relative position bias

class WindowAttention(nn.Module):

r""" Window based multi-head self attention (W-MSA) module with relative position bias.

It supports both of shifted and non-shifted window.

Args:

dim (int): Number of input channels.

window_size (tuple[int]): The height and width of the window.

num_heads (int): Number of attention heads.

qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True

qk_scale (float | None, optional): Override default qk scale of head_dim ** -0.5 if set

attn_drop (float, optional): Dropout ratio of attention weight. Default: 0.0

proj_drop (float, optional): Dropout ratio of output. Default: 0.0

"""

def __init__(self, dim, window_size, num_heads, qkv_bias=True, qk_scale=None, attn_drop=0., proj_drop=0.):

super().__init__()

self.dim = dim

self.window_size = window_size # Wh, Ww

self.num_heads = num_heads

head_dim = dim // num_heads

self.scale = qk_scale or head_dim ** -0.5

# define a parameter table of relative position bias

# 169 * num_heads

self.relative_position_bias_table = nn.Parameter(

torch.zeros((2 * window_size[0] - 1) * (2 * window_size[1] - 1), num_heads)) # 2*Wh-1 * 2*Ww-1, nH

# get pair-wise relative position index for each token inside the window

coords_h = torch.arange(self.window_size[0])

coords_w = torch.arange(self.window_size[1])

coords = torch.stack(torch.meshgrid([coords_h, coords_w])) # 2, Wh, Ww

coords_flatten = torch.flatten(coords, 1) # 2, Wh*Ww

#49个位置的值,分别于自身的49个位置值做差,得到相对位置坐标

relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :] # 2, Wh*Ww, Wh*Ww

relative_coords = relative_coords.permute(1, 2, 0).contiguous() # Wh*Ww, Wh*Ww, 2

relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0

relative_coords[:, :, 1] += self.window_size[1] - 1

relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1

#值从 0 - 168(156=(6+6)*13+12(6+6)) 主对角线上的值为 84

relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww

self.register_buffer("relative_position_index", relative_position_index)

self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)

self.attn_drop = nn.Dropout(attn_drop)

self.proj = nn.Linear(dim, dim)

self.proj_drop = nn.Dropout(proj_drop)

trunc_normal_(self.relative_position_bias_table, std=.02)

self.softmax = nn.Softmax(dim=-1)

def forward(self, x, mask=None):

"""

Args:

x: input features with shape of (num_windows*B, N, C)

mask: (0/-inf) mask with shape of (num_windows, Wh*Ww, Wh*Ww) or None

"""

B_, N, C = x.shape

qkv = self.qkv(x).reshape(B_, N, 3, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4)

#(B,num_heads,N,C // self.num_heads)

q, k, v = qkv[0], qkv[1], qkv[2] # make torchscript happy (cannot use tensor as tuple)

q = q * self.scale

attn = (q @ k.transpose(-2, -1))#(B,num_heads,N,N) N = 49

# 得到Wh*Ww,Wh*Ww,nH每个坐标位置的相对位置偏置量(越靠近中心值越大)

relative_position_bias = self.relative_position_bias_table[self.relative_position_index.view(-1)].view(

self.window_size[0] * self.window_size[1], self.window_size[0] * self.window_size[1], -1) # Wh*Ww,Wh*Ww,nH

relative_position_bias = relative_position_bias.permute(2, 0, 1).contiguous() # nH, Wh*Ww, Wh*Ww

attn = attn + relative_position_bias.unsqueeze(0)#(B,num_heads,N,N)

if mask is not None:

nW = mask.shape[0]#n_windows

#mask:num_windows, Wh*Ww, Wh*Ww-> 1, num_windows, 1,Wh*Ww, Wh*Ww

attn = attn.view(B_ // nW, nW, self.num_heads, N, N) + mask.unsqueeze(1).unsqueeze(0)

attn = attn.view(-1, self.num_heads, N, N)

attn = self.softmax(attn)

else:

attn = self.softmax(attn)

attn = self.attn_drop(attn)

x = (attn @ v).transpose(1, 2).reshape(B_, N, C)

x = self.proj(x)

x = self.proj_drop(x)

return x

如果理解相对位置编码的是实现,可以看该部分。其中 relative_position_bias_table 存储的是可学习[(2 * window_size[0] - 1) * (2 * window_size[1] - 1)]*num_heads 的位置编码值,需要注意的是该编码值为截断正态随机采样,整体的值分布是随机的,没有像正态分布那样在中间大两边小的情况,因为该张量为可学习的,relative_position_index 是给定窗口大小的相对位置索引,其索引长度为(window_size[0] * window_size[1])x(window_size[0] * window_size[1),该大小对应窗口自注意力模块形成的尺寸大小,每个索引位的取值为范围为 [0,(2 * window_size[0] - 1) x (2 * window_size[1] - 1)-1]。

self.relative_position_bias_table = nn.Parameter(

torch.zeros((2 * window_size[0] - 1) * (2 * window_size[1] - 1), num_heads)) # 2*Wh-1 * 2*Ww-1, nH

# get pair-wise relative position index for each token inside the window

coords_h = torch.arange(self.window_size[0])

coords_w = torch.arange(self.window_size[1])

coords = torch.stack(torch.meshgrid([coords_h, coords_w])) # 2, Wh, Ww

coords_flatten = torch.flatten(coords, 1) # 2, Wh*Ww

#49个位置的值,分别于自身的49个位置值做差,得到相对位置坐标

relative_coords = coords_flatten[:, :, None] - coords_flatten[:, None, :] # 2, Wh*Ww, Wh*Ww

relative_coords = relative_coords.permute(1, 2, 0).contiguous() # Wh*Ww, Wh*Ww, 2

relative_coords[:, :, 0] += self.window_size[0] - 1 # shift to start from 0

relative_coords[:, :, 1] += self.window_size[1] - 1

relative_coords[:, :, 0] *= 2 * self.window_size[1] - 1

#值从 0 - 168(156=(6+6)*13+12(6+6)) 主对角线上的值为 84

relative_position_index = relative_coords.sum(-1) # Wh*Ww, Wh*Ww

self.register_buffer("relative_position_index", relative_position_index)

trunc_normal_(self.relative_position_bias_table, std=.02)

其实在窗口注意力的代码中,还有和 mask 相关的代码,这个mask就是为了配合 shift_size 生成的,目的是为了实现在同一窗口下,实现不同偏移量子窗口的注意力机制,这个在文中有相关的说明。

if mask is not None:

nW = mask.shape[0]#n_windows

#mask:num_windows, Wh*Ww, Wh*Ww-> 1, num_windows, 1,Wh*Ww, Wh*Ww

attn = attn.view(B_ // nW, nW, self.num_heads, N, N) + mask.unsqueeze(1).unsqueeze(0)

attn = attn.view(-1, self.num_heads, N, N)

attn = self.softmax(attn)

else:

attn = self.softmax(attn)

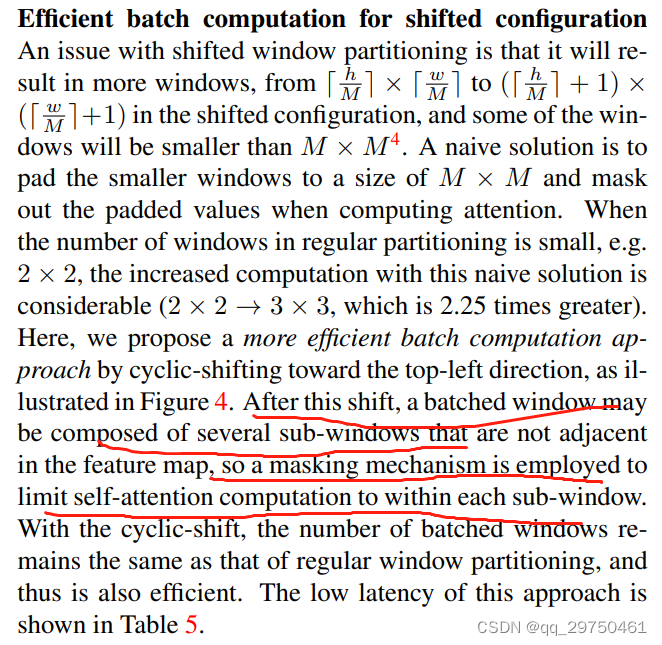

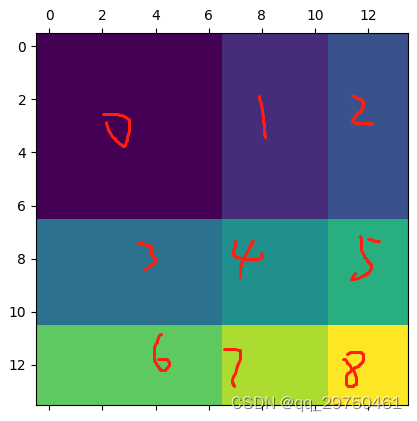

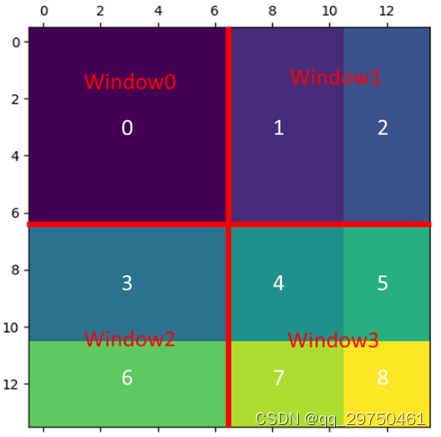

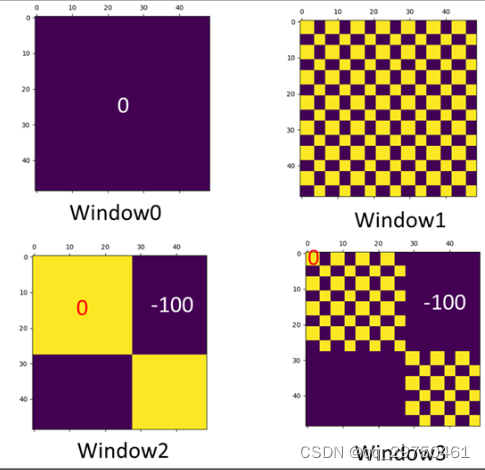

接下来看一下这个 mask 的实现,可以通过代码发现,mask是在 img_mask这个母版上移动得到的,我们可以根据论文代码中给定的关系,假设 H=W=14 window_size=7 shift_size=3,则会划分出 2*2=4个子窗口。

if self.shift_size > 0:

# calculate attention mask for SW-MSA

H, W = self.input_resolution

img_mask = torch.zeros((1, H, W, 1)) # 1 H W 1

h_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

w_slices = (slice(0, -self.window_size),

slice(-self.window_size, -self.shift_size),

slice(-self.shift_size, None))

cnt = 0

for h in h_slices:

for w in w_slices:

#相当于将 img_mask分了9块

img_mask[:, h, w, :] = cnt

cnt += 1

#num_windows*1, window_size, window_size, 1

mask_windows = window_partition(img_mask, self.window_size) # nW, window_size, window_size, 1

mask_windows = mask_windows.view(-1, self.window_size * self.window_size)

#(num_windows,window_size*window_size)

# ->(num_windows,1,window_size*window_size)-(num_windows,window_size*window_size,1)

#->(num_windows,window_size*window_size,window_size*window_size)

attn_mask = mask_windows.unsqueeze(1) - mask_windows.unsqueeze(2)

attn_mask = attn_mask.masked_fill(attn_mask != 0, float(-100.0)).masked_fill(attn_mask == 0, float(0.0))

则此时 img_mask的输出为

上图的 img_mask 经过 window_partition 之后,变为

这个基本与文中对应,为什么要形成这样的mask模板,这个可以继续往下看。

然后对经过partition之后的窗口进行最终输出4个窗口注意力的掩码图,这4个掩码图对应输入张量(B,C,H,W)切分窗口后对应的子窗口,实现子窗口自注意力机制(变换后的特征图对应位置之间的注意力计算)的计算,从而实现整个输入张量自注意力机制的计算。以下展示的是最终的 mask 二值图的可视化。

与mask相关的是 SW-MSA模块,这一部分代码是关键代码,即在已经计算过(q x k)且加入相对位置偏移的算子中加上 mask, 即实现了注意力机制是在自身对应位置计算的,不对应的位置加了一个比较大负值(-100),则经过softmax后,这部分的分值就变低了,将最终的分支与 v 变量进行相乘,基本可以实现遮挡与想要计算位置不同位置特征的目的。

if mask is not None:

nW = mask.shape[0]#n_windows

#mask:num_windows, Wh*Ww, Wh*Ww-> 1, num_windows, 1,Wh*Ww, Wh*Ww

attn = attn.view(B_ // nW, nW, self.num_heads, N, N) + mask.unsqueeze(1).unsqueeze(0)

attn = attn.view(-1, self.num_heads, N, N)

attn = self.softmax(attn)

attn = self.attn_drop(attn)

x = (attn @ v).transpose(1, 2).reshape(B_, N, C)

x = self.proj(x)

x = self.proj_drop(x)

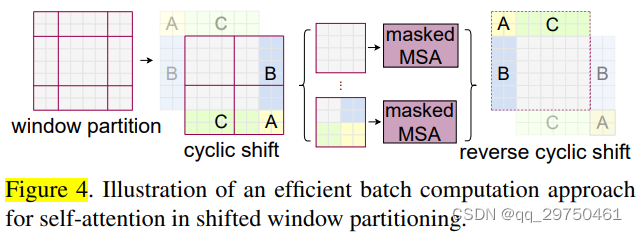

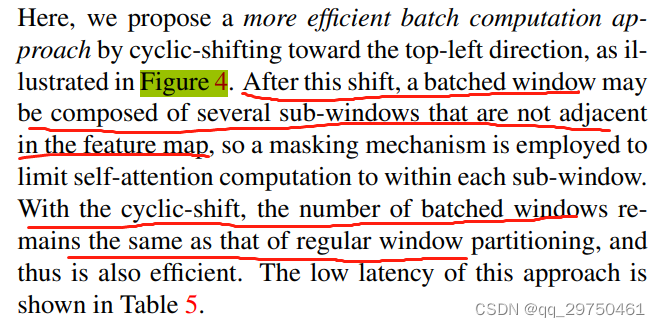

以上基本是本文中子注意力模块计算的过程,当然我们主要讲的是 W-MSA,由于文中还有个模块为 SW-MSA,在进行SW-MSA之前,其实还需要注意的一点是,将原始输入的特征图进行适当的循环移位,但值得注意的是,在代码中 shift_size在任何一个阶段均为 (window_size//2),由于window_size为定值,所以 shift_size也为定值,论文内容和代码如下:

下面代码的效果对应上面的图。

# cyclic shift

if self.shift_size > 0:

if not self.fused_window_process:

shifted_x = torch.roll(x, shifts=(-self.shift_size, -self.shift_size), dims=(1, 2))

# partition windows

x_windows = window_partition(shifted_x, self.window_size) # nW*B, window_size, window_size, C

else:

x_windows = WindowProcess.apply(x, B, H, W, C, -self.shift_size, self.window_size)

else:

shifted_x = x

# partition windows

x_windows = window_partition(shifted_x, self.window_size)

计算完 SW-MSA后,会将该窗口重新复原

# reverse cyclic shift

if self.shift_size > 0:

if not self.fused_window_process:

shifted_x = window_reverse(attn_windows, self.window_size, H, W) # B H' W' C

x = torch.roll(shifted_x, shifts=(self.shift_size, self.shift_size), dims=(1, 2))

else:

x = WindowProcessReverse.apply(attn_windows, B, H, W, C, self.shift_size, self.window_size)

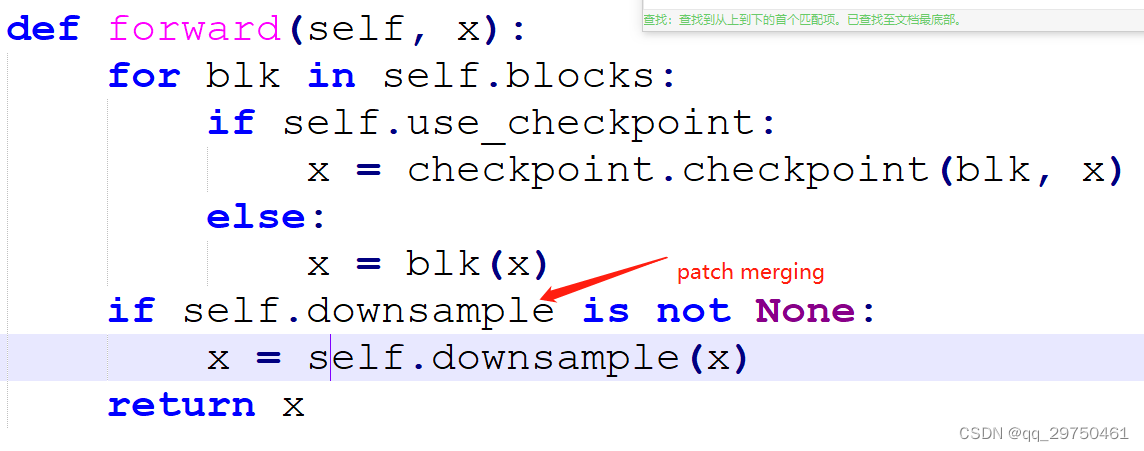

以上就是SwinTransformerBlock模块做的事情了。下面再说下本文的一种池化机制:PatchMerging。

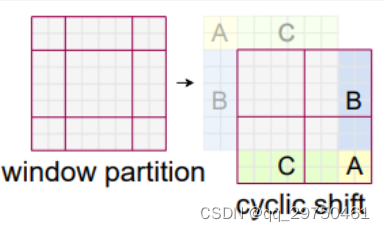

池化机制 PatchMerging

这个模块主要是进行特征图的池化操作,与卷积神经网路中比较直接的池化策略不同,这里采用的 横纵间隔抽取特征图中的像素点,物理上将特征图由(H,W)变为 (H/2,W/2),再经过一个全连接进行降维(4C->2C),最终将(H,W,C)的输入变为了(H/2,W/2,2C)的输出,实现2倍缩放的池化操作。

class PatchMerging(nn.Module):

r""" Patch Merging Layer.

Args:

input_resolution (tuple[int]): Resolution of input feature.

dim (int): Number of input channels.

norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm

"""

def __init__(self, input_resolution, dim, norm_layer=nn.LayerNorm):

super().__init__()

self.input_resolution = input_resolution

self.dim = dim

self.reduction = nn.Linear(4 * dim, 2 * dim, bias=False)

self.norm = norm_layer(4 * dim)

def forward(self, x):

"""

x: B, H*W, C

"""

H, W = self.input_resolution

B, L, C = x.shape

assert L == H * W, "input feature has wrong size"

assert H % 2 == 0 and W % 2 == 0, f"x size ({H}*{W}) are not even."

x = x.view(B, H, W, C)

x0 = x[:, 0::2, 0::2, :] # B H/2 W/2 C

x1 = x[:, 1::2, 0::2, :] # B H/2 W/2 C

x2 = x[:, 0::2, 1::2, :] # B H/2 W/2 C

x3 = x[:, 1::2, 1::2, :] # B H/2 W/2 C

x = torch.cat([x0, x1, x2, x3], -1) # B H/2 W/2 4*C

x = x.view(B, -1, 4 * C) # B H/2*W/2 4*C

x = self.norm(x)

x = self.reduction(x)

return x

值得注意的是,文中图示部分是将Patch Merging模块与SwinBlock模块组合为stage 2的,其实在代码中,Patch Merging 为 stage1的最后一步,但这个其实与文中图示不冲突。

总结

SwinTransformer的代码确实值得好好看一下,这样文中的技术才会较全面的理解。码字不易,如有问题,欢迎各位留言。

1447

1447

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?