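Abstract: These are my notes from the DataWhale Transformer study group I joined in October. The post covers the basic principles of the Transformer and a simple demo that transforms an input sequence. It also adds some Transformer variants in CV. I hope this study group helps me get started quickly, so that I can apply Transformers to imaging through scattering media or to PAM imaging. The content mainly follows Chapter 6 of Datawhale's open-source material 《动手学CV-Pytorch》 (Dive into CV with PyTorch). Thanks to the DataWhale open-source community, and you are welcome to study the original material too~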

In 2017, Google proposed the Transformer in the paper Attention Is All You Need [1]. It is a model for sequence problems built on self-attention.

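The Transformer has succeeded in many NLP tasks, such as text classification, machine translation and reading comprehension. It breaks with the usual approach and uses no CNN or RNN structure at all. Instead, self-attention captures the relationships between different positions of the input sequence automatically. This makes the model good at handling long texts. It can also run in a highly parallel way, so it trains much faster than recurrent models.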

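Since 2020, Transformers have been brought into CV with great success. Applications include popular recognition tasks (e.g. image classification, object detection, action recognition and segmentation), generative modeling, multimodal tasks (e.g. visual question answering and visual reasoning), video processing (e.g. activity recognition and video forecasting) and low-level vision (e.g. image super-resolution and image restoration). For details see two surveys: A Survey on Visual Transformer [2] and Transformers in Vision: A Survey [3].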

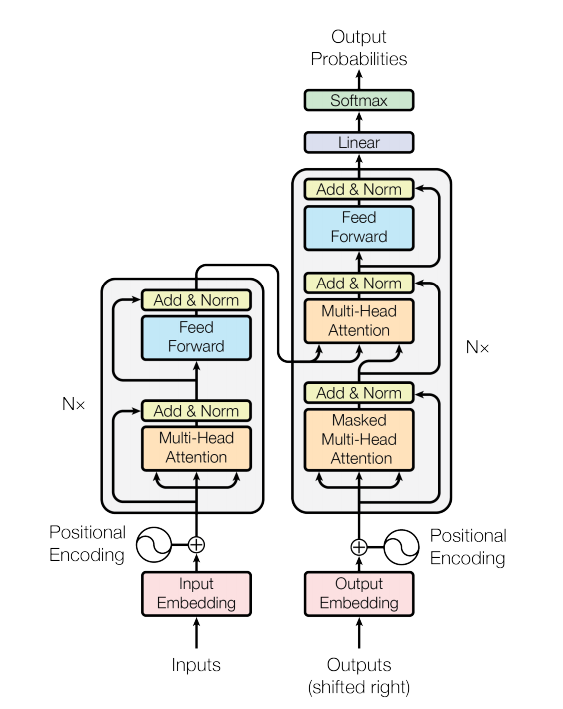

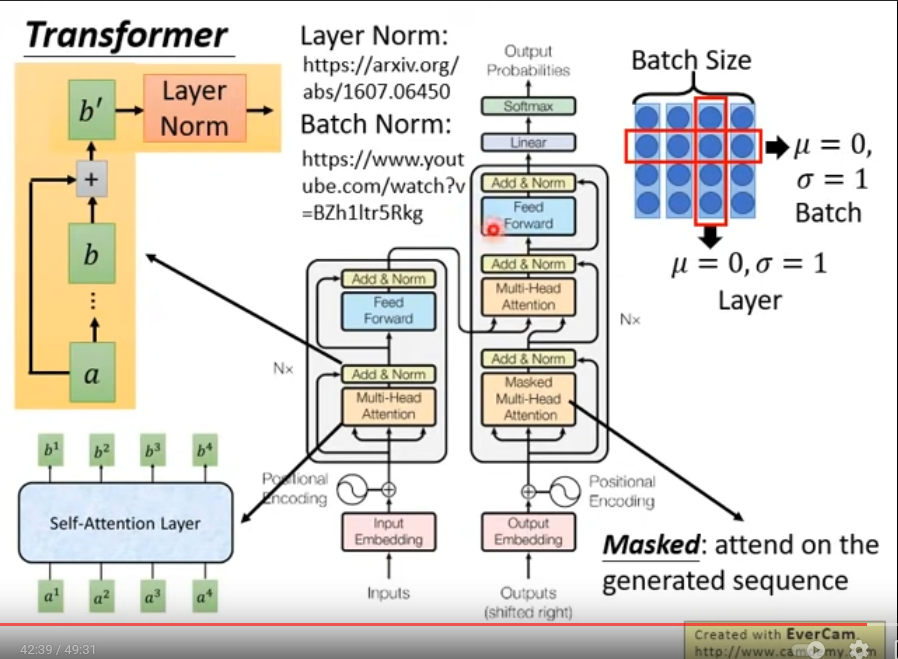

Transformer model architecture

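- Encoder: a stack of N identical layers, each with 2 sublayers. The first sublayer is Multi-Head Attention (multi-head self-attention), and the second is a simple Feed Forward network. Each sublayer is wrapped with a residual connection and layer normalization.

- Decoder: also a stack of N identical layers. Besides Multi-Head Attention and a Feed Forward network, each layer has an extra Masked Multi-Head Attention sublayer. Every sublayer again uses a residual connection and layer normalization. After the N layers, a fully connected layer + Softmax produces the output probabilities.

  - The first sublayer, Masked Multi-Head Attention, adds a mask on top of multi-head attention. The mask hides invalid padding positions and information from the "future", so attention covers only the sequence generated so far (the previous predictions serve as the input).

  - In the second sublayer, Multi-Head Attention, the query comes from the previous decoder sublayer, and the key and value come from the encoder output. Put simply, this sublayer uses what the decoder has already predicted as the query. It looks up relevant information among the features extracted by the encoder and fuses it into the current features to make the prediction.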
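To make the shapes in encoder-decoder attention concrete, here is a minimal NumPy sketch of the second decoder sublayer. It simplifies things for illustration: a single head, no batch dimension and no learned projection matrices. The helper `scaled_dot_product_attention` is hypothetical; it applies the attention formula from the self-attention section.

```python
import numpy as np

def scaled_dot_product_attention(q, k, v):
    """softmax(q k^T / sqrt(d_k)) v for 2-D inputs (single head, no batch)."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)                         # (T_q, T_k)
    scores = scores - scores.max(axis=-1, keepdims=True)    # numerically stable softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
d_model = 8
encoder_out = rng.normal(size=(6, d_model))     # "memory": 6 source positions
decoder_state = rng.normal(size=(4, d_model))   # 4 target positions generated so far

# Cross attention: query from the decoder, key and value from the encoder output
out, weights = scaled_dot_product_attention(decoder_state, encoder_out, encoder_out)
print(out.shape, weights.shape)   # (4, 8) (4, 6)
```

Each of the 4 decoder positions receives a weighted mixture of the 6 encoder vectors. The output length follows the query (the decoder), while the content comes from the encoder.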

Model input

The input combines two parts: Input Embedding and Positional Encoding.

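- Input Embedding layer

Converts input data in some format, for example text (word embedding), into a vector representation the model can process. The vector describes the information in the raw data (feature extraction). The code for the Embedding layer is simple: its core is `nn.Embedding` provided by torch. The output of the Embedding layer can be understood as the feature of the current time step.

- Positional Encoding layer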

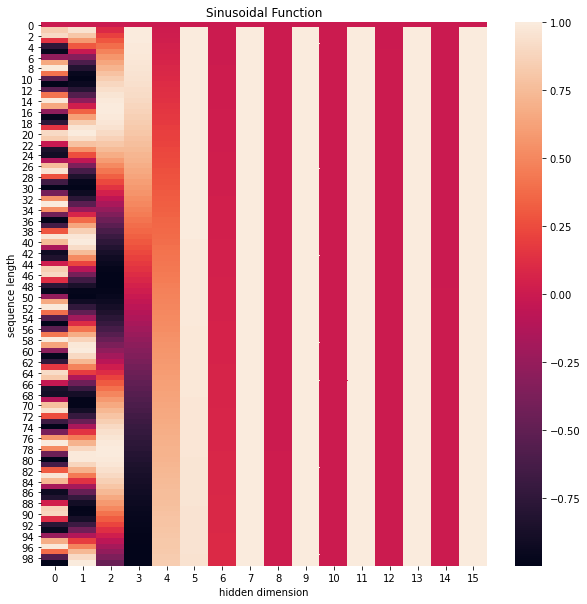

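Gives the model information about the order of the current time step relative to the others. Positional encoding can be fixed, or it can be a learnable parameter. Here we use a fixed encoding: sin and cos functions of different frequencies, as follows: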

$$
\begin{aligned}
PE_{(pos, 2i)} &= \sin\left(\frac{pos}{10000^{\frac{2i}{d_{model}}}}\right) \\
PE_{(pos, 2i+1)} &= \cos\left(\frac{pos}{10000^{\frac{2i}{d_{model}}}}\right)
\end{aligned}
$$

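Here pos is the index of the time step, i.e. the position of the character in the sentence. The vector $PE_{pos}$ is the positional encoding of time step pos: even dimensions use sin and odd dimensions use cos. Its length equals the output dimension $d_{model}$ of the Input Embedding layer.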

The positional encoding for a maximum sequence length of 100 and an embedding dimension of 16 is visualized as follows:
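The matrix behind such a visualization can be computed with the NumPy sketch below. It reimplements the formula above for illustration; it is not the tutorial's code, and `sinusoidal_pe` is a hypothetical helper name. Passing `pe` to e.g. `matplotlib.pyplot.imshow` gives a heat map of the encoding.

```python
import numpy as np

def sinusoidal_pe(max_len, d_model):
    """Fixed sin/cos positional encoding of shape (max_len, d_model); d_model assumed even."""
    pos = np.arange(max_len)[:, None]                  # (max_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]          # (1, d_model/2), the values 2i
    angle = pos / np.power(10000.0, two_i / d_model)   # pos / 10000^(2i/d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angle)   # even dimensions
    pe[:, 1::2] = np.cos(angle)   # odd dimensions
    return pe

pe = sinusoidal_pe(100, 16)
print(pe.shape)    # (100, 16)
print(pe[0, :4])   # position 0: [0. 1. 0. 1.]
```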

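Question: why can the formula above serve as a positional encoding?

- Under this definition, the inner product of the positional encodings at time steps p and p+k, $PE_{(p)} \cdot PE_{(p+k)}$, does not depend on p. It is a constant determined by k alone (proof below). So any two positional encoding vectors that are k time steps apart have the same inner product, which means the encoding carries the relative position between two time steps.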

$$
\begin{aligned}
PE_{p} &= \left\{ \sin\left(\frac{p}{10000^{\frac{2i}{d_{model}}}}\right), \cos\left(\frac{p}{10000^{\frac{2i}{d_{model}}}}\right) \right\}, \quad i = 0, 1, 2, \dots \\
PE_{p+k} &= \left\{ \sin\left(\frac{p+k}{10000^{\frac{2i}{d_{model}}}}\right), \cos\left(\frac{p+k}{10000^{\frac{2i}{d_{model}}}}\right) \right\}, \quad i = 0, 1, 2, \dots \\
PE_{p} \cdot PE_{p+k} &= \sum_i \left[ \sin\left(\frac{p}{10000^{\frac{2i}{d_{model}}}}\right) \sin\left(\frac{p+k}{10000^{\frac{2i}{d_{model}}}}\right) + \cos\left(\frac{p}{10000^{\frac{2i}{d_{model}}}}\right) \cos\left(\frac{p+k}{10000^{\frac{2i}{d_{model}}}}\right) \right] \\
&= \sum_i \cos\left(\frac{p}{10000^{\frac{2i}{d_{model}}}} - \frac{p+k}{10000^{\frac{2i}{d_{model}}}}\right)
= \sum_i \cos\left(\frac{-k}{10000^{\frac{2i}{d_{model}}}}\right)
= \sum_i \cos\left(\frac{k}{10000^{\frac{2i}{d_{model}}}}\right) = \text{const.}
\end{aligned}
$$

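- In addition, every time step has a unique positional encoding. Together, these two useful properties justify using the formula above as a positional encoding.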
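Both properties can be checked numerically. The NumPy sketch below uses the same hypothetical `sinusoidal_pe` helper as before. It compares $PE_p \cdot PE_{p+k}$ for many values of p with the closed form $\sum_i \cos(k / 10000^{2i/d_{model}})$. It also checks that no two of the 100 positions share an encoding.

```python
import numpy as np

def sinusoidal_pe(max_len, d_model):
    pos = np.arange(max_len)[:, None]
    angle = pos / np.power(10000.0, np.arange(0, d_model, 2)[None, :] / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angle)
    pe[:, 1::2] = np.cos(angle)
    return pe

d_model, k = 16, 5
pe = sinusoidal_pe(100, d_model)

# Inner product of encodings k steps apart, for many starting positions p
dots = np.array([pe[p] @ pe[p + k] for p in range(90)])
omega = 1.0 / np.power(10000.0, np.arange(0, d_model, 2) / d_model)
closed_form = np.cos(omega * k).sum()      # depends on k only
print(np.allclose(dots, closed_form))      # True

# Uniqueness: the smallest distance between two different positions is clearly non-zero
dist = np.linalg.norm(pe[:, None, :] - pe[None, :, :], axis=-1)
np.fill_diagonal(dist, np.inf)
print(dist.min() > 1e-3)                   # True
```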

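- Both the Encoder and the Decoder have an input module

The encoder and the decoder both take inputs, and the two inputs have the same structure. Only their use at inference differs. The encoder runs once, while the decoder runs in a loop like an RNN: its previous prediction is fed back in to predict the next result, and the output is generated step by step this way. During training, the whole shifted target sequence is fed in at once together with a mask, as in the demo below.

The decoder receives two inputs: the features extracted by the encoder, and the previous predictions after the embedding and positional encoding layers. From these it outputs the next element of the sequence.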

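Model output

The output part is simple: every time step passes through a linear layer + softmax layer.

Role of the linear layer: it linearly transforms the previous step's output to a given dimension, i.e. it changes the dimension. The new dimension equals the number of output classes; for a translation task, that is the vocabulary size.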
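A minimal NumPy sketch of this output head follows. Random weights stand in for a trained linear layer, and the sizes are illustrative assumptions (a vocabulary of 11, matching `V = 11` in the toy task below).

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d_model, vocab_size = 3, 8, 11       # illustrative sizes

decoder_out = rng.normal(size=(seq_len, d_model))
W = rng.normal(size=(d_model, vocab_size)) * 0.1   # stand-in for the learned linear layer
b = np.zeros(vocab_size)

logits = decoder_out @ W + b                   # (seq_len, vocab_size): d_model -> vocab size
logits -= logits.max(axis=-1, keepdims=True)   # numerically stable softmax
probs = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)
pred = probs.argmax(axis=-1)                   # predicted token id at every time step
print(probs.shape, pred.shape)                 # (3, 11) (3,)
```

The `Generator` class in the code below does the same thing with `nn.Linear` followed by `log_softmax`.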

Self-attention

"Focus attention on the most valuable local parts and observe them carefully, so as to make an effective judgment."

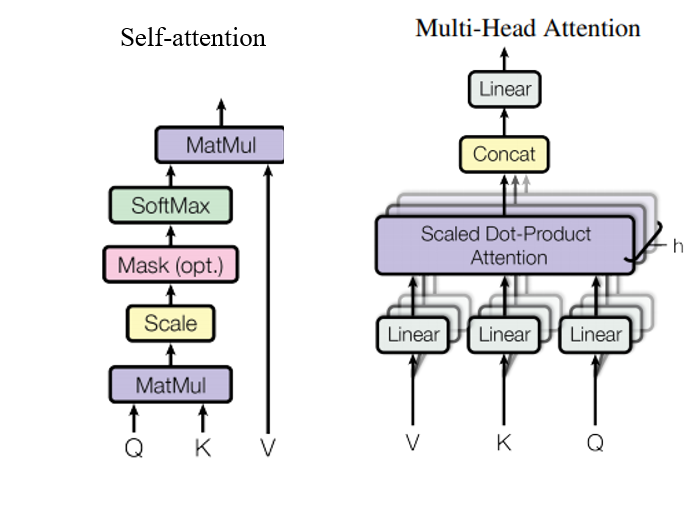

Computing attention: it takes three inputs, Q (query), K (key) and V (value), and the attention result is computed with the following formula:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V
$$

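Put simply, the attention result at the current time step is a set of coefficients times the value vectors of all time steps, summed up. The coefficients come from the dot product of the current time step's query with the key of every time step, scaled by $\sqrt{d_k}$ and normalized by the softmax. In effect, each time step uses its own query to search the keys of the other time steps and judge their similarity. The result decides how much of each time step's information to take over.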
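The formula can be written in a few lines of NumPy. To keep the sketch short it assumes Q = K = V = X, i.e. it leaves out the learned projections $W^Q, W^K, W^V$. It also checks the interpretation above: each output row is a weighted sum of all value vectors.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V):
    d_k = Q.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k))  # (T, T): row t holds the coefficients for step t
    return weights @ V, weights

rng = np.random.default_rng(1)
X = rng.normal(size=(5, 4))    # 5 time steps, feature size 4
Q, K, V = X, X, X              # simplification: no learned projections
out, w = attention(Q, K, V)

# The output at time step 2 is the coefficient-weighted sum of all value vectors
manual = sum(w[2, j] * V[j] for j in range(5))
print(np.allclose(out[2], manual))   # True
```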

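To aid understanding, here I use Prof. Hung-yi Lee's (李宏毅) Transformer lecture video. What follows is a lot of video screenshots plus my scattered notes~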

Transformer: seq2seq model with “self-attention”

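- RNNs are hard to parallelize; CNNs can be parallelized

An RNN produces its output only after reading the whole input (the sentence sequence)

A CNN can also output a sequence of the same length, but its filters are locally connected, so each output only sees part of the sentence

Multiple filters are computed at the same time

Filters in higher layers have larger receptive fields and can take longer sentences into account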

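- Self-attention replaces the RNN

As with an RNN, the input and output are both sequences. However, every output has seen the whole sentence, and the outputs can be computed in parallel.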

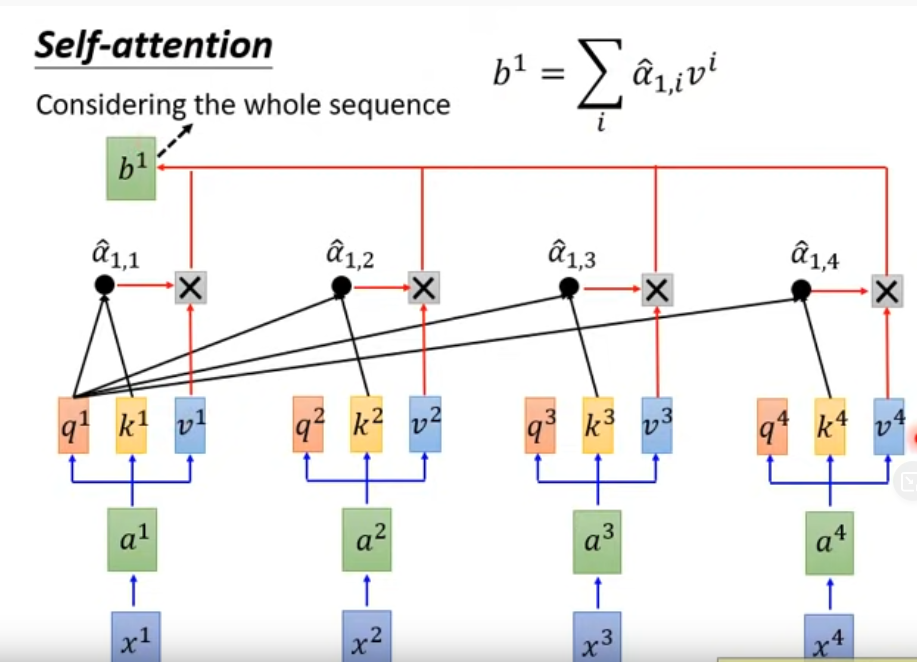

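- Take each query q and do attention with every key k (takes in 2 vectors, outputs 1 number), giving $\alpha_{1,i}$

- $\alpha_{1,i}$ passes through a softmax layer

- Producing $b^1$ already takes the whole sequence into account; $b^2$, $b^3$, $b^4$ are computed in the same way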

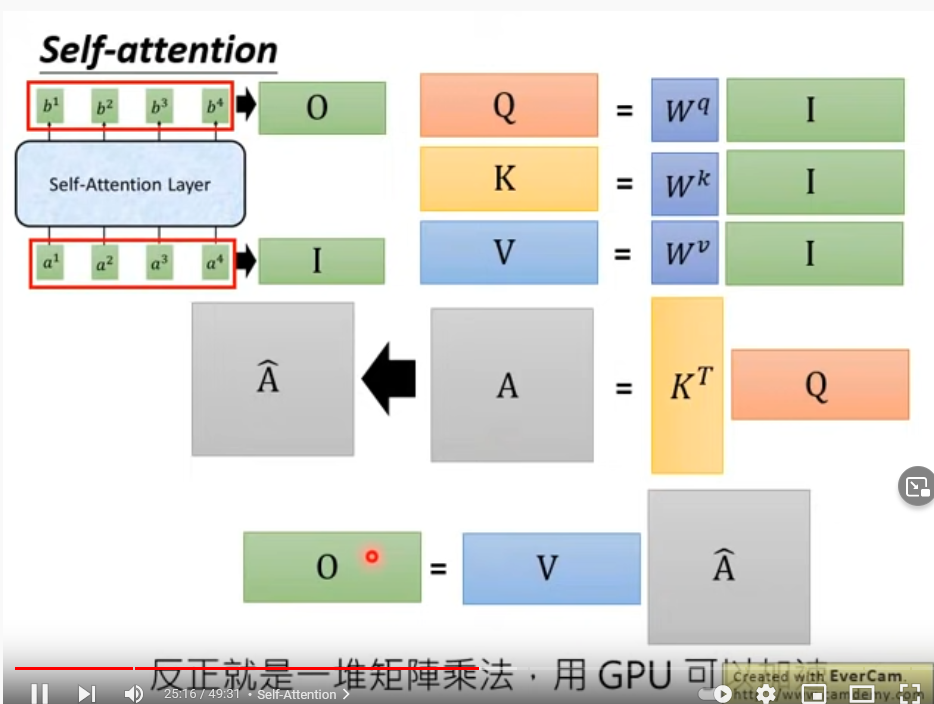

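- How is self-attention parallelized? With matrix operations

Compute q, k, v for all positions as matrix products

- $\alpha_{1,i}$ is the dot product of $q^1$ and $k^i$, from which $b^1$ is computed; $\alpha_{2,i}$ and $b^2$ are computed likewise …

- Compute the final output: weight and sum V with the (softmax-normalized) α, i.e. a matrix product

- Summary: it is all matrix multiplication, which GPUs can accelerate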
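The NumPy sketch below checks that the two views give the same result. It computes $b^1 \dots b^4$ one at a time as in the slides, then all at once with matrix products. Random matrices stand in for the learned $W^q, W^k, W^v$ (an assumption for illustration), and the $\sqrt{d_k}$ scaling from the formula above is included.

```python
import numpy as np

rng = np.random.default_rng(2)
T, d = 4, 3
I = rng.normal(size=(T, d))                       # inputs a^1..a^4 as rows
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))
q, k, v = I @ Wq, I @ Wk, I @ Wv                  # q, k, v for all positions at once

# Step by step: one output vector b^i at a time
b_loop = np.zeros((T, d))
for i in range(T):
    alpha = np.array([q[i] @ k[j] for j in range(T)]) / np.sqrt(d)
    alpha_hat = np.exp(alpha - alpha.max())
    alpha_hat /= alpha_hat.sum()                  # softmax over j
    b_loop[i] = sum(alpha_hat[j] * v[j] for j in range(T))

# All at once: every alpha in one matrix, every b^i in one product
A = q @ k.T / np.sqrt(d)
A_hat = np.exp(A - A.max(axis=1, keepdims=True))
A_hat /= A_hat.sum(axis=1, keepdims=True)
b_matrix = A_hat @ v

print(np.allclose(b_loop, b_matrix))   # True
```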

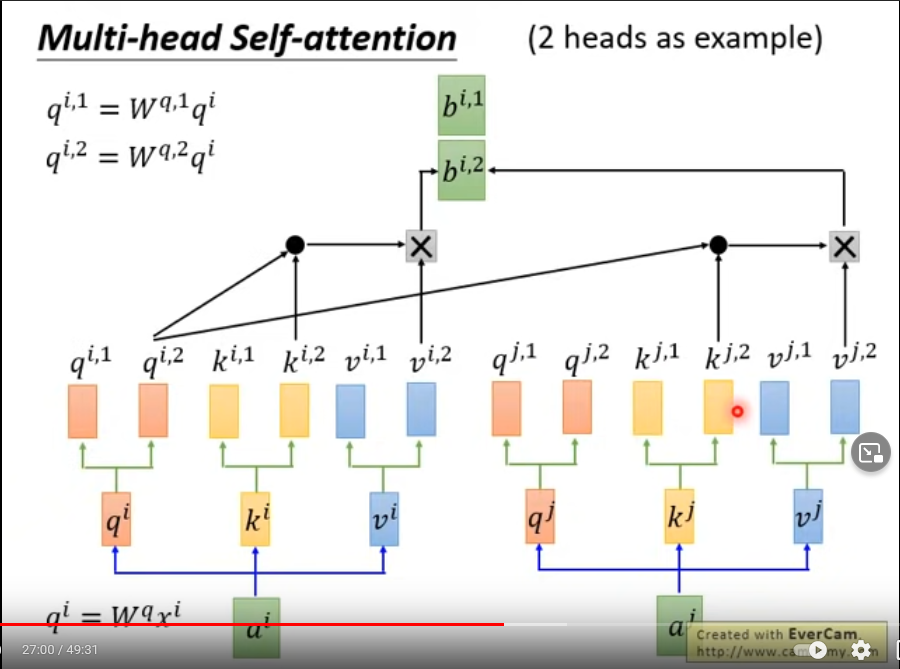

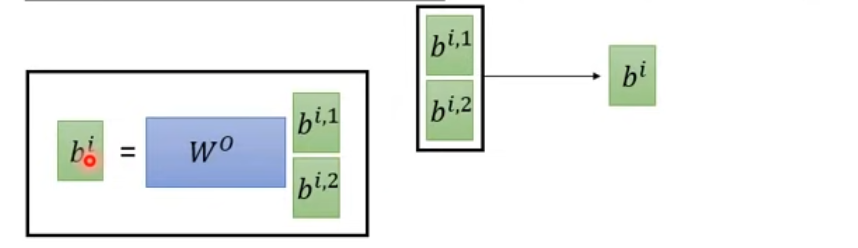

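Multi-Head Attention

We have just introduced the attention mechanism. The Attention module used to build the EncoderLayer is actually multi-head attention, which can be thought of simply as several attention modules combined.

Why use multi-head attention: each attention head can optimize a different part of each word's features. This balances the bias a single attention mechanism might produce and gives word meanings more diverse representations. Experiments show this improves model performance.

All attention layers in the actual Transformer network are multi-head attention layers. The diagrams of self-attention and multi-head attention are as follows: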

More notes from Hung-yi Lee's video:


Multi-head self-attention (2 heads as an example)

Different heads can have local or global receptive fields; the number of heads is adjustable
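In code, the heads are usually not separate modules. The `d_model` features are reshaped into `h` chunks of size `d_k = d_model // h`, attention runs on each chunk, and the results are concatenated back. The NumPy sketch below mirrors the `view(...).transpose(1, 2)` in `MultiHeadedAttention` later. For brevity it omits the learned per-head projections and the final linear layer.

```python
import numpy as np

def softmax(s):
    s = s - s.max(axis=-1, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(5)
batch, seq_len, d_model, h = 2, 5, 8, 2
d_k = d_model // h
x = rng.normal(size=(batch, seq_len, d_model))

# Split: (batch, seq, d_model) -> (batch, seq, h, d_k) -> (batch, h, seq, d_k)
heads = x.reshape(batch, seq_len, h, d_k).transpose(0, 2, 1, 3)

# Every head attends independently over its own d_k-dimensional slice
scores = heads @ heads.transpose(0, 1, 3, 2) / np.sqrt(d_k)   # (batch, h, seq, seq)
ctx = softmax(scores) @ heads                                # (batch, h, seq, d_k)

# Merge ("concat"): (batch, h, seq, d_k) -> (batch, seq, h, d_k) -> (batch, seq, d_model)
merged = ctx.transpose(0, 2, 1, 3).reshape(batch, seq_len, d_model)
print(heads.shape, scores.shape, merged.shape)   # (2, 2, 5, 4) (2, 2, 5, 5) (2, 5, 8)
```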

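In plain self-attention, the order of the input sequence does not matter. Every input vector attends to all the others regardless of the time step, so no position information is present.

$e^i$ has the same dimension as $a^i$ and is added to it to inject position information. Why add rather than concatenate?

One way to understand $a^i + e^i$: concatenate $x^i$ with a one-hot position vector $p^i$, and split $W$ into $W^I$ and $W^P$. Then $W\begin{bmatrix}x^i \\ p^i\end{bmatrix} = W^I x^i + W^P p^i = a^i + e^i$ with $e^i = W^P p^i$. In other words, concatenating a one-hot position and then applying a linear layer is equivalent to adding a position vector.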
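The equivalence is easy to check numerically. The sizes and random weights below are arbitrary assumptions:

```python
import numpy as np

rng = np.random.default_rng(3)
d_in, n_pos, d_model = 4, 6, 5
x_i = rng.normal(size=d_in)          # input vector x^i
p_i = np.eye(n_pos)[2]               # one-hot position vector p^i (position 2)
W_I = rng.normal(size=(d_model, d_in))
W_P = rng.normal(size=(d_model, n_pos))

W = np.hstack([W_I, W_P])                           # W = [W^I  W^P]
concat_then_project = W @ np.concatenate([x_i, p_i])
a_i = W_I @ x_i
e_i = W_P @ p_i                                     # just column 2 of W^P
print(np.allclose(concat_then_project, a_i + e_i))  # True
```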

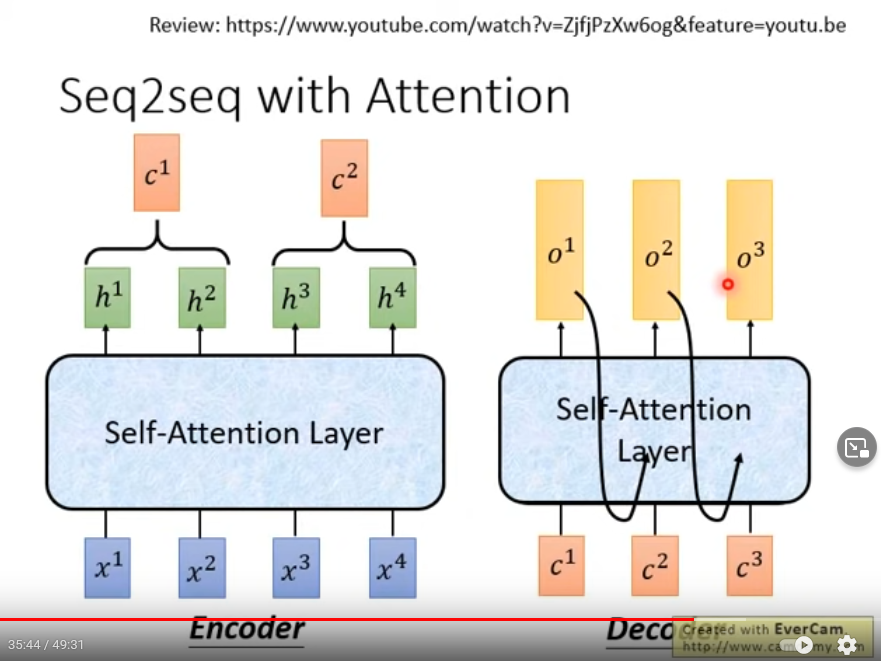

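Seq2seq model with Attention: replace the RNN with self-attention

The decoder takes both the current input and its previous output, and predicts in a loop

Position-wise feed-forward layer

The other core sublayer of the EncoderLayer is the Feed Forward Layer, which we introduce now.

After the attention operation, every layer in the encoder and decoder contains a fully connected feed-forward network. It applies the same operation to the vector at each position separately: two linear transformations with a ReLU activation in between: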

$$
\mathrm{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2
$$

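The Feed Forward Layer consists of just two fully connected layers. The key point is the difference from attention. Each time-step output of the Attention module integrates information from all time steps. The Feed Forward Layer only further processes each time step's own features, independently of the other time steps.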
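The "independent of other time steps" property can be checked with a small NumPy sketch (random weights, illustrative sizes). Applying the FFN to the whole sequence gives the same result as applying it to each position separately. Changing one position leaves the outputs at the other positions untouched.

```python
import numpy as np

def ffn(x, W1, b1, W2, b2):
    """FFN(x) = max(0, x W1 + b1) W2 + b2, applied over the last dimension."""
    return np.maximum(0, x @ W1 + b1) @ W2 + b2

rng = np.random.default_rng(4)
seq_len, d_model, d_ff = 5, 8, 32
W1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)
x = rng.normal(size=(seq_len, d_model))

y_all = ffn(x, W1, b1, W2, b2)                                           # whole sequence at once
y_each = np.stack([ffn(x[t], W1, b1, W2, b2) for t in range(seq_len)])  # one position at a time
print(np.allclose(y_all, y_each))   # True

# Perturb position 0 only: outputs at positions 1..4 do not change
x2 = x.copy()
x2[0] += 1.0
print(np.allclose(ffn(x2, W1, b1, W2, b2)[1:], y_all[1:]))   # True
```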

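Masks and their role

A mask has no fixed size and usually contains only 0s and 1s, marking whether a position is masked. In the code below, 1 (True) marks a position that may be attended to and 0 marks a masked one. Masks serve two purposes: hiding the invalid padding region, and hiding information from the "future". The mask in the Encoder mainly serves the first purpose; the mask in the Decoder serves both.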

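- Hiding the invalid padding region: training is done in batches. Take machine translation as an example: the samples in one batch very likely have different input lengths. We therefore set a maximum sentence length and fill the empty part with padding. The padded region is meaningless in both Encoder and Decoder computations, so we mark it with the mask and suppress the responses there.

- Hiding information from the future: as shown above, attention combines the computations of all time steps. During decoding it could therefore see future information, which must not happen. This case also needs a mask.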
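A NumPy sketch of how the two masks combine for a decoder input follows. It uses the same convention as `make_std_mask` and `subsequent_mask` in the code later (True/1 = may attend, False/0 = masked). The token ids are made up.

```python
import numpy as np

PAD = 0
tgt = np.array([[1, 4, 2, 0, 0],      # 2 target sequences; 0 is padding
                [1, 3, 5, 6, 2]])
T = tgt.shape[1]

pad_mask = (tgt != PAD)[:, None, :]                      # (batch, 1, T): True where the token is real
future_mask = np.tril(np.ones((1, T, T), dtype=bool))    # (1, T, T): True on/below the diagonal
tgt_mask = pad_mask & future_mask                        # broadcasts to (batch, T, T)

print(tgt_mask.shape)           # (2, 5, 5)
print(tgt_mask[0].astype(int))  # row t: positions that time step t may attend to
```

Where the mask is 0, the score is replaced by a large negative number (-1e9 in the `attention` function below) before the softmax. Those positions therefore get (near-)zero weight.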

Vanilla Transformer code implementation

```python
# The training demo below imports this file as models/transformer_basic.py
import math, copy, time

import numpy as np
import torch
from torch import nn
import torch.nn.functional as F

# Transformer model, see (2017)Attention Is All You Need https://arxiv.org/abs/1706.03762
# The code is from: https://datawhalechina.github.io/dive-into-cv-pytorch/#/chapter06_transformer/6_1_hello_transformer


# Embedding layer
class Embeddings(nn.Module):
    '''
    Extract features from the input sequence and represent them as vectors (word embeddings)
    '''
    def __init__(self, d_model, vocab):
        '''
        d_model: dimension of each embedding vector
        vocab: size of the dictionary of embeddings
        '''
        super(Embeddings, self).__init__()
        # the predefined torch.nn.Embedding layer, https://pytorch.org/docs/stable/generated/torch.nn.Embedding.html?highlight=nn%20embedding#torch.nn.Embedding
        self.lut = nn.Embedding(vocab, d_model)
        self.d_model = d_model

    def forward(self, x):
        embedds = self.lut(x)
        return embedds * math.sqrt(self.d_model)


# Positional encoding layer
class PositionalEncoding(nn.Module):
    '''
    Provide the order of each word/time step in the input sequence
    '''
    def __init__(self, d_model, dropout, max_len=5000):
        '''
        d_model: dimension of the word embedding
        dropout: dropout rate
        max_len: maximum length of a sentence
        '''
        super(PositionalEncoding, self).__init__()
        self.dropout = nn.Dropout(p=dropout)

        # compute the positional encodings
        pe = torch.zeros(max_len, d_model)                  # (max_len, d_model)
        position = torch.arange(0, max_len).unsqueeze(1)    # (max_len, 1)
        div_term = torch.exp(torch.arange(0, d_model, 2) *
                             -(math.log(10000.0) / d_model))  # (d_model/2,) = 1 / 10000^(2i/d_model)
        pe[:, 0::2] = torch.sin(position * div_term)  # (max_len, d_model/2), column vector times row vector
        pe[:, 1::2] = torch.cos(position * div_term)  # (max_len, d_model/2)
        pe = pe.unsqueeze(0)                          # (1, max_len, d_model)
        self.register_buffer('pe', pe)

    def forward(self, x):
        x = x + self.pe[:, :x.size(1)].requires_grad_(False)
        return self.dropout(x)


# helper that makes N deep copies of a module
def clones(module, N):
    "Produce N identical layers."
    return nn.ModuleList([copy.deepcopy(module) for _ in range(N)])


class SublayerConnection(nn.Module):
    """
    Residual connection + layer normalization around a sublayer
    """
    def __init__(self, size, dropout):
        super(SublayerConnection, self).__init__()
        self.norm = LayerNorm(size)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, sublayer):
        "Apply residual connection to any sublayer with the same size."
        # scheme of the original paper
        # sublayer_out = sublayer(x)
        # x_norm = self.norm(x + self.dropout(sublayer_out))

        # slightly adjusted version used here
        sublayer_out = sublayer(x)
        sublayer_out = self.dropout(sublayer_out)
        x_norm = x + self.norm(sublayer_out)
        return x_norm


# Attention
def attention(query, key, value, mask=None, dropout=None):
    "Compute 'Scaled Dot Product Attention'"
    d_k = query.size(-1)
    scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(d_k)
    if mask is not None:
        # masked positions (mask == 0) get a very negative score, i.e. ~0 weight after softmax
        scores = scores.masked_fill(mask == 0, -1e9)
    p_attn = F.softmax(scores, dim=-1)
    if dropout is not None:
        p_attn = dropout(p_attn)
    return torch.matmul(p_attn, value), p_attn


class MultiHeadedAttention(nn.Module):
    def __init__(self, h, d_model, dropout=0.1):
        "Take in model size and number of heads."
        super(MultiHeadedAttention, self).__init__()
        assert d_model % h == 0
        # We assume d_v always equals d_k
        self.d_k = d_model // h
        self.h = h
        self.linears = clones(nn.Linear(d_model, d_model), 4)
        self.attn = None
        self.dropout = nn.Dropout(p=dropout)

    def forward(self, query, key, value, mask=None):
        "Implements Figure 2"
        if mask is not None:
            # Same mask applied to all h heads.
            mask = mask.unsqueeze(1)
        nbatches = query.size(0)

        # 1) Do all the linear projections in batch from d_model => h x d_k
        query, key, value = \
            [l(x).view(nbatches, -1, self.h, self.d_k).transpose(1, 2)
             for l, x in zip(self.linears, (query, key, value))]

        # 2) Apply attention on all the projected vectors in batch.
        x, self.attn = attention(query, key, value, mask=mask,
                                 dropout=self.dropout)

        # 3) "Concat" using a view and apply a final linear.
        x = x.transpose(1, 2).contiguous() \
             .view(nbatches, -1, self.h * self.d_k)
        return self.linears[-1](x)


class PositionwiseFeedForward(nn.Module):
    "Implements FFN equation."
    def __init__(self, d_model, d_ff, dropout=0.1):
        super(PositionwiseFeedForward, self).__init__()
        self.w_1 = nn.Linear(d_model, d_ff)
        self.w_2 = nn.Linear(d_ff, d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x):
        return self.w_2(self.dropout(F.relu(self.w_1(x))))


# We employ a residual connection around each of the two sub-layers, followed by layer normalization
class LayerNorm(nn.Module):
    "Construct a layernorm module (See citation for details)."
    def __init__(self, feature_size, eps=1e-6):
        super(LayerNorm, self).__init__()
        self.a_2 = nn.Parameter(torch.ones(feature_size))
        self.b_2 = nn.Parameter(torch.zeros(feature_size))
        self.eps = eps

    def forward(self, x):
        mean = x.mean(-1, keepdim=True)
        std = x.std(-1, keepdim=True)
        return self.a_2 * (x - mean) / (std + self.eps) + self.b_2


def subsequent_mask(size):
    "Mask out subsequent positions."
    attn_shape = (1, size, size)
    subsequent_mask = np.triu(np.ones(attn_shape), k=1).astype('uint8')
    return torch.from_numpy(subsequent_mask) == 0


# Encoder
class Encoder(nn.Module):
    """
    Encoder
    The encoder is composed of a stack of N=6 identical layers.
    """
    def __init__(self, layer, N):
        super(Encoder, self).__init__()
        # the encoder layer is passed in by the caller; we clone it N times and stack the copies into the full Encoder
        self.layers = clones(layer, N)
        self.norm = LayerNorm(layer.size)

    def forward(self, x, mask):
        "Pass the input (and mask) through each layer in turn."
        for layer in self.layers:
            x = layer(x, mask)
        return self.norm(x)


class EncoderLayer(nn.Module):
    "EncoderLayer is made up of two sublayer: self-attn and feed forward"
    def __init__(self, size, self_attn, feed_forward, dropout):
        super(EncoderLayer, self).__init__()
        self.self_attn = self_attn
        self.feed_forward = feed_forward
        self.sublayer = clones(SublayerConnection(size, dropout), 2)
        self.size = size  # embedding dimension of the model, default 512

    def forward(self, x, mask):
        # attention sub layer
        x = self.sublayer[0](x, lambda x: self.self_attn(x, x, x, mask))
        # feed forward sub layer
        z = self.sublayer[1](x, self.feed_forward)
        return z


# Decoder
# The decoder is also composed of a stack of N=6 identical layers.
class Decoder(nn.Module):
    "Generic N layer decoder with masking."
    def __init__(self, layer, N):
        super(Decoder, self).__init__()
        self.layers = clones(layer, N)
        self.norm = LayerNorm(layer.size)

    def forward(self, x, memory, src_mask, tgt_mask):
        for layer in self.layers:
            x = layer(x, memory, src_mask, tgt_mask)
        return self.norm(x)


class DecoderLayer(nn.Module):
    "Decoder is made of self-attn, src-attn, and feed forward (defined below)"
    def __init__(self, size, self_attn, src_attn, feed_forward, dropout):
        super(DecoderLayer, self).__init__()
        self.size = size
        self.self_attn = self_attn
        self.src_attn = src_attn
        self.feed_forward = feed_forward
        self.sublayer = clones(SublayerConnection(size, dropout), 3)

    def forward(self, x, memory, src_mask, tgt_mask):
        "Follow Figure 1 (right) for connections."
        m = memory
        x = self.sublayer[0](x, lambda x: self.self_attn(x, x, x, tgt_mask))  # masked self-attention
        x = self.sublayer[1](x, lambda x: self.src_attn(x, m, m, src_mask))   # query from decoder, key/value from encoder
        return self.sublayer[2](x, self.feed_forward)


class Generator(nn.Module):
    "Define standard linear + softmax generation step."
    def __init__(self, d_model, vocab):
        super(Generator, self).__init__()
        self.proj = nn.Linear(d_model, vocab)

    def forward(self, x):
        return F.log_softmax(self.proj(x), dim=-1)


# Model Architecture
class EncoderDecoder(nn.Module):
    """
    A standard Encoder-Decoder architecture.
    Base for this and many other models.
    """
    def __init__(self, encoder, decoder, src_embed, tgt_embed, generator):
        super(EncoderDecoder, self).__init__()
        self.encoder = encoder
        self.decoder = decoder
        self.src_embed = src_embed  # input embedding module (input embedding + positional encoding)
        self.tgt_embed = tgt_embed  # output embedding module
        self.generator = generator  # output generation module

    def forward(self, src, tgt, src_mask, tgt_mask):
        "Take in and process masked src and target sequences."
        memory = self.encode(src, src_mask)
        res = self.decode(memory, src_mask, tgt, tgt_mask)
        return res

    def encode(self, src, src_mask):
        src_embedds = self.src_embed(src)
        return self.encoder(src_embedds, src_mask)

    def decode(self, memory, src_mask, tgt, tgt_mask):
        target_embedds = self.tgt_embed(tgt)
        return self.decoder(target_embedds, memory, src_mask, tgt_mask)


# Full Model
def make_model(src_vocab, tgt_vocab, N=6, d_model=512, d_ff=2048, h=8, dropout=0.1):
    """
    Build the model
    params:
        src_vocab: vocabulary size of the encoder input sequence
        tgt_vocab: vocabulary size of the decoder input sequence
        N: number of basic blocks stacked in the encoder and in the decoder
        d_model: embedding size used in the model, default 512
        d_ff: hidden size of the FeedForward layer, default 2048
        h: number of heads in MultiHeadAttention; d_model must be divisible by h
        dropout: dropout rate
    """
    c = copy.deepcopy
    attn = MultiHeadedAttention(h, d_model)
    ff = PositionwiseFeedForward(d_model, d_ff, dropout)
    position = PositionalEncoding(d_model, dropout)
    model = EncoderDecoder(
        Encoder(EncoderLayer(d_model, c(attn), c(ff), dropout), N),
        Decoder(DecoderLayer(d_model, c(attn), c(attn), c(ff), dropout), N),
        nn.Sequential(Embeddings(d_model, src_vocab), c(position)),
        nn.Sequential(Embeddings(d_model, tgt_vocab), c(position)),
        Generator(d_model, tgt_vocab))

    # This was important from their code.
    # Initialize parameters with Glorot / fan_avg.
    for p in model.parameters():
        if p.dim() > 1:
            nn.init.xavier_uniform_(p)
    return model


if __name__ == "__main__":
    print("\n-----------------------")
    print("test subsequent_mask")
    temp_mask = subsequent_mask(4)
    print(temp_mask)

    print("\n-----------------------")
    print("test build model")
    tmp_model = make_model(10, 10, 2)
    print(tmp_model)
```

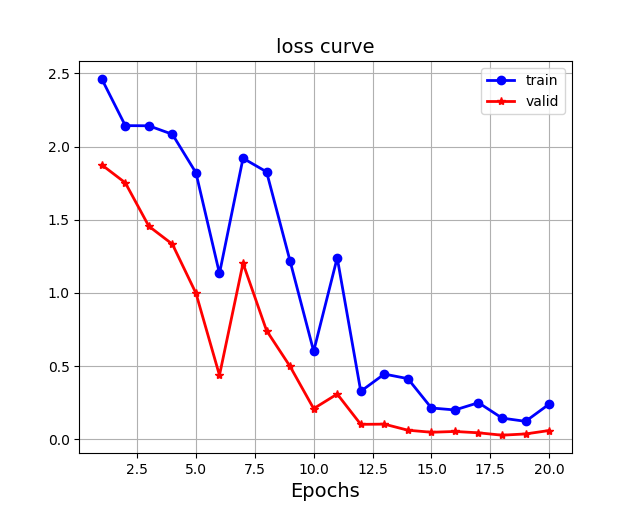

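Toy-level task: number sequence transformation

Next we use a hand-made, toy-level task to get hands-on experience training a Transformer. This deepens our understanding and verifies that the code above works.

Task description: the model learns on number sequences. The goal is for it to output the input sequence with its first element removed. For example, for the input [1,2,3,4,5], we want the model to output [2,3,4,5].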
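To see how one training example is shifted, here is a plain-Python sketch. It uses the example sequence from the `data_gen` comments in the code below, where 1 is the start symbol.

```python
src = [3, 4, 2, 6, 4, 5]      # example input sequence
tgt = [1] + src[1:]           # first element replaced by the start symbol 1
trg = tgt[:-1]                # decoder input: drop the last token
trg_y = tgt[1:]               # decoder target: drop the start symbol
print(trg)     # [1, 4, 2, 6, 4]
print(trg_y)   # [4, 2, 6, 4, 5], i.e. the input without its first element
```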

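Step 1: build and generate a synthetic dataset

Step 2: build the Transformer model and do the related preparation

Step 3: run the model for training and evaluation

Step 4: use the model for greedy decoding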

```python
'''
#Toy-level number sequence transformation via transformer
@author: anshengmath@163.com
The code is from https://github.com/datawhalechina/dive-into-cv-pytorch/blob/master/code/chapter06_transformer/6.1_hello_transformer/first_train_demo.py
'''
import time

import numpy as np
import torch
import torch.nn as nn
import matplotlib.pyplot as plt

from models.transformer_basic import make_model, subsequent_mask


class Batch:
    "Object for holding a batch of data with mask during training."
    def __init__(self, src, trg=None, pad=0):
        self.src = src
        self.src_mask = (src != pad).unsqueeze(-2)
        if trg is not None:
            self.trg = trg[:, :-1]   # decoder input (expected output without its last token) (2, 9)
            self.trg_y = trg[:, 1:]  # expected decoder output (trg with the start symbol removed) (2, 9)
            self.trg_mask = self.make_std_mask(self.trg, pad)  # (2, 9, 9)
            self.ntokens = (self.trg_y != pad).sum().item()    # number of non-padding target tokens (Python int)

    @staticmethod
    def make_std_mask(tgt, pad):
        """
        Create a mask to hide padding and future words.
        Both padding and future words are marked with 0 in the mask.
        """
        tgt_mask = (tgt != pad).unsqueeze(-2)
        tgt_mask = tgt_mask & subsequent_mask(tgt.size(-1)).type_as(tgt_mask.data)  # (2, 1, 9) & (1, 9, 9) = (2, 9, 9)
        return tgt_mask


# Synthetic Data
def data_gen(V, batch, nbatches, device):
    """
    Generate random data for a src-tgt copy task.
    V: vocabulary size; token ids lie in [0, V-1], and 0 is reserved as the padding symbol
    slen: length of each generated sequence
    batch: batch_size
    nbatches: number of batches/iterations per epoch
    """
    slen = 10
    for i in range(nbatches):
        data = torch.from_numpy(np.random.randint(1, V, size=(batch, slen))).long()
        # The output is the input without its first element, i.e. shifted by one step,
        # and a start symbol is placed at the first time step of the target.
        # E.g. if the input is [3, 4, 2, 6, 4, 5],
        # the ground-truth sequence is [1, 4, 2, 6, 4, 5]
        tgt = data.clone()
        tgt[:, 0] = 1
        src = data
        src, tgt = src.to(device), tgt.to(device)
        yield Batch(src, tgt, 0)


# test data_gen
data_iter = data_gen(V=5, batch=2, nbatches=10, device="cpu")
for i, batch in enumerate(data_iter):
    print("\nbatch.src")
    print(batch.src.shape)       # torch.Size([2, 10]) encoder input
    print(batch.src)
    print("\nbatch.trg")
    print(batch.trg.shape)       # torch.Size([2, 9]) decoder input (first column set to 1, last column dropped)
    print(batch.trg)
    print("\nbatch.trg_y")
    print(batch.trg_y.shape)     # torch.Size([2, 9]) expected decoder output (first column dropped)
    print(batch.trg_y)
    print("\nbatch.src_mask")
    print(batch.src_mask.shape)  # torch.Size([2, 1, 10]) encoder input mask, hides invalid padding
    print(batch.src_mask)
    print("\nbatch.trg_mask")
    print(batch.trg_mask.shape)  # torch.Size([2, 9, 9]) decoder input mask, hides invalid padding & "future" information
    print(batch.trg_mask)
    break


class NoamOpt:
    "Optim wrapper that implements rate."
    def __init__(self, model_size, factor, warmup, optimizer):
        self.optimizer = optimizer
        self._step = 0
        self.warmup = warmup          # 400
        self.factor = factor          # 1
        self.model_size = model_size  # d_model
        self._rate = 0

    def step(self):
        "Update parameters and rate"
        self._step += 1
        rate = self.rate()
        for p in self.optimizer.param_groups:
            p['lr'] = rate
        self._rate = rate
        self.optimizer.step()

    def rate(self, step=None):
        "Implement `lrate` above"
        if step is None:
            step = self._step
        return self.factor * \
            (self.model_size ** (-0.5) *
             min(step ** (-0.5), step * self.warmup ** (-1.5)))


def get_std_opt(model):
    return NoamOpt(model.src_embed[0].d_model, 2, 4000,
                   torch.optim.Adam(model.parameters(), lr=0, betas=(0.9, 0.98), eps=1e-9))


class LabelSmoothing(nn.Module):
    "Implement label smoothing."
    def __init__(self, size, padding_idx, smoothing=0.0):
        super(LabelSmoothing, self).__init__()
        self.criterion = nn.KLDivLoss(reduction='sum')  # KL divergence summed over all elements
        self.padding_idx = padding_idx  # 0
        self.confidence = 1.0 - smoothing
        self.smoothing = smoothing      # 0
        self.size = size                # vocabulary size
        self.true_dist = None

    def forward(self, x, target):
        assert x.size(1) == self.size
        true_dist = x.data.clone()
        true_dist.fill_(self.smoothing / (self.size - 2))
        true_dist.scatter_(1, target.data.unsqueeze(1).type(torch.int64), self.confidence)
        true_dist[:, self.padding_idx] = 0
        mask = torch.nonzero(target.data == self.padding_idx)  # (num_padded_positions, 1)
        if mask.numel() > 0:
            true_dist.index_fill_(0, mask.squeeze(-1), 0.0)    # padded positions contribute no loss
        self.true_dist = true_dist
        return self.criterion(x, true_dist.requires_grad_(False))


class SimpleLossCompute:
    "A simple loss compute and train function."
    def __init__(self, generator, criterion, opt=None):
        self.generator = generator
        self.criterion = criterion
        self.opt = opt

    def __call__(self, x, y, norm):
        """
        norm: normalization factor of the loss; the number of valid tokens in the batch is enough
        """
        x = self.generator(x)
        x_ = x.contiguous().view(-1, x.size(-1))
        y_ = y.contiguous().view(-1)
        loss = self.criterion(x_, y_)
        loss = loss / norm
        if self.opt is not None:  # backpropagate and update only during training
            loss.backward()
            self.opt.step()
            self.opt.optimizer.zero_grad()
        return loss.item() * norm


def run_epoch(data_iter, model, loss_compute, device=None):
    "Standard Training and Logging Function"
    start = time.time()
    total_tokens = 0
    total_loss = 0
    tokens = 0
    for i, batch in enumerate(data_iter):
        out = model.forward(batch.src, batch.trg,
                            batch.src_mask, batch.trg_mask)
        loss = loss_compute(out, batch.trg_y, batch.ntokens)
        total_loss += loss
        total_tokens += batch.ntokens
        tokens += batch.ntokens
        if i % 50 == 1:
            elapsed = time.time() - start
            print("Epoch Step: %d Loss: %f Tokens per Sec: %f" %
                  (i, loss / batch.ntokens, tokens / elapsed))
            start = time.time()
            tokens = 0
    return total_loss / total_tokens


# Train the model
device = 'cuda' if torch.cuda.is_available() else 'cpu'
print('Using {} device'.format(device))

batch_size = 32
V = 11                     # vocabulary size
iter_per_train_epoch = 30  # batches/iterations per training epoch
iter_per_valid_epoch = 10  # batches/iterations per validation epoch

criterion = LabelSmoothing(size=V, padding_idx=0, smoothing=0.0)
model = make_model(V, V, N=2)
model_opt = NoamOpt(model.src_embed[0].d_model, 1, 400,
                    torch.optim.Adam(model.parameters(), lr=0, betas=(0.9, 0.98), eps=1e-9))
model.to(device)

train_mean_loss = []
valid_mean_loss = []
for epoch in range(20):  # train for 20 epochs
    print(f"\nepoch {epoch+1}")
    print("train...")
    model.train()
    data_iter = data_gen(V, batch_size, iter_per_train_epoch, device)
    loss_compute = SimpleLossCompute(model.generator, criterion, model_opt)
    train_mean_loss.append(run_epoch(data_iter, model, loss_compute, device))

    print("valid...")
    model.eval()
    valid_data_iter = data_gen(V, batch_size, iter_per_valid_epoch, device)
    valid_loss_compute = SimpleLossCompute(model.generator, criterion, None)
    with torch.no_grad():  # no gradients are needed for validation
        valid_mean_loss.append(run_epoch(valid_data_iter, model, valid_loss_compute, device))
    print(f"valid loss: {valid_mean_loss[-1]}")

plt.figure()
plt.plot(range(1, 21), train_mean_loss, 'b-o', label="train", linewidth=2)
plt.plot(range(1, 21), valid_mean_loss, 'r-*', label="valid", linewidth=2)
plt.grid(True, linestyle='-')
plt.xlabel('Epochs', fontsize=14)
plt.legend()
plt.title("loss curve", fontsize=14)
plt.show()
```

Training and validation loss curves of the network
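`NoamOpt` implements the warm-up schedule $lrate = factor \cdot d_{model}^{-0.5} \cdot \min(step^{-0.5}, step \cdot warmup^{-1.5})$. The pure-Python sketch below uses the settings from the training code above: `d_model=512` (the `make_model` default), `factor=1`, `warmup=400`. `noam_rate` is a hypothetical stand-alone copy of `NoamOpt.rate`. It shows that the learning rate rises linearly, peaks exactly at the warm-up step, and then decays.

```python
def noam_rate(step, d_model=512, factor=1.0, warmup=400):
    """factor * d_model^-0.5 * min(step^-0.5, step * warmup^-1.5), for step >= 1."""
    return factor * d_model ** -0.5 * min(step ** -0.5, step * warmup ** -1.5)

rates = [noam_rate(s) for s in range(1, 2001)]
peak_step = rates.index(max(rates)) + 1   # +1 because the steps start at 1
print(peak_step)   # 400
```

Before `warmup` the linear term `step * warmup^-1.5` is the smaller one, so the rate grows. After it, `step^-0.5` takes over and the rate decays.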

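After training the model, we use the greedy decoding strategy to make predictions.

There is more than one way to obtain predictions at inference time; greedy decoding is the most common. It was already introduced in the Model input part (section 6.1.2 of the tutorial). Start from a start-of-sentence symbol and run the decoder to get one output. Append that output to the decoder's input and run again to get a new output. Repeat until the end-of-sentence symbol is predicted, then concatenate all predictions to get the complete result. The toy task has no end symbol, so `greedy_decode` below simply stops once the sequence reaches `max_len` tokens.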

```python
# Greedy decode to test if the whole input sequence can be predicted to be completely accurate
def greedy_decode(model, src, src_mask, max_len, start_symbol):
    memory = model.encode(src, src_mask)
    # ys is the sequence generated so far: it starts with only the start symbol,
    # and each new prediction is appended to its end
    ys = torch.ones(1, 1).fill_(start_symbol).type_as(src.data)
    for i in range(max_len - 1):
        out = model.decode(memory,
                           src_mask,
                           ys,                                              # target
                           subsequent_mask(ys.size(1)).type_as(src.data))   # target mask
        prob = model.generator(out[:, -1])       # log-probabilities for the last position only
        _, next_word = torch.max(prob, dim=1)
        next_word = next_word.item()
        ys = torch.cat([ys, torch.ones(1, 1).type_as(src.data).fill_(next_word)], dim=1)
    return ys


print("greedy decode")
model.eval()
src = torch.LongTensor([[1, 2, 3, 4, 5, 6, 7, 8, 9, 10]]).to(device)
src_mask = torch.ones(1, 1, 10).to(device)
with torch.no_grad():
    pred_result = greedy_decode(model, src, src_mask, max_len=10, start_symbol=1)
print(pred_result[:, 1:])
```

Final network output:

```
greedy decode
tensor([[ 2, 3, 4, 5, 6, 7, 8, 9, 10]], device='cuda:0')
```

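Summary:

- This was my first NLP training task, and reading the code took me a long time. The label-smoothing class LabelSmoothing, the optimizer wrapper NoamOpt, the data generator data_gen that yields batches as an iterator, and the greedy_decode function were especially hard to follow. greedy_decode checks whether the whole input number sequence is predicted exactly. All of these are cleverly written and not easy to understand.

- Next, I plan to learn more about recent Transformer work in CV, especially image enhancement, segmentation and reconstruction.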

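Transformer variants in CV

- A Zhihu discussion on the characteristics and advantages of vision Transformers compared with CNNs:

"How do you view the prospects of Transformers in CV? Could they replace CNNs in the future?"

- Recent representative work on vision Transformers:

  - Image classification: iGPT, ViT, DeiT, BiT-L, etc.

  - Object detection: DETR

  - Semantic segmentation: SETR, CMSA

  - Medical image segmentation: nnFormer[1] (an interleaved architecture built on an empirical combination of self-attention and convolution), MedT[2], MISSFormer, TransUNet

  - Image enhancement (image super-resolution, image restoration): IPT[3], TTSR[4], SwinIR[5]

  - Image generation: iGPT[6], TransGAN[7]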

[1] nnFormer: Interleaved Transformer for Volumetric Segmentation

Code: https://github.com/282857341/nnFormer

Paper: https://arxiv.org/abs/2109.03201

[2] Medical Transformer: Gated Axial-Attention for Medical Image Segmentation

Code: github.com/jeya-maria-jose/Medical-Transformer

Paper: https://arxiv.org/abs/2102.10662

[3] H. Chen, Y. Wang, T. Guo, C. Xu, Y. Deng, Z. Liu, S. Ma, C. Xu, C. Xu, and W. Gao, “Pre-trained image processing transformer,” arXiv preprint arXiv:2012.00364, 2020

Paper: https://arxiv.org/abs/2012.00364

Code: https://github.com/huawei-noah/Pretrained-IPT

Code: https://github.com/ryanlu2240/Pretrained_IPT

[4] F. Yang, H. Yang, J. Fu, H. Lu, and B. Guo, “Learning texture transformer network for image super-resolution,” in CVPR, 2020

Paper: https://arxiv.org/abs/2006.04139

Code: https://github.com/researchmm/TTSR

[5] SwinIR: Image Restoration Using Swin Transformer

Paper: https://arxiv.org/abs/2108.10257

Code: https://github.com/JingyunLiang/SwinIR

[6] Chen, Mark, et al. “Generative pretraining from pixels.” International Conference on Machine Learning. PMLR, 2020.

Paper: http://proceedings.mlr.press/v119/chen20s/chen20s.pdf

Code: https://github.com/EugenHotaj/pytorch-generative/blob/master/pytorch_generative/models/autoregressive/image_gpt.py

[7] Y. Jiang, S. Chang, and Z. Wang, “Transgan: Two transformers can make one strong gan,” 2021

Code: VITA-Group/TransGAN

Paper: TransGAN: Two Transformers Can Make One Strong GAN

References

[1]. Vaswani, Ashish, et al. “Attention is all you need.” Advances in neural information processing systems. 2017.

[2]. Han, Kai, et al. “A survey on visual transformer.” arXiv preprint arXiv:2012.12556 (2020).

[3]. Khan, Salman, et al. “Transformers in vision: A survey.” arXiv preprint arXiv:2101.01169 (2021).
