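PyTorch's ResNet implementation lives in the models package of torchvision.

The available ResNet variants are:

Every ResNet listed in `__all__` has a corresponding constructor function: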

```python
def resnet18(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-18 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet18', BasicBlock, [2, 2, 2, 2], pretrained, progress,
                   **kwargs)


def resnet34(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-34 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet34', BasicBlock, [3, 4, 6, 3], pretrained, progress,
                   **kwargs)


def resnet50(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-50 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet50', Bottleneck, [3, 4, 6, 3], pretrained, progress,
                   **kwargs)


def resnet101(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-101 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet101', Bottleneck, [3, 4, 23, 3], pretrained, progress,
                   **kwargs)


def resnet152(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-152 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet152', Bottleneck, [3, 8, 36, 3], pretrained, progress,
                   **kwargs)


def resnext50_32x4d(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNeXt-50 32x4d model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs['groups'] = 32
    kwargs['width_per_group'] = 4
    return _resnet('resnext50_32x4d', Bottleneck, [3, 4, 6, 3],
                   pretrained, progress, **kwargs)


def resnext101_32x8d(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNeXt-101 32x8d model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs['groups'] = 32
    kwargs['width_per_group'] = 8
    return _resnet('resnext101_32x8d', Bottleneck, [3, 4, 23, 3],
                   pretrained, progress, **kwargs)
```

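I. A brief introduction to the residual network (ResNet):

A ResNet is built by stacking many copies of the following structure:

When this structure contains 1x1 convolutions we call it a Bottleneck; when it does not, we call it a BasicBlock. Residual networks are generally built from these two structures.

Structure of a residual network (ResNet-18 as an example):

(In the color diagram of the ResNet-18 structure, dashed curves mark shortcut connections between feature maps of different dimensions, and solid curves mark connections between feature maps of the same dimensions.)

The figure above highlights several key properties of ResNet:

1. ResNet-18 is built entirely from BasicBlocks, and the table shows that only ResNets with 50 or more layers are built from Bottlenecks.

2. In every ResNet variant, the convolution channel counts (input and output alike) are multiples of 64; the only exception is the 3-channel image fed into conv1.

3. Apart from the 7x7 kernel of conv1, every ResNet variant uses only 3x3 and 1x1 convolution kernels.

4. Whatever the variant, apart from the shared stem (conv1) the network consists of four stages (conv2_x, conv3_x, conv4_x, conv5_x) whose starting channel counts are 64, 128, 256 and 512 respectively. This point is very important; we will call it the "base channel count".

Knowing these points makes the ResNet source code easier to follow.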
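To make point 4 concrete, here is a minimal arithmetic sketch (plain Python, no PyTorch needed). It assumes the expansion factors that appear later in the source (1 for BasicBlock, 4 for Bottleneck); the names `BASE_PLANES` and `stage_output_channels` are made up for this illustration and do not exist in torchvision.

```python
# Base channel counts of the four stages (conv2_x .. conv5_x), as listed above.
BASE_PLANES = [64, 128, 256, 512]


def stage_output_channels(expansion):
    """Output channels of each stage: base channel count times the block's expansion."""
    return [planes * expansion for planes in BASE_PLANES]


basic = stage_output_channels(1)       # BasicBlock networks (ResNet-18/34)
bottleneck = stage_output_channels(4)  # Bottleneck networks (ResNet-50/101/152)

print(basic)       # [64, 128, 256, 512]
print(bottleneck)  # [256, 512, 1024, 2048]
```

The last entry of each list is also the input size of the final fully connected layer, which the source writes as 512 * block.expansion.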

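II. Code walkthrough

1. Two key building blocks: BasicBlock and Bottleneck

The ResNet module sits in torchvision's models package. Because the convolutions inside the residual blocks are always either 1x1 or 3x3, the source starts by defining helper functions for these two operations: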

```python
def conv3x3(in_planes, out_planes, stride=1, groups=1, dilation=1):
    """3x3 convolution with padding"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=dilation, groups=groups, bias=False, dilation=dilation)


def conv1x1(in_planes, out_planes, stride=1):
    """1x1 convolution"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)
```

The main attractions, BasicBlock and Bottleneck, are both written as classes:

BasicBlock:

```python
class BasicBlock(nn.Module):
    expansion = 1  # expansion is one of the core differences between BasicBlock and Bottleneck

    def __init__(self, inplanes, planes, stride=1, downsample=None, groups=1,
                 base_width=64, dilation=1, norm_layer=None):
        super(BasicBlock, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if groups != 1 or base_width != 64:
            raise ValueError('BasicBlock only supports groups=1 and base_width=64')
        if dilation > 1:
            raise NotImplementedError("Dilation > 1 not supported in BasicBlock")
        # Both self.conv1 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = norm_layer(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = norm_layer(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out
```

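Look at the line self.downsample = downsample. By default downsample=None, meaning no downsampling is applied. There is one case where it is needed: when the shortcut branch x of a BasicBlock is about to be added to the output but x and the output have different shapes. Spoiler: in ResNet the downsample is a 1x1 convolution (followed by a normalization layer) that converts x to the required channel count, and its stride also brings x to the right spatial size when the block uses a stride. Why is this necessary? Because x has to be added to the output at the end, and tensors with different channel counts cannot be added. So downsample exists specifically to make the shape of x agree with the output.

Next, let us analyze how a BasicBlock changes the dimensions of the feature map:

A BasicBlock passes through two 3x3 convolutions, but the first one is given the block's stride (spoiler: the stride is 2 for the first block of layer2, layer3 and layer4, and 1 otherwise), while the second is given no stride and therefore uses the default (spoiler: the default stride is 1). Enough spoilers; let us look directly at the definition of conv3x3:

As the definition shows, padding is set equal to dilation, which defaults to 1, so padding is also 1 by default. According to the convolution output-size formula W_out = floor((W - F + 2P) / S) + 1:

W is the length (or width) of the feature map, F is the length (or width) of the kernel, P is the padding, and S is the stride. The size of the feature map after the convolution therefore depends strongly on the stride. The kernel is 3x3, so F=3, and P=1. When S=1, W is unchanged; when S=2, W is halved. The Bottleneck below follows the same principle, so it is not repeated there.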
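The arithmetic above can be checked with a short sketch. It uses the standard convolution output-size formula floor((W - F + 2P) / S) + 1; the helper name `conv_output_size` is made up for this illustration and is not part of torchvision.

```python
def conv_output_size(w, f, p, s):
    """Output length of a convolution: floor((W - F + 2P) / S) + 1."""
    return (w - f + 2 * p) // s + 1


# A 3x3 kernel with padding 1, applied to a 56x56 feature map:
print(conv_output_size(56, f=3, p=1, s=1))  # 56 (stride 1 keeps the size)
print(conv_output_size(56, f=3, p=1, s=2))  # 28 (stride 2 halves it)
```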

The BasicBlock, then, looks like this:

Next is the Bottleneck:

```python
class Bottleneck(nn.Module):
    expansion = 4  # expansion is one of the core differences between BasicBlock and Bottleneck

    def __init__(self, inplanes, planes, stride=1, downsample=None, groups=1,
                 base_width=64, dilation=1, norm_layer=None):
        super(Bottleneck, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        width = int(planes * (base_width / 64.)) * groups
        # Both self.conv2 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv1x1(inplanes, width)
        self.bn1 = norm_layer(width)
        self.conv2 = conv3x3(width, width, stride, groups, dilation)
        self.bn2 = norm_layer(width)
        self.conv3 = conv1x1(width, planes * self.expansion)
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out
```

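The Bottleneck, then, looks like this:

The two core differences between BasicBlock and Bottleneck:

1. A BasicBlock consists of two 3x3 convolutions, whereas a Bottleneck consists of three convolutions: 1x1, 3x3 and 1x1.

2. The expansion of BasicBlock is 1, so its output channel count equals planes. The expansion of Bottleneck is 4, so its output channel count is 4 times planes, the base channel count.

Keeping these in mind makes the code easier to follow.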
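As a rough illustration of these two differences, the sketch below lists the (input, output) channel pairs of each convolution in a block. It mirrors the arithmetic of the two constructors shown above, including the width computation of Bottleneck, but the function names are made up for this illustration and nothing here touches PyTorch.

```python
def basic_block_channels(inplanes, planes):
    """(in, out) channels of the two 3x3 convolutions in a BasicBlock."""
    return [(inplanes, planes), (planes, planes)]


def bottleneck_channels(inplanes, planes, groups=1, base_width=64, expansion=4):
    """(in, out) channels of the 1x1, 3x3 and 1x1 convolutions in a Bottleneck."""
    # Same width formula as in Bottleneck.__init__ above.
    width = int(planes * (base_width / 64.0)) * groups
    return [(inplanes, width), (width, width), (width, planes * expansion)]


# First block of layer1, where the input has 64 channels and planes is 64:
print(basic_block_channels(64, 64))   # [(64, 64), (64, 64)]
print(bottleneck_channels(64, 64))    # [(64, 64), (64, 64), (64, 256)]

# ResNeXt-50 32x4d uses groups=32 and width_per_group=4, which widens the 3x3 conv:
print(bottleneck_channels(64, 64, groups=32, base_width=4))
# [(64, 128), (128, 128), (128, 256)]
```

In every case the Bottleneck ends at planes * 4 channels, while the BasicBlock ends at planes.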

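About downsample:

Both BasicBlock and Bottleneck end by checking whether x needs to go through downsample, because x can only be added to the output of the main branch once its channel count matches.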
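The decision of whether a block receives a downsample module is made in ResNet._make_layer(), shown further down, with the condition `stride != 1 or self.inplanes != planes * block.expansion`. The sketch below restates that condition as a standalone function so a few cases can be tried by hand; `needs_downsample` is a hypothetical helper name, not a torchvision function.

```python
def needs_downsample(inplanes, planes, expansion, stride=1):
    """True when the shortcut x must be reshaped before it can be added to the output."""
    return stride != 1 or inplanes != planes * expansion


# ResNet-18, first block of layer1: 64 -> 64 channels, stride 1.
print(needs_downsample(64, 64, expansion=1))             # False
# ResNet-50, first block of layer1: 64 -> 256 channels, stride 1.
print(needs_downsample(64, 64, expansion=4))             # True
# ResNet-18, first block of layer2: 64 -> 128 channels, stride 2.
print(needs_downsample(64, 128, expansion=1, stride=2))  # True
# ResNet-50, second block of layer1: 256 -> 256 channels, stride 1.
print(needs_downsample(256, 64, expansion=4))            # False
```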

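2. The body of the network (the ResNet class)

Depending on its arguments, the ResNet class in the source becomes resnet18, resnet34, resnet50, resnet101 and so on.

This class is very important; to keep this part short, it is covered in detail further below.

3. Using ResNet through the PyTorch source: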

```python
from torchvision.models.resnet import resnet50, Bottleneck

resnet = resnet50(pretrained=True)
```

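These two short lines are all it takes to use ResNet. (This follows the torchvision source as it was when this article was written; since torchvision 0.13 the pretrained argument is deprecated in favor of weights.) Next, let us follow the source and trace how the code executes:

resnet50(pretrained=True) calls the resnet50 network, whose definition can be found in the torchvision.models.resnet module: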

```python
def resnet50(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-50 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet50', Bottleneck, [3, 4, 6, 3], pretrained, progress,
                   **kwargs)
```

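As we can see, resnet50() does only one thing: it calls _resnet() with a specific set of arguments.

That is, _resnet('resnet50', Bottleneck, [3, 4, 6, 3], pretrained, progress, **kwargs)

The first argument needs no explanation. The Bottleneck argument suggests that the second parameter of _resnet is the building block of the network, which is either BasicBlock or Bottleneck. To find out what [3,4,6,3] means, we need to read the definition of _resnet():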

```python
def _resnet(arch, block, layers, pretrained, progress, **kwargs):
    model = ResNet(block, layers, **kwargs)
    if pretrained:
        state_dict = load_state_dict_from_url(model_urls[arch],
                                              progress=progress)
        model.load_state_dict(state_dict)
    return model
```

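In _resnet(), the position where [3,4,6,3] was passed corresponds to the layers parameter, which is handed on to the ResNet class, the body of the residual network.

It then checks whether pretrained weights are requested (whether pretrained is True): if True, it loads the weights and returns the model; if False, it returns the model directly.

The block parameter of _resnet() is where BasicBlock or Bottleneck goes; in other words, block stands for either BasicBlock or Bottleneck.

In one sentence: _resnet() first instantiates the ResNet class to build a randomly initialized ResNet shell, loads weights into it if pretrained weights are requested, and otherwise returns the shell as is.

Taking resnet50 as an example, the arch argument of _resnet() is 'resnet50', and this key is used to look up the matching weight file in the model_urls dictionary for download.

The dictionary is as follows: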

===================

Now let us look closely at the code of the ResNet class:

ResNet:

```python
class ResNet(nn.Module):

    def __init__(self, block, layers, num_classes=1000, zero_init_residual=False,
                 groups=1, width_per_group=64, replace_stride_with_dilation=None,
                 norm_layer=None):
        super(ResNet, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer

        self.inplanes = 64
        self.dilation = 1
        if replace_stride_with_dilation is None:
            # each element in the tuple indicates if we should replace
            # the 2x2 stride with a dilated convolution instead
            replace_stride_with_dilation = [False, False, False]
        if len(replace_stride_with_dilation) != 3:
            raise ValueError("replace_stride_with_dilation should be None "
                             "or a 3-element tuple, got {}".format(replace_stride_with_dilation))
        self.groups = groups
        self.base_width = width_per_group
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3,
                               bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2,
                                       dilate=replace_stride_with_dilation[0])
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2,
                                       dilate=replace_stride_with_dilation[1])
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2,
                                       dilate=replace_stride_with_dilation[2])
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        # Zero-initialize the last BN in each residual branch,
        # so that the residual branch starts with zeros, and each residual block behaves like an identity.
        # This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677
        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck):
                    nn.init.constant_(m.bn3.weight, 0)
                elif isinstance(m, BasicBlock):
                    nn.init.constant_(m.bn2.weight, 0)

    def _make_layer(self, block, planes, blocks, stride=1, dilate=False):
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            self.dilation *= stride
            stride = 1
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                conv1x1(self.inplanes, planes * block.expansion, stride),
                norm_layer(planes * block.expansion),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample, self.groups,
                            self.base_width, previous_dilation, norm_layer))
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.inplanes, planes, groups=self.groups,
                                base_width=self.base_width, dilation=self.dilation,
                                norm_layer=norm_layer))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.reshape(x.size(0), -1)
        x = self.fc(x)

        return x
```

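At first glance it is hard to know where to start, but to find out the execution order of a PyTorch network, just read its forward method.

According to ResNet's forward code, the input first passes through conv1, bn1, relu and maxpool. From the earlier ResNet table we know that every variant, whether resnet18, resnet34, resnet50, resnet101 or another, must pass through these layers first. This part is static.

The four layers that follow are the dynamic part, and they determine which of resnet18, resnet34, resnet50, resnet101 and so on the network actually is.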

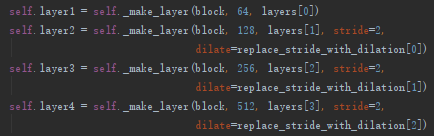

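In the dynamic part, every ResNet has four dynamic layers. Tracing how layer1, layer2, layer3 and layer4 are defined, we find:

layer1 to layer4 are all produced by calling _make_layer() with different arguments. Looking at those arguments, layers[0] to layers[3] are exactly the [3,4,6,3] passed in above: layers[0] is 3, layers[1] is 4, layers[2] is 6 and layers[3] is 3. Let us follow the definition of _make_layer() to see what these numbers mean: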

```python
def _make_layer(self, block, planes, blocks, stride=1, dilate=False):
    norm_layer = self._norm_layer
    downsample = None
    previous_dilation = self.dilation
    if dilate:
        self.dilation *= stride
        stride = 1
    if stride != 1 or self.inplanes != planes * block.expansion:
        downsample = nn.Sequential(
            conv1x1(self.inplanes, planes * block.expansion, stride),
            norm_layer(planes * block.expansion),
        )

    layers = []
    layers.append(block(self.inplanes, planes, stride, downsample, self.groups,
                        self.base_width, previous_dilation, norm_layer))
    self.inplanes = planes * block.expansion
    for _ in range(1, blocks):
        layers.append(block(self.inplanes, planes, groups=self.groups,
                            base_width=self.base_width, dilation=self.dilation,
                            norm_layer=norm_layer))

    return nn.Sequential(*layers)
```

(Note: the planes parameter of _make_layer() is the "base channel count", NOT the output channel count! The output channel count is planes * block.expansion.)
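To see the difference between planes and the actual output channels, the sketch below replays the channel bookkeeping of _make_layer() in plain Python for the ResNet-50 configuration. It assumes dilate=False throughout, and `trace_make_layer` is a hypothetical helper written for this illustration rather than anything torchvision provides.

```python
def trace_make_layer(inplanes, planes, blocks, expansion, stride=1):
    """Replay _make_layer()'s bookkeeping (dilate=False assumed).

    Returns (rows, new_inplanes); each row describes one block as
    (in_channels, out_channels, stride, has_downsample).
    """
    out_channels = planes * expansion
    has_downsample = stride != 1 or inplanes != out_channels
    rows = [(inplanes, out_channels, stride, has_downsample)]  # first block
    inplanes = out_channels                                    # self.inplanes update
    for _ in range(1, blocks):
        rows.append((inplanes, out_channels, 1, False))        # remaining blocks
    return rows, inplanes


# ResNet-50: Bottleneck (expansion 4) with layers = [3, 4, 6, 3].
inplanes = 64
for planes, blocks, stride in zip([64, 128, 256, 512], [3, 4, 6, 3], [1, 2, 2, 2]):
    rows, inplanes = trace_make_layer(inplanes, planes, blocks, expansion=4, stride=stride)
    print(planes, rows[0], rows[-1])

print(inplanes)  # 2048, the input size of the final fc layer
```

Only the first block of each layer changes the channel count or applies a stride, which is why it is the only one that gets a downsample module.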

Now consider blocks, the third parameter of _make_layer() (not counting self). It is used in this part of _make_layer():

```python
layers = []
layers.append(block(self.inplanes, planes, stride, downsample, self.groups,
                    self.base_width, previous_dilation, norm_layer))
self.inplanes = planes * block.expansion
for _ in range(1, blocks):
    layers.append(block(self.inplanes, planes, groups=self.groups,
                        base_width=self.base_width, dilation=self.dilation,
                        norm_layer=norm_layer))
```

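So the integer blocks is simply the number of blocks to create. Since the block passed in for resnet50 is Bottleneck, blocks is the number of Bottlenecks. Therefore [3,4,6,3] means creating, in order, 3 Bottlenecks, 4 Bottlenecks, 6 Bottlenecks and 3 Bottlenecks, which matches the resnet50 structure in the table. That is, layers[0] gives 3 Bottlenecks, layers[1] gives 4, layers[2] gives 6 and layers[3] gives 3.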
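The block counts also account for the numbers in the model names. The sketch below assumes the usual counting convention (every convolution inside the blocks, plus conv1 and the final fc layer, with the 1x1 downsample convolutions left out); the `CONFIGS` table and `weighted_layer_count` are names invented for this illustration.

```python
# (convolutions per block, blocks per layer) for each variant, taken from the
# constructor functions: BasicBlock has 2 convolutions, Bottleneck has 3.
CONFIGS = {
    "resnet18": (2, [2, 2, 2, 2]),
    "resnet34": (2, [3, 4, 6, 3]),
    "resnet50": (3, [3, 4, 6, 3]),
    "resnet101": (3, [3, 4, 23, 3]),
    "resnet152": (3, [3, 8, 36, 3]),
}


def weighted_layer_count(convs_per_block, layers):
    """Convolutions in all blocks, plus conv1 and the fc layer."""
    return convs_per_block * sum(layers) + 2


for name, (convs_per_block, layers) in CONFIGS.items():
    print(name, weighted_layer_count(convs_per_block, layers))
# resnet18 18
# resnet34 34
# resnet50 50
# resnet101 101
# resnet152 152
```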

===========================

Putting it all together:

==========================

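Next, an analysis of a few points about ResNet:

1. First, the output dimensions:

In the output size column, the size is halved from stage to stage: 56, 28, 14, 7. One might wonder why, after so many convolutions, each stage only halves the size once. The answer lies in the implementation, which is closely tied to the _make_layer() method of the ResNet class:

Looking at the source of _make_layer(), have you wondered why the blocks are built in two separate steps? The first block is built with the stride passed to _make_layer(), which is 2 for layer2, layer3 and layer4, whereas the remaining blocks are built with the default stride of 1. With a stride of 2 and the kernel settings above, the feature map size is halved; with a stride of 1 it stays the same.

A few takeaways:

1. Every ResNet has 4 layers. Before entering the first layer, the input image has already been reduced to 1/4 of its size (one convolution and one max pooling, each halving it). The first layer does not shrink the feature map; each of the other three layers halves it.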
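This takeaway can be verified with a small sketch that pushes a 224x224 input through the kernel, padding and stride values found in the ResNet class. It is plain arithmetic with the standard output-size formula, assuming dilate=False; `out_size` and `trace_spatial_sizes` are names chosen for this illustration only.

```python
def out_size(w, kernel, padding, stride):
    """Standard output-size formula: floor((W - F + 2P) / S) + 1."""
    return (w - kernel + 2 * padding) // stride + 1


def trace_spatial_sizes(input_size=224):
    """Feature map side length after the stem and after each of the four layers."""
    sizes = {}
    w = out_size(input_size, kernel=7, padding=3, stride=2)  # conv1
    sizes["conv1"] = w
    w = out_size(w, kernel=3, padding=1, stride=2)           # maxpool
    sizes["maxpool"] = w
    # Only the strided 3x3 conv of each layer's first block changes the size;
    # every other conv in the layer has stride 1 and keeps it.
    for name, stride in [("layer1", 1), ("layer2", 2), ("layer3", 2), ("layer4", 2)]:
        w = out_size(w, kernel=3, padding=1, stride=stride)
        sizes[name] = w
    return sizes


print(trace_spatial_sizes(224))
# {'conv1': 112, 'maxpool': 56, 'layer1': 56, 'layer2': 28, 'layer3': 14, 'layer4': 7}
```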

==========================

Finally, here is the complete torchvision.models.resnet source:

```python
import torch.nn as nn
from .utils import load_state_dict_from_url


__all__ = ['ResNet', 'resnet18', 'resnet34', 'resnet50', 'resnet101',
           'resnet152', 'resnext50_32x4d', 'resnext101_32x8d']


model_urls = {
    'resnet18': 'https://download.pytorch.org/models/resnet18-5c106cde.pth',
    'resnet34': 'https://download.pytorch.org/models/resnet34-333f7ec4.pth',
    'resnet50': 'https://download.pytorch.org/models/resnet50-19c8e357.pth',
    'resnet101': 'https://download.pytorch.org/models/resnet101-5d3b4d8f.pth',
    'resnet152': 'https://download.pytorch.org/models/resnet152-b121ed2d.pth',
    'resnext50_32x4d': 'https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth',
    'resnext101_32x8d': 'https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth',
}
```

```python
def conv3x3(in_planes, out_planes, stride=1, groups=1, dilation=1):
    """3x3 convolution with padding"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=dilation, groups=groups, bias=False, dilation=dilation)


def conv1x1(in_planes, out_planes, stride=1):
    """1x1 convolution"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)
```

```python
class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, inplanes, planes, stride=1, downsample=None, groups=1,
                 base_width=64, dilation=1, norm_layer=None):
        super(BasicBlock, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if groups != 1 or base_width != 64:
            raise ValueError('BasicBlock only supports groups=1 and base_width=64')
        if dilation > 1:
            raise NotImplementedError("Dilation > 1 not supported in BasicBlock")
        # Both self.conv1 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = norm_layer(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = norm_layer(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out
```

```python
class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, inplanes, planes, stride=1, downsample=None, groups=1,
                 base_width=64, dilation=1, norm_layer=None):
        super(Bottleneck, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        width = int(planes * (base_width / 64.)) * groups
        # Both self.conv2 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv1x1(inplanes, width)
        self.bn1 = norm_layer(width)
        self.conv2 = conv3x3(width, width, stride, groups, dilation)
        self.bn2 = norm_layer(width)
        self.conv3 = conv1x1(width, planes * self.expansion)
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out
```

```python
class ResNet(nn.Module):

    def __init__(self, block, layers, num_classes=1000, zero_init_residual=False,
                 groups=1, width_per_group=64, replace_stride_with_dilation=None,
                 norm_layer=None):
        super(ResNet, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer

        self.inplanes = 64
        self.dilation = 1
        if replace_stride_with_dilation is None:
            # each element in the tuple indicates if we should replace
            # the 2x2 stride with a dilated convolution instead
            replace_stride_with_dilation = [False, False, False]
        if len(replace_stride_with_dilation) != 3:
            raise ValueError("replace_stride_with_dilation should be None "
                             "or a 3-element tuple, got {}".format(replace_stride_with_dilation))
        self.groups = groups
        self.base_width = width_per_group
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3,
                               bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2,
                                       dilate=replace_stride_with_dilation[0])
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2,
                                       dilate=replace_stride_with_dilation[1])
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2,
                                       dilate=replace_stride_with_dilation[2])
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        # Zero-initialize the last BN in each residual branch,
        # so that the residual branch starts with zeros, and each residual block behaves like an identity.
        # This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677
        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck):
                    nn.init.constant_(m.bn3.weight, 0)
                elif isinstance(m, BasicBlock):
                    nn.init.constant_(m.bn2.weight, 0)

    def _make_layer(self, block, planes, blocks, stride=1, dilate=False):
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            self.dilation *= stride
            stride = 1
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                conv1x1(self.inplanes, planes * block.expansion, stride),
                norm_layer(planes * block.expansion),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample, self.groups,
                            self.base_width, previous_dilation, norm_layer))
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.inplanes, planes, groups=self.groups,
                                base_width=self.base_width, dilation=self.dilation,
                                norm_layer=norm_layer))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.reshape(x.size(0), -1)
        x = self.fc(x)

        return x
```

```python
def _resnet(arch, block, layers, pretrained, progress, **kwargs):
    model = ResNet(block, layers, **kwargs)
    if pretrained:
        state_dict = load_state_dict_from_url(model_urls[arch],
                                              progress=progress)
        model.load_state_dict(state_dict)
    return model


def resnet18(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-18 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet18', BasicBlock, [2, 2, 2, 2], pretrained, progress,
                   **kwargs)


def resnet34(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-34 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet34', BasicBlock, [3, 4, 6, 3], pretrained, progress,
                   **kwargs)


def resnet50(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-50 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet50', Bottleneck, [3, 4, 6, 3], pretrained, progress,
                   **kwargs)


def resnet101(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-101 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet101', Bottleneck, [3, 4, 23, 3], pretrained, progress,
                   **kwargs)


def resnet152(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNet-152 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    return _resnet('resnet152', Bottleneck, [3, 8, 36, 3], pretrained, progress,
                   **kwargs)


def resnext50_32x4d(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNeXt-50 32x4d model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs['groups'] = 32
    kwargs['width_per_group'] = 4
    return _resnet('resnext50_32x4d', Bottleneck, [3, 4, 6, 3],
                   pretrained, progress, **kwargs)


def resnext101_32x8d(pretrained=False, progress=True, **kwargs):
    """Constructs a ResNeXt-101 32x8d model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
        progress (bool): If True, displays a progress bar of the download to stderr
    """
    kwargs['groups'] = 32
    kwargs['width_per_group'] = 8
    return _resnet('resnext101_32x8d', Bottleneck, [3, 4, 23, 3],
                   pretrained, progress, **kwargs)
```
