import numpy as np

import pandas as pd

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

C:\Users\chengyuanting\.conda\envs\tensorflow19\lib\importlib\_bootstrap.py:219: RuntimeWarning: numpy.ufunc size changed, may indicate binary incompatibility. Expected 216, got 192

return f(*args, **kwds)

C:\Users\chengyuanting\.conda\envs\tensorflow19\lib\importlib\_bootstrap.py:219: RuntimeWarning: numpy.ufunc size changed, may indicate binary incompatibility. Expected 192 from C header, got 216 from PyObject

return f(*args, **kwds)

C:\Users\chengyuanting\.conda\envs\tensorflow19\lib\importlib\_bootstrap.py:219: RuntimeWarning: numpy.ufunc size changed, may indicate binary incompatibility. Expected 216, got 192

return f(*args, **kwds)

Load the data

mnist = input_data.read_data_sets("MNIST",one_hot = True)

Extracting MNIST\train-images-idx3-ubyte.gz

Extracting MNIST\train-labels-idx1-ubyte.gz

Extracting MNIST\t10k-images-idx3-ubyte.gz

Extracting MNIST\t10k-labels-idx1-ubyte.gz

mnist

Datasets(train=<tensorflow.contrib.learn.python.learn.datasets.mnist.DataSet object at 0x00000199C25E5F28>, validation=<tensorflow.contrib.learn.python.learn.datasets.mnist.DataSet object at 0x00000199C25F6668>, test=<tensorflow.contrib.learn.python.learn.datasets.mnist.DataSet object at 0x00000199C4C68C18>)

batch_size = 100

n_batchs = mnist.train.num_examples // batch_size

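Build a summary function for the parameters

It records statistics of a model parameter (mean, standard deviation, max, min and a histogram) for TensorBoard.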

def variable_info(var):
    with tf.name_scope('summaries'):
        mean_value = tf.reduce_mean(var)
        tf.summary.scalar('mean',mean_value)
        with tf.name_scope('stddev'):
            stddev_value = tf.sqrt(tf.reduce_mean(tf.square(var - mean_value)))
        tf.summary.scalar('stddev',stddev_value)
        tf.summary.scalar('max',tf.reduce_max(var))
        tf.summary.scalar('min',tf.reduce_min(var))
        tf.summary.histogram('histogram',var)
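
The scalar statistics logged by `variable_info` can be checked by hand on a small list of numbers. The following is a minimal plain-Python sketch, not part of the original notebook; the helper name `variable_stats` is hypothetical. Like the TensorFlow code, it uses the population standard deviation (the square root of the mean squared deviation).

```python
import math

def variable_stats(values):
    """Return the mean, population stddev, max and min of a list of floats."""
    mean_value = sum(values) / len(values)
    # Same formula as tf.sqrt(tf.reduce_mean(tf.square(var - mean_value)))
    stddev_value = math.sqrt(sum((v - mean_value) ** 2 for v in values) / len(values))
    return {
        "mean": mean_value,
        "stddev": stddev_value,
        "max": max(values),
        "min": min(values),
    }

stats = variable_stats([1.0, 2.0, 3.0, 4.0])
print(stats)
```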

Define the input layer

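with tf.name_scope("input_layer"):
    x = tf.placeholder(tf.float32,[None,784])
    y = tf.placeholder(tf.float32,[None,10])
    keep_prob = tf.placeholder(tf.float32)
    # The second positional argument of tf.Variable is `trainable`, so the dtype is passed by keyword.
    lr = tf.Variable(0.01,dtype = tf.float32,trainable = False)
    tf.summary.scalar('learning_rate',lr)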

Define the network structure

with tf.name_scope('network'):
    with tf.name_scope("weight"):
        w = tf.Variable(tf.truncated_normal([784,10],stddev = 0.1),name = 'w')
        variable_info(w)
    with tf.name_scope('biases'):
        b = tf.Variable(tf.zeros([10]) + 0.1,name="b")
        variable_info(b)
    with tf.name_scope('xw_plus_b'):
        # Raw class scores (logits), shape [batch, 10]
        a = tf.matmul(x,w) + b
    with tf.name_scope('softmax'):
        # Class probabilities, used for prediction
        out = tf.nn.softmax(a)
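
The network is a single linear layer followed by softmax: `a = x·w + b`, then `out = softmax(a)`. As an illustration only, here is a tiny plain-Python sketch of that forward pass with 2 features and 3 classes instead of 784 and 10; the helper names `softmax` and `forward` and the toy numbers are assumptions made for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    shift = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, w, b):
    """x: [n_features], w: [n_features][n_classes], b: [n_classes]."""
    n_classes = len(b)
    logits = [sum(x[i] * w[i][k] for i in range(len(x))) + b[k]
              for k in range(n_classes)]
    return logits, softmax(logits)

x = [1.0, 2.0]
w = [[0.5, -0.5, 0.0],
     [0.25, 0.25, -1.0]]
b = [0.1, 0.1, 0.1]

logits, probs = forward(x, w, b)
print(logits)                    # raw scores, the analogue of `a`
print(probs)                     # probabilities, the analogue of `out`
print(probs.index(max(probs)))   # predicted class
```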

Define the cross-entropy loss and the optimizer

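with tf.name_scope("loss_train"):
    # softmax_cross_entropy_with_logits applies softmax internally, so it takes the raw scores `a`.
    # The run logged below passed `out` here, which applies softmax twice and is consistent
    # with its loss levelling off near 1.54.
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits = a,labels = y))
    train_step = tf.train.AdamOptimizer(lr).minimize(loss)
    tf.summary.scalar("loss",loss)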

WARNING:tensorflow:From <ipython-input-11-784de1597487>:2: softmax_cross_entropy_with_logits (from tensorflow.python.ops.nn_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Future major versions of TensorFlow will allow gradients to flow

into the labels input on backprop by default.

See tf.nn.softmax_cross_entropy_with_logits_v2.
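
`tf.nn.softmax_cross_entropy_with_logits` applies softmax to its `logits` argument itself, so it expects raw scores such as `a` rather than probabilities such as `out`. The plain-Python sketch below (helper names are hypothetical) shows why this matters. If a probability vector is fed in, softmax is applied twice, and even a perfect one-hot prediction over 10 classes cannot push the loss below log(1 + 9/e), roughly 1.46. That floor is consistent with the loss in the training log levelling off near 1.54 while accuracy stays around 93%.

```python
import math

def softmax(scores):
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_from_logits(logits, label):
    """Cross-entropy for one example; softmax is applied inside."""
    return -math.log(softmax(logits)[label])

n_classes = 10
label = 3

# Case 1: a perfect probability vector is passed where logits are expected,
# so softmax ends up being applied twice.
perfect_probs = [0.0] * n_classes
perfect_probs[label] = 1.0
loss_double = cross_entropy_from_logits(perfect_probs, label)

# Closed form of the same value: log(1 + (n_classes - 1) / e)
bound = math.log(1 + (n_classes - 1) / math.e)

# Case 2: confident raw logits are passed, as the function expects.
confident_logits = [0.0] * n_classes
confident_logits[label] = 20.0
loss_single = cross_entropy_from_logits(confident_logits, label)

print(loss_double, bound)  # both close to 1.46
print(loss_single)         # close to 0
```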

Define the evaluation module

with tf.name_scope("eval"):
    with tf.name_scope("correct"):
        correct = tf.equal(tf.argmax(out,1),tf.argmax(y,1))
    with tf.name_scope("accuracy"):
        accuracy = tf.reduce_mean(tf.cast(correct,tf.float32))
        tf.summary.scalar('accuracy',accuracy)
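
The evaluation compares the index of the largest predicted probability with the index of the 1 in the one-hot label, then averages the matches. A small plain-Python sketch of the same computation, with made-up predictions and hypothetical helper names:

```python
def argmax(row):
    """Index of the largest entry in a list."""
    return max(range(len(row)), key=row.__getitem__)

def accuracy(predictions, one_hot_labels):
    """Fraction of rows whose predicted class matches the label."""
    hits = [argmax(p) == argmax(t) for p, t in zip(predictions, one_hot_labels)]
    return sum(hits) / len(hits)

predictions = [
    [0.7, 0.2, 0.1],  # predicts class 0 (correct)
    [0.1, 0.8, 0.1],  # predicts class 1 (correct)
    [0.3, 0.4, 0.3],  # predicts class 1 (wrong, label is 2)
    [0.2, 0.2, 0.6],  # predicts class 2 (correct)
]
labels = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
    [0, 0, 1],
]

acc = accuracy(predictions, labels)
print(acc)
```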

Initialize the variables and merge the summaries

init = tf.global_variables_initializer()

merged = tf.summary.merge_all()

Training

with tf.Session() as sess:

sess.run(init)

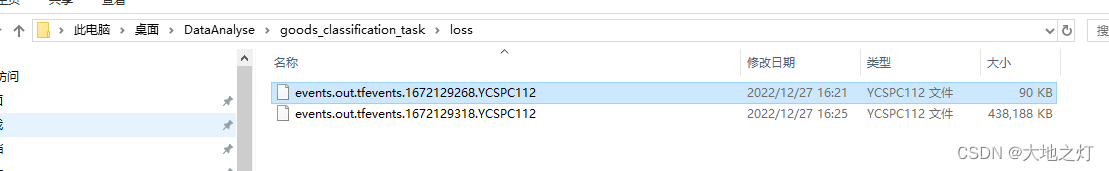

writer = tf.summary.FileWriter('loss/',sess.graph)

for epoch in range(200):

sess.run(tf.assign(lr,0.001 * (0.95 ** epoch)))

for batch in range(n_batchs):

batch_x,batch_y = mnist.train.next_batch(batch_size)

summary, _ = sess.run([merged,train_step],feed_dict = {x:batch_x,y:batch_y,keep_prob:0.5})

writer.add_summary(summary,epoch * n_batchs + batch)

loss_value,acc,lr_value = sess.run([loss,accuracy,lr],feed_dict = {x:mnist.test.images,y:mnist.test.labels,keep_prob:1.0})

print("Iter: ",epoch,"Loss: ",loss_value,"Acc: ",acc,"lr: ",lr_value)

Iter: 0 Loss: 1.6125548 Acc: 0.8939 lr: 0.001

Iter: 1 Loss: 1.5808961 Acc: 0.9092 lr: 0.00095

Iter: 2 Loss: 1.5689776 Acc: 0.9151 lr: 0.0009025

Iter: 3 Loss: 1.5611166 Acc: 0.918 lr: 0.000857375

Iter: 4 Loss: 1.556958 Acc: 0.92 lr: 0.00081450626

Iter: 5 Loss: 1.5531466 Acc: 0.9231 lr: 0.0007737809

Iter: 6 Loss: 1.5505781 Acc: 0.9244 lr: 0.0007350919

Iter: 7 Loss: 1.5494175 Acc: 0.924 lr: 0.0006983373

Iter: 8 Loss: 1.5474731 Acc: 0.9259 lr: 0.0006634204

Iter: 9 Loss: 1.5453671 Acc: 0.9281 lr: 0.0006302494

Iter: 10 Loss: 1.5444945 Acc: 0.9278 lr: 0.0005987369

Iter: 11 Loss: 1.5433562 Acc: 0.9301 lr: 0.0005688001

Iter: 12 Loss: 1.5426576 Acc: 0.9298 lr: 0.0005403601

Iter: 13 Loss: 1.5419394 Acc: 0.93 lr: 0.0005133421

Iter: 14 Loss: 1.5413963 Acc: 0.9308 lr: 0.000487675

Iter: 15 Loss: 1.5413688 Acc: 0.9304 lr: 0.00046329122

Iter: 16 Loss: 1.5408303 Acc: 0.9307 lr: 0.00044012666

Iter: 17 Loss: 1.5404127 Acc: 0.9305 lr: 0.00041812033

Iter: 18 Loss: 1.5400867 Acc: 0.9301 lr: 0.00039721432

Iter: 19 Loss: 1.5396076 Acc: 0.9301 lr: 0.0003773536

Iter: 20 Loss: 1.5392723 Acc: 0.9305 lr: 0.00035848594

Iter: 21 Loss: 1.5389512 Acc: 0.9306 lr: 0.00034056162

Iter: 22 Loss: 1.5388392 Acc: 0.9305 lr: 0.00032353355

Iter: 23 Loss: 1.5386236 Acc: 0.9303 lr: 0.00030735688

Iter: 24 Loss: 1.5386375 Acc: 0.9302 lr: 0.000291989

Iter: 25 Loss: 1.5383784 Acc: 0.931 lr: 0.00027738957

Iter: 26 Loss: 1.5379254 Acc: 0.9312 lr: 0.0002635201

Iter: 27 Loss: 1.5380003 Acc: 0.9311 lr: 0.00025034408

Iter: 28 Loss: 1.5377746 Acc: 0.9314 lr: 0.00023782688

Iter: 29 Loss: 1.5379293 Acc: 0.9304 lr: 0.00022593554

Iter: 30 Loss: 1.5375808 Acc: 0.9317 lr: 0.00021463877

Iter: 31 Loss: 1.5374979 Acc: 0.9314 lr: 0.00020390682

Iter: 32 Loss: 1.5372896 Acc: 0.9321 lr: 0.00019371149

Iter: 33 Loss: 1.5374172 Acc: 0.9317 lr: 0.0001840259

Iter: 34 Loss: 1.5371789 Acc: 0.9313 lr: 0.00017482461

Iter: 35 Loss: 1.5370125 Acc: 0.9326 lr: 0.00016608338

Iter: 36 Loss: 1.5369906 Acc: 0.9322 lr: 0.00015777921

Iter: 37 Loss: 1.536993 Acc: 0.9318 lr: 0.00014989026

Iter: 38 Loss: 1.5368667 Acc: 0.9323 lr: 0.00014239574

Iter: 39 Loss: 1.5369099 Acc: 0.9315 lr: 0.00013527596

Iter: 40 Loss: 1.5366946 Acc: 0.9323 lr: 0.00012851215

Iter: 41 Loss: 1.536604 Acc: 0.9323 lr: 0.00012208655

Iter: 42 Loss: 1.5367252 Acc: 0.9318 lr: 0.00011598222

Iter: 43 Loss: 1.5366664 Acc: 0.9313 lr: 0.00011018311

Iter: 44 Loss: 1.5365683 Acc: 0.9312 lr: 0.000104673956

Iter: 45 Loss: 1.5365902 Acc: 0.9313 lr: 9.944026e-05

Iter: 46 Loss: 1.5363859 Acc: 0.9319 lr: 9.446825e-05

Iter: 47 Loss: 1.5364598 Acc: 0.9317 lr: 8.974483e-05

Iter: 48 Loss: 1.5363631 Acc: 0.9316 lr: 8.525759e-05

Iter: 49 Loss: 1.5363832 Acc: 0.9319 lr: 8.099471e-05

Iter: 50 Loss: 1.5362748 Acc: 0.9315 lr: 7.6944976e-05

Iter: 51 Loss: 1.536229 Acc: 0.9323 lr: 7.3097726e-05

Iter: 52 Loss: 1.5362756 Acc: 0.9315 lr: 6.944284e-05

Iter: 53 Loss: 1.5362172 Acc: 0.9315 lr: 6.59707e-05

Iter: 54 Loss: 1.5362222 Acc: 0.9317 lr: 6.267217e-05

Iter: 55 Loss: 1.5361922 Acc: 0.9318 lr: 5.9538554e-05

Iter: 56 Loss: 1.5361674 Acc: 0.9316 lr: 5.656163e-05

Iter: 57 Loss: 1.5361019 Acc: 0.9321 lr: 5.3733547e-05

Iter: 58 Loss: 1.5360867 Acc: 0.9318 lr: 5.1046867e-05

Iter: 59 Loss: 1.5360582 Acc: 0.9314 lr: 4.8494527e-05

Iter: 60 Loss: 1.5360819 Acc: 0.9317 lr: 4.60698e-05

Iter: 61 Loss: 1.536051 Acc: 0.9316 lr: 4.3766307e-05

Iter: 62 Loss: 1.5360154 Acc: 0.9316 lr: 4.1577994e-05

Iter: 63 Loss: 1.5360197 Acc: 0.9317 lr: 3.9499093e-05

Iter: 64 Loss: 1.5360059 Acc: 0.9316 lr: 3.752414e-05

Iter: 65 Loss: 1.5359796 Acc: 0.9316 lr: 3.5647932e-05

Iter: 66 Loss: 1.5359614 Acc: 0.9317 lr: 3.3865537e-05

Iter: 67 Loss: 1.5359674 Acc: 0.9319 lr: 3.217226e-05

Iter: 68 Loss: 1.5359429 Acc: 0.9318 lr: 3.0563646e-05

Iter: 69 Loss: 1.5359277 Acc: 0.9318 lr: 2.9035464e-05

Iter: 70 Loss: 1.5359355 Acc: 0.9317 lr: 2.758369e-05

Iter: 71 Loss: 1.5359349 Acc: 0.9316 lr: 2.6204505e-05

Iter: 72 Loss: 1.5359257 Acc: 0.9317 lr: 2.4894282e-05

Iter: 73 Loss: 1.535903 Acc: 0.9317 lr: 2.3649567e-05

Iter: 74 Loss: 1.5358955 Acc: 0.9318 lr: 2.2467088e-05

Iter: 75 Loss: 1.5358825 Acc: 0.932 lr: 2.1343734e-05

Iter: 76 Loss: 1.5358828 Acc: 0.9319 lr: 2.0276548e-05

Iter: 77 Loss: 1.5358721 Acc: 0.9319 lr: 1.926272e-05

Iter: 78 Loss: 1.5358682 Acc: 0.932 lr: 1.8299585e-05

Iter: 79 Loss: 1.5358596 Acc: 0.9319 lr: 1.7384604e-05

Iter: 80 Loss: 1.5358545 Acc: 0.9318 lr: 1.6515374e-05

Iter: 81 Loss: 1.5358483 Acc: 0.9319 lr: 1.5689606e-05

Iter: 82 Loss: 1.5358437 Acc: 0.9319 lr: 1.4905126e-05

Iter: 83 Loss: 1.5358357 Acc: 0.9319 lr: 1.4159869e-05

Iter: 84 Loss: 1.5358274 Acc: 0.9319 lr: 1.3451876e-05

Iter: 85 Loss: 1.5358242 Acc: 0.9319 lr: 1.2779282e-05

Iter: 86 Loss: 1.5358182 Acc: 0.9319 lr: 1.2140318e-05

Iter: 87 Loss: 1.5358177 Acc: 0.9319 lr: 1.1533302e-05

Iter: 88 Loss: 1.5358127 Acc: 0.9319 lr: 1.0956637e-05

Iter: 89 Loss: 1.5358096 Acc: 0.9319 lr: 1.0408805e-05

Iter: 90 Loss: 1.5358005 Acc: 0.9319 lr: 9.888365e-06

Iter: 91 Loss: 1.535797 Acc: 0.9319 lr: 9.393946e-06

Iter: 92 Loss: 1.535792 Acc: 0.9321 lr: 8.924249e-06

Iter: 93 Loss: 1.5357928 Acc: 0.9319 lr: 8.478037e-06

Iter: 94 Loss: 1.5357901 Acc: 0.9319 lr: 8.054135e-06

Iter: 95 Loss: 1.535787 Acc: 0.9319 lr: 7.651428e-06

Iter: 96 Loss: 1.5357847 Acc: 0.9319 lr: 7.2688567e-06

Iter: 97 Loss: 1.5357846 Acc: 0.9319 lr: 6.905414e-06

Iter: 98 Loss: 1.5357807 Acc: 0.9319 lr: 6.560143e-06

Iter: 99 Loss: 1.535777 Acc: 0.9319 lr: 6.232136e-06

Iter: 100 Loss: 1.5357772 Acc: 0.9319 lr: 5.920529e-06

Iter: 101 Loss: 1.5357744 Acc: 0.9319 lr: 5.6245026e-06

Iter: 102 Loss: 1.5357723 Acc: 0.9319 lr: 5.3432777e-06

Iter: 103 Loss: 1.5357704 Acc: 0.9319 lr: 5.0761137e-06

Iter: 104 Loss: 1.5357691 Acc: 0.9319 lr: 4.8223083e-06

Iter: 105 Loss: 1.5357666 Acc: 0.9319 lr: 4.5811926e-06

Iter: 106 Loss: 1.5357642 Acc: 0.932 lr: 4.352133e-06

Iter: 107 Loss: 1.5357634 Acc: 0.932 lr: 4.1345265e-06

Iter: 108 Loss: 1.535762 Acc: 0.932 lr: 3.9278e-06

Iter: 109 Loss: 1.5357621 Acc: 0.932 lr: 3.73141e-06

Iter: 110 Loss: 1.5357596 Acc: 0.932 lr: 3.5448395e-06

Iter: 111 Loss: 1.5357587 Acc: 0.932 lr: 3.3675976e-06

Iter: 112 Loss: 1.5357572 Acc: 0.932 lr: 3.1992176e-06

Iter: 113 Loss: 1.5357571 Acc: 0.932 lr: 3.0392569e-06

Iter: 114 Loss: 1.5357553 Acc: 0.932 lr: 2.887294e-06

Iter: 115 Loss: 1.5357549 Acc: 0.932 lr: 2.7429292e-06

Iter: 116 Loss: 1.535754 Acc: 0.932 lr: 2.6057828e-06

Iter: 117 Loss: 1.535753 Acc: 0.932 lr: 2.4754936e-06

Iter: 118 Loss: 1.5357525 Acc: 0.932 lr: 2.3517189e-06

Iter: 119 Loss: 1.5357517 Acc: 0.932 lr: 2.234133e-06

Iter: 120 Loss: 1.5357504 Acc: 0.932 lr: 2.1224264e-06

Iter: 121 Loss: 1.5357497 Acc: 0.932 lr: 2.016305e-06

Iter: 122 Loss: 1.5357491 Acc: 0.932 lr: 1.9154897e-06

Iter: 123 Loss: 1.5357485 Acc: 0.932 lr: 1.8197153e-06

Iter: 124 Loss: 1.5357482 Acc: 0.932 lr: 1.7287296e-06

Iter: 125 Loss: 1.5357474 Acc: 0.932 lr: 1.6422931e-06

Iter: 126 Loss: 1.5357469 Acc: 0.932 lr: 1.5601785e-06

Iter: 127 Loss: 1.5357461 Acc: 0.932 lr: 1.4821695e-06

Iter: 128 Loss: 1.535746 Acc: 0.932 lr: 1.408061e-06

Iter: 129 Loss: 1.5357453 Acc: 0.932 lr: 1.337658e-06

Iter: 130 Loss: 1.5357447 Acc: 0.932 lr: 1.2707751e-06

Iter: 131 Loss: 1.5357442 Acc: 0.932 lr: 1.2072363e-06

Iter: 132 Loss: 1.535744 Acc: 0.932 lr: 1.1468745e-06

Iter: 133 Loss: 1.5357437 Acc: 0.932 lr: 1.0895308e-06

Iter: 134 Loss: 1.5357432 Acc: 0.932 lr: 1.0350542e-06

Iter: 135 Loss: 1.5357428 Acc: 0.932 lr: 9.833016e-07

Iter: 136 Loss: 1.5357424 Acc: 0.932 lr: 9.3413644e-07

Iter: 137 Loss: 1.535742 Acc: 0.932 lr: 8.874296e-07

Iter: 138 Loss: 1.535742 Acc: 0.932 lr: 8.4305816e-07

Iter: 139 Loss: 1.5357416 Acc: 0.932 lr: 8.0090524e-07

Iter: 140 Loss: 1.5357414 Acc: 0.932 lr: 7.6085996e-07

Iter: 141 Loss: 1.5357409 Acc: 0.932 lr: 7.22817e-07

Iter: 142 Loss: 1.5357406 Acc: 0.932 lr: 6.866761e-07

Iter: 143 Loss: 1.5357404 Acc: 0.932 lr: 6.5234235e-07

Iter: 144 Loss: 1.5357403 Acc: 0.932 lr: 6.197252e-07

Iter: 145 Loss: 1.5357401 Acc: 0.932 lr: 5.8873894e-07

Iter: 146 Loss: 1.53574 Acc: 0.932 lr: 5.59302e-07

Iter: 147 Loss: 1.5357397 Acc: 0.932 lr: 5.313369e-07

Iter: 148 Loss: 1.5357393 Acc: 0.932 lr: 5.0477007e-07

Iter: 149 Loss: 1.5357393 Acc: 0.932 lr: 4.7953154e-07

Iter: 150 Loss: 1.5357393 Acc: 0.932 lr: 4.5555498e-07

Iter: 151 Loss: 1.535739 Acc: 0.932 lr: 4.3277723e-07

Iter: 152 Loss: 1.5357388 Acc: 0.932 lr: 4.1113836e-07

Iter: 153 Loss: 1.5357387 Acc: 0.932 lr: 3.9058145e-07

Iter: 154 Loss: 1.5357385 Acc: 0.932 lr: 3.7105238e-07

Iter: 155 Loss: 1.5357385 Acc: 0.932 lr: 3.5249977e-07

Iter: 156 Loss: 1.5357383 Acc: 0.932 lr: 3.3487476e-07

Iter: 157 Loss: 1.5357382 Acc: 0.932 lr: 3.1813104e-07

Iter: 158 Loss: 1.5357381 Acc: 0.932 lr: 3.0222446e-07

Iter: 159 Loss: 1.5357379 Acc: 0.932 lr: 2.8711327e-07

Iter: 160 Loss: 1.5357379 Acc: 0.932 lr: 2.727576e-07

Iter: 161 Loss: 1.5357378 Acc: 0.932 lr: 2.591197e-07

Iter: 162 Loss: 1.5357378 Acc: 0.932 lr: 2.4616372e-07

Iter: 163 Loss: 1.5357378 Acc: 0.932 lr: 2.3385554e-07

Iter: 164 Loss: 1.5357376 Acc: 0.932 lr: 2.2216277e-07

Iter: 165 Loss: 1.5357376 Acc: 0.932 lr: 2.1105463e-07

Iter: 166 Loss: 1.5357375 Acc: 0.932 lr: 2.0050189e-07

Iter: 167 Loss: 1.5357375 Acc: 0.932 lr: 1.904768e-07

Iter: 168 Loss: 1.5357373 Acc: 0.932 lr: 1.8095295e-07

Iter: 169 Loss: 1.5357374 Acc: 0.932 lr: 1.7190531e-07

Iter: 170 Loss: 1.5357374 Acc: 0.932 lr: 1.6331005e-07

Iter: 171 Loss: 1.5357373 Acc: 0.932 lr: 1.5514455e-07

Iter: 172 Loss: 1.5357372 Acc: 0.932 lr: 1.4738731e-07

Iter: 173 Loss: 1.5357372 Acc: 0.932 lr: 1.4001795e-07

Iter: 174 Loss: 1.5357372 Acc: 0.932 lr: 1.3301705e-07

Iter: 175 Loss: 1.5357372 Acc: 0.932 lr: 1.263662e-07

Iter: 176 Loss: 1.5357372 Acc: 0.932 lr: 1.2004789e-07

Iter: 177 Loss: 1.5357372 Acc: 0.932 lr: 1.140455e-07

Iter: 178 Loss: 1.5357372 Acc: 0.932 lr: 1.0834322e-07

Iter: 179 Loss: 1.5357373 Acc: 0.932 lr: 1.0292606e-07

Iter: 180 Loss: 1.5357372 Acc: 0.932 lr: 9.7779754e-08

Iter: 181 Loss: 1.5357372 Acc: 0.932 lr: 9.2890765e-08

Iter: 182 Loss: 1.5357372 Acc: 0.932 lr: 8.824623e-08

Iter: 183 Loss: 1.5357372 Acc: 0.932 lr: 8.383392e-08

Iter: 184 Loss: 1.5357372 Acc: 0.932 lr: 7.964222e-08

Iter: 185 Loss: 1.5357372 Acc: 0.932 lr: 7.566011e-08

Iter: 186 Loss: 1.5357372 Acc: 0.932 lr: 7.18771e-08

Iter: 187 Loss: 1.5357372 Acc: 0.932 lr: 6.828325e-08

Iter: 188 Loss: 1.5357372 Acc: 0.932 lr: 6.486909e-08

Iter: 189 Loss: 1.5357372 Acc: 0.932 lr: 6.1625634e-08

Iter: 190 Loss: 1.5357372 Acc: 0.932 lr: 5.854435e-08

Iter: 191 Loss: 1.5357372 Acc: 0.932 lr: 5.5617136e-08

Iter: 192 Loss: 1.5357372 Acc: 0.932 lr: 5.2836278e-08

Iter: 193 Loss: 1.5357372 Acc: 0.932 lr: 5.0194465e-08

Iter: 194 Loss: 1.5357372 Acc: 0.932 lr: 4.768474e-08

Iter: 195 Loss: 1.5357372 Acc: 0.932 lr: 4.5300503e-08

Iter: 196 Loss: 1.5357372 Acc: 0.932 lr: 4.3035477e-08

Iter: 197 Loss: 1.5357372 Acc: 0.932 lr: 4.0883705e-08

Iter: 198 Loss: 1.5357372 Acc: 0.932 lr: 3.883952e-08

Iter: 199 Loss: 1.5357372 Acc: 0.932 lr: 3.6897543e-08
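
The `lr` column in the log follows the schedule set in the training loop, `0.001 * 0.95 ** epoch`. A short sketch that reproduces a few of those values; the function name `learning_rate` and its parameter names are chosen here for illustration.

```python
def learning_rate(epoch, base=0.001, decay=0.95):
    """Exponentially decayed learning rate for a given epoch."""
    return base * decay ** epoch

for epoch in (0, 1, 2, 199):
    print(epoch, learning_rate(epoch))
```

By epoch 199 the rate has fallen to about 3.7e-08, so the last several dozen epochs barely change the weights, which matches the flat loss and accuracy at the end of the log.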
