A Simple Example of a Custom Model in PyTorch

Environment: Python 3.8

Introduction to PyTorch

PyTorch Chinese documentation

import torch
import numpy as np

def test():
    # Training data: 4 samples, 3 input features, 2 output features
    x_data = torch.Tensor([[2,2,1],[1,1,2],[-1,0,1],[-1,1,-1]])
    y_data = torch.Tensor([[5,1],[4,2],[0,0],[-1,-3]])
    # The underlying relation is x @ [[1,  1],
    #                                 [1, -1],
    #                                 [1,  1]] = y

    class MyModule(torch.nn.Module):
        def __init__(self):
            # First statement: call the parent-class constructor
            super(MyModule, self).__init__()
            # Layer sizes match the number of features in x and y
            self.mylayer = MyLayer(len(x_data.data[0]), len(y_data.data[0]))

        def forward(self, x):
            x = self.mylayer(x)
            return x

    # y = w1*x1 + w2*x2 + w3*x3 + b
    class MyLayer(torch.nn.Module):
        def __init__(self, in_features, out_features, bias=True, weight=True):
            # As in the custom model, the first statement calls the parent-class constructor
            super(MyLayer, self).__init__()
            self.in_features = in_features
            self.out_features = out_features
            # The weights are trainable, so they are defined as Parameters.
            # torch.Tensor(n, m) only allocates uninitialized memory (which can
            # contain inf or nan), so explicit starting values are used here.
            self.weight = torch.nn.Parameter(torch.randn(in_features, out_features))
            self.bias = torch.nn.Parameter(torch.zeros(in_features))

        def forward(self, x):
            y = torch.matmul(x, self.weight)
            # Take the mean of the bias vector and add it to every element of y.
            # Using self.bias rather than self.bias.data keeps the bias in the
            # autograd graph, so the optimizer can actually update it.
            y = y + self.bias.mean()
            return y

    model = MyModule()
    # Mean-squared-error loss, summed over all elements
    criterion = torch.nn.MSELoss(reduction='sum')
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

    # Training
    print("loop -------------")
    train_time = 1000
    pre_loss = 0
    for epoch in range(train_time):
        y_pred = model(x_data)
        # The loss should shrink as training proceeds
        loss = criterion(y_pred, y_data)
        if np.isinf(loss.item()):
            print("loss is inf")
            break
        # Stop early once the loss has effectively stopped changing
        if abs(pre_loss - loss.item()) < 1e-5:
            break
        print(epoch, loss.item())
        # Store a plain float so the previous epoch's graph is not kept alive
        pre_loss = loss.item()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

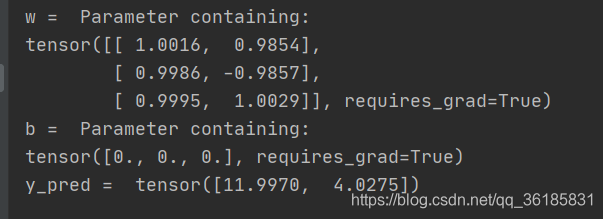

    print('w = ', model.mylayer.weight)
    print('b = ', model.mylayer.bias)
    x_test = torch.Tensor([[3.0, 4.0, 5.0]])
    y_test = model(x_test)
    print('y_pred = ', y_test.data[0])

if __name__ == "__main__":
    test()
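As a sanity check on what training should converge to, the sketch below verifies that the training data really follow the relation given in the code comment and solves for the weights in closed form. It is not part of the original example: the names `w_true`, `w_lstsq`, and `y_expected` are introduced here for illustration, and it assumes a PyTorch version that provides `torch.linalg.lstsq`.

```python
import torch

# Same training data as in the example above.
x_data = torch.tensor([[2., 2., 1.], [1., 1., 2.], [-1., 0., 1.], [-1., 1., -1.]])
y_data = torch.tensor([[5., 1.], [4., 2.], [0., 0.], [-1., -3.]])

# Weight matrix stated in the code comment (hypothetical name w_true).
w_true = torch.tensor([[1., 1.], [1., -1.], [1., 1.]])

# The data satisfy x @ W = y exactly, with no bias term.
data_matches = torch.allclose(x_data @ w_true, y_data)

# Closed-form least-squares solution of x @ W = y.
w_lstsq = torch.linalg.lstsq(x_data, y_data).solution

# Prediction the trained model should approach for the test input.
x_test = torch.tensor([[3., 4., 5.]])
y_expected = x_test @ w_true

print("data matches relation:", data_matches)
print("least-squares weights:\n", w_lstsq)
print("expected y for x_test:", y_expected)
```

Because the data contain no offset, a well-trained model should end up with weights close to `w_true` and a bias contribution close to zero, so the prediction for `[3, 4, 5]` should approach `[12, 4]`.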

Result:

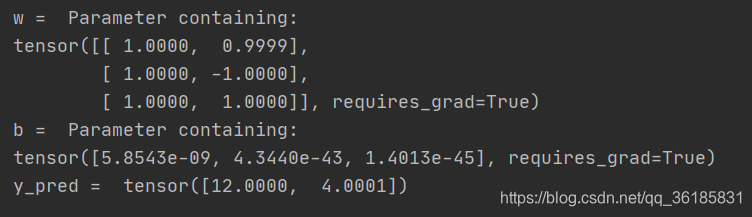
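For comparison, the same fit can be sketched with the built-in `torch.nn.Linear` layer instead of a hand-written layer. This is an alternative, not the original author's code: the fixed seed and the `losses` list are additions made here for illustration. Note that `nn.Linear` stores its weight as `(out_features, in_features)` and learns one bias per output, whereas the custom layer above adds a single averaged bias value.

```python
import torch

torch.manual_seed(0)  # assumption: fixed seed so repeated runs behave the same

# Same training data as in the example above.
x_data = torch.tensor([[2., 2., 1.], [1., 1., 2.], [-1., 0., 1.], [-1., 1., -1.]])
y_data = torch.tensor([[5., 1.], [4., 2.], [0., 0.], [-1., -3.]])

# Built-in fully connected layer: 3 input features, 2 output features.
model = torch.nn.Linear(3, 2)
criterion = torch.nn.MSELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

losses = []  # hypothetical helper list to inspect the loss curve
for epoch in range(1000):
    loss = criterion(model(x_data), y_data)
    losses.append(loss.item())
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

print("first loss:", losses[0], "last loss:", losses[-1])
print("weight shape:", tuple(model.weight.shape), "bias shape:", tuple(model.bias.shape))
```

Writing the layer by hand, as the example does, is mainly useful for seeing how `Parameter` registration and `forward` fit together; for a plain linear map the built-in layer is usually the simpler choice.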

With the number of training iterations set to 10000 and the stopping threshold set to 1e-10, the following can be reached:

