stitching_detailed is a demo provided by OpenCV for stitching multiple images. Its main steps are as follows:

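1. Input the set of images to be stitched;

2. Extract feature points from each image;

3. Match the features and filter the matches;

4. Filter the matched point pairs, keep the best image pairs, and compute the homography matrix H for them;

5. Estimate the camera parameters K (intrinsics) and R (rotation);

6. Warp the images;

7. Find the seams;

8. Apply exposure (illumination) compensation so that the seams between stitched images transition smoothly;

9. Blend the images to complete the stitching.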
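In stock OpenCV, step 3 is carried out by CpuMatcher::match: a 2-nearest-neighbour search (knnMatch with k = 2) followed by Lowe's ratio test with the threshold match_conf_, run once in each direction, with the accepted 1->2 and 2->1 matches merged into one list. A stricter alternative keeps a match only if it survives the ratio test in both directions (a mutual, or cross-check, test). The plain C++ sketch below isolates both ideas without OpenCV; Match is a hypothetical stand-in for cv::DMatch, and the knn lists are assumed to come from a 2-NN search.

```cpp
#include <cassert>
#include <set>
#include <utility>
#include <vector>

// Hypothetical stand-in for cv::DMatch with only the fields used here.
struct Match {
    int query;       // keypoint index in the query image
    int train;       // keypoint index in the train image
    float distance;  // descriptor distance
};

// Lowe's ratio test on the two nearest neighbours of one descriptor.
// match_conf plays the role of match_conf_ in CpuMatcher:
// the best match is accepted if d0 < (1 - match_conf) * d1.
bool passesRatioTest(const Match& best, const Match& second, float match_conf) {
    assert(match_conf >= 0.f && match_conf < 1.f);
    return best.distance < (1.f - match_conf) * second.distance;
}

// knn12[i]: the 2 nearest neighbours in image 2 of descriptor i of image 1.
// knn21[i]: the 2 nearest neighbours in image 1 of descriptor i of image 2.
// Keeps a 1->2 match only if the reverse 2->1 match also passes the ratio test.
std::vector<Match> mutualRatioMatches(const std::vector<std::vector<Match>>& knn12,
                                      const std::vector<std::vector<Match>>& knn21,
                                      float match_conf) {
    // Accepted 2->1 matches, stored as (index in image 1, index in image 2)
    std::set<std::pair<int, int>> accepted21;
    for (const std::vector<Match>& nn : knn21) {
        if (nn.size() < 2) continue;
        if (passesRatioTest(nn[0], nn[1], match_conf))
            accepted21.insert(std::make_pair(nn[0].train, nn[0].query));
    }

    std::vector<Match> result;
    for (const std::vector<Match>& nn : knn12) {
        if (nn.size() < 2) continue;
        if (passesRatioTest(nn[0], nn[1], match_conf) &&
            accepted21.count(std::make_pair(nn[0].query, nn[0].train)) > 0)
            result.push_back(nn[0]);
    }
    return result;
}
```

Compared with the merged list used by the stock matcher, the mutual variant returns fewer coarse matches; whether the stricter list helps the later filtering stages is worth checking on your own images.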

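This article modifies the library source file matchers.cpp, which implements steps 3 and 4, in order to improve both stitching accuracy and stitching speed. For feature matching, all keypoints are first matched by brute force, and knnMatch (k = 2) with a ratio test is used to filter the raw matches; the matches accepted in the 1->2 direction and in the 2->1 direction are combined, and the result is called the coarse matches. The coarse matches are then filtered with a density-based clustering approach: each coarse match in turn is taken as a centre, and if more than a threshold number k of other matches lie within a given radius R of it and point in nearly the same direction, the centre and those neighbours are regarded as good matches (the code below uses R = 70 pixels, k = 4, and an angle tolerance of 0.1 rad). Applying this to all coarse matches yields M good matches; when M exceeds a given threshold N (num_matches_thresh1_ in BestOf2NearestMatcher::match), these M matches become the new set of fine matches. In our experiments, filtering the matches this way reached 100% precision. All of the fine matches are then forced to count as inliers when the pairwise confidence is computed, the images are grouped by this confidence using union-find (disjoint sets), and the homography H between each pair of images is generated for stitching. The changes to matchers.cpp are listed below.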
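The density filter described above can be sketched in isolation. This is a standalone plain C++ restatement under stated assumptions, not a drop-in replacement for the matchers.cpp code: Pt and PointMatch are hypothetical stand-ins for cv::Point2f and a matched keypoint pair, the default parameters mirror the values in the article's code (70 px radius, 0.1 rad angle tolerance, more than 4 neighbours), and the displacement angle is computed with atan2 folded into [-pi/2, pi/2], which equals atan(dy/dx) but avoids dividing by zero when dx == 0.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins: p1 is the keypoint in image 1, p2 its match in image 2.
struct Pt { float x, y; };
struct PointMatch { Pt p1, p2; };

// Direction of the displacement p1 - p2, folded into [-pi/2, pi/2] like atan(dy/dx).
float displacementAngle(const PointMatch& m) {
    const float kPi = 3.14159265f;
    float a = std::atan2(m.p1.y - m.p2.y, m.p1.x - m.p2.x);
    if (a > kPi / 2) a -= kPi;
    else if (a < -kPi / 2) a += kPi;
    return a;
}

// A match is a "centre" when more than minNeighbours other matches lie within
// `radius` pixels of it in image 1 and have a displacement angle within
// maxAngleDiff of its own; the centre and all of those neighbours are kept.
// Returns the indices of the kept matches in ascending order.
std::vector<std::size_t> densityFilter(const std::vector<PointMatch>& m,
                                       float radius = 70.f,
                                       float maxAngleDiff = 0.1f,
                                       std::size_t minNeighbours = 4) {
    assert(radius > 0.f && maxAngleDiff > 0.f);
    const std::size_t n = m.size();
    std::vector<float> angle(n);
    for (std::size_t i = 0; i < n; ++i) angle[i] = displacementAngle(m[i]);

    std::vector<bool> kept(n, false);
    std::vector<std::size_t> neighbours;
    for (std::size_t i = 0; i < n; ++i) {
        neighbours.clear();
        for (std::size_t j = 0; j < n; ++j) {
            if (j == i) continue;
            const float dist = std::hypot(m[i].p1.x - m[j].p1.x, m[i].p1.y - m[j].p1.y);
            if (dist < radius && std::fabs(angle[i] - angle[j]) < maxAngleDiff)
                neighbours.push_back(j);
        }
        if (neighbours.size() > minNeighbours) {
            kept[i] = true;
            for (std::size_t j : neighbours) kept[j] = true;
        }
    }

    std::vector<std::size_t> result;
    for (std::size_t i = 0; i < n; ++i)
        if (kept[i]) result.push_back(i);
    return result;
}
```

Every match is compared with every other, so the cost grows as O(n^2) in the number of coarse matches; a spatial index over the image-1 positions (for example a uniform grid) is one way to reduce this if it becomes a bottleneck.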

1. First modification (CpuMatcher::match):

```cpp
// Modified CpuMatcher::match in matchers.cpp.
// std::cout and std::atan/std::hypot need <iostream> and <cmath> at the top of the file.
void CpuMatcher::match(const ImageFeatures &features1, const ImageFeatures &features2, MatchesInfo& matches_info)
{
    CV_INSTRUMENT_REGION();

    CV_Assert(features1.descriptors.type() == features2.descriptors.type());
    CV_Assert(features2.descriptors.depth() == CV_8U || features2.descriptors.depth() == CV_32F);

    matches_info.matches.clear();

    // Always use brute-force matching (the upstream FLANN branch is bypassed).
    // Binary (CV_8U) descriptors need the Hamming norm; float descriptors use L2.
    const int norm_type = features2.descriptors.depth() == CV_8U ? NORM_HAMMING : NORM_L2;
    Ptr<cv::DescriptorMatcher> matcher = makePtr<BFMatcher>(norm_type);

    std::vector<std::vector<DMatch> > pair_matches;
    MatchesSet matches_set;
    std::vector<DMatch> temp_matches;

    // Find 1->2 matches that pass the ratio test
    matcher->knnMatch(features1.descriptors, features2.descriptors, pair_matches, 2);
    for (size_t i = 0; i < pair_matches.size(); ++i)
    {
        if (pair_matches[i].size() < 2)
            continue;
        const DMatch& m0 = pair_matches[i][0];
        const DMatch& m1 = pair_matches[i][1];
        if (m0.distance < (1.f - match_conf_) * m1.distance)
        {
            temp_matches.push_back(m0);
            matches_set.insert(std::make_pair(m0.queryIdx, m0.trainIdx));
        }
    }
    LOG("\n1->2 matches: " << temp_matches.size() << endl);

    // Add 2->1 matches that pass the ratio test and were not already found as 1->2
    pair_matches.clear();
    matcher->knnMatch(features2.descriptors, features1.descriptors, pair_matches, 2);
    for (size_t i = 0; i < pair_matches.size(); ++i)
    {
        if (pair_matches[i].size() < 2)
            continue;
        const DMatch& m0 = pair_matches[i][0];
        const DMatch& m1 = pair_matches[i][1];
        if (m0.distance < (1.f - match_conf_) * m1.distance &&
            matches_set.find(std::make_pair(m0.trainIdx, m0.queryIdx)) == matches_set.end())
            temp_matches.push_back(DMatch(m0.trainIdx, m0.queryIdx, m0.distance));
    }
    LOG("\n1->2 & 2->1 matches: " << temp_matches.size() << endl);

    // Density-based filtering of the coarse matches: a match is a centre when more than
    // kMinNeighbours other matches lie within kRadius pixels of it (in image 1) and their
    // displacement angles differ from its own by less than kMaxAngleDiff.
    // The centre and those neighbours are kept.
    const float kRadius = 70.f;
    const float kMaxAngleDiff = 0.1f;
    const size_t kMinNeighbours = 4;

    const std::vector<KeyPoint>& kp1 = features1.keypoints;
    const std::vector<KeyPoint>& kp2 = features2.keypoints;
    const size_t n = temp_matches.size();

    // Displacement angle of each match (atan of the slope), computed once
    std::vector<float> angle(n);
    for (size_t i = 0; i < n; ++i)
    {
        const Point2f d = kp1[temp_matches[i].queryIdx].pt - kp2[temp_matches[i].trainIdx].pt;
        angle[i] = std::atan(d.y / d.x);
    }

    std::vector<char> kept(n, 0);
    std::vector<size_t> neighbours;
    for (size_t i = 0; i < n; ++i)
    {
        neighbours.clear();
        const Point2f& pt_i = kp1[temp_matches[i].queryIdx].pt;
        for (size_t j = 0; j < n; ++j)
        {
            if (j == i)
                continue;
            const Point2f& pt_j = kp1[temp_matches[j].queryIdx].pt;
            if (std::hypot(pt_i.x - pt_j.x, pt_i.y - pt_j.y) < kRadius &&
                std::abs(angle[i] - angle[j]) < kMaxAngleDiff)
                neighbours.push_back(j);
        }
        if (neighbours.size() > kMinNeighbours)
        {
            kept[i] = 1;
            for (size_t j : neighbours)
                kept[j] = 1;
        }
    }

    // Emit each kept match once, skipping any match whose image-1 keypoint
    // position is already taken by an emitted match
    std::set<std::pair<float, float> > used_points;
    for (size_t i = 0; i < n; ++i)
    {
        if (!kept[i])
            continue;
        const Point2f& p = kp1[temp_matches[i].queryIdx].pt;
        if (used_points.insert(std::make_pair(p.x, p.y)).second)
            matches_info.matches.push_back(temp_matches[i]);
    }

    // Optional speed-up (left disabled): when there are many good matches,
    // keep only every (size / 100)-th one.

    std::cout << "features.img_idx<1,2>(" << features1.img_idx << "," << features2.img_idx
              << ") :" << matches_info.matches.size() << std::endl;
}
```

2. Second modification (BestOf2NearestMatcher::match):

void BestOf2NearestMatcher::match(const ImageFeatures &features1, const ImageFeatures &features2,

MatchesInfo &matches_info)

{

CV_INSTRUMENT_REGION();

(*impl_)(features1, features2, matches_info);

// Check if it makes sense to find homography

if (matches_info.matches.size() < static_cast<size_t>(num_matches_thresh1_))

return;

// Construct point-point correspondences for homography estimation

Mat src_points(1, static_cast<int>(matches_info.matches.size()), CV_32FC2);

Mat dst_points(1, static_cast<int>(matches_info.matches.size()), CV_32FC2);

for (size_t i = 0; i < matches_info.matches.size(); ++i)

{

const DMatch& m = matches_info.matches[i];

Point2f p = features1.keypoints[m.queryIdx].pt;

p.x -= features1.img_size.width * 0.5f;

p.y -= features1.img_size.height * 0.5f;

src_points.at<Point2f>(0, static_cast<int>(i)) = p;

p = features2.keypoints[m.trainIdx].pt;

p.x -= features2.img_size.width * 0.5f;

p.y -= features2.img_size.height * 0.5f;

dst_points.at<Point2f>(0, static_cast<int>(i)) = p;

}

// Find pair-wise motion

matches_info.H = findHomography(src_points, dst_points, matches_info.inliers_mask, RANSAC);

if (matches_info.H.empty() || std::abs(determinant(matches_info.H)) < std::numeric_limits<double>::epsilon())

return;

// Find number of inliers

matches_info.num_inliers = 0;

for (size_t i = 0; i < matches_info.inliers_mask.size(); ++i)

if (matches_info.inliers_mask[i])

matches_info.num_inliers++;

// These coeffs are from paper M. Brown and D. Lowe. "Automatic Panoramic Image Stitching

// using Invariant Features"

matches_info.confidence = matches_info.num_inliers / (8 + 0.3 * matches_info.matches.size());

cout<<"features.img_idx<1,2>confidence"<<"("<<features1.img_idx<<","<<features2.img_idx<<") :"<<matches_info.confidence<<endl;

// Set zero confidence to remove matches between too close images, as they don't provide

// additional information anyway. The threshold was set experimentally.

matches_info.confidence = matches_info.confidence > 3. ? 0. : matches_info.confidence;

cout<<"features.img_idx<1,2>num_inliers"<<"("<<features1.img_idx<<","<<features2.img_idx<<") :"<<matches_info.num_inliers<<endl;

// Check if we should try to refine motion

if (matches_info.num_inliers < num_matches_thresh2_)

return;

// Construct point-point correspondences for inliers only

src_points.create(1, matches_info.num_inliers, CV_32FC2);

dst_points.create(1, matches_info.num_inliers, CV_32FC2);

int inlier_idx = 0;

for (size_t i = 0; i < matches_info.matches.size(); ++i)

{

if (!matches_info.inliers_mask[i])

continue;

const DMatch& m = matches_info.matches[i];

Point2f p = features1.keypoints[m.queryIdx].pt;

p.x -= features1.img_size.width * 0.5f;

p.y -= features1.img_size.height * 0.5f;

src_points.at<Point2f>(0, inlier_idx) = p;

p = features2.keypoints[m.trainIdx].pt;

p.x -= features2.img_size.width * 0.5f;

p.y -= features2.img_size.height * 0.5f;

dst_points.at<Point2f>(0, inlier_idx) = p;

inlier_idx++;

}

// Rerun motion estimation on inliers only

matches_info.H = findHomography(src_points, dst_points, RANSAC);

//Mat R = findHomography(src_points, dst_points, RANSAC);

//Mat R = estimateAffine2D(m_LeftInlier, m_RightInlier);

/* double s = sqrt((R.at<double>(0, 0)) * (R.at<double>(0, 0)) + (R.at <double>(1, 0)) * (R.at <double>(1, 0)));

Mat H = cv::Mat(3, 3, R.type());

H.at< double>(0, 0) = R.at<double>(0, 0) / s;

H.at< double>(0, 1) = R.at <double>(0, 1) / s;

H.at< double>(0, 2) = R.at <double>(0, 2);//

H.at< double>(1, 0) = R.at <double>(1, 0) / s;

H.at< double>(1, 1) = R.at <double>(1, 1) / s;

H.at< double>(1, 2) = R.at <double>(1, 2);//

H.at< double>(2, 0) = 0.0;

H.at< double>(2, 1) = 0.0;

H.at<double>(2, 2) = 1.0;

//normalize(H, H, 1, 0, NORM_L2, -1);

matches_info.H = H;*/

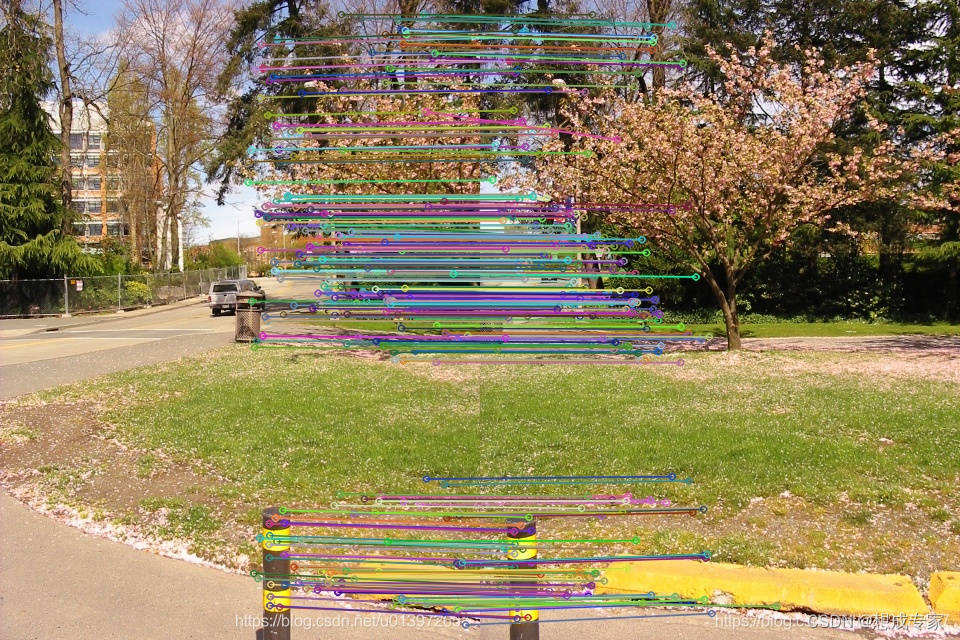

} 效果展示:
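The confidence and grouping steps described at the start of the article can be sketched without OpenCV. pairConfidence reproduces the Brown and Lowe formula and the "> 3 means too close" cutoff from the BestOf2NearestMatcher::match code above; the union-find grouping is only in the spirit of OpenCV's leaveBiggestComponent, which also uses disjoint sets over image pairs whose confidence passes stitching_detailed's --conf_thresh (default 1.0). All names here (pairConfidence, DisjointSets, PairScore, groupImages) are hypothetical. One consequence worth checking: if every fine match is forced to be an inlier, the confidence becomes n / (8 + 0.3n), which exceeds 3 once n > 240, so a pair with more than 240 fine matches would be zeroed by that cutoff.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Brown & Lowe confidence num_inliers / (8 + 0.3 * num_matches), with values
// above 3 set to 0 because such pairs are treated as "too close" images.
double pairConfidence(int numInliers, int numMatches) {
    const double c = numInliers / (8.0 + 0.3 * numMatches);
    return c > 3.0 ? 0.0 : c;
}

// Minimal union-find (disjoint sets) with path halving.
struct DisjointSets {
    std::vector<int> parent;
    explicit DisjointSets(int n) : parent(n) { std::iota(parent.begin(), parent.end(), 0); }
    int find(int x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]];  // path halving
            x = parent[x];
        }
        return x;
    }
    void merge(int a, int b) { parent[find(a)] = find(b); }
};

// Confidence of one image pair.
struct PairScore { int img1, img2; double confidence; };

// Puts two images in the same group when their pairwise confidence exceeds
// confThresh; returns one group label (the set root) per image.
std::vector<int> groupImages(int numImages, const std::vector<PairScore>& pairs, double confThresh) {
    DisjointSets ds(numImages);
    for (const PairScore& p : pairs) {
        assert(p.img1 >= 0 && p.img1 < numImages && p.img2 >= 0 && p.img2 < numImages);
        if (p.confidence > confThresh) ds.merge(p.img1, p.img2);
    }
    std::vector<int> label(numImages);
    for (int i = 0; i < numImages; ++i) label[i] = ds.find(i);
    return label;
}
```

For example, with pairs (0, 1) at confidence 1.5, (1, 2) at 0.5 and (2, 3) at 2.0 and a threshold of 1.0, images 0 and 1 end up in one group and images 2 and 3 in another.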
