1. Vectorizing a single sentence

Using each of the vectorization methods below, vectorize the sentence “中华女子学院:本科层次仅1专业招男生”.

1.1 One-hot method

# One-hot encoding of a single sentence
import jieba

# Load the stopword list (the file is assumed to be UTF-8 encoded)
with open('./data/哈工大停用词表.txt', encoding='utf-8') as f:
    stopwords = set(f.read().split("\n"))

sentence = '中华女子学院:本科层次仅1专业招男生'
tokens = jieba.lcut(sentence)
# print(tokens)
tokens = [w for w in tokens if w not in stopwords]  # remove stopwords

one_hots = {}
length = len(tokens)
for index, token in enumerate(tokens):
    one_hot = [0] * length  # a list of zeros, i.e. a one-dimensional vector
    one_hot[index] = 1
    one_hots[token] = one_hot
print(one_hots)
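The same idea can be written more compactly once the tokens are known. The sketch below assumes the sentence has already been segmented and stopword-filtered into the token list used as an example in section 1.3 (hard-coded here so the snippet runs without jieba or the stopword file). The helper name `one_hot_encode` is hypothetical.

```python
def one_hot_encode(tokens):
    """Map each distinct token to a one-hot list over the vocabulary."""
    # dict.fromkeys drops duplicates while keeping first-seen order
    vocab = list(dict.fromkeys(tokens))
    size = len(vocab)
    return {tok: [1 if i == j else 0 for j in range(size)]
            for i, tok in enumerate(vocab)}


# Assumed segmentation result, hard-coded for illustration
tokens = ['中华', '女子', '学院', '本科', '层次', '仅', '1', '专业', '招', '男生']
one_hots = one_hot_encode(tokens)
print(one_hots['学院'])  # [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]
```

Deduplicating first matters: in the loop-based version, a token that occurs twice overwrites its earlier entry and leaves one dimension unused.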

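1.2 TF and TF-IDF vectors

Method 1: first use CountVectorizer to build the term-frequency (TF) representation of the text, then use TfidfTransformer to convert it into the TF-IDF representation.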

# TF-IDF vector for a single sentence
import jieba
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer

# Load the stopword list (the file is assumed to be UTF-8 encoded)
with open('./data/哈工大停用词表.txt', encoding='utf-8') as f:
    stopwords = set(f.read().split("\n"))

sentence = '中华女子学院:本科层次仅1专业招男生'
tokens = [w for w in jieba.lcut(sentence) if w not in stopwords]  # remove stopwords
dic_data = [" ".join(tokens)]  # scikit-learn expects space-separated tokens
print(dic_data)

vectorizer = CountVectorizer()
# count[i][j] is the number of times word j occurs in document i
count = vectorizer.fit_transform(dic_data)
# print(count)

transformer = TfidfTransformer()
tfidf = transformer.fit_transform(count)  # convert raw counts into TF-IDF weights
# tfidf[i][j] is the TF-IDF weight of word j in document i
print(tfidf.toarray())

Method 2: use TfidfVectorizer to vectorize the text in a single step.

import jieba
from sklearn.feature_extraction.text import TfidfVectorizer

# Load the stopword list (the file is assumed to be UTF-8 encoded)
with open('./data/哈工大停用词表.txt', encoding='utf-8') as f:
    stopwords = set(f.read().split("\n"))

sentence = '中华女子学院:本科层次仅1专业招男生'
tokens = [w for w in jieba.lcut(sentence) if w not in stopwords]  # remove stopwords
dic_data = [" ".join(tokens)]
print(dic_data)

tfidf_vectorizer = TfidfVectorizer()
count_tfidf = tfidf_vectorizer.fit_transform(dic_data)
# print(count_tfidf)
train_weight = count_tfidf.toarray()  # dense matrix: (number of documents) x (vocabulary size)
print(train_weight)
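One detail is easy to miss when feeding segmented Chinese text to CountVectorizer or TfidfVectorizer: their default token_pattern, r"(?u)\b\w\w+\b", only keeps tokens of two or more characters, so single-character words are silently dropped from the vocabulary. The sketch below applies that same regular expression with Python's re module to an assumed segmentation of the example sentence (hard-coded so it runs without jieba or scikit-learn).

```python
import re

# Default token_pattern of scikit-learn's CountVectorizer / TfidfVectorizer
DEFAULT_TOKEN_PATTERN = r"(?u)\b\w\w+\b"

# Assumed segmentation of the example sentence, joined with spaces
text = "中华 女子 学院 本科 层次 仅 1 专业 招 男生"

kept = re.findall(DEFAULT_TOKEN_PATTERN, text)
dropped = [tok for tok in text.split() if tok not in kept]
print(kept)     # ['中华', '女子', '学院', '本科', '层次', '专业', '男生']
print(dropped)  # ['仅', '1', '招']
```

If single-character words should be kept, passing token_pattern=r"(?u)\b\w+\b" to the vectorizer is one option.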

1.3 Word2Vec vectors

- Installation: pip install gensim

- gensim's Word2Vec expects a list of tokenized sentences, for example: [['中华', '女子', '学院', '本科', '层次', '仅', '1', '专业', '招', '男生'], ['两天', '价', '网站', '背后', '重重', '迷雾', '做个', '网站', '究竟', '钱'], ['东', '5', '环', '海棠', '公社', '230', '290', '平', '2', '居', '准现房', '98', '折', '优惠']]

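import jieba
from gensim.models import Word2Vec

# Load the stopword list (the file is assumed to be UTF-8 encoded)
with open('./data/哈工大停用词表.txt', encoding='utf-8') as f:
    stopwords = set(f.read().split("\n"))

sentence = '中华女子学院:本科层次仅1专业招男生'
tokens = jieba.lcut(sentence)
# print(tokens)
corpus = [[w for w in tokens if w not in stopwords]]  # remove stopwords; a list holding one tokenized sentence
# print(corpus)

# Train the model
# vector_size: dimensionality of the word vectors
# min_count: words occurring fewer times than this are ignored
# epochs: number of training passes over the corpus (how often the weight matrices W1 and W2 are updated)
model = Word2Vec(corpus, vector_size=5, min_count=1, epochs=7)

# Save the model
model.save('model.bin')

# Load the model
new_model = Word2Vec.load('model.bin')
print(new_model.wv['学院'])
print("Top 5 words most similar to 学院:", new_model.wv.most_similar('学院', topn=5))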
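most_similar ranks words by the cosine similarity between their vectors. As a rough illustration of that measure, the sketch below computes it in plain Python on made-up 5-dimensional vectors. The numbers are invented for the example and are not output from the model above; `cosine_similarity` and `most_similar` here are hypothetical helpers, not gensim functions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vectors, topn=5):
    """Rank every other word by cosine similarity to `word`."""
    scores = [(other, cosine_similarity(vectors[word], vec))
              for other, vec in vectors.items() if other != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


# Made-up vectors, for illustration only
vectors = {
    '学院': [0.1, 0.3, -0.2, 0.0, 0.5],
    '本科': [0.2, 0.6, -0.4, 0.0, 1.0],    # same direction as 学院
    '男生': [-0.1, -0.3, 0.2, 0.0, -0.5],  # opposite direction
    '专业': [0.5, 0.0, 0.0, 0.1, 0.0],
}
print(most_similar('学院', vectors, topn=2))
```

With a single short training sentence, as in this section, the learned vectors stay close to their random initialization, so the ranking carries little meaning. A realistic corpus is needed before the neighbours become informative.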

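1.4 BERT vectors

- Environment setup

1. python -V
2. conda create -n pytorch python==3.7
3. conda activate pytorch
4. Open the PyTorch website (https://pytorch.org/) and install PyTorch following the instructions there
5. Verify the installation:
import torch
torch.__version__
torch.cuda.is_available()
6. pip install transformers

transformers expects a list of raw, unsegmented sentences, for example: ['北京欢迎你','智能科学与技术']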

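from transformers import BertTokenizer, BertModel

# Initialize the tokenizer
tokenizer = BertTokenizer.from_pretrained('./bert_base_chinese')
# Load the pretrained model
model = BertModel.from_pretrained('./bert_base_chinese')

# padding=True pads every sentence to the length of the longest one; return_tensors='pt' returns PyTorch tensors
batch_token1 = tokenizer(['北京欢迎你', '智能科学与技术'], padding=True, return_tensors='pt')
print(batch_token1)

# Convert token ids into vectors; unpacking the whole batch also passes attention_mask, so padding is ignored
encoded = model(**batch_token1)
print(encoded)  # last_hidden_state: one vector per token; pooler_output: pooled [CLS] vector, typically fed to a classifier
encoded_text = encoded[0]  # keep only the token vectors (last_hidden_state)
print('Token vectors:', encoded_text)
print("Shape of the token vectors:", encoded_text.shape)  # torch.Size([2, 9, 768]): 2 sentences x 9 token ids x 768 dimensions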
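The sequence length of 9 in the shape above follows from how the BERT tokenizer prepares a batch: Chinese text is split into single characters, [CLS] is prepended and [SEP] appended, and shorter sentences are padded with [PAD] up to the longest one. The sketch below mimics only that bookkeeping in plain Python, assuming every character is in the vocabulary and maps to exactly one token. `pad_batch` is a hypothetical helper, not part of transformers.

```python
def pad_batch(sentences):
    """Return (tokens, attention_mask) per sentence, padded to equal length."""
    tokenized = [['[CLS]'] + list(s) + ['[SEP]'] for s in sentences]
    max_len = max(len(t) for t in tokenized)
    tokens, masks = [], []
    for t in tokenized:
        pad = max_len - len(t)
        tokens.append(t + ['[PAD]'] * pad)
        masks.append([1] * len(t) + [0] * pad)  # 1 = real token, 0 = padding
    return tokens, masks


tokens, masks = pad_batch(['北京欢迎你', '智能科学与技术'])
print(len(tokens), len(tokens[0]))  # 2 9
print(masks[0])                     # [1, 1, 1, 1, 1, 1, 1, 0, 0]
```

The attention mask is what lets the model ignore padded positions, which is why the full tokenizer output, not just input_ids, is usually passed to the model.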

2. Vectorizing multiple sentences

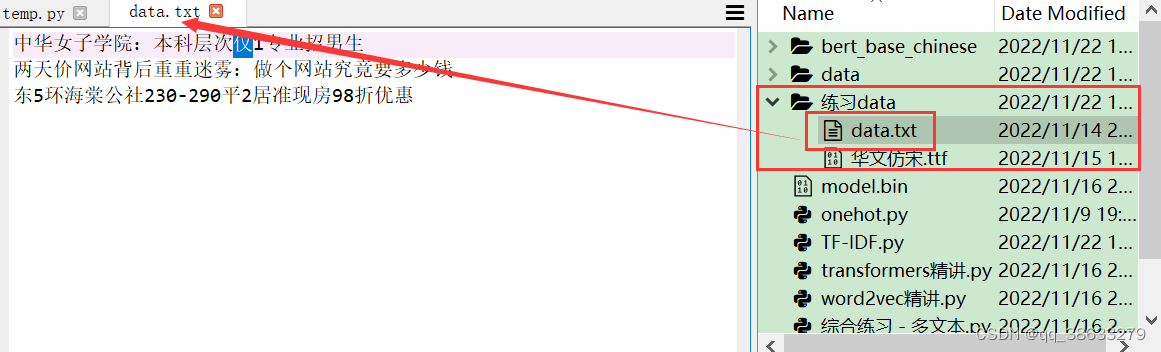

Corpus: the three texts are saved in data.txt, which is placed in the “练习data” folder. The code sits in the same directory as that folder.

2.1 One-hot method

# One-hot encoding over several sentences
import jieba

# Load the stopword list (the file is assumed to be UTF-8 encoded)
with open('./data/哈工大停用词表.txt', encoding='utf-8') as f:
    stopwords = set(f.read().split("\n"))

dic = []
with open('./练习data/data.txt', 'r', encoding='utf-8') as f:
    lines = f.read().split('\n')
    for line in lines:
        words = jieba.lcut(line)
        dic.append([w for w in words if w not in stopwords])  # one token list per line
# print(dic)

def mak_dic(seg_dic):
    # Build the vocabulary: every distinct word, in order of first appearance
    dics = []
    for line in seg_dic:
        for word in line:
            if word not in dics:
                dics.append(word)
    print(len(dics))

    one_hots = {}
    length = len(dics)
    for index, word in enumerate(dics):
        print(word)
        one_hot = [0] * length  # a list of zeros, i.e. a one-dimensional vector
        one_hot[index] = 1
        print(one_hot)
        one_hots[word] = one_hot
    return one_hots

result = mak_dic(dic)
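mak_dic returns one vector per word. To obtain one vector per sentence, a common follow-up is to sum the one-hot vectors of its words, which yields a bag-of-words count vector. The sketch below shows this on a small hard-coded corpus (assumed for illustration); `sentence_vectors` is a hypothetical helper.

```python
def sentence_vectors(segmented):
    """Turn tokenized sentences into bag-of-words count vectors."""
    # Vocabulary in order of first appearance, without duplicates
    vocab = list(dict.fromkeys(w for sent in segmented for w in sent))
    index = {w: i for i, w in enumerate(vocab)}
    vectors = []
    for sent in segmented:
        vec = [0] * len(vocab)
        for w in sent:
            vec[index[w]] += 1  # equivalent to adding the word's one-hot vector
        vectors.append(vec)
    return vocab, vectors


# Assumed, already segmented corpus
segmented = [['北京', '欢迎', '北京'], ['你们', '喜欢', '北京'], ['北京', '天安门']]
vocab, vectors = sentence_vectors(segmented)
print(vocab)    # ['北京', '欢迎', '你们', '喜欢', '天安门']
for vec in vectors:
    print(vec)  # [2, 1, 0, 0, 0] / [1, 0, 1, 1, 0] / [1, 0, 0, 0, 1]
```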

2.2 TF-IDF method

scikit-learn's TF-IDF tools expect a list of strings whose tokens are separated by spaces, for example: ['北京 欢迎 北京','你们 喜欢 北京','北京 天安门']

# TF-IDF over several sentences
import jieba
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer

# Load the stopword list (the file is assumed to be UTF-8 encoded)
with open('./data/哈工大停用词表.txt', encoding='utf-8') as f:
    stopwords = set(f.read().split("\n"))

dic = []       # one token list per line
dic_data = []  # one space-joined string per line, the format scikit-learn expects
with open('./练习data/data.txt', 'r', encoding='utf-8') as f:
    lines = f.read().split('\n')
    for line in lines:
        words = [w for w in jieba.lcut(line) if w not in stopwords]  # remove stopwords
        dic.append(words)
        dic_data.append(" ".join(words))  # join the filtered tokens, not the raw segmentation

vectorizer = CountVectorizer()
# count[i][j] is the number of times word j occurs in document i
count = vectorizer.fit_transform(dic_data)
# print(count)

transformer = TfidfTransformer()
tfidf = transformer.fit_transform(count)  # convert raw counts into TF-IDF weights
# tfidf[i][j] is the TF-IDF weight of word j in document i
print(tfidf.toarray())
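With its default settings (smooth_idf=True, norm='l2'), scikit-learn computes idf(t) = ln((1 + n) / (1 + df(t))) + 1, multiplies it by the raw term count, and then scales each row to unit Euclidean length. The sketch below reproduces that calculation in plain Python on the three-sentence example given at the start of this section. `tfidf_matrix` is a hypothetical helper, and the vectorizer's handling of single-character tokens is not modelled.

```python
import math
from collections import Counter


def tfidf_matrix(docs):
    """Smoothed, L2-normalized TF-IDF for space-separated documents."""
    tokenized = [doc.split() for doc in docs]
    vocab = sorted({w for toks in tokenized for w in toks})
    n = len(tokenized)
    # Document frequency: in how many documents each word appears
    df = Counter(w for toks in tokenized for w in set(toks))
    idf = {w: math.log((1 + n) / (1 + df[w])) + 1 for w in vocab}
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        row = [counts[w] * idf[w] for w in vocab]
        norm = math.sqrt(sum(x * x for x in row)) or 1.0  # avoid dividing by zero for empty rows
        rows.append([x / norm for x in row])
    return vocab, rows


docs = ['北京 欢迎 北京', '你们 喜欢 北京', '北京 天安门']
vocab, rows = tfidf_matrix(docs)
print(vocab)
for row in rows:
    print([round(x, 3) for x in row])
```

Because 北京 appears in every document, its idf takes the minimum value of 1, so within a row the rarer words receive more weight per occurrence.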

2.3 Word2Vec vectors

"""
# Installation: pip install gensim
gensim's Word2Vec expects a list of tokenized sentences:
[['中华', '女子', '学院', '本科', '层次', '仅', '1', '专业', '招', '男生'], ['两天', '价', '网站', '背后', '重重', '迷雾', '做个', '网站', '究竟', '钱'], ['东', '5', '环', '海棠', '公社', '230', '290', '平', '2', '居', '准现房', '98', '折', '优惠']]
"""
import jieba
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.font_manager import FontProperties
from gensim.models import Word2Vec
from sklearn.decomposition import PCA

# Load the stopword list (the file is assumed to be UTF-8 encoded)
with open('./data/哈工大停用词表.txt', encoding='utf-8') as f:
    stopwords = set(f.read().split("\n"))

dic = []  # one token list per line
with open('./练习data/data.txt', 'r', encoding='utf-8') as f:
    lines = f.read().split('\n')
    for line in lines:
        words = jieba.lcut(line)
        dic.append([w for w in words if w not in stopwords])  # remove stopwords

# Train the model
model = Word2Vec(dic, vector_size=5, min_count=1, epochs=7)
# Save the model
model.save('./model.bin')
# Load the model
new_model = Word2Vec.load('./model.bin')
print(new_model.wv['学院'])
print("Top 5 words most similar to 学院:", new_model.wv.most_similar('学院', topn=5))

# Similarity between two words (cosine similarity)
print(new_model.wv.similarity('本科', '本科'))  # about 1.0: a word compared with itself
print(new_model.wv.similarity('男生', '女子'))  # -0.15 in the original run; the value varies between training runs

# Reduce the word vectors to 2 dimensions with PCA
rawWordVec = []
word2ind = {}
for i, w in enumerate(new_model.wv.index_to_key):  # index, word
    word2ind[w] = i
    rawWordVec.append(new_model.wv[w])
rawWordVec = np.array(rawWordVec)
X_reduced = PCA(n_components=2).fit_transform(rawWordVec)
print(X_reduced)

# Scatter plot: 2-D projection of all word vectors
fig = plt.figure(figsize=(5, 5))
ax = fig.gca()
ax.set_facecolor('white')
ax.plot(X_reduced[:, 0], X_reduced[:, 1], '.', markersize=5, alpha=0.3, color='black')  # every word in black

# Highlight a few selected words
words = ['学院', '女子']
# Use a font that contains Chinese glyphs, otherwise the labels are garbled
zhfont1 = FontProperties(fname='./华文仿宋.ttf', size=16)
for w in words:
    if w in word2ind:
        ind = word2ind[w]
        xy = X_reduced[ind]
        plt.plot(xy[0], xy[1], '.', alpha=1, color='orange', markersize=10)
        plt.text(xy[0], xy[1], w, fontproperties=zhfont1, alpha=1, color='red')
plt.show()
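Word2Vec produces one vector per word, while this section is about vectorizing whole sentences. One simple and widely used option, which the original code does not show, is to average the vectors of the words in a sentence. The sketch below does this in plain Python with made-up 3-dimensional vectors standing in for model.wv; `average_vector` is a hypothetical helper.

```python
def average_vector(tokens, word_vectors, dim):
    """Average the vectors of the known tokens; unknown tokens are skipped."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        return [0.0] * dim  # no known words: fall back to the zero vector
    return [sum(vec[k] for vec in known) / len(known) for k in range(dim)]


# Made-up vectors, for illustration only
word_vectors = {
    '女子': [1.0, 0.0, 2.0],
    '学院': [3.0, 2.0, 0.0],
}
print(average_vector(['女子', '学院', '未登录词'], word_vectors, dim=3))  # [2.0, 1.0, 1.0]
```

With a trained gensim model, the membership test `t in word_vectors` and the lookup `word_vectors[t]` work the same way on model.wv.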

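2.4 BERT vectors

"""
# Environment setup
1. python -V
2. conda create -n pytorch python==3.7
3. conda activate pytorch
4. Open the PyTorch website (https://pytorch.org/) and install PyTorch following the instructions there
5. Verify the installation:
import torch
torch.__version__
torch.cuda.is_available()
6. pip install transformers
transformers expects a list of raw, unsegmented sentences, for example: ['北京欢迎你','智能科学与技术']
"""
dic = []
with open('./练习data/data.txt', 'r', encoding='utf-8') as f:
    lines = f.read().split('\n')
    for line in lines:
        dic.append(line)

from transformers import BertTokenizer, BertModel
from transformers import logging
logging.set_verbosity_error()  # silence warnings while loading the model

# Initialize the tokenizer and load the pretrained model
tokenizer = BertTokenizer.from_pretrained('./bert_base_chinese')
model = BertModel.from_pretrained('./bert_base_chinese')

# padding=True pads every sentence to the length of the longest one; return_tensors='pt' returns PyTorch tensors
batch_token1 = tokenizer(dic, padding=True, return_tensors='pt')
# Convert token ids into vectors; unpacking the whole batch also passes attention_mask, so padding is ignored
encoded = model(**batch_token1)
# print(encoded)  # last_hidden_state: one vector per token; pooler_output: pooled [CLS] vector
encoded_text = encoded[0]  # keep only the token vectors (last_hidden_state)
print(encoded_text.shape)  # torch.Size([number of sentences, length of the longest tokenized sentence, 768])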
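encoded_text holds one 768-dimensional vector per token. To get a single vector per sentence, one common choice is mask-aware mean pooling: average the token vectors while skipping padded positions. The sketch below illustrates the arithmetic on tiny nested lists in plain Python (made-up numbers, 2 dimensions instead of 768); `masked_mean` is a hypothetical helper. With real tensors, the same computation would use the attention_mask returned by the tokenizer.

```python
def masked_mean(token_vectors, attention_mask):
    """Average each sentence's token vectors, ignoring padded positions."""
    pooled = []
    for vecs, mask in zip(token_vectors, attention_mask):
        real = [v for v, m in zip(vecs, mask) if m == 1]  # drop padding
        dim = len(vecs[0])
        pooled.append([sum(v[k] for v in real) / len(real) for k in range(dim)])
    return pooled


# Made-up token vectors: 2 sentences x 3 positions x 2 dimensions
token_vectors = [
    [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]],  # last position is padding
    [[2.0, 0.0], [4.0, 2.0], [6.0, 4.0]],
]
attention_mask = [[1, 1, 0], [1, 1, 1]]
print(masked_mean(token_vectors, attention_mask))  # [[2.0, 3.0], [4.0, 2.0]]
```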
