Incremental Learning of Object Detectors without Catastrophic Forgetting 无灾难性遗忘的目标检测器增量学习

Paper: https://arxiv.org/abs/1708.06977

Code: https://github.com/kshmelkov/incremental_detectors

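Summary (a transfer-learning approach): First, a Fast R-CNN network is trained to detect the original set of classes CA, and this network is denoted A(CA). Two copies of A(CA) are then made. The first copy is frozen and, through a distillation loss, preserves the detection of the original classes CA. The second copy, B(CB), is extended to detect the new classes CB, which do not appear, or are not annotated, in the source images. New fully connected layers are created for the new classes only, and their outputs are concatenated with the original outputs. In other words, network A is extended by adding one output per new class to its last fully connected layers, which gives the initial network B. The new layers are randomly initialized in the same way as the corresponding layers of the original network A. The final goal is to train, using only the new data, a network that recognizes both the new and the old classes.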

Abstract 摘要

Despite their success for object detection, convolutional neural networks are ill-equipped for incremental learning, i.e., adapting the original model trained on a set of classes to additionally detect objects of new classes, in the absence of the initial training data. They suffer from “catastrophic forgetting”, an abrupt degradation of performance on the original set of classes when the training objective is adapted to the new classes. We present a method to address this issue, and learn object detectors incrementally, when neither the original training data nor annotations for the original classes in the new training set are available. The core of our proposed solution is a loss function to balance the interplay between predictions on the new classes and a new distillation loss which minimizes the discrepancy between responses for old classes from the original and the updated networks. This incremental learning can be performed multiple times, for a new set of classes in each step, with a moderate drop in performance compared to the baseline network trained on the ensemble of data. We present object detection results on the PASCAL VOC 2007 and COCO datasets, along with a detailed empirical analysis of the approach.

尽管卷积神经网络在目标检测方面取得了成功,但它并不适合增量学习,即在缺乏初始训练数据的情况下,调整在一组类别上训练的原始模型,使其能够额外检测新类别的目标。它们会遭遇“灾难性遗忘”:当训练目标调整为适应新类别时,原有类别上的性能会突然下降。我们提出了一种解决该问题的方法。在既没有原始训练数据、新训练集中也没有原始类别标注的情况下,该方法能够增量地学习目标检测器。我们所提方案的核心是一个损失函数,它平衡新类别上的预测与一个新的蒸馏损失之间的相互作用,后者最小化原始网络与更新后网络对旧类别响应之间的差异。这种增量学习可以执行多次,每一步加入一组新的类别。与在全部数据上训练的基线网络相比,性能只有适度下降。我们展示了在PASCAL VOC 2007和COCO数据集上的目标检测结果,并对该方法进行了详细的实证分析。

1. Introduction 介绍

Modern detection methods, such as [4,32], based on convolutional neural networks (CNNs) have achieved state-of-the-art results on benchmarks such as PASCAL VOC [10] and COCO [24]. This, however, comes with a high training time to learn the models. Furthermore, in an era where datasets are evolving regularly, with new classes and samples, it is necessary to develop incremental learning methods. A popular way to mitigate this is to use CNNs pretrained on a certain dataset for a task, and adapt them to new datasets or tasks, rather than train the entire network from scratch.

基于卷积神经网络(CNN)的现代检测方法,如[4,32],已经在PASCAL VOC[10]和COCO[24]等基准上取得了最先进的结果。然而,学习这些模型需要很长的训练时间。此外,在数据集不断加入新类别和新样本、定期演变的时代,有必要开发增量学习方法。缓解这一问题的一种流行方法是使用在某个数据集上针对某项任务预训练的CNN,并使其适应新的数据集或任务,而不是从头开始训练整个网络。

Fine-tuning [15] is one approach to adapt a network to new data or tasks. Here, the output layer of the original network is adjusted, either by replacing it with classes corresponding to the new task, or by adding new classes to the existing ones. The weights in this layer are then randomly initialized, and all the parameters of the network are tuned with the objective for the new task. While this framework is very successful on the new classes, its performance on the old ones suffers dramatically, if the network is not trained on all the classes jointly. This issue, where a neural network forgets previously learned knowledge when adapted to a new task, is referred to as catastrophic interference or forgetting. It has been known for over a couple of decades in the context of feedforward fully connected networks [25, 30], and needs to be addressed in the current state-of-the-art object detector networks, if we want to do incremental learning.

微调[15]是使网络适应新数据或任务的一种方法。在这里,调整原始网络的输出层,或者用对应于新任务的类替换它,或者向现有类添加新类。然后随机初始化该层中的权重,并根据新任务的目标调整网络的所有参数。虽然这个框架在新类上非常成功,但如果网络不是在所有类上联合训练,它在旧类上的性能会受到极大的影响。这个问题被称为灾难性干扰或遗忘,即神经网络在适应新任务时忘记先前学习的知识。几十年来,在前馈全连接网络[25,30]的背景下,人们已经知道了这一点,如果我们想进行增量学习,则需要在当前最先进的目标检测器网络中加以解决。

Consider the example in Figure 1. It illustrates catastrophic forgetting when incrementally adding a class, horse in this object detection example. The first CNN (top) is trained on three classes, including person, and localizes the rider in the image. The second CNN (bottom) is an incrementally trained version of the first one for the category horse. In other words, the original network is adapted with images from only this new class. This adapted network localizes the horse in the image, but fails to detect the rider, which it was capable of originally, and despite the fact that the person class was not updated. In this paper, we present a method to alleviate this issue.

考虑图1中的示例。它展示了在这个目标检测示例中增量添加一个类别(horse)时发生的灾难性遗忘。第一个CNN(上图)在包括person在内的三个类别上训练,能够在图像中定位骑手。第二个CNN(下图)是第一个CNN针对类别horse增量训练得到的版本。换言之,原始网络仅使用这个新类别的图像进行调整。调整后的网络在图像中定位到了马,却没有检测到原本能够检测到的骑手,尽管person类别并未被更新。在本文中,我们提出了一种缓解这一问题的方法。

Figure 1. Catastrophic forgetting. An object detector network originally trained for three classes, including person, detects the rider (top). When the network is retrained with images of the new class horse, it detects the horse in the test image, but fails to localize the rider (bottom). 图1. 灾难性遗忘。最初为三个类别(包括person)训练的目标检测网络能检测到骑手(上)。当使用新类别horse的图像重新训练该网络后,它在测试图像中检测到了马,但无法定位骑手(下)。

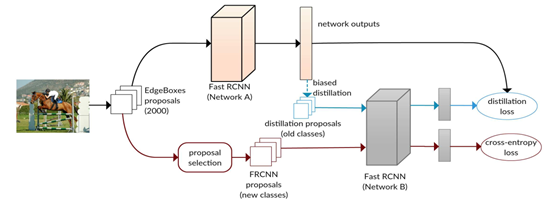

Using only the training samples for the new classes, we propose a method for not only adapting the old network to the new classes, but also ensuring performance on the old classes does not degrade. The core of our approach is a loss function balancing the interplay between predictions on the new classes, i.e., cross-entropy loss, and a new distillation loss which minimizes the discrepancy between responses for old classes from the original and the new networks. The overall approach is illustrated in Figure 2.

仅使用新类别的训练样本,我们提出的方法不仅使旧网络适应新类别,而且确保旧类别上的性能不会下降。我们方法的核心是一个损失函数,它平衡新类别上的预测(即交叉熵损失)与一个新的蒸馏损失之间的相互作用,后者最小化原始网络与新网络对旧类别响应之间的差异。整体方法如图2所示。

Figure 2. Overview of our framework for learning object detectors incrementally. It is composed of a frozen copy of the detector (Network A) and the detector (Network B) adapted for the new class(es). See text for details. 图2. 增量学习目标检测器的框架概述。它由检测器的冻结副本(网络A)和针对新类别调整的检测器(网络B)组成。详见正文。

We use a frozen copy of the original detection network to compute the distillation loss. This loss is related to the concept of “knowledge distillation” proposed in [19], but our application of it is significantly different from this previous work, as discussed in Section 3.2. We specifically target the problem of object detection, which has the additional challenge of localizing objects with bounding boxes, unlike other attempts [23,31] limited to the image classification task. We demonstrate experimental results on the PASCAL VOC and COCO datasets using Fast R-CNN [14] as the network. Our results show that we can add new classes incrementally to an existing network without forgetting the original classes, and with no access to the original training data. We also evaluate variants of our method empirically, and show the influence of distillation and the loss function. Note that our framework is general and can be applied to any other CNN-based object detectors where proposals are computed externally, or static sliding windows are used.

我们使用原始检测网络的冻结副本来计算蒸馏损失。这种损失与[19]中提出的“知识蒸馏”概念有关,但如第3.2节所述,我们的应用方式与之前的工作有很大不同。我们专门针对目标检测问题。与仅限于图像分类任务的其他尝试[23,31]不同,该问题还面临用边界框定位目标的额外挑战。我们使用Fast R-CNN[14]作为网络,在PASCAL VOC和COCO数据集上给出了实验结果。结果表明,我们可以向现有网络增量地添加新类别,既不会遗忘原始类别,也无需访问原始训练数据。我们还通过实验评估了方法的多个变体,并展示了蒸馏和损失函数的影响。请注意,我们的框架是通用的,可以应用于任何其他基于CNN的目标检测器,只要其建议框由外部计算或使用静态滑动窗口即可。

2. Related work 相关工作

The problem of incremental learning has a long history in machine learning and artificial intelligence [6,29,36,37]. Some of the more recent work, e.g., [8, 9], focuses on continuously updating the training set with data acquired from the Internet. They are: (i) restricted to learning with a fixed data representation [9], or (ii) keep all the collected data to retrain the model [8]. Other work partially addresses these issues by learning classifiers without access to the ensemble of data [26, 33], but uses a fixed image representation. Unlike these methods, our approach is aimed at learning the representation and classifiers jointly, without storing all the training examples. To this end, we use neural networks to model the task in an end-to-end fashion.

增量学习问题在机器学习和人工智能领域有着悠久的历史[6,29,36,37]。最近的一些工作,例如[8,9],侧重于使用从互联网获取的数据不断更新训练集。它们或者(i)仅限于使用固定的数据表示进行学习[9],或者(ii)保留所有收集的数据以重新训练模型[8]。其他工作通过在无法访问全部数据的情况下学习分类器,部分解决了这些问题[26,33],但使用的是固定的图像表示。与这些方法不同,我们的方法旨在联合学习表示和分类器,而不存储所有训练样本。为此,我们使用神经网络以端到端的方式对任务进行建模。

Our work is also topically related to transfer learning and domain adaptation methods. Transfer learning uses knowledge acquired from one task to help learn another. Domain adaptation transfers the knowledge acquired for a task from a data distribution to other (but related) data. These paradigms, and in particular fine-tuning, a special case of transfer learning, are very popular in computer vision. CNNs learned for image classification [21] are often used to train other vision tasks such as object detection [28,40] and semantic segmentation [7].

我们的工作在主题上还与迁移学习和领域自适应方法相关。迁移学习利用从一项任务中获得的知识来帮助学习另一项任务。领域自适应将为某一任务获取的知识从一种数据分布迁移到其他(但相关的)数据上。这些范式,特别是作为迁移学习特例的微调,在计算机视觉中非常流行。为图像分类学习的CNN[21]常被用于训练其他视觉任务,如目标检测[28,40]和语义分割[7]。

An alternative to transfer knowledge from one network to another is distillation [5, 19]. This was originally proposed to transfer knowledge between different neural networks—from a large network to a smaller one for efficient deployment. The method in [19] encouraged the large (old) and the small (new) networks to produce similar responses. It has found several applications in domain adaptation and model compression [17, 34, 39]. Overall, transfer learning and domain adaptation methods require at least unlabeled data for both the tasks or domains, and in its absence, the new network quickly forgets all the knowledge acquired in the source domain [12, 16, 25, 30]. In contrast, our approach addresses the challenging case where no training data is available for the original task (i.e., detecting objects belonging to the original classes), by building on the concept of knowledge distillation [19].

将知识从一个网络迁移到另一个网络的另一种方法是蒸馏[5,19]。它最初被提出用于在不同神经网络之间传递知识,即从大网络迁移到小网络,以便高效部署。[19]中的方法鼓励大(旧)网络和小(新)网络产生相似的响应。它在领域自适应和模型压缩中有多个应用[17,34,39]。总的来说,迁移学习和领域自适应方法至少需要两个任务或领域的未标注数据,如果没有这些数据,新网络会很快遗忘在源领域获得的所有知识[12,16,25,30]。相比之下,我们的方法基于知识蒸馏的概念[19],解决了原始任务(即检测属于原始类别的目标)没有可用训练数据这一具有挑战性的情况。

This phenomenon of forgetting is believed to be caused by two factors [11, 22]. First, the internal representations in hidden layers are often overlapping, and a small change in a single neuron can affect multiple representations at the same time [11]. Second, all the parameters in feedforward networks are involved in computations for every data point, and a backpropagation update affects all of them in each training step [22]. The problem of addressing these issues in neural networks has its origin in classical connectionist networks several years ago [2, 11–13, 25], but needs to be adapted to today’s large deep neural network architectures for vision tasks [23,31].

这种遗忘现象被认为是由两个因素引起的[11,22]。首先,隐藏层中的内部表示通常是重叠的,单个神经元的微小变化可能同时影响多个表示[11]。其次,前馈网络中的所有参数都参与每个数据点的计算,而反向传播更新会在每个训练步骤中影响所有参数[22]。在神经网络中解决这些问题的研究起源于多年前经典的连接主义网络[2,11–13,25],但需要适配当今用于视觉任务的大型深度神经网络架构[23,31]。

Li and Hoiem [23] use knowledge distillation for one of the classical vision tasks, image classification, formulated in a deep learning framework. However, their evaluation is limited to the case where the old network is trained on a dataset, while the new network is trained on a different one, e.g., Places365 for the old and PASCAL VOC for the new, ImageNet for the old and PASCAL VOC for the new, etc. While this is interesting, it is a simpler task, because: (i) different datasets often contain dissimilar classes, (ii) there is little confusion between datasets—it is in fact possible to identify a dataset simply from an image [38].

Li和Hoiem[23]在深度学习框架下将知识蒸馏用于一项经典视觉任务,即图像分类。然而,其评估仅限于旧网络在一个数据集上训练、新网络在另一个数据集上训练的情况,例如旧网络用Places365、新网络用PASCAL VOC,旧网络用ImageNet、新网络用PASCAL VOC等。虽然这很有趣,但这是一项更简单的任务,因为:(i)不同的数据集通常包含不同的类别;(ii)数据集之间几乎没有混淆,事实上,仅凭一幅图像就可以识别出它来自哪个数据集[38]。

Our method is significantly different from [23] in two ways. First, we deal with the more difficult problem of learning incrementally on the same dataset, i.e., the addition of classes to the network. As shown in [31], [23] fails in a similar setting of learning image classifiers incrementally. Second, we address the object detection task, where it is very common for the old and the new classes to co-occur, unlike the classification task.

我们的方法在两个方面与[23]明显不同。首先,我们处理在同一数据集上增量学习的更困难的问题,即向网络中添加类。如[31]所述,[23]在增量学习图像分类器的类似设置中失败。第二,我们处理目标检测任务,与分类任务不同,新类和旧类同时出现是非常常见的。

Very recently, Rebuffi et al. [31] address some of the drawbacks in [23] with their incremental learning approach for image classification. They also use knowledge distillation, but decouple the classifier and the representation learning. Additionally, they rely on a subset of the original training data to preserve the performance on the old classes. In comparison, our approach is an end-to-end learning framework, where the representation and the classifier are learned jointly, and we do not use any of the original training samples to avoid catastrophic forgetting.

最近,Rebuffi et al.[31]利用其用于图像分类的增量学习方法解决了[23]中的一些缺点。它们还使用知识蒸馏,但将分类器和表示学习解耦。此外,它们依赖于原始训练数据的子集来保持旧类的性能。相比之下,我们的方法是端到端学习框架,其中表示和分类器是联合学习的,并且我们不使用任何原始训练样本来避免灾难性遗忘。

Alternatives to distillation include growing the capacity of the network with new layers [35] and applying strong per-parameter regularization selectively [20]. The downsides of these methods are the rapid increase in the number of new parameters to be learned [35], and their limited evaluation on the easier task of image classification [20].

蒸馏的替代方法包括:用新层增加网络容量[35],以及选择性地施加逐参数的强正则化[20]。这些方法的缺点是需要学习的新参数数量迅速增加[35],而且仅在更简单的图像分类任务上进行了有限的评估[20]。

In summary, none of the previous work addresses the problem of learning classifiers for object detection incrementally, without using previously seen training samples.

总之,以前的工作都没有解决在不使用旧训练样本的情况下增量学习分类器进行目标检测的问题。

3. Incremental learning of new classes

Our overall approach for incremental learning of a CNN model for object detection is illustrated in Figure 2. It contains a frozen copy of the original detector (denoted by Network A in the figure), which is used to: (i) select proposals corresponding to the old classes, i.e., distillation proposals, and (ii) compute the distillation loss. Network B in the figure is the adapted network for the new classes. It is obtained by increasing the number of outputs in the last layer of the original network, such that the new output layer includes the old as well as the new classes.

图2说明了我们对用于目标检测的CNN模型进行增量学习的总体方法。它包含原始检测器的冻结副本(图中的网络A),用于:(i)选择与旧类别相对应的建议框,即蒸馏建议框;(ii)计算蒸馏损失。图中的网络B是针对新类别调整后的网络。它通过增加原始网络最后一层的输出数量得到,使新的输出层既包括旧类别,也包括新类别。

In order to avoid catastrophic forgetting, we constrain the learning process of the adapted network. We achieve this by incorporating a distillation loss, to preserve the performance on the old classes, as an additional term in the standard cross-entropy loss function (see §3.2). Specifically, we evaluate each new training sample on the frozen copy (Network A) to choose a diverse set of proposals (distillation proposals in Figure 2), and record their responses. With these responses in hand, we compute a distillation loss which measures the discrepancy between the two networks for the distillation proposals. This loss is added to the cross-entropy loss on the new classes to make up the loss function for training the adapted detection network. As we show in the experimental evaluation, the distillation loss as well as the strategy to select the distillation proposals are critical in preserving the performance on the old classes (see §4).

为了避免灾难性遗忘,我们对调整后网络的学习过程加以约束。具体做法是把用于保持旧类别性能的蒸馏损失作为附加项加入标准交叉熵损失函数中(见§3.2)。具体而言,我们在冻结副本(网络A)上评估每个新训练样本,以选择一组多样的建议框(图2中的蒸馏建议框),并记录其响应。有了这些响应,我们计算蒸馏损失,用于度量两个网络在蒸馏建议框上的差异。该损失与新类别上的交叉熵损失相加,共同构成训练调整后检测网络的损失函数。正如实验评估所示,蒸馏损失以及蒸馏建议框的选择策略对于保持旧类别的性能至关重要(见§4)。

In the remainder of this section, we provide details of the object detector network (§3.1), the loss functions and the learning algorithm (§3.2), and strategies to sample the object proposals (§3.3).

在本节剩余部分中,我们将详细介绍目标检测网络(§3.1)、损失函数和学习算法(§3.2),以及目标建议框的采样策略(§3.3)。

3.1. Object detection network

We use a variant of a popular framework for object detection—Fast R-CNN [14], which is a proposal-based detection method built with pre-computed object proposals, e.g., [3, 41]. We chose this instead of the more recent Faster R-CNN [32], which integrates the computation of category-specific proposals into the network, because we need proposals agnostic to object categories, such as EdgeBoxes [41], MCG [3]. We use EdgeBoxes [41] proposals for PASCAL VOC 2007 and MCG [3] for COCO. This allows us to focus on the problem of learning the representation and the classifier, given a pre-computed set of generic object proposals.

我们使用了流行的目标检测框架Fast R-CNN[14]的一个变体。这是一种基于建议框的检测方法,使用预先计算的目标建议框,例如[3,41]。我们没有选择较新的Faster R-CNN[32],后者将特定类别建议框的计算集成到网络中,而我们需要与目标类别无关的建议框,如EdgeBoxes[41]和MCG[3]。我们对PASCAL VOC 2007使用EdgeBoxes[41]建议框,对COCO使用MCG[3]建议框。这使我们能够专注于在给定一组预先计算的通用目标建议框的情况下学习表示和分类器的问题。

In our variant of Fast R-CNN, we replaced the VGG-16 trunk with a deeper ResNet-50 [18] component, which is faster and more accurate than VGG-16. We follow the suggestions in [18] to combine Fast R-CNN and ResNet architectures. The network processes the whole image through a sequence of residual blocks. Before the last strided convolution layer we insert a RoI pooling layer, which performs max pooling over regions of varied sizes, i.e., proposals, into a 7 × 7 feature map. Then we add the remaining residual blocks, a layer for average pooling over spatial dimensions, and two fully connected layers: a softmax layer for classification (PASCAL or COCO classes, for example, along with the background class) and a regression layer for bounding box refinement, with independent corrections for each class.

在我们的Fast R-CNN变体中,我们用更深的ResNet-50[18]替换了VGG-16主干,它比VGG-16更快、更准确。我们按照[18]中的建议将Fast R-CNN与ResNet架构结合。网络通过一系列残差块处理整幅图像。在最后一个带步长的卷积层之前,我们插入一个RoI池化层,它对不同大小的区域(即建议框)进行最大池化,得到7×7的特征图。然后,我们添加剩余的残差块、一个在空间维度上进行平均池化的层,以及两个全连接层:一个用于分类的softmax层(例如PASCAL或COCO类别以及背景类),以及一个用于边界框精修的回归层,后者对每个类别进行独立的校正。
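To make the RoI pooling step concrete, here is a minimal pure-Python sketch of Fast R-CNN-style RoI max pooling on a single-channel feature map. It is an illustration under assumptions, not the authors' TensorFlow layer. The RoI is assumed to be already projected onto feature-map coordinates, bin boundaries use floor/ceil rounding, and a real layer would apply the same pooling to every channel.

```python
import math

def roi_max_pool(feature, roi, pooled_h=7, pooled_w=7):
    """Max-pool one RoI of a single-channel feature map into a pooled_h x pooled_w grid.

    feature: 2D list (H rows x W columns) of numbers.
    roi: (x1, y1, x2, y2), inclusive integer coordinates on the feature-map scale
         (assumption: the projection from image coordinates happens elsewhere).
    """
    x1, y1, x2, y2 = roi
    height, width = len(feature), len(feature[0])
    bin_h = max(y2 - y1 + 1, 1) / pooled_h
    bin_w = max(x2 - x1 + 1, 1) / pooled_w
    pooled = []
    for ph in range(pooled_h):
        # Row range of this bin: floor for the start, ceil for the end, clipped to the map.
        h0 = min(max(y1 + math.floor(ph * bin_h), 0), height)
        h1 = min(max(y1 + math.ceil((ph + 1) * bin_h), 0), height)
        row = []
        for pw in range(pooled_w):
            w0 = min(max(x1 + math.floor(pw * bin_w), 0), width)
            w1 = min(max(x1 + math.ceil((pw + 1) * bin_w), 0), width)
            cells = [feature[i][j] for i in range(h0, h1) for j in range(w0, w1)]
            row.append(max(cells) if cells else 0.0)  # empty bins output 0
        pooled.append(row)
    return pooled
```

For a 4 × 4 map pooled into a 2 × 2 grid, each output cell is the maximum of one quadrant. With the default 7 × 7 grid, the same RoI is split into overlapping bins, because it is smaller than the grid.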

The input to the network is an image and about 2000 precomputed object proposals represented as bounding boxes. During inference, the high-scoring proposals are refined according to bounding box regression. Then, a per-category non-maxima suppression (NMS) is performed to get the final detection results. The loss function to train the Fast R-CNN detector, corresponding to a RoI, is given by:

网络的输入是一幅图像和大约2000个用边界框表示的预计算目标建议框。在推理过程中,根据边界框回归对高分建议框进行精修。然后,按类别执行非极大值抑制(NMS)以获得最终检测结果。训练Fast R-CNN检测器时,对应于单个RoI的损失函数由下式给出:

$$L(p, k^*, t, t^*) = -\log p_{k^*} + [k^* \ge 1]\,\mathrm{smooth}_{L_1}(t - t^*) \tag{1}$$

where p is the set of responses of the network for all the classes (i.e., softmax output), k∗ is a groundtruth class, t is an output of bounding box refinement layer, and t∗ is the ground truth bounding box proposal. The first part of the loss denotes log-loss over classes, and the second part is localization loss. For more implementation details about Fast R-CNN, refer to the original paper [14].

其中p是网络对所有类别的响应集合(即softmax输出),k∗是groundtruth类别,t是边界框精修层的输出,t∗是groundtruth边界框。损失的第一部分是类别上的对数损失,第二部分是定位损失。有关Fast R-CNN的更多实现细节,请参阅原文[14]。
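The per-RoI loss (1) can be sketched in plain Python as follows. This is a minimal illustration that assumes the localization term is the smooth L1 loss of Fast R-CNN [14] and that t holds the regression output for the ground-truth class. Batching and averaging over RoIs are omitted, and `fast_rcnn_roi_loss` is a hypothetical name.

```python
import math

def smooth_l1(x):
    # Smooth L1 (robust) loss used by Fast R-CNN for box regression.
    return 0.5 * x * x if abs(x) < 1.0 else abs(x) - 0.5

def fast_rcnn_roi_loss(logits, k_star, t, t_star):
    """Loss (1) for one RoI.

    logits: unnormalized scores for [background, class 1, ..., class K].
    k_star: ground-truth class index, 0 meaning background.
    t, t_star: predicted and target box offsets (4 values) for class k_star.
    """
    # -log p_{k*} via a numerically stable log-softmax.
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(z - m) for z in logits))
    cls_loss = log_norm - logits[k_star]
    # Localization term only for foreground RoIs (the [k* >= 1] indicator).
    loc_loss = sum(smooth_l1(a - b) for a, b in zip(t, t_star)) if k_star >= 1 else 0.0
    return cls_loss + loc_loss
```

With uniform logits over four outputs, the classification term is log 4 ≈ 1.386. A background RoI adds no localization term, whatever its box offsets are.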

3.2. Dual-network learning 双网络学习

First, we train a Fast R-CNN to detect the original set of classes CA. We refer to this network as A(CA). The goal now is to add a new set of classes CB to this. We make two copies of A(CA): one that is frozen to recognize classes CA through distillation loss, and the second B(CB) that is extended to detect the new classes CB, which were not present or at least not annotated in the source images. The extension is done only in the last fully connected layers, i.e., classification and bounding box regression. We create sibling (i.e., fully-connected) layers [15] for new classes only and concatenate their outputs with the original ones. The new layers are initialized randomly in the same way as the corresponding layers in Fast R-CNN. Our goal is to train B(CB) to recognize classes CA ∪ CB using only new data and annotations for CB.

首先,我们训练一个Fast R-CNN来检测原始类别集合CA,并将该网络记为A(CA)。现在的目标是在此基础上添加一组新的类别CB。我们复制两份A(CA)。一份被冻结,通过蒸馏损失来识别类别CA。另一份B(CB)被扩展以检测新类别CB,这些类别在源图像中没有出现,或至少没有被标注。扩展仅在最后的全连接层中进行,即分类层和边界框回归层。我们仅为新类别创建并列的(即全连接)层[15],并将其输出与原始输出拼接。新层以与Fast R-CNN中相应层相同的方式随机初始化。我们的目标是仅使用CB的新数据和标注,训练B(CB)识别CA ∪ CB。
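A minimal sketch of the output-layer extension follows, under stated assumptions. The sibling layer for CB is a plain linear map whose weights are drawn from a zero-mean Gaussian (std 0.01 is assumed here, by analogy with the Fast R-CNN classification layer), and `new_class_layer` and `extended_logits` are hypothetical helper names. The same concatenation would apply to the box-regression layer, with four outputs per new class.

```python
import random

def linear(x, weights, biases):
    # One output per row of `weights`: dot(row, x) + bias.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]

def new_class_layer(input_dim, num_new, std=0.01, seed=0):
    """Hypothetical sibling layer that scores only the new classes C_B.

    Weights ~ N(0, std^2) with zero biases (std=0.01 is an assumption).
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, std) for _ in range(input_dim)] for _ in range(num_new)]
    return weights, [0.0] * num_new

def extended_logits(x, old_layer, new_layer):
    # Concatenate the original outputs (background + C_A) with the new-class outputs (C_B).
    return linear(x, *old_layer) + linear(x, *new_layer)
```

Because the old rows are copied unchanged, B(CB) initially reproduces the old logits of A(CA) exactly and only appends scores for CB.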

The distillation loss represents the idea of “keeping all the answers of the network the same or as close as possible”. If we train B(CB) without distillation, average precision on the old classes will degrade quickly, after a few hundred SGD iterations. This is a manifestation of catastrophic forgetting. We illustrate this in Sections 4.3 and 4.4. We compute the distillation loss by applying the frozen copy of A(CA) to any new image. Even if no object is detected by A(CA), the unnormalized logits (softmax input) carry enough information to “distill” the knowledge of the old classes from A(CA) to B(CB). This process is illustrated in Figure 2.

蒸馏损失体现了“使网络的所有输出保持不变或尽可能接近”的思想。如果在没有蒸馏的情况下训练B(CB),旧类别的平均精度会在几百次SGD迭代后迅速下降。这就是灾难性遗忘的表现。我们在第4.3节和第4.4节中对此进行了说明。我们将A(CA)的冻结副本应用于任意新图像来计算蒸馏损失。即使A(CA)没有检测到任何目标,未归一化的logits(softmax输入)也携带足够的信息,可以将旧类别的知识从A(CA)蒸馏到B(CB)。该过程如图2所示。

For each image we randomly sample 64 RoIs out of 128 with the smallest background score. The logits computed for these RoIs by A(CA) serve as targets for the old classes in the L2 distillation loss shown below. The logits for the new classes CB are not considered in this loss. We subtract the mean over the class dimension from these unnormalized logits (y) of each RoI to obtain the corresponding centered logits ȳ used in the distillation loss. Bounding box regression outputs tA (of the same set of proposals used for computing the logit loss) also constrain the loss of the network B(CB). We chose to use L2 loss instead of a cross-entropy loss for regression outputs because it demonstrates more stable training and performs better (see §4.4). The distillation loss combining the logits and regression outputs is written as:

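对于每幅图像,我们从背景分数最小的128个RoI中随机抽取64个。A(CA)为这些RoI计算的logits在下面的L2蒸馏损失中作为旧类别的目标。该损失不考虑新类别CB的logits。我们从每个RoI的未归一化logits(y)中减去类别维度上的均值,得到蒸馏损失中使用的中心化logits ȳ。边界框回归输出tA(对应于计算logit损失时所用的同一组建议框)同样约束网络B(CB)的损失。对于回归输出,我们选择使用L2损失而不是交叉熵损失,因为它的训练更稳定,效果也更好(见§4.4)。结合logits和回归输出的蒸馏损失写为:

$$L_{\text{dist}}(y_A, t_A, y_B, t_B) = \frac{1}{N\,|C_A|} \sum \left[ (\bar{y}_A - \bar{y}_B)^2 + (t_A - t_B)^2 \right] \tag{2}$$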

where N is the number of RoIs sampled for distillation (i.e., 64 in this case), |CA| is the number of old classes, and the sum is over all the RoIs for the old classes. We distill logits without any smoothing, unlike [19], because most of the proposals already produce a smooth distribution of scores. Moreover, in our case, both the old and the new networks are similar with almost the same parameters (in the beginning), and so smoothing the logits distribution is not necessary to stabilize the learning.

其中,N是用于蒸馏的采样RoI数量(此处为64),|CA|是旧类别的数量,求和遍历所有RoI在旧类别上的输出。与[19]不同,我们在蒸馏logits时不进行任何平滑,因为大多数建议框已经产生了平滑的分数分布。此外,在我们的情况下,旧网络和新网络十分相似,(在开始时)参数几乎相同,因此无需通过平滑logits分布来稳定学习。
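The distillation loss (2) can be sketched in plain Python as follows. This is an illustrative sketch rather than the released code. `distillation_loss` is a hypothetical name. Each logit row is assumed to hold only the |CA| old-class logits, since whether the background logit is also distilled is left open here. Each box row is assumed to hold the matching old-class regression outputs.

```python
def center(logits):
    # Subtract the mean over the class dimension: the centered logits y-bar.
    mean = sum(logits) / len(logits)
    return [z - mean for z in logits]

def distillation_loss(y_a, t_a, y_b, t_b):
    """Sketch of loss (2) over N sampled RoIs.

    y_a, y_b: per-RoI old-class logits from A(C_A) (targets) and B(C_B).
    t_a, t_b: per-RoI old-class box-regression outputs from the two networks.
    """
    n = len(y_a)
    num_old = len(y_a[0])  # |C_A| (assumes rows hold only old-class logits)
    total = 0.0
    for ya, yb, ta, tb in zip(y_a, y_b, t_a, t_b):
        total += sum((a - b) ** 2 for a, b in zip(center(ya), center(yb)))
        total += sum((a - b) ** 2 for a, b in zip(ta, tb))
    return total / (n * num_old)
```

Centering makes the loss invariant to adding the same constant to every logit of a RoI, so logits [1, 2, 3] and [11, 12, 13] give zero loss. Box outputs, by contrast, are compared directly.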

The values of the bounding box regression are also distilled because we update all the layers, and any update of the convolutional layers will affect them indirectly. As box refinements are important to detect objects accurately, their values should be conserved as well. This is an easier task than keeping the classification scores because bounding box refinements for each class are independent, and are not linked by the softmax.

边界框回归的值也被蒸馏,因为我们更新了所有层,卷积层的任何更新都会间接影响它们。由于边界框精修对准确检测目标很重要,它们的值也应当被保持。这比保持分类分数更容易,因为每个类别的边界框精修是相互独立的,并不通过softmax关联。

The overall loss L to train the model incrementally is a weighted sum of the distillation loss (2), and the standard Fast R-CNN loss (1) that is applied only to new classes CB, where groundtruth bounding box annotation is available. In essence,

增量训练模型的总损失L是蒸馏损失(2)与标准Fast R-CNN损失(1)的加权和,其中后者仅作用于有groundtruth边界框标注的新类别CB。实质上:

$$L = L_{\text{Fast R-CNN}} + \lambda\, L_{\text{dist}} \tag{3}$$

where the hyperparameter λ balances the two losses. We set λ to 1 in all the experiments, based on cross-validation (see §4.4).

其中超参数λ用于平衡两个损失。根据交叉验证,我们在所有实验中将λ设为1(见§4.4)。

The interplay between the two networks A(CA) and B(CB) provides the necessary supervision that prevents the catastrophic forgetting in the absence of original training data used by A(CA). After the training of B(CB) is completed, we can add more classes by freezing the newly trained network and using it for distillation. We can thus add new classes sequentially. Since B(CB) is structurally identical to A(CA ∪ CB), the extension can be repeated to add more classes.

两个网络A(CA)和B(CB)之间的相互作用提供了必要的监督,从而在缺乏A(CA)所用原始训练数据的情况下防止灾难性遗忘。B(CB)训练完成后,我们可以冻结新训练的网络并将其用于蒸馏,从而添加更多类别。因此,我们可以按顺序添加新类别。由于B(CB)在结构上与A(CA ∪ CB)相同,可以重复这一扩展过程以添加更多类别。
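The sequential procedure, together with the weighted sum (3), can be summarized in a small bookkeeping sketch. `Detector`, `add_classes` and `total_loss` are hypothetical stand-ins. They only track class lists and combine two loss values, and the actual training step is indicated by a comment.

```python
import copy
from dataclasses import dataclass, field

@dataclass
class Detector:
    # Hypothetical stand-in that only tracks the classes covered by the output layers.
    classes: list = field(default_factory=list)
    frozen: bool = False

def total_loss(loss_fast_rcnn, loss_dist, lam=1.0):
    # Loss (3): Fast R-CNN loss on the new classes plus lambda times the distillation loss.
    return loss_fast_rcnn + lam * loss_dist

def add_classes(current, new_classes):
    """One incremental step: a frozen copy is the teacher, an extended copy the student."""
    teacher = copy.deepcopy(current)
    teacher.frozen = True  # plays the role of A(C_A): only produces distillation targets
    student = copy.deepcopy(current)
    student.classes = current.classes + list(new_classes)  # B(C_B) covers C_A plus C_B
    # Training of `student` with total_loss(...) on images of the new classes goes here.
    return teacher, student
```

Calling `add_classes` again on the returned student repeats the step. The trained student then plays the role of the frozen teacher for the next set of classes.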

3.3. Sampling strategy

As mentioned before, we choose 64 proposals out of 128 with the lowest background score, thus biasing the distillation to non-background proposals. We noticed that proposals recognized as confident background do not provide strong learning cues to conserve the original classes. One possibility is using an unbiased distillation that randomly samples 64 proposals out of the whole set of 2000 proposals. However, when doing so, the detection performance on old classes is noticeably worse because most of the distillation proposals are now background, and carry no strong signal about the object categories. Therefore, it is advantageous to select non-background proposals. We demonstrate this empirically in Section 4.5.

如前所述,我们从背景分数最低的128个建议框中选择64个,从而使蒸馏偏向非背景建议框。我们注意到,被高置信度识别为背景的建议框并不能为保留原有类别提供有力的学习线索。一种可能的做法是使用无偏蒸馏,即从全部2000个建议框中随机抽取64个。但是这样做时,旧类别上的检测性能明显较差,因为此时大多数蒸馏建议框都是背景,不携带关于目标类别的强信号。因此,选择非背景建议框是有利的。我们在第4.5节中通过实验证明了这一点。
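The biased sampling rule can be written as a short sketch, assuming the background scores of A(CA) for all proposals of an image are available as a list. The function names are hypothetical. The unbiased alternative discussed above is included for comparison.

```python
import random

def sample_distillation_rois(bg_scores, pool_size=128, num_samples=64, seed=None):
    """Biased sampling of distillation RoIs from A(C_A)'s background scores.

    Keep the pool_size RoIs with the lowest background score, then draw
    num_samples of them uniformly at random without replacement.
    """
    rng = random.Random(seed)
    by_score = sorted(range(len(bg_scores)), key=lambda i: bg_scores[i])
    pool = by_score[:pool_size]
    return rng.sample(pool, min(num_samples, len(pool)))

def sample_unbiased(num_rois, num_samples=64, seed=None):
    # The unbiased alternative: uniform sampling over all proposals.
    return random.Random(seed).sample(range(num_rois), num_samples)
```

With about 2000 proposals per image, the biased variant only ever returns indices from the 128 least background-like RoIs. The unbiased variant mostly picks background.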

4. Experiments 实验

4.1. Datasets and evaluation

We evaluate our method on the PASCAL VOC 2007 detection benchmark and the Microsoft COCO challenge dataset. VOC 2007 consists of 5K images in the trainval split and 5K images in the test split for 20 object classes. COCO on the other hand has 80K images in the training set and 40K images in the validation set for 80 object classes (which includes all the classes from VOC). We use the standard mean average precision (mAP) at 0.5 IoU threshold as the evaluation metric. We also report mAP averaged over IoU thresholds from 0.5 to 0.95 on COCO, as recommended in the COCO challenge guidelines. Evaluation of the VOC 2007 experiments is done on the test split, while for COCO, we use the first 5000 images from the validation set.

我们在PASCAL VOC 2007检测基准和Microsoft COCO挑战赛数据集上评估了我们的方法。VOC 2007包含20个目标类别,trainval划分中有5K幅图像,测试划分中有5K幅图像。COCO则包含80个目标类别(涵盖VOC中的所有类别),训练集中有80K幅图像,验证集中有40K幅图像。我们使用IoU阈值为0.5时的标准平均精度均值(mAP)作为评估指标。按照COCO挑战赛指南的建议,我们还报告了COCO上在0.5到0.95的不同IoU阈值上平均的mAP。VOC 2007实验在测试划分上进行评估,而对于COCO,我们使用验证集中的前5000幅图像。
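Both metrics rest on intersection over union (IoU). The sketch below computes IoU for boxes with continuous coordinates and averages per-threshold mAP values over the ten COCO thresholds 0.50, 0.55, ..., 0.95. It assumes the per-threshold mAP values come from an existing evaluator. The official VOC devkit uses a +1 pixel convention for box sizes that this sketch omits.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) with continuous coordinates."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 (10 values).
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

def coco_style_map(map_at_threshold):
    """Average a dict {IoU threshold: mAP} over the 10 COCO thresholds."""
    return sum(map_at_threshold[t] for t in COCO_IOU_THRESHOLDS) / len(COCO_IOU_THRESHOLDS)
```

Two 2 × 2 boxes offset by one unit in each direction overlap in a 1 × 1 square, so their IoU is 1/7. They would not count as a match at the VOC threshold of 0.5.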

4.2. Implementation details

We use SGD with Nesterov momentum [27] to train the network in all the experiments. We set the learning rate to 0.001, decay to 0.0001 after 30K iterations, and momentum to 0.9. In the second stage of training, i.e., learning the extended network with new classes, we used a learning rate of 0.0001. The A(CA) network is trained for 40K iterations on PASCAL VOC 2007 and for 400K iterations on COCO. The B(CB) network is trained for 3K-5K iterations when only one class is added, and for the same number of iterations as A(CA) when many classes are added at once. Following Fast R-CNN [14], we regularize with weight decay of 0.00005 and take batches of two images each. All the layers of A(CA) and B(CB) networks are finetuned unless stated otherwise.

在所有实验中,我们使用带Nesterov动量[27]的SGD训练网络。学习率设为0.001,30K次迭代后衰减为0.0001,动量设为0.9。在训练的第二阶段,即用新类别学习扩展网络时,我们使用0.0001的学习率。A(CA)网络在PASCAL VOC 2007上训练40K次迭代,在COCO上训练400K次迭代。当只添加一个类别时,B(CB)网络训练3K-5K次迭代;当一次添加多个类别时,其迭代次数与A(CA)相同。遵循Fast R-CNN[14],我们使用0.00005的权重衰减进行正则化,每个批次包含两幅图像。除非另有说明,A(CA)和B(CB)网络的所有层都会进行微调。
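The sketch below encodes the reported learning-rate schedule and one Nesterov-momentum SGD update for a scalar parameter. The update follows a common formulation, the one used by PyTorch's SGD with `nesterov=True` and no dampening. Whether it matches the authors' TensorFlow optimizer exactly is an assumption, as is keeping the stage-B rate constant, since the text mentions no decay there.

```python
def learning_rate(step, stage="A"):
    """Reported schedule: 1e-3, decayed to 1e-4 after 30K iterations for A(C_A);
    1e-4 for the incremental stage B(C_B) (kept constant here, an assumption)."""
    if stage == "B":
        return 1e-4
    return 1e-3 if step < 30_000 else 1e-4

def nesterov_sgd_step(w, v, grad, lr, momentum=0.9, weight_decay=5e-5):
    """One Nesterov-momentum SGD update for a scalar parameter w with velocity v."""
    g = grad + weight_decay * w  # L2 weight decay folded into the gradient
    v = momentum * v + g
    w = w - lr * (g + momentum * v)  # look-ahead (Nesterov) correction
    return w, v
```

On the toy quadratic f(w) = w²/2, whose gradient is w, repeated steps with lr = 0.1 and momentum 0.9 drive w toward 0.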

The integration of ResNet into Fast R-CNN (see §3.1) is done by adding a RoI pooling layer before the conv5_1 layer, and replacing the final classification layer by two sibling fully connected layers. The batch normalization layers are frozen, and as in Fast R-CNN, no dropout is used. RoIs are considered as detections if they have a score more than 0.5 for any of the classes. We apply per-class NMS with an IoU threshold of 0.3. Training is image-centric, and a batch is composed of 64 proposals per image, with 16 of them having an IoU of at least 0.5 with a groundtruth object. All the proposals are filtered to have IoU less than 0.7, as in [41].

通过在conv5_1层之前添加RoI池化层,并用两个并列的全连接层替换最终的分类层,将ResNet集成到Fast R-CNN中(见§3.1)。批归一化层被冻结,并且与Fast R-CNN一样不使用dropout。如果某个RoI在任一类别上的得分超过0.5,则将其视为检测结果。我们按类别应用NMS,IoU阈值为0.3。训练以图像为中心,每幅图像在批次中贡献64个建议框,其中16个与某个groundtruth目标的IoU至少为0.5。与[41]一样,所有建议框都经过过滤,使IoU小于0.7。
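The detection post-processing described above (score threshold 0.5, then per-class NMS at IoU 0.3) can be sketched as follows. This is a simplified illustration: it assumes one box per RoI shared across classes, whereas Fast R-CNN applies class-specific box refinement first. `per_class_detections` is a hypothetical name.

```python
def iou(a, b):
    # Boxes are (x1, y1, x2, y2) with continuous coordinates.
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.3):
    """Greedy NMS: keep the best box, drop boxes overlapping a kept box by more than the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep

def per_class_detections(boxes, class_scores, score_threshold=0.5, iou_threshold=0.3):
    """Score thresholding and NMS run independently for every class.

    boxes: one box per RoI (assumption: class-specific refinement ignored);
    class_scores: per-RoI list of foreground-class scores.
    Returns {class index: [kept RoI indices, best first]}.
    """
    detections = {}
    for c in range(len(class_scores[0])):
        idx = [i for i, s in enumerate(class_scores) if s[c] > score_threshold]
        kept = nms([boxes[i] for i in idx], [class_scores[i][c] for i in idx], iou_threshold)
        detections[c] = [idx[k] for k in kept]
    return detections
```

Because suppression runs per class, a box suppressed for one class, like RoI 1 for class 0 in the check below, can still survive as a detection of another class.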

We use TensorFlow [1] to develop our incremental learning framework. Each experiment begins with choosing a subset of classes to form the set CA. Then, a network is learned only on the subset of the training set composed of all the images containing at least one object from CA. Annotations for other classes in these images are ignored. With the new classes chosen to form the set CB, we learn the extended network as described in Section 3.2 with the subset of the training set containing at least one object from CB. As in the previous case, annotations of all the other classes, including those of the original classes CA, are ignored. For computational efficiency, we precomputed the responses of the frozen network A(CA) on the training data (as every image is typically used multiple times).

我们使用TensorFlow[1]开发我们的增量学习框架。每个实验都从选择类子集来形成集合CA开始。然后,只在训练集的子集上学习网络,该训练集由至少包含CA中一个对象的所有图像组成。忽略这些图像中其他类的注释。通过选择新类来形成集CB,我们学习第3.2节所述的扩展网络,其中训练集的子集包含至少一个来自CB的对象。与前一种情况一样,忽略所有其他类的注释,包括原始类CA的注释。为了提高计算效率,我们预先计算了冻结网络A(CA)对训练数据的响应(因为每个图像通常使用多次)。
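The data selection rule for each stage (keep images with at least one object of the target classes and ignore all other annotations) can be sketched as below. The annotation format, a dict from image id to (class name, box) pairs, is an assumption made for illustration. `select_training_subset` is a hypothetical name.

```python
def select_training_subset(annotations, target_classes):
    """annotations: dict image_id -> list of (class_name, box).

    Keep images with at least one object of target_classes and drop the
    annotations of all other classes (they are treated as unannotated).
    """
    targets = set(target_classes)
    subset = {}
    for image_id, objects in annotations.items():
        kept = [(cls, box) for cls, box in objects if cls in targets]
        if kept:
            subset[image_id] = kept
    return subset
```

An image containing both a person and a horse is kept for stage B when CB = {horse}, but only its horse annotation survives. The person in it becomes unlabeled, which is the situation the distillation loss has to handle.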

4.3. Addition of one class

In the first experiment we take 19 classes in alphabetical order from the VOC dataset as CA, and the remaining one as the only new class CB. We then train the A(1-19) network on the VOC trainval subset containing any of the 19 classes, and the B(20) network is trained on the trainval subset containing the new class. A summary of the evaluation of these networks on the VOC test set is shown in Table 1, with the full results in Table 6.

在第一个实验中,我们从VOC数据集中按字母顺序选取19个类作为CA,剩下的一个作为唯一的新类CB。然后,我们在包含19个类中任何一个的VOC trainval子集上训练A(1-19)网络,在包含新类的trainval子集上训练B(20)网络。表1显示了VOC测试集上这些网络的评估总结,完整结果见表6。
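The alphabetical class splits used in these experiments can be written down explicitly. The sketch lists the 20 PASCAL VOC class names as used in the VOC devkit and splits them into CA and CB. `split_classes` is a hypothetical helper.

```python
VOC_CLASSES = sorted([
    "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow",
    "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa",
    "train", "tvmonitor",
])

def split_classes(num_old):
    """Alphabetical split: the first num_old classes form C_A, the rest form C_B."""
    return VOC_CLASSES[:num_old], VOC_CLASSES[num_old:]
```

`split_classes(19)` leaves "tvmonitor" as the single new class, matching the 19+1 setting. `split_classes(10)` gives the 10+10 setting, with "diningtable" through "tvmonitor" as CB.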

A baseline approach for addition of a new class is to add an output to the last layer and freeze the rest of the network. This freezing, where the weights of the network’s convolutional layers are fixed (“B(20) w frozen trunk” in the tables), results in a lower performance on the new class as the previously learned representations have not been adapted for it. Furthermore, it does not prevent degradation of the performance on the old classes, where mAP drops by almost 15%. When we freeze all the layers, including the old output layer (“B(20) w all layers frozen”), or apply distillation loss (“B(20) w frozen trunk and distill.”), the performance on the old classes is maintained, but that on the new class is poor. This shows that finetuning of convolutional layers is necessary to learn the new classes.

添加新类别的基线方法是在最后一层添加一个输出,并冻结网络的其余部分。这种冻结方式固定了网络卷积层的权重(表中的“B(20) w frozen trunk”)。由于之前学到的表示没有针对新类别进行调整,新类别上的性能较低。此外,它也不能防止旧类别性能的下降,旧类别上的mAP下降了近15%。当我们冻结所有层,包括旧的输出层(“B(20) w all layers frozen”),或者应用蒸馏损失(“B(20) w frozen trunk and distill.”)时,旧类别上的性能得以保持,但新类别上的性能较差。这表明,要学习新类别,必须对卷积层进行微调。

When the network B(20) is trained without the distillation loss (“B(20) w/o distillation” in the tables), it can learn the 20th class, but the performance decreases significantly on the other (old) classes. As seen in Table 6, the AP on classes like “cat”, “person” drops by over 60%. The same training procedure with distillation loss largely alleviates this catastrophic forgetting. Without distillation, the new network has 25.0% mAP on the old classes compared to 68.3% with distillation, and 69.6% mAP of baseline Fast R-CNN trained jointly on all classes (“A(1-20)”). With distillation the performance is similar to that of the old network A(1-19), but is lower for certain classes, e.g., “bottle”. The 20th class “tvmonitor” does not get the full performance of the baseline (73.9%), with or without distillation, and is less than 60%. This is potentially due to the size of the training set. The B(20) network is trained on only a few hundred images containing instances of this class. Thus, the “tvmonitor” classifier does not see the full diversity of negatives.

We also performed the “addition of one class” experiment with each of the VOC categories being the new class. The behavior for each class is very similar to the “tvmonitor” case described above. The mAP varies from 66.1% (for new class “sheep”) to 68.3% (“tvmonitor”) with mean 67.38% and standard deviation of 0.6%.

4.4. Addition of multiple classes

In this scenario we train the network A(1-10) on the first 10 VOC classes (in alphabetical order) with the VOC trainval subset corresponding to these classes. In the second stage of training we used the remaining 10 classes as CB and trained only on the images containing the new classes. Table 2 shows a summary of the evaluation of these networks on the VOC test set, with the full results in Table 7.
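
The class split can be sketched as follows, using the 20 VOC class names in alphabetical order. The `second_stage_images` helper and the toy annotation format (an image id mapped to a list of `(class, box)` pairs) are hypothetical. The helper keeps only images that contain a new class and discards old-class boxes, since those annotations are assumed unavailable in the second stage.

```python
VOC_CLASSES = [
    "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow",
    "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]


def split_classes(classes, n_old):
    """First n_old classes form C_A, the rest form C_B."""
    return classes[:n_old], classes[n_old:]


def second_stage_images(annotations, new_classes):
    """Keep images with at least one new-class object, dropping old-class boxes.

    annotations: hypothetical dict {image_id: [(class_name, box), ...]}.
    """
    new = set(new_classes)
    kept = {}
    for image_id, objects in annotations.items():
        new_objects = [(c, box) for c, box in objects if c in new]
        if new_objects:
            kept[image_id] = new_objects
    return kept


old, new = split_classes(VOC_CLASSES, 10)
toy = {
    "img1": [("cat", (0, 0, 10, 10)), ("person", (5, 5, 20, 30))],
    "img2": [("cow", (0, 0, 50, 40))],
}
print(second_stage_images(toy, new))  # {'img1': [('person', (5, 5, 20, 30))]}
```

With `n_old=15`, the same split yields A(1-15) and the new classes pottedplant, sheep, sofa, train and tvmonitor used in the 5-class experiments.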

Training the network B(11-20) on the 10 new classes with distillation (for the old classes) achieves 63.1% mAP (“B(11-20) w distillation” in the tables), compared to 69.8% for the baseline network trained on all 20 classes (“A(1-20)”). Just as in the previous experiment of adding one class, performance on the new classes is slightly worse than with joint training of all the classes. For example, as seen in Table 7, the performance for “person” is 73.2% vs 79.1%, and 72.5% vs 76.8% for the “train” class. The mAP on new classes is 63.1% for the network with distillation versus 71.3% for the jointly trained model. However, without distillation, the network achieves only 12.8% mAP (“+B(11-20) w/o distillation”) on the old classes. Note that the method without bounding box distillation (“+B(11-20) w/o bbox distillation”) is inferior to our full method (“+B(11-20) w distillation”).

We also performed the 10-class experiment for different values of λ in Eq. (3), the hyperparameter controlling the relative importance of the distillation and Fast R-CNN losses. The results in Figure 3 show that when distillation is weak (λ = 0.1), the new classes are easier to learn, but the old ones are more easily forgotten. When distillation is strong (λ = 10), it destabilizes training and impedes learning the new classes. Setting λ to 1 is a good trade-off between learning new classes and preventing catastrophic forgetting.
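
Equation (3) is not reproduced in this excerpt. Assuming it has the usual additive form, λ weights the distillation term against the standard Fast R-CNN loss. λ = 0 would reduce to plain fine-tuning without distillation, and λ = 0.1, 1 and 10 are the values discussed above.

```latex
\mathcal{L} = \mathcal{L}_{\mathrm{RCNN}} + \lambda \, \mathcal{L}_{\mathrm{dist}}
```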

We also compare our approach with elastic weight consolidation (EWC) [20], an alternative to distillation that applies per-parameter regularization selectively to alleviate catastrophic forgetting. We reimplemented EWC, verified that it produces results comparable to those reported in [20] on MNIST, and then adapted it to our object detection task by using the Fast R-CNN batches during the training phase (as done in Section 4.2) and by replacing the log loss with the Fast R-CNN loss. When 10 classes are added at once, our approach outperforms EWC, as shown in Tables 2 and 7.
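
For reference, the penalty introduced by EWC [20] adds a quadratic term per parameter. Each term is weighted by a diagonal Fisher information estimate computed after training on the old task. The numpy sketch below shows that standard form. It is not the authors’ reimplementation, and it leaves out the detection-specific details (Fast R-CNN batches, Fast R-CNN loss). Here `lam` is EWC’s own regularization weight, unrelated to the λ of the distillation loss.

```python
import numpy as np


def diagonal_fisher(per_sample_grads):
    """Estimate the diagonal Fisher as the mean squared per-sample gradient.

    per_sample_grads: (S, P) log-likelihood gradients on old-task data.
    """
    return np.mean(per_sample_grads ** 2, axis=0)


def ewc_penalty(theta, theta_old, fisher, lam):
    """(lam / 2) * sum_i F_i * (theta_i - theta_old_i)^2, added to the new-task loss."""
    return 0.5 * lam * np.sum(fisher * (theta - theta_old) ** 2)


def ewc_penalty_grad(theta, theta_old, fisher, lam):
    """Gradient of ewc_penalty with respect to theta."""
    return lam * fisher * (theta - theta_old)


fisher = diagonal_fisher(np.array([[1.0, 0.0], [3.0, 0.0]]))  # [5., 0.]
theta_old = np.zeros(2)
theta = np.array([1.0, 5.0])
print(ewc_penalty(theta, theta_old, fisher, lam=2.0))  # 5.0
```

The second parameter moves by 5 but costs nothing because its Fisher estimate is zero. This is what makes the regularization selective, in contrast to distillation, which constrains outputs rather than weights.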

We evaluated the influence of the number of new classes in incremental learning. To this end, we first learn a network for 15 classes and then train it for the remaining 5 classes, all added at once, on VOC. These results are summarized in Table 3, with the per-class results shown in Table 8. The network B(16-20) has better overall performance than B(11-20): 65.9% mAP versus 63.1% mAP. As in the experiment with 10 classes, the performance is lower than that of the initial model A(1-15) for a few classes, e.g., “table” and “horse”. The performance on the new classes is lower than that of the jointly trained baseline Fast R-CNN A(1-20). Overall, the mAP of B(16-20) is lower than that of the baseline Fast R-CNN (65.9% versus 69.8%).

The evaluation on COCO, shown in Table 4, uses the first 40 classes as the initial set and the remaining 40 in the second stage. The network B(41-80) trained with the distillation loss obtains 37.4% mAP with the PASCAL-style metric and 21% mAP with the COCO-style metric. The baseline network trained on all 80 classes performs similarly, with 38.1% and 22.6% mAP, respectively. We observe that our proposed method overcomes catastrophic forgetting, just as in the case of VOC seen earlier.
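
The two metrics differ in the IoU threshold at which a detection counts as correct. The PASCAL-style metric uses IoU ≥ 0.5. The COCO-style metric averages AP over thresholds from 0.50 to 0.95 in steps of 0.05, which largely explains why the COCO-style numbers are lower. The toy sketch below computes IoU for boxes in continuous `(x1, y1, x2, y2)` coordinates; it ignores the VOC devkit’s +1 pixel convention. It then reports the fraction of each threshold set at which a single detection would match. This is an illustration, not an AP implementation.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of boxes (x1, y1, x2, y2), continuous coordinates."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


PASCAL_THRESHOLDS = np.array([0.5])
COCO_THRESHOLDS = np.linspace(0.5, 0.95, 10)  # 0.50, 0.55, ..., 0.95


def fraction_matched(pred, gt, thresholds):
    """Share of IoU thresholds at which pred would count as a true positive for gt."""
    return float(np.mean(iou(pred, gt) >= thresholds))


gt, pred = (0, 0, 3, 1), (0, 0, 2, 1)  # IoU = 2/3
print(fraction_matched(pred, gt, PASCAL_THRESHOLDS))  # 1.0
print(fraction_matched(pred, gt, COCO_THRESHOLDS))    # 0.4
```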

We also studied whether distillation depends on the distribution of images used in this loss. To this end, we used the model A(1-10) trained on VOC, and then performed the second stage of learning in two settings: B(11-20) learned on the subset of VOC as before, and another model trained for the same set of classes but using a subset of COCO. Table 5 shows that distillation indeed works better when background samples have exactly the same distribution in both stages of training. However, it remains very effective even when the dataset in the second stage differs from the one used in the first.

4.5. Sequential addition of multiple classes

To evaluate incremental learning of classes added sequentially, we update the frozen copy of the network with the one learned with the new class, and then repeat the process with another new class. For example, we take a network learned for 15 classes of VOC, train it for the 16th class on the subset containing only this class, and then use the resulting 16-class network as the frozen copy to learn the 17th class. This continues until the 20th class. We denote this incremental extension as B(16)(17)(18)(19)(20).
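
The sequential procedure is a loop in which the network trained at one step becomes the frozen teacher at the next. The sketch below captures only that control flow. `expand` and `train_with_distillation` are hypothetical stand-ins that record how many classes the teacher knew at each step; they are not real training code.

```python
import copy


def expand(network, new_class):
    """Hypothetical stand-in: copy the network and add one output class."""
    widened = copy.deepcopy(network)
    widened["classes"].append(new_class)
    return widened


def train_with_distillation(student, teacher, new_class):
    """Hypothetical stand-in for training on new_class data with distillation from teacher."""
    student["log"].append((len(teacher["classes"]), new_class))
    return student


def sequential_addition(network, new_classes):
    for new_class in new_classes:
        teacher = copy.deepcopy(network)      # frozen copy of the current network
        student = expand(network, new_class)  # network B for this step
        network = train_with_distillation(student, teacher, new_class)
    return network


A_1_15 = {"classes": [f"old{i}" for i in range(1, 16)], "log": []}
B = sequential_addition(A_1_15, ["pottedplant", "sheep", "sofa", "train", "tvmonitor"])
print(B["log"])
# [(15, 'pottedplant'), (16, 'sheep'), (17, 'sofa'), (18, 'train'), (19, 'tvmonitor')]
```

Because each teacher is the previous student, any forgetting at one step is baked into the targets of all later steps. This is one plausible reason why sequential addition scores lower than adding the 5 classes at once.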

Results of adding classes sequentially are shown in Tables 8 and 9. After adding the 5 classes, we obtain 62.4% mAP (row 3 in Table 8), which is lower than the 65.9% obtained by adding all 5 classes at once (row 2). Table 9 shows intermediate evaluations after adding each class. We observe that the performance on the original classes remains stable at each step in most cases; for a few classes, however, it drops and is not recovered in the following steps. We empirically evaluate the importance of using biased non-background proposals (cf. §3.3). Here we add the 5 classes one by one, but use unbiased distillation (“B(16)(17)(18)(19)(20) w unbiased distill.” in Tables 3 and 8), i.e., randomly sampled proposals are used for distillation. This results in much worse overall performance (46% vs 62.4%), and some classes (“person”, “chair”) suffer a significant performance drop of 10-20%. We also performed the sequential addition experiment with 10 classes and present the results in Table 10. Although the drop in mAP is larger than in the previous experiment with 5 classes, it is far from catastrophic forgetting.
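
The biased sampling referred to above can be sketched as follows. Section 3.3 is not part of this excerpt, so the details here are assumptions. The frozen network scores every proposal, and the 128 proposals with the lowest background probability form the candidate pool. 64 of them are then drawn at random for the distillation loss. The unbiased variant draws from all proposals instead. Function and parameter names are hypothetical.

```python
import numpy as np


def sample_distillation_rois(bg_scores, biased=True, n_candidates=128, n_sample=64, seed=0):
    """Pick proposal indices for the distillation loss.

    bg_scores: background probability assigned by the frozen network to each proposal.
    biased=True draws from the n_candidates least background-like proposals;
    biased=False draws uniformly from all proposals (the "unbiased" ablation).
    """
    rng = np.random.default_rng(seed)
    if biased:
        pool = np.argsort(bg_scores)[:n_candidates]
    else:
        pool = np.arange(len(bg_scores))
    return rng.choice(pool, size=min(n_sample, len(pool)), replace=False)


scores = np.random.default_rng(1).random(2000)  # stand-in for network A's outputs
picked = sample_distillation_rois(scores)
print(len(picked), scores[picked].max() <= np.sort(scores)[127])  # 64 True
```

Most random proposals are background. Restricting the pool to likely objects therefore concentrates the distillation signal on the old-class responses that the loss is meant to preserve.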

4.6. Other alternatives

Learning multiple networks. Another solution for learning multiple classes is to train a new network for each class and then combine their detections. This is an expensive strategy at test time, as each network has to be run independently, including the feature extraction. It may seem reasonable because object detection is evaluated independently for each class; however, learning is usually not independent. Although we can learn a decent detection network for 10 classes, it is much more difficult to learn single classes independently. To demonstrate this, we trained a network for classes 1-15 and then a separate network for each of the classes 16-20. This results in 6 networks in total (row “+A(16)+...+A(20)” in Table 3), which we compare with incremental learning of the 5 classes using a single network (“+B(16)(17)...(20) w distill.”). The results confirm that new classes are difficult to learn in isolation.

Varying distillation loss. As noted in [19], knowledge distillation can also be expressed as a cross-entropy loss. We compared this with the L2-based loss on the one-class extension experiment (“B(20) w cross-entropy distill.” in Tables 1 and 6). Cross-entropy distillation is about as good as L2 distillation at keeping the old classes intact (67.3% vs 67.8%), but performs worse than L2 on the new class “tvmonitor” (52% vs 58.3%). We also observed that cross-entropy is more sensitive to the training schedule. According to [19], both formulations should be equivalent in the limit of a high smoothing factor for logits (cf. §3.2), but our choice of not smoothing leads to this different behavior.
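
The equivalence noted in [19] can be checked numerically. For temperature T (the smoothing factor), the gradient of the cross-entropy distillation loss with respect to the student logits z is (softmax(z/T) - softmax(v/T)) / T, where v are the teacher logits. When both sets of logits are zero-mean and T is large, T² times this gradient approaches (z - v) / N for N classes. That is the gradient of an L2 loss up to a constant. The logit values below are arbitrary. At T = 1 (no smoothing) this approximation need not hold.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()


def ce_distill_grad(z, v, T):
    """Gradient w.r.t. student logits z of -sum(p * log q),
    with p = softmax(v / T) from the teacher and q = softmax(z / T)."""
    return (softmax(z / T) - softmax(v / T)) / T


def l2_distill_grad(z, v):
    """Gradient w.r.t. z of 0.5 * ||z - v||^2."""
    return z - v


z = np.array([1.0, -1.0, 0.5, -0.5])  # student logits, zero-mean
v = np.array([0.2, 0.3, -0.1, -0.4])  # teacher logits, zero-mean
T, N = 1000.0, len(z)
print(np.allclose(T**2 * ce_distill_grad(z, v, T), l2_distill_grad(z, v) / N, atol=1e-3))  # True
```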

Bounding box regression distillation. Adding 10 classes (Table 2) without distilling the bounding box regression values performs consistently worse than using the full distillation loss. Overall, B(11-20) without distilling bounding box regression gets 60.9% mAP vs 63.1% with the full distillation. However, on a few new classes the performance can be higher than with the full distillation (Table 7). The same holds for B(20) without bounding box distillation (Table 6), which has better performance on “tvmonitor” (62.7% vs 58.3%). This is not the case when other categories are chosen as the new class. Indeed, bounding box distillation improves the “sheep” class by 2%.

5. Conclusion

In this paper, we have presented an approach for incremental learning of object detectors for new classes, without access to the training data corresponding to the old classes. We address the problem of catastrophic forgetting in this context with a loss function that optimizes the performance on the new classes while preserving the performance on the old classes. Our extensive experimental analysis demonstrates that our approach performs well, even in the extreme case of adding new classes one by one. Future work includes adapting our method to learned proposals, e.g., from the RPN of Faster R-CNN [32], by reformulating the RPN as a single-class detector that works on sliding-window proposals. This requires adding another term for RPN-based knowledge distillation to the loss function.
