Preparation

- Create a Java / Maven project

- Import the required JAR dependencies

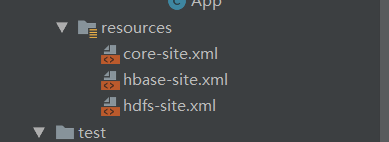

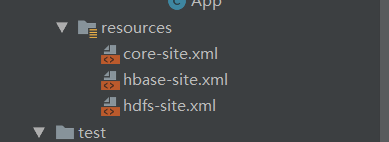

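- Import the required configuration files

Think about it from the MapReduce framework's point of view: a data-analysis job either reads data or writes data, so HBase can play one of three roles.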

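- Data source: TableMapper

  Reads data from HBase. The mapper's input key/value types are ImmutableBytesWritable and Result:

  - ImmutableBytesWritable: the row key
  - Result: one record (one row of the table)

- Data sink: TableReducer

  Writes the analyzed or ETL-processed data into an HBase table:

  - ImmutableBytesWritable: the key
  - Put: the value

- Both data source and data sink

  Reads data from an HBase table, runs the analysis or ETL processing, and writes the result back to an HBase table.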

Importing the JARs

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.7</maven.compiler.source>
        <maven.compiler.target>1.7</maven.compiler.target>
        <hadoop.version>2.7.3</hadoop.version>
        <hive.version>1.2.1</hive.version>
        <hbase.version>1.2.0-cdh5.7.6</hbase.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-service</artifactId>
            <version>${hive.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-exec</artifactId>
            <version>${hive.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-jdbc</artifactId>
            <version>${hive.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-server</artifactId>
            <version>${hbase.version}</version>
        </dependency>
    </dependencies>

Importing the configuration files
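`HBaseConfiguration.create()` loads `hbase-site.xml` from the classpath, so in a Maven project the cluster's configuration files are typically copied into `src/main/resources`. The original does not list which files or properties are needed, so the following is only a rough sketch of what a minimal client-side `hbase-site.xml` might contain. The host names are placeholders, and the right values depend on your cluster.

```xml
<?xml version="1.0"?>
<configuration>
  <!-- Placeholder hosts: replace with the ZooKeeper quorum of your own cluster. -->
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>zk1.example.com,zk2.example.com,zk3.example.com</value>
  </property>
  <!-- 2181 is the usual ZooKeeper client port; adjust if your cluster differs. -->
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
</configuration>
```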

Code implementation

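    import java.io.IOException;
    import java.util.HashMap;
    import java.util.Map;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableMapper;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    // Reads every row of the orders table and writes it to the history table under a new row key.
    public class F_SaleOrdersMapReducer extends Configured implements Tool {

        private final static String ORDERS_TABLE_NAME = "ns1:orders";
        private final static String HISTORY_ORDERS_TABLE_NAME = "orders:history_orders88";

        static class ReadOrderMapper extends TableMapper<ImmutableBytesWritable, Put> {

            private final static String ORDER_COLUMN_NAME_USER_ID = "user_id";
            private final static String ORDER_COLUMN_NAME_ORDER_ID = "order_id";
            private final static String ORDER_COLUMN_NAME_DATE = "date";
            private final static String HISTORY_ROW_KEY_SEPARATOR = "_";
            private final static byte[] HISTORY_COLUMN_FAMILY = Bytes.toBytes("order");

            // Reused for every record to avoid allocating a new key object per row.
            private ImmutableBytesWritable mapOutput = new ImmutableBytesWritable();

            @Override
            protected void map(ImmutableBytesWritable key, Result value, Context context)
                    throws IOException, InterruptedException {
                Put put = resultToPut(key, value);
                mapOutput.set(put.getRow());
                context.write(mapOutput, put);
            }

            // Converts one row of the orders table into a Put for the history table.
            private Put resultToPut(ImmutableBytesWritable key, Result result) {
                // The row key of the source table is the order ID.
                String orderId = Bytes.toString(key.get());

                // Collect qualifier -> value for every cell in the row.
                HashMap<String, String> orderMap = new HashMap<>();
                for (Cell cell : result.rawCells()) {
                    String field = Bytes.toString(CellUtil.cloneQualifier(cell));
                    String value = Bytes.toString(CellUtil.cloneValue(cell));
                    orderMap.put(field, value);
                }

                // New row key: reversed user_id + "_" + date + "_" + order_id.
                // reverse() runs while the builder holds only the user ID, so only that part is reversed.
                StringBuilder sb = new StringBuilder();
                sb.append(orderMap.get(ORDER_COLUMN_NAME_USER_ID)).reverse();
                sb.append(HISTORY_ROW_KEY_SEPARATOR);
                sb.append(orderMap.get(ORDER_COLUMN_NAME_DATE));
                sb.append(HISTORY_ROW_KEY_SEPARATOR);
                sb.append(orderId);

                Put put = new Put(Bytes.toBytes(sb.toString()));

                // Copy all original columns into the history column family.
                for (Map.Entry<String, String> entry : orderMap.entrySet()) {
                    put.addColumn(
                            HISTORY_COLUMN_FAMILY,
                            Bytes.toBytes(entry.getKey()),
                            Bytes.toBytes(entry.getValue()));
                }

                // Store the order ID as a regular column as well.
                put.addColumn(
                        HISTORY_COLUMN_FAMILY,
                        Bytes.toBytes(ORDER_COLUMN_NAME_ORDER_ID),
                        Bytes.toBytes(orderId));
                return put;
            }
        }

        @Override
        public int run(String[] args) throws Exception {
            Configuration conf = this.getConf();
            Job job = Job.getInstance(conf, F_SaleOrdersMapReducer.class.getName());
            job.setJarByClass(F_SaleOrdersMapReducer.class);

            Scan scan = new Scan();
            scan.setCaching(500);        // number of rows fetched per scanner RPC
            scan.setCacheBlocks(false);  // do not fill the block cache during a full-table MapReduce scan

            // Input: read the orders table through ReadOrderMapper.
            TableMapReduceUtil.initTableMapperJob(
                    ORDERS_TABLE_NAME,
                    scan,
                    ReadOrderMapper.class,
                    ImmutableBytesWritable.class,
                    Put.class,
                    job);

            // Output: write to the history table; null means no reducer class is set.
            TableMapReduceUtil.initTableReducerJob(
                    HISTORY_ORDERS_TABLE_NAME,
                    null,
                    job);

            // Map-only job: the Puts emitted by the mapper go straight to the output table.
            job.setNumReduceTasks(0);

            boolean isSuccess = job.waitForCompletion(true);
            return isSuccess ? 0 : 1;
        }

        public static void main(String[] args) {
            Configuration conf = HBaseConfiguration.create();
            try {
                int status = ToolRunner.run(conf, new F_SaleOrdersMapReducer(), args);
                System.exit(status);
            } catch (Exception e) {
                e.printStackTrace();
                System.exit(1);
            }
        }
    }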
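The row-key layout built in `resultToPut` can be tried out without a cluster. Below is a minimal sketch in plain Java, assuming `user_id` and `date` are stored as strings. The class name `HistoryRowKeySketch`, the method `buildRowKey`, and the sample values are hypothetical and not part of the original job. The source does not say why the user ID is reversed; a common reason for this pattern is to keep sequential IDs from piling up in one region.

```java
/** Plain-Java sketch of the history row key: reversed user_id + "_" + date + "_" + order_id. */
class HistoryRowKeySketch {

    private static final String SEPARATOR = "_";

    /** Builds the row key in the same order as the mapper, without any HBase classes. */
    static String buildRowKey(String userId, String date, String orderId) {
        // Reverse only the user ID, then append the remaining parts unchanged.
        return new StringBuilder(userId).reverse()
                .append(SEPARATOR).append(date)
                .append(SEPARATOR).append(orderId)
                .toString();
    }

    public static void main(String[] args) {
        // Hypothetical sample values, for illustration only.
        System.out.println(buildRowKey("10086", "2019-05-20", "order_0001"));
        // prints 68001_2019-05-20_order_0001
    }
}
```

Because the date follows the reversed user ID, all history rows of one user sort together and are ordered by date within that user, provided the date format sorts lexicographically (for example `yyyy-MM-dd`).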
