We start by trying to forecast sales separately for each store.

Import libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

plt.style.use("fivethirtyeight")

plt.rcParams["font.sans-serif"] = ["Microsoft YaHei"]

plt.rcParams["axes.unicode_minus"] = False

import warnings

warnings.filterwarnings("ignore")

from sklearn.preprocessing import OneHotEncoder

from fbprophet import Prophet

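Load the data

We first explore with the uncompressed data. Based on the earlier exploration, the row ID (行ID) and date (日期) columns can both be dropped; the date is kept as the operating date (运营日期).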

path_train = "../preocess_data/train_data_o.csv"

path_test = "../data/test_data.csv"

data = pd.read_csv(path_train)

data_test = pd.read_csv(path_test)

data["运营日期"] = pd.to_datetime(data["运营日期"] )

data_test["运营日期"] = pd.to_datetime(data_test["日期"])

data.drop(["行ID","日期"],axis=1,inplace=True)

data_test.drop(["行ID","日期"],axis=1,inplace=True)

data

| | 商店ID | 商店类型 | 位置 | 地区 | 节假日 | 折扣 | 销量 | 运营日期 |

|---|---|---|---|---|---|---|---|---|

| 0 | 1 | S1 | L3 | R1 | 1 | Yes | 7011.84 | 2018-01-01 |

| 1 | 253 | S4 | L2 | R1 | 1 | Yes | 51789.12 | 2018-01-01 |

| 2 | 252 | S3 | L2 | R1 | 1 | Yes | 36868.20 | 2018-01-01 |

| 3 | 251 | S2 | L3 | R1 | 1 | Yes | 19715.16 | 2018-01-01 |

| 4 | 250 | S2 | L3 | R4 | 1 | Yes | 45614.52 | 2018-01-01 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 188335 | 149 | S2 | L3 | R2 | 1 | Yes | 37272.00 | 2019-05-31 |

| 188336 | 153 | S4 | L2 | R1 | 1 | No | 54572.64 | 2019-05-31 |

| 188337 | 154 | S1 | L3 | R2 | 1 | No | 31624.56 | 2019-05-31 |

| 188338 | 155 | S3 | L1 | R2 | 1 | Yes | 49162.41 | 2019-05-31 |

| 188339 | 152 | S2 | L1 | R1 | 1 | No | 37977.00 | 2019-05-31 |

188340 rows × 8 columns

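Feature encoding

Four columns are candidates for encoding: 商店类型 (store type), 位置 (location), 地区 (region), and 折扣 (discount). Based on the earlier conclusions, only 折扣 needs to be encoded, using one-hot encoding.

Encoding 折扣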

enc = OneHotEncoder(drop="if_binary")

enc.fit(data["折扣"].values.reshape(-1,1))

enc.transform(data["折扣"].values.reshape(-1,1)).toarray()

enc.transform(data_test["折扣"].values.reshape(-1,1)).toarray()

array([[0.],

[0.],

[0.],

...,

[1.],

[0.],

[0.]])

data["折扣"] = enc.transform(data["折扣"].values.reshape(-1,1)).toarray()

data_test["折扣"] = enc.transform(data_test["折扣"].values.reshape(-1,1)).toarray()
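Because 折扣 only takes the two values `Yes` and `No`, `OneHotEncoder(drop="if_binary")` reduces it to a single 0/1 column (the first category in sorted order, `No`, is dropped, so `Yes` becomes 1.0). A minimal sketch of an equivalent mapping in plain pandas, assuming the column holds exactly those two strings; `encode_discount` is a hypothetical helper name, not part of the notebook:

```python
import pandas as pd

def encode_discount(series: pd.Series) -> pd.Series:
    """Map 'Yes'/'No' to 1.0/0.0 and fail loudly on any other value."""
    mapped = series.map({"No": 0.0, "Yes": 1.0})
    if mapped.isna().any():
        raise ValueError("unexpected value in the discount column")
    return mapped

# Tiny illustrative frame (made-up rows, not the real data).
demo = pd.DataFrame({"折扣": ["Yes", "No", "No", "Yes"]})
demo["折扣"] = encode_discount(demo["折扣"])
print(demo["折扣"].tolist())
```

The explicit mapping makes the direction of the encoding visible and raises an error if the test set ever contains a value the training set did not.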

data_train_1 = data[data["商店ID"]==1]

data_train_1

| | 商店ID | 商店类型 | 位置 | 地区 | 节假日 | 折扣 | 销量 | 运营日期 | year | month | day | quarter | weekofyear | dayofweek | weekend |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | S1 | L3 | R1 | 1 | 1.0 | 7011.84 | 2018-01-01 | 2018 | 1 | 1 | 1 | 1 | 1 | 0 |

| 607 | 1 | S1 | L3 | R1 | 0 | 1.0 | 42369.00 | 2018-01-02 | 2018 | 1 | 2 | 1 | 1 | 2 | 0 |

| 1046 | 1 | S1 | L3 | R1 | 0 | 1.0 | 50037.00 | 2018-01-03 | 2018 | 1 | 3 | 1 | 1 | 3 | 0 |

| 1207 | 1 | S1 | L3 | R1 | 0 | 1.0 | 44397.00 | 2018-01-04 | 2018 | 1 | 4 | 1 | 1 | 4 | 0 |

| 1752 | 1 | S1 | L3 | R1 | 0 | 1.0 | 47604.00 | 2018-01-05 | 2018 | 1 | 5 | 1 | 1 | 5 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 186569 | 1 | S1 | L3 | R1 | 0 | 1.0 | 33075.00 | 2019-05-27 | 2019 | 5 | 27 | 2 | 22 | 1 | 0 |

| 187165 | 1 | S1 | L3 | R1 | 0 | 1.0 | 37317.00 | 2019-05-28 | 2019 | 5 | 28 | 2 | 22 | 2 | 0 |

| 187391 | 1 | S1 | L3 | R1 | 0 | 1.0 | 44652.00 | 2019-05-29 | 2019 | 5 | 29 | 2 | 22 | 3 | 0 |

| 187962 | 1 | S1 | L3 | R1 | 0 | 1.0 | 42387.00 | 2019-05-30 | 2019 | 5 | 30 | 2 | 22 | 4 | 0 |

| 188113 | 1 | S1 | L3 | R1 | 1 | 1.0 | 39843.78 | 2019-05-31 | 2019 | 5 | 31 | 2 | 22 | 5 | 0 |

516 rows × 15 columns

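Encoding month and quarter (this cell relies on the `month`/`quarter` columns and on `data_train_1` and `data_test_1`, which are defined in the feature-derivation and per-store cells further down, so it has to be run after them)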

enc_ = OneHotEncoder(drop="if_binary")

enc_.fit(data_train_1[["month","quarter"]])

OneHotEncoder(drop='if_binary')

data_onehot_train = pd.DataFrame(enc_.transform(data_train_1[["month","quarter"]]).toarray())

data_onehot_list_train = [f"month_{i}" for i in range(1,13)]

data_onehot_list_train.extend([f"quarter_{i}" for i in range(1,5)])

data_onehot_train.columns = data_onehot_list_train

data_onehot_test= pd.DataFrame(enc_.transform(data_test_1[["month","quarter"]]).toarray())

data_onehot_list_test = [f"month_{i}" for i in range(1,13)]

data_onehot_list_test.extend([f"quarter_{i}" for i in range(1,5)])

data_onehot_test.columns = data_onehot_list_test
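The hard-coded column lists above only line up with the encoder output if all 12 months and all 4 quarters occur in the data the encoder was fitted on. A sketch of an alternative that fixes the category set up front, so that a frame covering only June and July still yields the same 16 columns. It uses plain pandas; `month_quarter_dummies` is a hypothetical helper, not something from the notebook:

```python
import pandas as pd

MONTHS = list(range(1, 13))
QUARTERS = list(range(1, 5))

def month_quarter_dummies(df: pd.DataFrame) -> pd.DataFrame:
    """One-hot encode month (1-12) and quarter (1-4) with a fixed column set."""
    month = pd.Series(pd.Categorical(df["month"], categories=MONTHS), index=df.index)
    quarter = pd.Series(pd.Categorical(df["quarter"], categories=QUARTERS), index=df.index)
    return pd.concat(
        [
            pd.get_dummies(month, prefix="month", dtype=float),
            pd.get_dummies(quarter, prefix="quarter", dtype=float),
        ],
        axis=1,
    )

# Two rows standing in for a test period that only covers June and July.
june_july = pd.DataFrame({"month": [6, 7], "quarter": [2, 3]})
encoded = month_quarter_dummies(june_july)
print(encoded.shape)
```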

Feature derivation

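def time_derivation(t, col="运营日期"):

    # Derive calendar features from the datetime column `col` (modifies and returns t).

    t["year"] = t[col].dt.year

    t["month"] = t[col].dt.month

    t["day"] = t[col].dt.day

    t["quarter"] = t[col].dt.quarter

    # .dt.weekofyear was deprecated in pandas 1.1 and removed in 2.0; isocalendar().week returns the same ISO week number.

    t["weekofyear"] = t[col].dt.isocalendar().week.astype(int)

    # 1 = Monday ... 7 = Sunday

    t["dayofweek"] = t[col].dt.dayofweek + 1

    t["weekend"] = (t["dayofweek"] > 5).astype(int)

    return t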

data_train = time_derivation(data)

data_test_ = time_derivation(data_test)

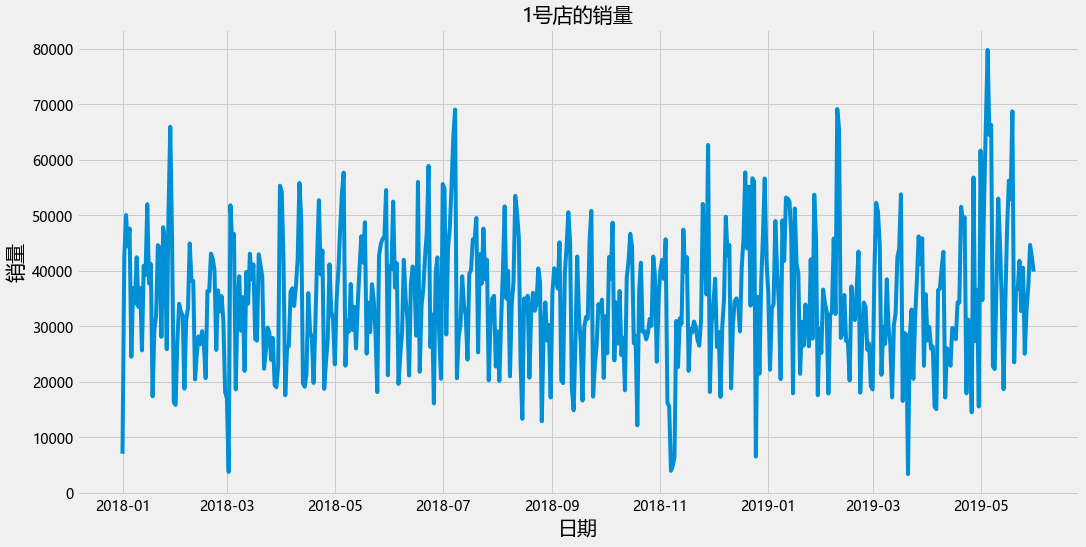

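Per-store exploration

Start with the first store

For the first store, the test set is the 61 days (two months) immediately following the training set, and the other stores follow the same pattern. Sales (销量) is the target y and the remaining columns act as external variables, so Prophet is the first model we try.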
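The claim that the test period directly continues the training period can be checked from the dates alone. A small sketch, using the date ranges that appear in this notebook (training 2018-01-01 to 2019-05-31, forecast period ending 2019-07-31); on the real data the two date columns would replace the synthetic ranges built here:

```python
import pandas as pd

# Synthetic stand-ins for the 运营日期 columns of one store.
train_dates = pd.date_range("2018-01-01", "2019-05-31", freq="D")
test_dates = pd.date_range("2019-06-01", "2019-07-31", freq="D")

one_day = pd.Timedelta(days=1)

# The test set must start the day after training ends and have no gaps.
starts_next_day = (test_dates.min() - train_dates.max()) == one_day
no_gaps = test_dates.to_series().diff().dropna().eq(one_day).all()
is_contiguous = bool(starts_next_day and no_gaps)

horizon = len(test_dates)
print(len(train_dates), horizon, is_contiguous)
```

The resulting `horizon` is the value to pass as `periods` when building the future frame.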

# Training set

data_train_1 = data_train[data_train["商店ID"] ==1]

data_train_1

| | 商店ID | 商店类型 | 位置 | 地区 | 节假日 | 折扣 | 销量 | 运营日期 | year | month | day | quarter | weekofyear | dayofweek | weekend |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | S1 | L3 | R1 | 1 | 1.0 | 7011.84 | 2018-01-01 | 2018 | 1 | 1 | 1 | 1 | 1 | 0 |

| 607 | 1 | S1 | L3 | R1 | 0 | 1.0 | 42369.00 | 2018-01-02 | 2018 | 1 | 2 | 1 | 1 | 2 | 0 |

| 1046 | 1 | S1 | L3 | R1 | 0 | 1.0 | 50037.00 | 2018-01-03 | 2018 | 1 | 3 | 1 | 1 | 3 | 0 |

| 1207 | 1 | S1 | L3 | R1 | 0 | 1.0 | 44397.00 | 2018-01-04 | 2018 | 1 | 4 | 1 | 1 | 4 | 0 |

| 1752 | 1 | S1 | L3 | R1 | 0 | 1.0 | 47604.00 | 2018-01-05 | 2018 | 1 | 5 | 1 | 1 | 5 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 186569 | 1 | S1 | L3 | R1 | 0 | 1.0 | 33075.00 | 2019-05-27 | 2019 | 5 | 27 | 2 | 22 | 1 | 0 |

| 187165 | 1 | S1 | L3 | R1 | 0 | 1.0 | 37317.00 | 2019-05-28 | 2019 | 5 | 28 | 2 | 22 | 2 | 0 |

| 187391 | 1 | S1 | L3 | R1 | 0 | 1.0 | 44652.00 | 2019-05-29 | 2019 | 5 | 29 | 2 | 22 | 3 | 0 |

| 187962 | 1 | S1 | L3 | R1 | 0 | 1.0 | 42387.00 | 2019-05-30 | 2019 | 5 | 30 | 2 | 22 | 4 | 0 |

| 188113 | 1 | S1 | L3 | R1 | 1 | 1.0 | 39843.78 | 2019-05-31 | 2019 | 5 | 31 | 2 | 22 | 5 | 0 |

516 rows × 15 columns

# Test set

data_test_1 = data_test_[data_test_["商店ID"] ==1]

plt.figure(figsize=(16,8))

plt.plot(data_train_1["运营日期"],data_train_1["销量"])

plt.xlabel("日期",fontsize= 20)

plt.ylabel("销量",fontsize= 20)

plt.title("1号店的销量",fontsize=20)

Text(0.5, 1.0, '1号店的销量')

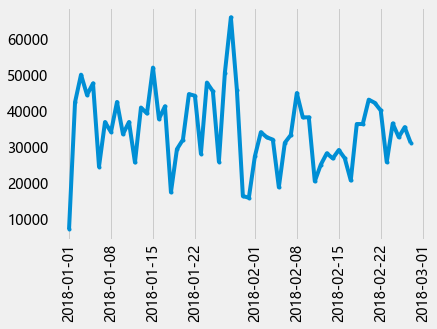

plot_data = data_train_1[ (data_train_1['运营日期']>='2018-01-01') &

(data_train_1['运营日期'] <'2018-02-28' )]

plt.plot( plot_data["运营日期"], plot_data["销量"], '.-' )

plt.xticks(rotation = 90)

plt.grid( axis='y' )

data_train_1[["节假日","折扣"]]

| | 节假日 | 折扣 |

|---|---|---|

| 0 | 1 | 1.0 |

| 607 | 0 | 1.0 |

| 1046 | 0 | 1.0 |

| 1207 | 0 | 1.0 |

| 1752 | 0 | 1.0 |

| ... | ... | ... |

| 186569 | 0 | 1.0 |

| 187165 | 0 | 1.0 |

| 187391 | 0 | 1.0 |

| 187962 | 0 | 1.0 |

| 188113 | 1 | 1.0 |

516 rows × 2 columns

data_train_1["折扣"].value_counts()

0.0 280

1.0 236

Name: 折扣, dtype: int64

data_train_1[data_train_1["节假日"]==1]["折扣"].value_counts()

1.0 34

0.0 34

Name: 折扣, dtype: int64

Trying Prophet

Effect of the holiday variables

# Add the holiday indicator (节假日).

holidays_= pd.DataFrame({

'holiday': 'holiday_',

'ds':data_train_1[data_train_1["节假日"]==1]["运营日期"].values,

'lower_window': 0,

'upper_window': 1,

})

# Add discount days

discounts = pd.DataFrame({

'holiday': 'discount',

'ds':data_train_1[data_train_1["折扣"]==1]["运营日期"].values,

'lower_window': -1,

'upper_window': 0,

})

holidays = pd.concat([holidays_,discounts])
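Note that both frames above are built from `data_train_1` only. Prophet applies a holiday effect only on the dates listed in the `holidays` frame, so discount days that fall inside the 61-day forecast period would also have to be listed for the effect to carry over into the forecast. A sketch of building such a frame from training and test rows together; `build_event_frame` is a hypothetical helper and the two small frames are made-up stand-ins for `data_train_1` and `data_test_1`:

```python
import pandas as pd

def build_event_frame(name, dates, lower_window=0, upper_window=0):
    """Return a holidays-style frame (holiday, ds, lower_window, upper_window)."""
    return pd.DataFrame({
        "holiday": name,
        "ds": pd.to_datetime(list(dates)),
        "lower_window": lower_window,
        "upper_window": upper_window,
    })

# Made-up rows; 折扣 is already encoded as 0.0 / 1.0.
train = pd.DataFrame({
    "运营日期": pd.to_datetime(["2019-05-30", "2019-05-31"]),
    "折扣": [0.0, 1.0],
})
test = pd.DataFrame({
    "运营日期": pd.to_datetime(["2019-06-01", "2019-06-02"]),
    "折扣": [1.0, 0.0],
})

# Collect discount days from the past and from the forecast period.
both = pd.concat([train, test], ignore_index=True)
discount_days = both.loc[both["折扣"] == 1, "运营日期"]
discounts_all = build_event_frame("discount", discount_days, lower_window=-1, upper_window=0)
print(discounts_all)
```

This only works because the discount schedule of the test period is known in advance; the same would not hold for a variable that is unknown at forecast time.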

m = Prophet(holidays = holidays,

changepoint_prior_scale=0.005,

seasonality_prior_scale=10,

yearly_seasonality=True,

weekly_seasonality=True,

# changepoint_prior_scale = 35,

# seasonality_prior_scale = 0.5,

# changepoint_range = 0.3 ,

seasonality_mode = "additive",

holidays_prior_scale = 10,

)

m.add_country_holidays(country_name='CHN')

<fbprophet.forecaster.Prophet at 0x18016b483c8>

Add other external regressors

# m.add_seasonality(name='monthly', period=30.5, fourier_order=5,condition_name="month")

# m.add_seasonality(name='quarterly', period=91.25, fourier_order=4,condition_name='quarter')

# m.add_seasonality(name='weekly', period=7, fourier_order=3, condition_name='dayofweek')

m.add_regressor(name="weekend",mode='additive')

<fbprophet.forecaster.Prophet at 0x18016b483c8>

data_train_prophet = data_train_1[["运营日期","销量","dayofweek","weekend","折扣"]].copy()

data_train_prophet.columns= ["ds","y","dayofweek","weekend","折扣"]

m.fit(data_train_prophet)

INFO:fbprophet:Disabling daily seasonality. Run prophet with daily_seasonality=True to override this.

<fbprophet.forecaster.Prophet at 0x18016b483c8>

Forecast the next 61 days

horizon = 61

future = m.make_future_dataframe(periods= horizon)

future

| | ds |

|---|---|

| 0 | 2018-01-01 |

| 1 | 2018-01-02 |

| 2 | 2018-01-03 |

| 3 | 2018-01-04 |

| 4 | 2018-01-05 |

| ... | ... |

| 572 | 2019-07-27 |

| 573 | 2019-07-28 |

| 574 | 2019-07-29 |

| 575 | 2019-07-30 |

| 576 | 2019-07-31 |

577 rows × 1 columns

a = list(data_train_1["折扣"].values)

a.extend(data_test_1["折扣"].values)

len(a)

577

future["year"] = future["ds"].dt.year

future["month"] = future["ds"].dt.month

future["quarter"] = future["ds"].dt.quarter

future["dayofweek"] = future["ds"].dt.dayofweek+1

future["weekend"] = (future["dayofweek"]>5).astype(int)

future["折扣"] = np.array(a)
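The 折扣 column above is filled positionally from a concatenated list, which is only correct while both frames are sorted by date and have no missing days. A sketch of a more defensive alternative that joins on the date instead. `attach_by_date` is a hypothetical helper, and the small frames are made-up stand-ins for `data_train_1`, `data_test_1` and `future`:

```python
import pandas as pd

def attach_by_date(future, frames, column, date_col="运营日期"):
    """Left-join `column` from the given frames onto `future` using the date."""
    known = pd.concat([f[[date_col, column]] for f in frames], ignore_index=True)
    known = known.rename(columns={date_col: "ds"})
    # validate raises if a date occurs more than once on either side.
    merged = future.merge(known, on="ds", how="left", validate="one_to_one")
    if merged[column].isna().any():
        raise ValueError(f"missing {column} for some forecast dates")
    return merged

train = pd.DataFrame({
    "运营日期": pd.to_datetime(["2019-05-30", "2019-05-31"]),
    "折扣": [0.0, 1.0],
})
test = pd.DataFrame({
    "运营日期": pd.to_datetime(["2019-06-01", "2019-06-02"]),
    "折扣": [1.0, 0.0],
})
future_demo = pd.DataFrame({"ds": pd.date_range("2019-05-30", periods=4, freq="D")})
future_demo = attach_by_date(future_demo, [train, test], "折扣")
print(future_demo)
```

Keep in mind that the model above only registers `weekend` via `add_regressor`, so an extra column such as 折扣 in `future` is carried along but not used by `predict` unless it is registered as a regressor before fitting.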

forecast = m.predict(future)

forecast[['ds', 'yhat', 'yhat_lower', 'yhat_upper',

'trend','trend_lower','trend_upper',

'weekly']].head(5)

| | ds | yhat | yhat_lower | yhat_upper | trend | trend_lower | trend_upper | weekly |

|---|---|---|---|---|---|---|---|---|

| 0 | 2018-01-01 | 30775.770424 | 20889.757685 | 40936.492598 | 26748.747097 | 26748.747097 | 26748.747097 | 1189.775785 |

| 1 | 2018-01-02 | 39995.262978 | 30214.814101 | 49360.974394 | 26756.473204 | 26756.473204 | 26756.473204 | -1664.402306 |

| 2 | 2018-01-03 | 44372.074808 | 34877.677359 | 54502.685711 | 26764.199312 | 26764.199312 | 26764.199312 | 1178.083305 |

| 3 | 2018-01-04 | 42600.412597 | 33153.613066 | 52712.333605 | 26771.925420 | 26771.925420 | 26771.925420 | -617.245171 |

| 4 | 2018-01-05 | 49308.412161 | 39745.454258 | 58877.905254 | 26779.651528 | 26779.651528 | 26779.651528 | -1129.635885 |

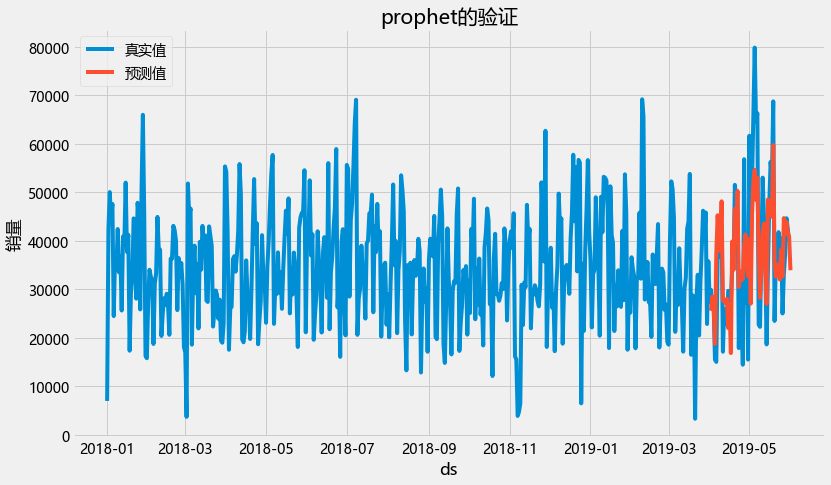

plt.figure(figsize=(12,7))

plt.plot(data_train_1[["运营日期"]],data_train_1[["销量"]],label = "真实值")

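# Rows 455..515 of forecast cover the same 61 days as data_train_1.tail(61); row 516 is already the first future day (2019-06-01).

plt.plot(forecast.loc[(forecast.index<516)&(forecast.index>=455),["ds"]],

forecast.loc[(forecast.index<516)&(forecast.index>=455),["yhat"]],label = "预测值")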

plt.xlabel("ds")

plt.ylabel("销量")

plt.title("prophet的验证")

plt.legend()

<matplotlib.legend.Legend at 0x1802269bb48>

np.array(forecast.loc[(forecast.index<517)&(forecast.index>455),["yhat"]])

array([[25617.87079196],

[28494.61718356],

[26754.69257591],

[18749.93589485],

[37333.08107403],

[45267.56610754],

[40559.29950886],

[37876.496955 ],

[48141.90279336],

[27787.36958936],

[27512.35859756],

[27134.7722622 ],

[28060.79911766],

[22017.4621406 ],

[26628.40357149],

[16879.79807243],

[39869.34910728],

[34012.96673227],

[46561.0685432 ],

[43356.350005 ],

[50279.07500986],

[30475.47854009],

[33662.95616483],

[32207.92923537],

[32029.12790642],

[38878.69776961],

[41322.45509755],

[35289.42433161],

[32722.54429931],

[40463.1729284 ],

[27064.96799 ],

[45561.0861559 ],

[52296.89175555],

[54613.93978621],

[48441.39667443],

[52950.98586258],

[37124.29614574],

[28245.81736758],

[33458.53713267],

[41473.1328489 ],

[43579.87026573],

[37187.3847213 ],

[27011.29818529],

[48500.29246951],

[46540.19772446],

[45827.8909742 ],

[44959.19819402],

[59731.23505757],

[35801.89591473],

[32621.89790062],

[35113.30049962],

[32945.15253098],

[32042.23570321],

[38163.17905462],

[32652.05219235],

[44677.94503143],

[41411.4922227 ],

[43850.23619555],

[41665.70544608],

[40854.0543816 ],

[33941.84453036]])

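def symmetric_mean_absolute_percentage_error(y_true, y_pred):

    # Aggregate form of SMAPE: 2 * sum|y - yhat| / sum(|y| + |yhat|), returned as a fraction, not a percentage.

    y_true, y_pred = np.array(y_true), np.array(y_pred)

    return np.sum(np.abs(y_true - y_pred) * 2) / np.sum(np.abs(y_true) + np.abs(y_pred))

def prophet_smape(y_true, y_pred):

    # Returns (metric name, value, flag); the trailing False presumably marks the metric as lower-is-better.

    smape_val = symmetric_mean_absolute_percentage_error(y_true, y_pred)

    return 'SMAPE', smape_val, False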

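# Compare the last 61 training days with the matching forecast rows 455..515. The value printed below was produced with rows 456..516, which are shifted one day later than the actuals.

prophet_smape(np.array(data_train_1.tail(61)["销量"]).reshape(-1,1),

np.array(forecast.loc[(forecast.index<516)&(forecast.index>=455),["yhat"]]))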

('SMAPE', 0.2754594181133183, False)
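The function above divides the summed absolute errors by the summed magnitudes. That is not the same as the other common SMAPE definition, which averages the per-point ratios, and the two can give different numbers on the same data. A small sketch contrasting both on made-up values; the function names `smape_aggregate` and `smape_pointwise` are illustrative only:

```python
import numpy as np

def smape_aggregate(y_true, y_pred):
    """2 * sum|y - yhat| / sum(|y| + |yhat|): the form used in this notebook."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return 2.0 * np.abs(y_true - y_pred).sum() / (np.abs(y_true) + np.abs(y_pred)).sum()

def smape_pointwise(y_true, y_pred):
    """mean(2 * |y - yhat| / (|y| + |yhat|)): average of per-point ratios."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.mean(2.0 * np.abs(y_true - y_pred) / (np.abs(y_true) + np.abs(y_pred)))

# Made-up values, chosen only to show that the two variants differ.
y_true = [100.0, 200.0]
y_pred = [110.0, 180.0]
agg = smape_aggregate(y_true, y_pred)
pt = smape_pointwise(y_true, y_pred)
print(agg, pt)
```

The aggregate form gives large-sales days more weight; the pointwise form treats every day equally and is undefined when both the actual and predicted value are zero. Whichever is used, it should match the metric the forecasts are ultimately scored with.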

from sklearn.metrics import mean_squared_error,r2_score

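# RMSE over the last 61 training days against forecast rows 455..515. The value printed below was produced with rows 456..516, which are shifted one day later than the actuals.

mean_squared_error(np.array(data_train_1.tail(61)["销量"]).reshape(-1,1),

np.array(forecast.loc[(forecast.index<516)&(forecast.index>=455),["yhat"]]))**0.5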

13748.092397062905
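Selecting forecast rows by integer position is easy to get wrong by one: with 516 training rows (positions 0 to 515), row 516 of `forecast` is already 2019-06-01. A sketch of pairing actuals and predictions by date instead, which sidesteps the position arithmetic. `holdout_pairs` is a hypothetical helper and the numbers are made up:

```python
import numpy as np
import pandas as pd

def holdout_pairs(actual, forecast, n_last, date_col="运营日期", y_col="销量"):
    """Pair the last n_last actual values with the forecast rows of the same dates."""
    tail = actual[[date_col, y_col]].tail(n_last)
    tail = tail.rename(columns={date_col: "ds", y_col: "y"})
    return tail.merge(forecast[["ds", "yhat"]], on="ds", how="inner")

# Five days of made-up history and a forecast that runs two days further.
actual = pd.DataFrame({
    "运营日期": pd.date_range("2019-05-27", periods=5, freq="D"),
    "销量": [10.0, 20.0, 30.0, 40.0, 50.0],
})
forecast_demo = pd.DataFrame({
    "ds": pd.date_range("2019-05-27", periods=7, freq="D"),
    "yhat": [11.0, 19.0, 33.0, 38.0, 50.0, 60.0, 70.0],
})

pairs = holdout_pairs(actual, forecast_demo, n_last=3)
rmse = float(np.sqrt(np.mean((pairs["y"] - pairs["yhat"]) ** 2)))
print(pairs)
print(rmse)
```

Since these 61 days were part of the data the model was fitted on, a score computed this way is an in-sample fit measure; the cross-validation further down gives the out-of-sample view.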

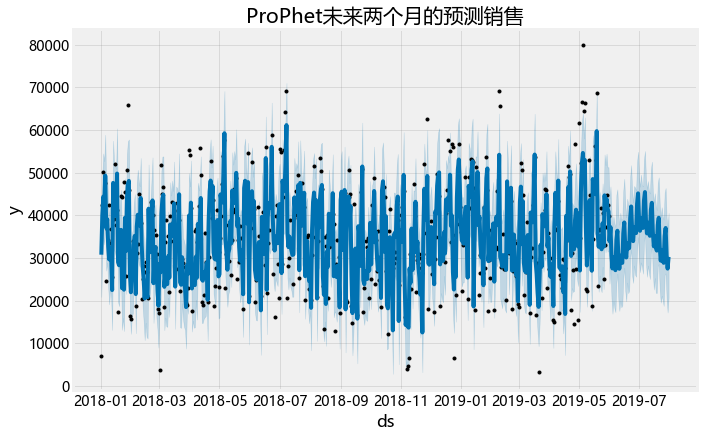

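# Prophet.plot creates its own figure, so the size is passed through figsize instead of a separate plt.figure call (which only produced an empty figure).

fig1 = m.plot(forecast, figsize=(12, 8))

plt.title("ProPhet未来两个月的预测销售")

Text(0.5, 1.0, 'ProPhet未来两个月的预测销售')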

forecast_prophet = forecast[forecast["ds"]>"2019-05-31"]

forecast_prophet.to_csv("../preocess_data/prophet_pre.csv")

forecast_prophet

| | ds | trend | yhat_lower | yhat_upper | trend_lower | trend_upper | Chinese New Year (Spring Festival) | Chinese New Year (Spring Festival)_lower | Chinese New Year (Spring Festival)_upper | Dragon Boat Festival | ... | weekly | weekly_lower | weekly_upper | yearly | yearly_lower | yearly_upper | multiplicative_terms | multiplicative_terms_lower | multiplicative_terms_upper | yhat |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 516 | 2019-06-01 | 30717.593081 | 23978.988018 | 43887.743153 | 30717.593081 | 30717.593081 | 0.0 | 0.0 | 0.0 | 0.0 | ... | -543.034260 | -543.034260 | -543.034260 | -692.226218 | -692.226218 | -692.226218 | 0.0 | 0.0 | 0.0 | 33941.844530 |

| 517 | 2019-06-02 | 30725.274734 | 27820.204589 | 46776.099986 | 30725.274734 | 30725.274734 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 1586.458531 | 1586.458531 | 1586.458531 | -1009.473895 | -1009.473895 | -1009.473895 | 0.0 | 0.0 | 0.0 | 37240.329938 |

| 518 | 2019-06-03 | 30732.956388 | 20579.302294 | 40967.934382 | 30732.956388 | 30732.956388 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 1189.775785 | 1189.775785 | 1189.775785 | -1288.709842 | -1288.709842 | -1288.709842 | 0.0 | 0.0 | 0.0 | 30634.022331 |

| 519 | 2019-06-04 | 30740.638041 | 16933.731881 | 37228.678796 | 30740.638041 | 30740.638041 | 0.0 | 0.0 | 0.0 | 0.0 | ... | -1664.402306 | -1664.402306 | -1664.402306 | -1524.746417 | -1524.746417 | -1524.746417 | 0.0 | 0.0 | 0.0 | 27551.489318 |

| 520 | 2019-06-05 | 30748.319695 | 19840.260773 | 39788.224886 | 30748.319623 | 30748.319720 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 1178.083305 | 1178.083305 | 1178.083305 | -1712.887352 | -1712.887352 | -1712.887352 | 0.0 | 0.0 | 0.0 | 30213.515648 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 572 | 2019-07-27 | 31147.765672 | 26002.865718 | 45344.820879 | 31147.622428 | 31147.914102 | 0.0 | 0.0 | 0.0 | 0.0 | ... | -543.034260 | -543.034260 | -543.034260 | -1416.639602 | -1416.639602 | -1416.639602 | 0.0 | 0.0 | 0.0 | 35126.162378 |

| 573 | 2019-07-28 | 31155.447326 | 26388.789659 | 46396.207525 | 31155.301592 | 31155.599849 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 1586.458531 | 1586.458531 | 1586.458531 | -1650.182907 | -1650.182907 | -1650.182907 | 0.0 | 0.0 | 0.0 | 37029.793518 |

| 574 | 2019-07-29 | 31163.128979 | 20421.281612 | 39356.141199 | 31162.979949 | 31163.286931 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 1189.775785 | 1189.775785 | 1189.775785 | -1842.411772 | -1842.411772 | -1842.411772 | 0.0 | 0.0 | 0.0 | 30510.492992 |

| 575 | 2019-07-30 | 31170.810632 | 17209.813312 | 37025.898551 | 31170.655128 | 31170.973969 | 0.0 | 0.0 | 0.0 | 0.0 | ... | -1664.402306 | -1664.402306 | -1664.402306 | -1994.025550 | -1994.025550 | -1994.025550 | 0.0 | 0.0 | 0.0 | 27512.382776 |

| 576 | 2019-07-31 | 31178.492286 | 20642.313505 | 40558.963843 | 31178.330230 | 31178.661314 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 1178.083305 | 1178.083305 | 1178.083305 | -2106.380041 | -2106.380041 | -2106.380041 | 0.0 | 0.0 | 0.0 | 30250.195551 |

61 rows × 55 columns

from fbprophet.diagnostics import cross_validation

df_cv = cross_validation(m, initial='400 days', period='20 days', horizon = '61 days')

INFO:fbprophet:Making 3 forecasts with cutoffs between 2019-02-19 00:00:00 and 2019-03-31 00:00:00

0%| | 0/3 [00:00<?, ?it/s]

df_cv

| | ds | yhat | yhat_lower | yhat_upper | y | cutoff |

|---|---|---|---|---|---|---|

| 0 | 2019-02-20 | 39169.948020 | 31173.118340 | 48219.377964 | 36735.00 | 2019-02-19 |

| 1 | 2019-02-21 | 45788.522253 | 37014.177142 | 54540.948835 | 43452.00 | 2019-02-19 |

| 2 | 2019-02-22 | 26886.260775 | 17929.663460 | 35342.798292 | 18024.00 | 2019-02-19 |

| 3 | 2019-02-23 | 32745.811135 | 23818.474855 | 41838.147688 | 26976.00 | 2019-02-19 |

| 4 | 2019-02-24 | 35213.159136 | 25766.012478 | 44371.683291 | 34272.00 | 2019-02-19 |

| ... | ... | ... | ... | ... | ... | ... |

| 178 | 2019-05-27 | 44303.517769 | 33653.940290 | 54457.758109 | 33075.00 | 2019-03-31 |

| 179 | 2019-05-28 | 41422.737708 | 31734.476845 | 51089.499696 | 37317.00 | 2019-03-31 |

| 180 | 2019-05-29 | 43684.591502 | 34259.138724 | 52989.369602 | 44652.00 | 2019-03-31 |

| 181 | 2019-05-30 | 41666.494248 | 31393.191189 | 51114.015019 | 42387.00 | 2019-03-31 |

| 182 | 2019-05-31 | 40736.571206 | 30548.912252 | 50292.000845 | 39843.78 | 2019-03-31 |

183 rows × 6 columns
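The log above reports 3 forecasts with cutoffs between 2019-02-19 and 2019-03-31, and `df_cv` has 183 = 3 × 61 rows. The schedule can be reproduced by stepping back from the last date: the latest cutoff is the last date minus `horizon`, and earlier cutoffs are spaced `period` apart for as long as at least `initial` days of history remain. A simplified sketch of that logic in plain pandas; `sketch_cutoffs` is a hypothetical function that mirrors my understanding of the schedule, not the library's code:

```python
import pandas as pd

def sketch_cutoffs(first_date, last_date, initial, period, horizon):
    """Walk back from (last_date - horizon) in steps of `period`."""
    first_date, last_date = pd.Timestamp(first_date), pd.Timestamp(last_date)
    initial, period, horizon = (pd.Timedelta(x) for x in (initial, period, horizon))
    cutoffs = []
    cutoff = last_date - horizon
    # Keep a cutoff only while it leaves at least `initial` of training history.
    while cutoff >= first_date + initial:
        cutoffs.append(cutoff)
        cutoff -= period
    return cutoffs[::-1]

# Settings used in the cell above (training data runs 2018-01-01 .. 2019-05-31).
cv_400 = sketch_cutoffs("2018-01-01", "2019-05-31", "400 days", "20 days", "61 days")
# Settings used later in the grid search (initial='300 days').
cv_300 = sketch_cutoffs("2018-01-01", "2019-05-31", "300 days", "20 days", "61 days")
print(cv_400)
print(len(cv_300))
```

With only three folds the per-horizon metrics rest on very few points, so a smaller `initial` or `period` trades training history for more folds.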

from fbprophet.diagnostics import performance_metrics

df_p = performance_metrics(df_cv)

df_p

| | horizon | mse | rmse | mae | mape | mdape | coverage |

|---|---|---|---|---|---|---|---|

| 0 | 6 days | 5.036577e+07 | 7096.884863 | 4918.194810 | 0.225068 | 0.117936 | 0.888889 |

| 1 | 7 days | 6.514631e+07 | 8071.326656 | 5856.013065 | 0.269997 | 0.145172 | 0.777778 |

| 2 | 8 days | 6.050749e+07 | 7778.655803 | 5522.820783 | 0.259015 | 0.144838 | 0.833333 |

| 3 | 9 days | 5.499201e+07 | 7415.659853 | 4861.737092 | 0.227230 | 0.089064 | 0.833333 |

| 4 | 10 days | 7.613189e+07 | 8725.358811 | 6104.734432 | 0.514230 | 0.144838 | 0.722222 |

| 5 | 11 days | 8.454131e+07 | 9194.635103 | 6733.888274 | 0.544253 | 0.144838 | 0.666667 |

| 6 | 12 days | 5.702142e+07 | 7551.253189 | 5739.286869 | 0.466166 | 0.125538 | 0.722222 |

| 7 | 13 days | 6.444249e+07 | 8027.608125 | 6066.512978 | 0.454786 | 0.128257 | 0.777778 |

| 8 | 14 days | 6.994715e+07 | 8363.441080 | 6519.985453 | 0.473044 | 0.146653 | 0.722222 |

| 9 | 15 days | 7.334660e+07 | 8564.263056 | 6887.624032 | 0.489976 | 0.190327 | 0.722222 |

| 10 | 16 days | 5.113090e+07 | 7150.587245 | 5473.236556 | 0.194382 | 0.120843 | 0.833333 |

| 11 | 17 days | 4.776812e+07 | 6911.448113 | 5177.201837 | 0.168350 | 0.120843 | 0.833333 |

| 12 | 18 days | 4.879434e+07 | 6985.294200 | 5304.380258 | 0.172545 | 0.134687 | 0.833333 |

| 13 | 19 days | 3.374120e+07 | 5808.717248 | 4594.632498 | 0.160506 | 0.130753 | 0.833333 |

| 14 | 20 days | 2.835123e+07 | 5324.586964 | 4093.307775 | 0.137914 | 0.104959 | 0.888889 |

| 15 | 21 days | 2.597542e+07 | 5096.608660 | 3953.328901 | 0.125678 | 0.108755 | 0.888889 |

| 16 | 22 days | 3.005056e+07 | 5481.838863 | 4165.879585 | 0.135987 | 0.108755 | 0.833333 |

| 17 | 23 days | 3.412751e+07 | 5841.875501 | 4694.064916 | 0.167489 | 0.132673 | 0.833333 |

| 18 | 24 days | 3.173372e+07 | 5633.269469 | 4438.663112 | 0.161150 | 0.108755 | 0.833333 |

| 19 | 25 days | 2.580198e+07 | 5079.564654 | 3966.904822 | 0.149305 | 0.105248 | 0.888889 |

| 20 | 26 days | 6.629733e+07 | 8142.317261 | 5613.016240 | 0.280759 | 0.115520 | 0.777778 |

| 21 | 27 days | 1.040308e+08 | 10199.548006 | 7449.355947 | 0.340696 | 0.177644 | 0.611111 |

| 22 | 28 days | 1.062327e+08 | 10306.926933 | 7764.975241 | 0.351447 | 0.178371 | 0.611111 |

| 23 | 29 days | 9.940728e+07 | 9970.319716 | 7215.224151 | 0.317101 | 0.148194 | 0.666667 |

| 24 | 30 days | 1.241672e+08 | 11143.035696 | 8762.404184 | 0.626465 | 0.257710 | 0.555556 |

| 25 | 31 days | 1.802528e+08 | 13425.824888 | 10750.960946 | 0.672327 | 0.333554 | 0.444444 |

| 26 | 32 days | 1.466661e+08 | 12110.576143 | 9492.556532 | 0.555481 | 0.257710 | 0.500000 |

| 27 | 33 days | 1.176510e+08 | 10846.702382 | 8278.451679 | 0.516509 | 0.193604 | 0.611111 |

| 28 | 34 days | 1.384843e+08 | 11767.935920 | 9222.302781 | 0.530433 | 0.253314 | 0.555556 |

| 29 | 35 days | 1.890791e+08 | 13750.603926 | 10618.997444 | 0.542913 | 0.283066 | 0.500000 |

| 30 | 36 days | 1.901535e+08 | 13789.615377 | 10244.912611 | 0.249752 | 0.253314 | 0.555556 |

| 31 | 37 days | 1.558550e+08 | 12484.190357 | 9460.521845 | 0.216429 | 0.249387 | 0.555556 |

| 32 | 38 days | 1.536181e+08 | 12394.278523 | 9451.254663 | 0.222619 | 0.249387 | 0.611111 |

| 33 | 39 days | 1.480860e+08 | 12169.057681 | 8861.828873 | 0.210835 | 0.241381 | 0.611111 |

| 34 | 40 days | 1.252158e+08 | 11189.986285 | 7736.677584 | 0.182831 | 0.147683 | 0.722222 |

| 35 | 41 days | 8.926055e+07 | 9447.780057 | 7120.294127 | 0.179871 | 0.129423 | 0.722222 |

| 36 | 42 days | 6.410505e+07 | 8006.563142 | 6015.148609 | 0.163817 | 0.120477 | 0.777778 |

| 37 | 43 days | 4.810944e+07 | 6936.096997 | 5269.969759 | 0.171384 | 0.120477 | 0.833333 |

| 38 | 44 days | 4.262756e+07 | 6528.978153 | 4811.961491 | 0.157647 | 0.107473 | 0.833333 |

| 39 | 45 days | 4.413978e+07 | 6643.777542 | 5155.720735 | 0.170257 | 0.120477 | 0.888889 |

| 40 | 46 days | 7.963271e+07 | 8923.716078 | 6627.717504 | 0.295001 | 0.132407 | 0.777778 |

| 41 | 47 days | 1.036545e+08 | 10181.087101 | 7713.244395 | 0.314883 | 0.205121 | 0.666667 |

| 42 | 48 days | 1.164146e+08 | 10789.561689 | 8732.133775 | 0.343534 | 0.239747 | 0.555556 |

| 43 | 49 days | 1.167080e+08 | 10803.149147 | 8749.359553 | 0.315651 | 0.229185 | 0.555556 |

| 44 | 50 days | 1.329107e+08 | 11528.688433 | 9989.718561 | 0.375879 | 0.263346 | 0.444444 |

| 45 | 51 days | 1.857242e+08 | 13628.065153 | 11618.643655 | 0.409629 | 0.263346 | 0.333333 |

| 46 | 52 days | 1.526817e+08 | 12356.442706 | 10289.614124 | 0.294648 | 0.230352 | 0.388889 |

| 47 | 53 days | 1.239579e+08 | 11133.636525 | 9111.686535 | 0.275983 | 0.190616 | 0.444444 |

| 48 | 54 days | 1.335311e+08 | 11555.566150 | 9144.473747 | 0.269482 | 0.195774 | 0.500000 |

| 49 | 55 days | 1.803036e+08 | 13427.718151 | 10451.743405 | 0.289974 | 0.230276 | 0.500000 |

| 50 | 56 days | 1.899438e+08 | 13782.008262 | 10445.910805 | 0.246963 | 0.230276 | 0.555556 |

| 51 | 57 days | 1.620768e+08 | 12730.939480 | 10040.752197 | 0.226912 | 0.251473 | 0.500000 |

| 52 | 58 days | 1.608247e+08 | 12681.666976 | 10108.406902 | 0.234770 | 0.251473 | 0.555556 |

| 53 | 59 days | 1.473948e+08 | 12140.626141 | 8939.009436 | 0.209127 | 0.248878 | 0.666667 |

| 54 | 60 days | 1.296210e+08 | 11385.123358 | 8287.961040 | 0.195689 | 0.196069 | 0.722222 |

| 55 | 61 days | 9.342384e+07 | 9665.600614 | 7432.173954 | 0.184672 | 0.160993 | 0.722222 |
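The table starts at a horizon of 6 days rather than 1, and every coverage value is a multiple of 1/18. Both observations are consistent with the metrics being averaged over a rolling window of 10% of the rows, that is 18 of the 183 rows in `df_cv` (assumed here to correspond to a default of `rolling_window=0.1`). A rough sketch of such an aggregation on synthetic data; `rolling_rmse_by_horizon` is a hypothetical helper and only approximates what the library does:

```python
import numpy as np
import pandas as pd

def rolling_rmse_by_horizon(df_cv, rolling_window=0.1):
    """Rolling RMSE over rows sorted by horizon; one row per complete horizon."""
    df = df_cv.copy()
    df["horizon"] = df["ds"] - df["cutoff"]
    df = df.sort_values("horizon", kind="stable").reset_index(drop=True)
    w = max(int(rolling_window * len(df)), 1)  # window size in rows
    squared_error = (df["y"] - df["yhat"]) ** 2
    rolled = squared_error.rolling(w, min_periods=w).mean()
    out = (
        pd.DataFrame({"horizon": df["horizon"], "mse": rolled})
        .groupby("horizon", as_index=False)
        .last()      # value at the last row of each horizon
        .dropna()    # horizons reached before the first full window
        .reset_index(drop=True)
    )
    out["rmse"] = np.sqrt(out["mse"])
    return w, out

# Synthetic stand-in for df_cv: 3 cutoffs, 61 daily forecasts each.
cutoffs = pd.to_datetime(["2019-02-19", "2019-03-11", "2019-03-31"])
parts = []
for c in cutoffs:
    ds = pd.date_range(c + pd.Timedelta(days=1), periods=61, freq="D")
    parts.append(pd.DataFrame({"ds": ds, "cutoff": c}))
demo_cv = pd.concat(parts, ignore_index=True)
rng = np.random.default_rng(0)
demo_cv["y"] = rng.normal(40000, 5000, len(demo_cv))
demo_cv["yhat"] = demo_cv["y"] + rng.normal(0, 3000, len(demo_cv))

window, table = rolling_rmse_by_horizon(demo_cv)
print(window, len(table), table["horizon"].iloc[0])
```

Because each row of the table mixes several neighbouring horizons, a single bad stretch of days (such as the rows around 30 to 35 days above) shows up smeared across a range of horizons.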

Grid search

(This code works; the run was interrupted later because time was short.)

import itertools

# Hyperparameters to tune

param_grid = {

    'changepoint_prior_scale': [0.001, 0.005, 0.01, 0.15, 0.2, 0.25, 0.30, 0.35, 0.4, 0.45, 0.5],

    'seasonality_prior_scale': [0.05, 0.1, 0.5, 1, 5, 10, 15],

    "changepoint_range": [i / 10 for i in range(3, 10)],

    "seasonality_mode": ['additive', 'multiplicative'],

    "holidays_prior_scale": [0.05, 0.1, 0.5, 1, 5, 10, 15]

}

# Build every hyperparameter combination

all_params = [dict(zip(param_grid.keys(), v)) for v in itertools.product(*param_grid.values())]

# Validation error of each combination

mapes = []

# Grid search. Note: no holidays frame is passed to Prophet here, so holidays_prior_scale cannot change the fit.

for params in all_params:

    m = Prophet(**params).fit(data_train_prophet)

    df_cv = cross_validation(m, initial='300 days', period='20 days', horizon = '61 days')

    df_p = performance_metrics(df_cv, rolling_window=1)

    mapes.append(df_p['mape'].values[0])

INFO:fbprophet:Disabling yearly seasonality. Run prophet with yearly_seasonality=True to override this.

INFO:fbprophet:Disabling daily seasonality. Run prophet with daily_seasonality=True to override this.

WARNING:fbprophet.models:Optimization terminated abnormally. Falling back to Newton.

INFO:fbprophet:Making 8 forecasts with cutoffs between 2018-11-11 00:00:00 and 2019-03-31 00:00:00

0%| | 0/8 [00:00<?, ?it/s]

WARNING:fbprophet.models:Optimization terminated abnormally. Falling back to Newton.

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

C:\ProgramData\Anaconda3\lib\site-packages\fbprophet\models.py in fit(self, stan_init, stan_data, **kwargs)

244 try:

--> 245 self.stan_fit = self.model.optimizing(**args)

246 except RuntimeError:

C:\ProgramData\Anaconda3\lib\site-packages\pystan\model.py in optimizing(self, data, seed, init, sample_file, algorithm, verbose, as_vector, **kwargs)

580

--> 581 ret, sample = fit._call_sampler(stan_args)

582 pars = pystan.misc._par_vector2dict(sample['par'], m_pars, p_dims)

stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536.pyx in stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536.StanFit4Model._call_sampler()

stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536.pyx in stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536._call_sampler()

RuntimeError: Something went wrong after call_sampler.

During handling of the above exception, another exception occurred:

KeyboardInterrupt Traceback (most recent call last)

~\AppData\Local\Temp\ipykernel_27972\1694823081.py in <module>

20 for params in all_params:

21 m = Prophet(**params).fit(data_train_prophet)

---> 22 df_cv = cross_validation(m, initial='300 days', period='20 days', horizon = '61 days')

23 df_p = performance_metrics(df_cv, rolling_window=1)

24 mapes.append(df_p['mape'].values[0])

C:\ProgramData\Anaconda3\lib\site-packages\fbprophet\diagnostics.py in cross_validation(model, horizon, period, initial, parallel, cutoffs)

187 predicts = [

188 single_cutoff_forecast(df, model, cutoff, horizon, predict_columns)

--> 189 for cutoff in tqdm(cutoffs)

190 ]

191

C:\ProgramData\Anaconda3\lib\site-packages\fbprophet\diagnostics.py in <listcomp>(.0)

187 predicts = [

188 single_cutoff_forecast(df, model, cutoff, horizon, predict_columns)

--> 189 for cutoff in tqdm(cutoffs)

190 ]

191

C:\ProgramData\Anaconda3\lib\site-packages\fbprophet\diagnostics.py in single_cutoff_forecast(df, model, cutoff, horizon, predict_columns)

225 'Increase initial window.'

226 )

--> 227 m.fit(history_c, **model.fit_kwargs)

228 # Calculate yhat

229 index_predicted = (df['ds'] > cutoff) & (df['ds'] <= cutoff + horizon)

C:\ProgramData\Anaconda3\lib\site-packages\fbprophet\forecaster.py in fit(self, df, **kwargs)

1164 self.params = self.stan_backend.sampling(stan_init, dat, self.mcmc_samples, **kwargs)

1165 else:

-> 1166 self.params = self.stan_backend.fit(stan_init, dat, **kwargs)

1167

1168 # If no changepoints were requested, replace delta with 0s

C:\ProgramData\Anaconda3\lib\site-packages\fbprophet\models.py in fit(self, stan_init, stan_data, **kwargs)

250 )

251 args['algorithm'] = 'Newton'

--> 252 self.stan_fit = self.model.optimizing(**args)

253

254 params = dict()

C:\ProgramData\Anaconda3\lib\site-packages\pystan\model.py in optimizing(self, data, seed, init, sample_file, algorithm, verbose, as_vector, **kwargs)

579 stan_args = pystan.misc._get_valid_stan_args(stan_args)

580

--> 581 ret, sample = fit._call_sampler(stan_args)

582 pars = pystan.misc._par_vector2dict(sample['par'], m_pars, p_dims)

583 if not as_vector:

stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536.pyx in stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536.StanFit4Model._call_sampler()

stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536.pyx in stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536._call_sampler()

stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536.pyx in stanfit4anon_model_f5236004a3fd5b8429270d00efcc0cf9_7332008770348935536._dict_from_stanargs()

C:\ProgramData\Anaconda3\lib\enum.py in __call__(cls, value, names, module, qualname, type, start)

313 """

314 if names is None: # simple value lookup

--> 315 return cls.__new__(cls, value)

316 # otherwise, functional API: we're creating a new Enum type

317 return cls._create_(value, names, module=module, qualname=qualname, type=type, start=start)

KeyboardInterrupt:
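The interruption is not surprising given the size of the grid, which can be counted before starting a run. In the `mapes` list each value appears seven times in a row, which matches the seven `holidays_prior_scale` settings (the innermost loop) having no effect when no holidays are passed. A sketch that counts the full grid, the grid without that parameter, and a random subset; the subset size of 30 and the seed are arbitrary choices for illustration:

```python
import itertools
import random

param_grid = {
    "changepoint_prior_scale": [0.001, 0.005, 0.01, 0.15, 0.2, 0.25, 0.30, 0.35, 0.4, 0.45, 0.5],
    "seasonality_prior_scale": [0.05, 0.1, 0.5, 1, 5, 10, 15],
    "changepoint_range": [i / 10 for i in range(3, 10)],
    "seasonality_mode": ["additive", "multiplicative"],
    "holidays_prior_scale": [0.05, 0.1, 0.5, 1, 5, 10, 15],
}

def expand(grid):
    """Return every combination of the grid as a list of dicts."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in itertools.product(*grid.values())]

all_params = expand(param_grid)
n_total = len(all_params)

# Dropping a parameter that cannot affect the fit shrinks the grid sevenfold.
reduced_grid = {k: v for k, v in param_grid.items() if k != "holidays_prior_scale"}
reduced_params = expand(reduced_grid)
n_reduced = len(reduced_params)

# Random search: evaluate a fixed-size random subset instead of everything.
sampled_params = random.Random(0).sample(reduced_params, 30)
print(n_total, n_reduced, len(sampled_params))
```

Each combination also triggers one fit plus one refit per cross-validation cutoff, so the number of model fits is a multiple of the counts printed here.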

mapes

[0.45648954214880777,

0.45648954214880777,

0.45648954214880777,

0.45648954214880777,

0.45648954214880777,

0.45648954214880777,

0.45648954214880777,

0.45912830121340914,

0.45912830121340914,

0.45912830121340914,

0.45912830121340914,

0.45912830121340914,

0.45912830121340914,

0.45912830121340914,

0.4568007117395657,

0.4568007117395657,

0.4568007117395657,

0.4568007117395657,

0.4568007117395657,

0.4568007117395657,

0.4568007117395657,

0.4591920515559872,

0.4591920515559872,

0.4591920515559872,

0.4591920515559872,

0.4591920515559872,

0.4591920515559872,

0.4591920515559872,

0.45510449474358516,

0.45510449474358516,

0.45510449474358516,

0.45510449474358516,

0.45510449474358516,

0.45510449474358516,

0.45510449474358516,

0.45823943263013306,

0.45823943263013306,

0.45823943263013306,

0.45823943263013306,

0.45823943263013306,

0.45823943263013306,

0.45823943263013306,

0.4554239499688715,

0.4554239499688715,

0.4554239499688715,

0.4554239499688715,

0.4554239499688715,

0.4554239499688715,

0.4554239499688715,

0.45879268876194945,

0.45879268876194945,

0.45879268876194945,

0.45879268876194945,

0.45879268876194945,

0.45879268876194945,

0.45879268876194945,

0.45667322427892104,

0.45667322427892104,

0.45667322427892104,

0.45667322427892104,

0.45667322427892104,

0.45667322427892104,

0.45667322427892104,

0.4607103676236504,

0.4607103676236504,

0.4607103676236504,

0.4607103676236504,

0.4607103676236504,

0.4607103676236504,

0.4607103676236504,

0.5206491117824643,

0.5206491117824643,

0.5206491117824643,

0.5206491117824643,

0.5206491117824643,

0.5206491117824643,

0.5206491117824643,

0.5249336893238647,

0.5249336893238647,

0.5249336893238647,

0.5249336893238647,

0.5249336893238647,

0.5249336893238647,

0.5249336893238647,

0.5236945915365312,

0.5236945915365312,

0.5236945915365312,

0.5236945915365312,

0.5236945915365312,

0.5236945915365312,

0.5236945915365312,

0.5260598977697104,

0.5260598977697104,

0.5260598977697104,

0.5260598977697104,

0.5260598977697104,

0.5260598977697104,

0.5260598977697104,

0.45904743702515494,

0.45904743702515494,

0.45904743702515494,

0.45904743702515494,

0.45904743702515494,

0.45904743702515494,

0.45904743702515494,

0.45913177773182684,

0.45913177773182684,

0.45913177773182684,

0.45913177773182684,

0.45913177773182684,

0.45913177773182684,

0.45913177773182684,

0.45961175007785626,

0.45961175007785626,

0.45961175007785626,

0.45961175007785626,

0.45961175007785626,

0.45961175007785626,

0.45961175007785626,

0.4588563774303946,

0.4588563774303946,

0.4588563774303946,

0.4588563774303946,

0.4588563774303946,

0.4588563774303946,

0.4588563774303946,

0.4564027795629576,

0.4564027795629576,

0.4564027795629576,

0.4564027795629576,

0.4564027795629576,

0.4564027795629576,

0.4564027795629576,

0.4581067253308648,

0.4581067253308648,

0.4581067253308648,

0.4581067253308648,

0.4581067253308648,

0.4581067253308648,

0.4581067253308648,

0.4573986858665292,

0.4573986858665292,

0.4573986858665292,

0.4573986858665292,

0.4573986858665292,

0.4573986858665292,

0.4573986858665292,

0.45918990550647126,

0.45918990550647126,

0.45918990550647126,

0.45918990550647126,

0.45918990550647126,

0.45918990550647126,

0.45918990550647126,

0.45814826453953184,

0.45814826453953184,

0.45814826453953184,

0.45814826453953184,

0.45814826453953184,

0.45814826453953184,

0.45814826453953184,

0.45934417584343573,

0.45934417584343573,

0.45934417584343573,

0.45934417584343573,

0.45934417584343573,

0.45934417584343573,

0.45934417584343573,

0.520743204175105,

0.520743204175105,

0.520743204175105,

0.520743204175105,

0.520743204175105,

0.520743204175105,

0.520743204175105,

0.5247093553511951,

0.5247093553511951,

0.5247093553511951,

0.5247093553511951,

0.5247093553511951,

0.5247093553511951,

0.5247093553511951,

0.5247960672686005,

0.5247960672686005,

0.5247960672686005,

0.5247960672686005,

0.5247960672686005,

0.5247960672686005,

0.5247960672686005,

0.4954321092488347,

0.4954321092488347,

0.4954321092488347,

0.4954321092488347,

0.4954321092488347,

0.4954321092488347,

0.4954321092488347,

0.45776923159529403,

0.45776923159529403,

0.45776923159529403,

0.45776923159529403,

0.45776923159529403,

0.45776923159529403,

0.45776923159529403,

0.45870477732006926,

0.45870477732006926,

0.45870477732006926,

0.45870477732006926,

0.45870477732006926,

0.45870477732006926,

0.45870477732006926,

0.45940105978119594,

0.45940105978119594,

0.45940105978119594,

0.45940105978119594,

0.45940105978119594,

0.45940105978119594,

0.45940105978119594,

0.45767226940712247,

0.45767226940712247,

0.45767226940712247,

0.45767226940712247,

0.45767226940712247,

0.45767226940712247,

0.45767226940712247,

0.4564011583170134,

0.4564011583170134,

0.4564011583170134,

0.4564011583170134,

0.4564011583170134,

0.4564011583170134,

0.4564011583170134,

0.4592087592435913,

0.4592087592435913,

0.4592087592435913,

0.4592087592435913,

0.4592087592435913,

0.4592087592435913,

0.4592087592435913,

0.45777443140182345,

0.45777443140182345,

0.45777443140182345,

0.45777443140182345,

0.45777443140182345,

0.45777443140182345,

0.45777443140182345,

0.45924616460485934,

0.45924616460485934,

0.45924616460485934,

0.45924616460485934,

0.45924616460485934,

0.45924616460485934,

0.45924616460485934,

0.4583897787591276,

0.4583897787591276,

0.4583897787591276,

0.4583897787591276,

0.4583897787591276,

0.4583897787591276,

0.4583897787591276,

0.45956928793276064,

0.45956928793276064,

0.45956928793276064,

0.45956928793276064,

0.45956928793276064,

0.45956928793276064,

0.45956928793276064,

0.519717041131953,

0.519717041131953,

0.519717041131953,

0.519717041131953,

0.519717041131953,

0.519717041131953,

0.519717041131953,

0.4895468397347071,

0.4895468397347071,

0.4895468397347071,

0.4895468397347071,

0.4895468397347071,

0.4895468397347071,

0.4895468397347071,

0.48624758680837576,

0.48624758680837576,

0.48624758680837576,

0.48624758680837576,

0.48624758680837576,

0.48624758680837576,

0.48624758680837576,

0.5242938412806915,

0.5242938412806915,

0.5242938412806915,

0.5242938412806915,

0.5242938412806915,

0.5242938412806915,

0.5242938412806915,

0.45732537831372994,

0.45732537831372994,

0.45732537831372994,

0.45732537831372994,

0.45732537831372994,

0.45732537831372994,

0.45732537831372994,

0.45964326126801874,

0.45964326126801874,

0.45964326126801874,

0.45964326126801874,

0.45964326126801874,

0.45964326126801874,

0.45964326126801874,

0.45826588652465855,

0.45826588652465855,

0.45826588652465855,

0.45826588652465855,

0.45826588652465855,

0.45826588652465855,

0.45826588652465855,

0.4590153544745371,

0.4590153544745371,

0.4590153544745371,

0.4590153544745371,

0.4590153544745371,

0.4590153544745371,

0.4590153544745371,

0.4559833616122703,

0.4559833616122703,

0.4559833616122703,

0.4559833616122703,

0.4559833616122703,

0.4559833616122703,

0.4559833616122703,

0.45857840596563093,

0.45857840596563093,

0.45857840596563093,

0.45857840596563093,

0.45857840596563093,

0.45857840596563093,

0.45857840596563093,

0.4556990122121692,

0.4556990122121692,

0.4556990122121692,

0.4556990122121692,

0.4556990122121692,

0.4556990122121692,

0.4556990122121692,

0.45917761156046616,

0.45917761156046616,

0.45917761156046616,

0.45917761156046616,

0.45917761156046616,

0.45917761156046616,

0.45917761156046616,

0.4569928761431437,

0.4569928761431437,

0.4569928761431437,

0.4569928761431437,

0.4569928761431437,

0.4569928761431437,

0.4569928761431437,

0.45938906512694433,

0.45938906512694433,

0.45938906512694433,

0.45938906512694433,

0.45938906512694433,

0.45938906512694433,

0.45938906512694433,

0.5195442695864627,

0.5195442695864627,

0.5195442695864627,

0.5195442695864627,

0.5195442695864627,

0.5195442695864627,

0.5195442695864627,

0.5237921242093493,

0.5237921242093493,

0.5237921242093493,

0.5237921242093493,

0.5237921242093493,

0.5237921242093493,

0.5237921242093493,

0.5236331415297112,

0.5236331415297112,

0.5236331415297112,

0.5236331415297112,

0.5236331415297112,

0.5236331415297112,

0.5236331415297112,

0.5257152671018255,

0.5257152671018255,

0.5257152671018255,

0.5257152671018255,

0.5257152671018255,

0.5257152671018255,

0.5257152671018255,

0.45742449248679157,

0.45742449248679157,

0.45742449248679157,

0.45742449248679157,

0.45742449248679157,

0.45742449248679157,

0.45742449248679157,

0.46006417379971354,

0.46006417379971354,

0.46006417379971354,

0.46006417379971354,

0.46006417379971354,

0.46006417379971354,

0.46006417379971354,

0.45805002081483975,

0.45805002081483975,

0.45805002081483975,

0.45805002081483975,

0.45805002081483975,

0.45805002081483975,

0.45805002081483975,

0.45906816327999206,

0.45906816327999206,

0.45906816327999206,

0.45906816327999206,

0.45906816327999206,

0.45906816327999206,

0.45906816327999206,

0.4558062815453554,

0.4558062815453554,

0.4558062815453554,

0.4558062815453554,

0.4558062815453554,

0.4558062815453554,

0.4558062815453554,

0.45814752370252865,

0.45814752370252865,

0.45814752370252865,

0.45814752370252865,

0.45814752370252865,

0.45814752370252865,

0.45814752370252865,

0.4554758339749052,

0.4554758339749052,

0.4554758339749052,

0.4554758339749052,

0.4554758339749052,

0.4554758339749052,

0.4554758339749052,

0.4592112628621341,

0.4592112628621341,

0.4592112628621341,

0.4592112628621341,

0.4592112628621341,

0.4592112628621341,

0.4592112628621341,

0.456751237891377,

0.456751237891377,

0.456751237891377,

0.456751237891377,

0.456751237891377,

0.456751237891377,

0.456751237891377,

0.45951038595264654,

0.45951038595264654,

0.45951038595264654,

0.45951038595264654,

0.45951038595264654,

0.45951038595264654,

0.45951038595264654,

0.5190684498265534,

0.5190684498265534,

0.5190684498265534,

0.5190684498265534,

0.5190684498265534,

0.5190684498265534,

0.5190684498265534,

0.5242254242885218,

0.5242254242885218,

0.5242254242885218,

0.5242254242885218,

0.5242254242885218,

0.5242254242885218,

0.5242254242885218,

0.5236403994221426,

0.5236403994221426,

0.5236403994221426,

0.5236403994221426,

0.5236403994221426,

0.5236403994221426,

0.5236403994221426,

0.5245127323972775,

0.5245127323972775,

0.5245127323972775,

0.5245127323972775,

0.5245127323972775,

0.5245127323972775,

0.5245127323972775,

0.45743857846100333,

0.45743857846100333,

0.45743857846100333,

0.45743857846100333,

0.45743857846100333,

0.45743857846100333,

0.45743857846100333,

0.4581023784528862,

0.4581023784528862,

0.4581023784528862,

0.4581023784528862,

0.4581023784528862,

0.4581023784528862,

0.4581023784528862,

0.45804155074040936,

0.45804155074040936,

0.45804155074040936,

0.45804155074040936,

0.45804155074040936,

0.45804155074040936,

0.45804155074040936,

0.45904207260264823,

0.45904207260264823,

0.45904207260264823,

0.45904207260264823,

0.45904207260264823,

0.45904207260264823,

0.45904207260264823,

0.45580530661993646,

0.45580530661993646,

0.45580530661993646,

0.45580530661993646,

0.45580530661993646,

0.45580530661993646,

0.45580530661993646,

0.4605528126286541,

0.4605528126286541,

0.4605528126286541,

0.4605528126286541,

0.4605528126286541,

0.4605528126286541,

0.4605528126286541,

0.455476409951663,

0.455476409951663,

0.455476409951663,

0.455476409951663,

0.455476409951663,

0.455476409951663,

0.455476409951663,

0.4590906059792743,

0.4590906059792743,

0.4590906059792743,

0.4590906059792743,

0.4590906059792743,

0.4590906059792743,

0.4590906059792743,

0.4567476334857499,

0.4567476334857499,

0.4567476334857499,

0.4567476334857499,

0.4567476334857499,

0.4567476334857499,

0.4567476334857499,

0.4595237291251589,

0.4595237291251589,

0.4595237291251589,

0.4595237291251589,

0.4595237291251589,

0.4595237291251589,

0.4595237291251589,

0.518423356810763,

0.518423356810763,

0.518423356810763,

0.518423356810763,

0.518423356810763,

0.518423356810763,

0.518423356810763,

0.5237199847421963,

0.5237199847421963,

0.5237199847421963,

0.5237199847421963,

0.5237199847421963,

0.5237199847421963,

0.5237199847421963,

0.5236691149175264,

0.5236691149175264,

0.5236691149175264,

0.5236691149175264,

0.5236691149175264,

0.5236691149175264,

0.5236691149175264,

0.49475402516004363,

0.49475402516004363,

0.49475402516004363,

0.49475402516004363,

0.49475402516004363,

0.49475402516004363,

0.49475402516004363,

0.4574330893288633,

0.4574330893288633,

0.4574330893288633,

0.4574330893288633,

0.4574330893288633,

0.4574330893288633,

0.4574330893288633,

0.4576504652718613,

0.4576504652718613,

0.4576504652718613,

0.4576504652718613,

0.4576504652718613,

0.4576504652718613,

0.4576504652718613,

0.4580471650876356,

0.4580471650876356,

0.4580471650876356,

0.4580471650876356,

0.4580471650876356,

0.4580471650876356,

0.4580471650876356,

0.45907145875343724,

0.45907145875343724,

0.45907145875343724,

0.45907145875343724,

0.45907145875343724,

0.45907145875343724,

0.45907145875343724,

0.4558073164160799,

0.4558073164160799,

0.4558073164160799,

0.4558073164160799,

0.4558073164160799,

0.4558073164160799,

0.4558073164160799,

0.4605935211413079,

0.4605935211413079,

0.4605935211413079,

0.4605935211413079,

0.4605935211413079,

0.4605935211413079,

0.4605935211413079,

0.45547605176401995,

0.45547605176401995,

0.45547605176401995,

0.45547605176401995,

0.45547605176401995,

0.45547605176401995,

0.45547605176401995,

0.45910135612245917,

0.45910135612245917,

0.45910135612245917,

0.45910135612245917,

0.45910135612245917,

0.45910135612245917,

0.45910135612245917,

0.456748769553789,

0.456748769553789,

0.456748769553789,

0.456748769553789,

0.456748769553789,

0.456748769553789,

0.456748769553789,

0.4595384807994946,

0.4595384807994946,

0.4595384807994946,

0.4595384807994946,

0.4595384807994946,

0.4595384807994946,

0.4595384807994946,

0.5195265552613648,

0.5195265552613648,

0.5195265552613648,

0.5195265552613648,

0.5195265552613648,

0.5195265552613648,

0.5195265552613648,

0.5241649673697295,

0.5241649673697295,

0.5241649673697295,

0.5241649673697295,

0.5241649673697295,

0.5241649673697295,

0.5241649673697295,

0.5236822877873643,

0.5236822877873643,

0.5236822877873643,

0.5236822877873643,

0.5236822877873643,

0.5236822877873643,

0.5236822877873643,

0.48931463105703094,

0.48931463105703094,

0.48931463105703094,

0.48931463105703094,

0.48931463105703094,

0.48931463105703094,

0.48931463105703094,

0.39666068603467913,

0.39666068603467913,

0.39666068603467913,

0.39666068603467913,

0.39666068603467913,

0.39666068603467913,

0.39666068603467913,

0.3901798392098369,

0.3901798392098369,

0.3901798392098369,

0.3901798392098369,

0.3901798392098369,

0.3901798392098369,

0.3901798392098369,

0.3885609967595123,

0.3885609967595123,

0.3885609967595123,

0.3885609967595123,

0.3885609967595123,

0.3885609967595123,

0.3885609967595123,

0.3865859839155998,

0.3865859839155998,

0.3865859839155998,

0.3865859839155998,

0.3865859839155998,

0.3865859839155998,

0.3865859839155998,

0.38240879237653774,

0.38240879237653774,

0.38240879237653774,

0.38240879237653774,

0.38240879237653774,

0.38240879237653774,

0.38240879237653774,

0.3982902466772551,

0.3982902466772551,

0.3982902466772551,

0.3982902466772551,

0.3982902466772551,

0.3982902466772551,

0.3982902466772551,

0.3816506485272468,

0.3816506485272468,

0.3816506485272468,

0.3816506485272468,

0.3816506485272468,

0.3816506485272468,

0.3816506485272468,

0.38819283273744465,

0.38819283273744465,

0.38819283273744465,

0.38819283273744465,

0.38819283273744465,

0.38819283273744465,

0.38819283273744465,

0.3835185359826565,

0.3835185359826565,

0.3835185359826565,

0.3835185359826565,

0.3835185359826565,

0.3835185359826565,

0.3835185359826565,

0.3833135827625621,

0.3833135827625621,

0.3833135827625621,

0.3833135827625621,

0.3833135827625621,

0.3833135827625621,

0.3833135827625621,

0.3869849805112024,

0.3869849805112024,

0.3869849805112024,

0.3869849805112024,

0.3869849805112024,

0.3869849805112024,

0.3869849805112024,

0.38602263519113766,

0.38602263519113766,

0.38602263519113766,

0.38602263519113766,

0.38602263519113766,

0.38602263519113766,

0.38602263519113766,

0.38411209707335087,

0.38411209707335087,

0.38411209707335087,

0.38411209707335087,

0.38411209707335087,

0.38411209707335087,

0.38411209707335087,

0.3836342938422278,

0.3836342938422278,

0.3836342938422278,

0.3836342938422278,

0.3836342938422278,

0.3836342938422278,

0.3836342938422278,

0.39662580862686186,

0.39662580862686186,

0.39662580862686186,

0.39662580862686186,

0.39662580862686186,

0.39662580862686186,

0.39662580862686186,

0.3830553318350906,

0.3830553318350906,

0.3830553318350906,

0.3830553318350906,

0.3830553318350906,

0.3830553318350906,

0.3830553318350906,

0.3823118251955382,

0.3823118251955382,

0.3823118251955382,

0.3823118251955382,

0.3823118251955382,

0.3823118251955382,

0.3823118251955382,

0.38255510970482315,

0.38255510970482315,

0.38255510970482315,

0.38255510970482315,

0.38255510970482315,

0.38255510970482315,

0.38255510970482315,

0.38640382361419084,

0.38640382361419084,

0.38640382361419084,

0.38640382361419084,

0.38640382361419084,

0.38640382361419084,

0.38640382361419084,

0.38415364556395093,

0.38415364556395093,

0.38415364556395093,

0.38415364556395093,

0.38415364556395093,

0.38415364556395093,

0.38415364556395093,

0.3818837737582994,

0.3818837737582994,

0.3818837737582994,

0.3818837737582994,

0.3818837737582994,

0.3818837737582994,

0.3818837737582994,

0.37792739926390617,

0.37792739926390617,

0.37792739926390617,

0.37792739926390617,

0.37792739926390617,

0.37792739926390617,

0.37792739926390617,

0.37736810319664643,

0.37736810319664643,

0.37736810319664643,

0.37736810319664643,

0.37736810319664643,

0.37736810319664643,

0.37736810319664643,

0.3812731577523757,

0.3812731577523757,

0.3812731577523757,

0.3812731577523757,

0.3812731577523757,

0.3812731577523757,

0.3812731577523757,

0.38421582153462613,

0.38421582153462613,

0.38421582153462613,

0.38421582153462613,

0.38421582153462613,

0.38421582153462613,

0.38421582153462613,

0.3889604354373833,

0.3889604354373833,

0.3889604354373833,

0.3889604354373833,

0.3889604354373833,

0.3889604354373833,

0.3889604354373833,

0.3861872963607218,

0.3861872963607218,

0.3861872963607218,

0.3861872963607218,

0.3861872963607218,

0.3861872963607218,

0.3861872963607218,

0.39118932154019814,

0.39118932154019814,

0.39118932154019814,

0.39118932154019814,

0.39118932154019814,

0.39118932154019814,

0.39118932154019814,

0.38736339748522314,

0.38736339748522314,

0.38736339748522314,

0.38736339748522314,

0.38736339748522314,

0.38736339748522314,

0.38736339748522314,

0.3896752109205865,

0.3896752109205865,

0.3896752109205865,

0.3896752109205865,

0.3896752109205865,

0.3896752109205865,

0.3896752109205865,

0.38977562697069384,

0.38977562697069384,

0.38977562697069384,

0.38977562697069384,

0.38977562697069384,

0.38977562697069384,

0.38977562697069384,

0.38461408781610934,

0.38461408781610934,

0.38461408781610934,

0.38461408781610934,

0.38461408781610934,

0.38461408781610934,

0.38461408781610934,

0.38525662593794624,

0.38525662593794624,

0.38525662593794624,

0.38525662593794624,

0.38525662593794624,

0.38525662593794624,

0.38525662593794624,

0.3907619488290876,

0.3907619488290876,

0.3907619488290876,

0.3907619488290876,

0.3907619488290876,

0.3907619488290876,

0.3907619488290876,

0.3823301807634558,

0.3823301807634558,

0.3823301807634558,

0.3823301807634558,

0.3823301807634558,

0.3823301807634558,

0.3823301807634558,

0.38428628810978344,

0.38428628810978344,

0.38428628810978344,

0.38428628810978344,

0.38428628810978344,

0.38428628810978344,

0.38428628810978344,

0.38762293845906487,

0.38762293845906487,

0.38762293845906487,

0.38762293845906487,

0.38762293845906487,

0.38762293845906487,

0.38762293845906487,

0.38077971307423275,

0.38077971307423275,

0.38077971307423275,

0.38077971307423275,

0.38077971307423275,

0.38077971307423275,

0.38077971307423275,

0.37348132870423595,

0.37348132870423595,

0.37348132870423595,

0.37348132870423595,

0.37348132870423595,

0.37348132870423595,

0.37348132870423595,

0.38047858538493773,

0.38047858538493773,

0.38047858538493773,

0.38047858538493773,

0.38047858538493773,

0.38047858538493773,

0.38047858538493773,

0.38065416099287325,

0.38065416099287325,

0.38065416099287325,

0.38065416099287325,

0.38065416099287325,

0.38065416099287325,

0.38065416099287325,

0.38802750495965405,

0.38802750495965405,

0.38802750495965405,

0.38802750495965405,

0.38802750495965405,

0.38802750495965405,

0.38802750495965405,

0.3896374109795356,

0.3896374109795356,

0.3896374109795356,

0.3896374109795356,

0.3896374109795356,

0.3896374109795356,

0.3896374109795356,

0.3891513317710575,

0.3891513317710575,

0.3891513317710575,

0.3891513317710575,

0.3891513317710575,

0.3891513317710575,

0.3891513317710575,

0.3873051573174501,

0.3873051573174501,

0.3873051573174501,

0.3873051573174501,

0.3873051573174501,

0.3873051573174501,

...]

# Find the best hyperparameters (sorted by MAPE, lowest first)

tuning_results = pd.DataFrame(all_params)

tuning_results['mape'] = mapes

tuning_results.sort_values(by = "mape")

| | changepoint_prior_scale | seasonality_prior_scale | changepoint_range | seasonality_mode | holidays_prior_scale | mape |
|---|---|---|---|---|---|---|
| 5003 | 35.000 | 0.50 | 0.3 | additive | 10.00 | 0.360404 |
| 5001 | 35.000 | 0.50 | 0.3 | additive | 1.00 | 0.360404 |
| 5000 | 35.000 | 0.50 | 0.3 | additive | 0.50 | 0.360404 |
| 4999 | 35.000 | 0.50 | 0.3 | additive | 0.10 | 0.360404 |
| 4998 | 35.000 | 0.50 | 0.3 | additive | 0.05 | 0.360404 |
| ... | ... | ... | ... | ... | ... | ... |
| 95 | 0.001 | 0.05 | 0.9 | multiplicative | 5.00 | 0.526060 |
| 94 | 0.001 | 0.05 | 0.9 | multiplicative | 1.00 | 0.526060 |
| 93 | 0.001 | 0.05 | 0.9 | multiplicative | 0.50 | 0.526060 |
| 92 | 0.001 | 0.05 | 0.9 | multiplicative | 0.10 | 0.526060 |
| 91 | 0.001 | 0.05 | 0.9 | multiplicative | 0.05 | 0.526060 |

7546 rows × 6 columns
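
In the sorted table, the top rows differ only in `holidays_prior_scale` and share the same MAPE, so it may be worth pulling out the best combination programmatically and checking whether that parameter affects the score at all. The following is a minimal sketch, not the original notebook's code: the small `tuning_results` frame is a hypothetical stand-in built from a few of the rows displayed above, and the names `best_params` and `holidays_matters` are introduced here for illustration only.

```python
import pandas as pd

# Hypothetical stand-in for the real tuning_results frame;
# the values are copied from a few of the rows displayed above.
tuning_results = pd.DataFrame(
    {
        "changepoint_prior_scale": [35.0, 35.0, 0.001, 0.001],
        "seasonality_prior_scale": [0.5, 0.5, 0.05, 0.05],
        "changepoint_range": [0.3, 0.3, 0.9, 0.9],
        "seasonality_mode": ["additive", "additive",
                             "multiplicative", "multiplicative"],
        "holidays_prior_scale": [10.0, 1.0, 5.0, 1.0],
        "mape": [0.360404, 0.360404, 0.526060, 0.526060],
    },
    index=[5003, 5001, 95, 94],
)

# Row with the lowest MAPE (on ties, idxmin returns the first occurrence).
best_row = tuning_results.loc[tuning_results["mape"].idxmin()]

# Drop the score so that only the hyperparameters remain.
best_params = best_row.drop("mape").to_dict()

# Group by every parameter except holidays_prior_scale and count the
# distinct MAPE values per group; more than one would mean that
# holidays_prior_scale changes the score.
other_params = [
    c for c in tuning_results.columns
    if c not in ("holidays_prior_scale", "mape")
]
distinct_mapes = tuning_results.groupby(other_params)["mape"].nunique()
holidays_matters = bool((distinct_mapes > 1).any())

print(best_params)
print("holidays_prior_scale affects MAPE:", holidays_matters)
```

If `holidays_matters` comes out `False` on the full results, that parameter could be dropped from the grid, which would shrink the search by the number of values tried for it.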
