Multiple linear regression:
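For reference, the usual closed-form least-squares solution works like this. Augment each sample to `x_hat = (x; 1)` so the bias is folded into `w_hat = (w; b)`. If `X^T X` is invertible (with `X` the augmented data matrix), then `w_hat* = (X^T X)^{-1} X^T y`. Below is a minimal NumPy sketch. The helper name `fit_linear_regression` and the noise-free toy data are illustrative assumptions.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Closed-form least squares: returns (w, b) minimizing ||X w + b - y||^2.

    Assumes the augmented matrix [X, 1] has full column rank, so that
    X_hat^T X_hat is invertible.
    """
    X_hat = np.hstack([X, np.ones((X.shape[0], 1))])  # each row becomes (x; 1)
    # Solve the normal equations instead of forming the inverse explicitly
    w_hat = np.linalg.solve(X_hat.T @ X_hat, X_hat.T @ y)
    return w_hat[:-1], w_hat[-1]

# Toy data generated from y = 2*x1 - 3*x2 + 1 with no noise, so the fit should recover it
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]])
y = 2 * X[:, 0] - 3 * X[:, 1] + 1
w, b = fit_linear_regression(X, y)
print(w, b)
```

`np.linalg.solve` is used rather than `np.linalg.inv` because solving the linear system directly is cheaper and numerically more stable.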

Logistic regression (log-odds regression):
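For reference, here is the standard form of the model, in notation assumed to match the usual textbook presentation. The sigmoid maps the linear score to a probability, so the log-odds are linear in `x`. The parameters are fit by minimizing the negative log-likelihood, and its gradient is what `get_gradient` in the code below computes (summed over samples).

```latex
p(y=1 \mid x) = \frac{1}{1 + e^{-(w^\top x + b)}}, \qquad
\ln \frac{p(y=1 \mid x)}{1 - p(y=1 \mid x)} = w^\top x + b

\ell(w, b) = -\sum_{i=1}^{m} \Big[ y_i \ln p_i + (1 - y_i) \ln (1 - p_i) \Big],
\qquad p_i = p(y=1 \mid x_i)

\frac{\partial \ell}{\partial w} = -\sum_{i=1}^{m} (y_i - p_i)\, x_i, \qquad
\frac{\partial \ell}{\partial b} = -\sum_{i=1}^{m} (y_i - p_i)
```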

Exercise: implement logistic regression in code and report its results on watermelon dataset 3.0α from the watermelon book.

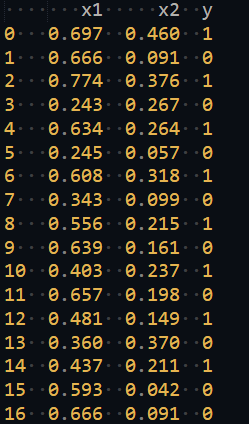

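The first half of the dataset consists entirely of positive examples and the second half entirely of negative ones. Splitting it directly would therefore be badly unbalanced: the first 80% (13 samples) would contain all 8 positives, and the test set would contain only negatives. To avoid this, the samples are first reordered so that positives and negatives alternate. The code below prints the reordered table as `data_tt`.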
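Interleaving works here because the two classes are nearly the same size (8 positives, 9 negatives). A more general alternative, sketched below as an assumption rather than what this post uses, is a stratified split. It takes the same fraction of each class for the test set, so both sets keep roughly the original class ratio. The function name `stratified_split`, the `seed` parameter and the placeholder features are illustrative.

```python
import numpy as np

def stratified_split(X, y, test_rate=0.2, seed=0):
    """Split (X, y) so that each class contributes about test_rate of its samples to the test set."""
    rng = np.random.default_rng(seed)
    test_idx = []
    for label in np.unique(y):
        idx = np.flatnonzero(y == label)       # positions of this class
        rng.shuffle(idx)                       # random choice within the class
        n_test = max(1, int(round(len(idx) * test_rate)))
        test_idx.extend(idx[:n_test].tolist())
    test_mask = np.zeros(len(y), dtype=bool)
    test_mask[test_idx] = True
    return X[~test_mask], y[~test_mask], X[test_mask], y[test_mask]

# Same label layout as watermelon 3.0α: 8 positives followed by 9 negatives
y = np.array([1] * 8 + [0] * 9)
X = np.arange(len(y) * 2, dtype=float).reshape(-1, 2)  # placeholder features
train_x, train_y, test_x, test_y = stratified_split(X, y)
print(len(train_y), len(test_y), test_y.sum())
```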

80% of the data is used as the training set and 20% as the test set. The parameters are found by gradient descent:
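Gradient descent is only correct if `get_gradient` returns the derivative of the negative log-likelihood: `-sum_i (y_i - p_i) x_i` for `w` and `-sum_i (y_i - p_i)` for `b`. One way to check this is to compare the analytic gradient with a central finite-difference estimate. The sketch below does that on random data. The helper names `nll`, `analytic_grad` and `numeric_grad` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def nll(x, y, w, b):
    # negative log-likelihood summed over samples
    p = sigmoid(x @ w + b)
    return -np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))

def analytic_grad(x, y, w, b):
    # same formula as get_gradient: -sum (y - p) x  and  -sum (y - p)
    e = y - sigmoid(x @ w + b)
    return -(x.T @ e), -np.sum(e)

def numeric_grad(x, y, w, b, h=1e-6):
    # central differences, one parameter at a time
    gw = np.zeros_like(w)
    for j in range(len(w)):
        d = np.zeros_like(w)
        d[j] = h
        gw[j] = (nll(x, y, w + d, b) - nll(x, y, w - d, b)) / (2 * h)
    gb = (nll(x, y, w, b + h) - nll(x, y, w, b - h)) / (2 * h)
    return gw, gb

rng = np.random.default_rng(0)
x = rng.random((10, 2))
y = (rng.random(10) > 0.5).astype(float)
w, b = rng.normal(size=2), 0.3

gw_a, gb_a = analytic_grad(x, y, w, b)
gw_n, gb_n = numeric_grad(x, y, w, b)
print(np.max(np.abs(gw_a - gw_n)), abs(gb_a - gb_n))
```

The differences should be tiny compared with the gradient entries; a sign error or a missing factor would show up immediately.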

(Newton's method, which the book also describes, will be added later.)
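Below is a minimal sketch of Newton's method for the same objective. It assumes the book-style notation `beta = (w; b)` and `x_hat = (x; 1)`. Each step uses the gradient `-sum_i x_hat_i (y_i - p_i)` and the Hessian `sum_i x_hat_i x_hat_i^T p_i (1 - p_i)`, then updates `beta <- beta - H^{-1} g`. The function name `newton_logistic`, the iteration cap and the tolerance are illustrative choices, not from this post. The data are the same watermelon 3.0α samples used in the code below.

```python
import numpy as np

def newton_logistic(X, y, max_iter=50, tol=1e-10):
    """Fit logistic regression by Newton's method; returns (w, b).

    Assumes the classes are not linearly separable; otherwise the
    maximum-likelihood parameters diverge and the Hessian becomes singular.
    """
    X_hat = np.hstack([X, np.ones((X.shape[0], 1))])  # rows are (x; 1)
    beta = np.zeros(X_hat.shape[1])                    # beta = (w; b)
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-(X_hat @ beta)))      # p(y=1 | x_i)
        grad = -(X_hat.T @ (y - p))                    # first derivative
        hess = (X_hat * (p * (1 - p))[:, None]).T @ X_hat  # second derivative
        delta = np.linalg.solve(hess, grad)
        beta -= delta
        if np.linalg.norm(delta) < tol:
            break
    return beta[:-1], beta[-1]

# Watermelon dataset 3.0α: (density, sugar content), 8 positives then 9 negatives
X = np.array([[0.697, 0.460], [0.774, 0.376], [0.634, 0.264], [0.608, 0.318],
              [0.556, 0.215], [0.403, 0.237], [0.481, 0.149], [0.437, 0.211],
              [0.666, 0.091], [0.243, 0.267], [0.245, 0.057], [0.343, 0.099],
              [0.639, 0.161], [0.657, 0.198], [0.360, 0.370], [0.593, 0.042],
              [0.719, 0.103]])
y = np.array([1] * 8 + [0] * 9, dtype=float)

w, b = newton_logistic(X, y)
p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
print('w =', w, 'b =', b)
```

Newton's method typically needs only a handful of iterations here. Each step solves a small linear system, whereas gradient descent needs many cheap steps.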

Python:

```python
# Imports
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Data: watermelon dataset 3.0α (density, sugar content); label 1 = good melon, 0 = bad melon
x = [[0.697, 0.460], [0.774, 0.376], [0.634, 0.264], [0.608, 0.318], [0.556, 0.215],
     [0.403, 0.237], [0.481, 0.149], [0.437, 0.211], [0.666, 0.091], [0.243, 0.267],
     [0.245, 0.057], [0.343, 0.099], [0.639, 0.161], [0.657, 0.198], [0.360, 0.370],
     [0.593, 0.042], [0.719, 0.103]]
y = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

# Reorder: alternate positives (indices 0-7) with negatives (8-15),
# then append the remaining negative (16): 0, 8, 1, 9, ..., 7, 15, 16
n = len(x) // 2
order = [k for pair in zip(range(n), range(n, 2 * n)) for k in pair] + list(range(2 * n, len(x)))
x1 = [x[k] for k in order]
y1 = [y[k] for k in order]

# Show the reordered data as a table
data_tt = pd.DataFrame([row + [label] for row, label in zip(x1, y1)],
                       columns=['x1', 'x2', 'y'])
print(data_tt)

# Plain NumPy arrays for training, so all arithmetic below is array math
data_x = np.array(x1)
data_y = np.array(y1, dtype=float)

# Split: first 80% for training, remaining 20% for testing
def split_data(data_x, data_y, test_rate=0.2):
    cnt = int(len(data_y) * (1 - test_rate))
    return data_x[:cnt], data_y[:cnt], data_x[cnt:], data_y[cnt:]

train_x, train_y, test_x, test_y = split_data(data_x, data_y)

# Training: initialize w and b to zero
data_dim = train_x.shape[1]
w = np.zeros((data_dim,))
b = np.zeros((1,))

def _sigmoid(z):
    # clip away from exactly 0 and 1 so log(p) and log(1 - p) stay finite
    return np.clip(1 / (1 + np.exp(-z)), 1e-8, 1 - 1e-8)

def _f(x, w, b):
    # predicted probability p(y = 1 | x) = sigmoid(x . w + b)
    return _sigmoid(np.dot(x, w) + b)

def get_gradient(x, y, w, b):
    # gradient of the negative log-likelihood, summed over samples
    e_y = y - _f(x, w, b)
    w_grad = -np.sum(e_y * x.T, axis=1)
    b_grad = -np.sum(e_y)
    return w_grad, b_grad

def _shuffle(x, y):
    # random permutation of the samples (for mini-batch training; not used below)
    randomize = np.arange(len(x))
    np.random.shuffle(randomize)
    return x[randomize], y[randomize]

def _acc(y_pred, y):
    # for 0/1 labels, 1 - mean|y_pred - y| is the fraction classified correctly
    return 1 - np.mean(np.abs(y_pred - y))

max_iter = 60000
learning_rate = 0.15
step = 1
train_acc = []
test_acc = []

for i in range(max_iter):
    w_grad, b_grad = get_gradient(train_x, train_y, w, b)
    # full-batch step with a step size that decays as learning_rate / sqrt(step)
    w -= learning_rate / np.sqrt(step) * w_grad
    b -= learning_rate / np.sqrt(step) * b_grad
    step += 1

    # threshold the probabilities at 0.5 and record accuracy on both sets
    train_pred = np.round(_f(train_x, w, b))
    test_pred = np.round(_f(test_x, w, b))
    train_acc.append(_acc(train_pred, train_y))
    test_acc.append(_acc(test_pred, test_y))

print('Train_acc={}'.format(train_acc[-1]))
print('Test_acc={}'.format(test_acc[-1]))
print('w = {}, b = {}'.format(w, b))

# Accuracy curves
plt.plot(train_acc)
plt.plot(test_acc)
plt.title('Accuracy')
plt.legend(['train', 'test'])
plt.savefig('acc.png')
plt.show()
```
