1. Data

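Download the cat and dog images from Kaggle: 12,500 of each are used as the training set, and another 12,500 images are downloaded as the test set. This is a binary classification problem, so cats can be labeled 0 and dogs 1.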
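The labeling rule can be derived from the file names. The sketch below assumes the Kaggle naming scheme `cat.<id>.jpg` / `dog.<id>.jpg`, which is what `get_files` relies on when it splits the name on `.`. The helper `label_from_filename` is hypothetical, and unlike `get_files` (which treats every non-cat file as a dog) it rejects unexpected names.

```python
import os


def label_from_filename(path):
    """Return 0 for a cat image and 1 for a dog image, based on the file-name prefix."""
    name = os.path.basename(path)
    prefix = name.split('.')[0]
    if prefix == 'cat':
        return 0
    if prefix == 'dog':
        return 1
    # Hypothetical stricter behavior: refuse files that are neither cats nor dogs.
    raise ValueError('unexpected file name: %s' % name)


print(label_from_filename('cat.0.jpg'))      # 0
print(label_from_filename('dog.12499.jpg'))  # 1
```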

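2. Steps

(1). Generate a list of image paths and a list of the corresponding labels.

(2). Use these lists to create a TensorFlow queue.

(3). Read from the queue with one of TensorFlow's readers.

(4). Decode the data with the TensorFlow decoder that matches its format (JPEG here).

(5). Group the decoded data into batches.

(6). Feed the batches into the computation graph.

(7). Train.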
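Steps (1), (2) and (5) can be sketched in plain Python, without TensorFlow, to show the idea: keep paths and labels paired, shuffle them together, and cut them into fixed-size batches. The function `iterate_batches` is a hypothetical illustration, not the TensorFlow queue API. It drops the incomplete final batch of each epoch, whereas the queue used in `get_batch` (`tf.train.slice_input_producer` with its default `num_epochs=None`) cycles through the data indefinitely, so its batches can straddle epoch boundaries.

```python
import random


def iterate_batches(paths, labels, batch_size, num_epochs, seed=None):
    """Yield (path_batch, label_batch) pairs, reshuffling the data every epoch."""
    if len(paths) != len(labels):
        raise ValueError('paths and labels must have the same length')
    rng = random.Random(seed)
    pairs = list(zip(paths, labels))
    for epoch in range(num_epochs):
        rng.shuffle(pairs)  # shuffle the pairs so every label stays with its image
        # Only full batches are produced; leftover items at the end are skipped.
        for start in range(0, len(pairs) - batch_size + 1, batch_size):
            chunk = pairs[start:start + batch_size]
            yield [p for p, _ in chunk], [lab for _, lab in chunk]


paths = ['cat.0.jpg', 'cat.1.jpg', 'dog.0.jpg', 'dog.1.jpg']
labels = [0, 0, 1, 1]
for batch_paths, batch_labels in iterate_batches(paths, labels, batch_size=2, num_epochs=1, seed=0):
    print(batch_paths, batch_labels)
```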

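3. Epoch, Batch, and Iteration/Step

Epoch: one epoch is one complete pass over all of the training data. An epoch consists of n iterations (steps).

Batch: the number of images in each batch (the batch size). One iteration/step processes one batch and updates the model parameters once.

For example, with 5,000 images and a batch_size of 10, it takes 5000 / 10 = 500 steps to go through the data once, and that one complete pass is called an epoch.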
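The same arithmetic as a small sketch. The second call plugs in `MAX_STEP = 15000` and `BATCH_SIZE = 16` from training.py and assumes the training folder holds the full 25,000 Kaggle images (12,500 cats and 12,500 dogs); the helper names are hypothetical.

```python
import math


def steps_per_epoch(num_images, batch_size):
    """Number of steps needed to see every image once (a partial last batch still counts)."""
    return math.ceil(num_images / batch_size)


def epochs_covered(num_steps, batch_size, num_images):
    """How many passes over the data a given number of training steps amounts to."""
    return num_steps * batch_size / num_images


print(steps_per_epoch(5000, 10))         # 500, the example above
print(epochs_covered(15000, 16, 25000))  # 9.6
```

So the training run below sees each image roughly ten times.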

4. input_data.py: reads the data and generates batches.

```python
import os

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (queue runners, tf.Session)

img_width = 208
img_height = 208

train_dir = 'D:/software/mycodes/python3/524/image/train/train/'


def get_files(file_dir):
    """Return shuffled lists of image paths and labels (cat = 0, dog = 1)."""
    cats, label_cats = [], []
    dogs, label_dogs = [], []
    for file in os.listdir(file_dir):
        name = file.split(sep='.')
        if name[0] == 'cat':
            cats.append(file_dir + file)
            label_cats.append(0)
        else:
            dogs.append(file_dir + file)
            label_dogs.append(1)
    print('There are %d cats\nThere are %d dogs' % (len(cats), len(dogs)))

    image_list = np.hstack((cats, dogs))
    label_list = np.hstack((label_cats, label_dogs))

    # Shuffle images and labels together so each label stays with its image.
    temp = np.array([image_list, label_list])
    temp = temp.transpose()
    np.random.shuffle(temp)

    image_list = list(temp[:, 0])
    label_list = list(temp[:, 1])
    # np.array turned the labels into strings above; convert them back to ints.
    label_list = [int(i) for i in label_list]
    return image_list, label_list


def get_batch(image, label, image_W, image_H, batch_size, capacity):
    """Build a queue-based input pipeline that yields batches of images and labels."""
    image = tf.cast(image, tf.string)
    label = tf.cast(label, tf.int32)

    # Queue of (path, label) pairs; by default it shuffles and cycles forever.
    input_queue = tf.train.slice_input_producer([image, label])

    label = input_queue[1]
    image_contents = tf.read_file(input_queue[0])
    image = tf.image.decode_jpeg(image_contents, channels=3)

    # Centre-crop or zero-pad to a fixed size (argument order: height, then width).
    image = tf.image.resize_image_with_crop_or_pad(image, image_H, image_W)
    image = tf.image.per_image_standardization(image)

    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,
                                              capacity=capacity)
    label_batch = tf.reshape(label_batch, [batch_size])
    return image_batch, label_batch
```

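5. Test the batches: read one batch and display its images to check the input pipeline (save this as a separate script next to input_data.py).

```python
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

from input_data import get_files, get_batch

BATCH_SIZE = 2
CAPACITY = 256
IMG_W = 208
IMG_H = 208

train_dir = 'D:/software/mycodes/python3/524/image/train/train/'

image_list, label_list = get_files(train_dir)
image_batch, label_batch = get_batch(image_list, label_list, IMG_W, IMG_H, BATCH_SIZE, CAPACITY)

with tf.Session() as sess:
    i = 0
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)
    try:
        # Fetch a single batch (i < 1) and show every image in it.
        while not coord.should_stop() and i < 1:
            img, label = sess.run([image_batch, label_batch])
            for j in np.arange(BATCH_SIZE):
                print('label: %d' % label[j])
                # Standardized pixels are not in [0, 1], so imshow clips them; comment out
                # per_image_standardization in get_batch to see the raw images.
                plt.imshow(img[j, :, :, :])
                plt.show()
            i += 1
    except tf.errors.OutOfRangeError:
        print('done!')
    finally:
        coord.request_stop()
    coord.join(threads)
```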
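The two preprocessing calls in `get_batch` can be mimicked in NumPy to inspect what the network actually receives. `tf.image.resize_image_with_crop_or_pad` centre-crops images larger than the target and zero-pads smaller ones (for photos larger than 208 x 208 it keeps only the central region rather than scaling the whole picture down), and `tf.image.per_image_standardization` is documented as computing `(x - mean) / max(stddev, 1/sqrt(N))`, where N is the number of elements in the image. The helpers `crop_or_pad` and `standardize` are hypothetical approximations, and the `(size - target) // 2` offsets are an assumption about how TensorFlow centres the window. Any image fed to the trained model at test time should go through the same standardization.

```python
import numpy as np


def crop_or_pad(image, target_h, target_w):
    """Centre-crop and/or zero-pad an H x W x C array to target_h x target_w."""
    h, w = image.shape[:2]
    # Crop: keep the central window along any dimension larger than the target.
    top = max((h - target_h) // 2, 0)
    left = max((w - target_w) // 2, 0)
    cropped = image[top:top + target_h, left:left + target_w]
    # Pad: place the (possibly cropped) image in the middle of a zero canvas.
    ch, cw = cropped.shape[:2]
    pad_top = (target_h - ch) // 2
    pad_left = (target_w - cw) // 2
    out = np.zeros((target_h, target_w) + image.shape[2:], dtype=image.dtype)
    out[pad_top:pad_top + ch, pad_left:pad_left + cw] = cropped
    return out


def standardize(image):
    """Per-image standardization: (x - mean) / max(std, 1 / sqrt(N))."""
    x = image.astype(np.float32)
    adjusted_std = max(x.std(), 1.0 / np.sqrt(x.size))
    return (x - x.mean()) / adjusted_std


img = np.random.randint(0, 256, size=(300, 150, 3), dtype=np.uint8)
out = standardize(crop_or_pad(img, 208, 208))
print(out.shape)  # (208, 208, 3)
```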

6. model.py: the network model
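In `inference`, the flattened size `dim` used by `local3` is read from the graph, but it can be worked out by hand. With `'SAME'` padding, TensorFlow's output size along each spatial dimension is `ceil(input_size / stride)`, so only `pooling1` (stride 2) shrinks the 208 x 208 input. The helper `same_out` is hypothetical; the layer list mirrors the strides in the code.

```python
import math


def same_out(size, stride):
    """Output length of a 'SAME'-padded convolution or pooling layer: ceil(size / stride)."""
    return math.ceil(size / stride)


size = 208
size = same_out(size, 1)  # conv1, stride 1
size = same_out(size, 2)  # pooling1, stride 2
size = same_out(size, 1)  # conv2, stride 1
size = same_out(size, 1)  # pooling2, stride 1 (no downsampling)
dim = size * size * 16    # conv2 produces 16 channels
print(size, dim)          # 104 173056
```

The `local3` weight matrix is therefore 173056 x 128, about 22 million parameters, which is most of the model.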

```python
import tensorflow as tf  # TensorFlow 1.x API


def inference(images, batch_size, n_classes):
    """Build the CNN and return unnormalized logits of shape [batch_size, n_classes]."""
    # conv1: 3x3 kernels, 3 input channels -> 16 feature maps
    with tf.variable_scope('conv1') as scope:
        weights = tf.get_variable('weights',
                                  shape=[3, 3, 3, 16],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[16],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(images, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(pre_activation, name=scope.name)

    # pool1 (3x3 window, stride 2: halves height and width), then local response normalization
    with tf.variable_scope('pooling1_lrn') as scope:
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding='SAME', name='pooling1')
        norm1 = tf.nn.lrn(pool1, depth_radius=4, bias=1.0, alpha=0.001 / 9.0,
                          beta=0.75, name='norm1')

    # conv2: 3x3 kernels, 16 -> 16 feature maps
    with tf.variable_scope('conv2') as scope:
        weights = tf.get_variable('weights',
                                  shape=[3, 3, 16, 16],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[16],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(norm1, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(pre_activation, name='conv2')

    # local response normalization, then pool2 (stride 1: spatial size unchanged)
    with tf.variable_scope('pooling2_lrn') as scope:
        norm2 = tf.nn.lrn(conv2, depth_radius=4, bias=1.0, alpha=0.001 / 9.0,
                          beta=0.75, name='norm2')
        pool2 = tf.nn.max_pool(norm2, ksize=[1, 3, 3, 1], strides=[1, 1, 1, 1],
                               padding='SAME', name='pooling2')

    # local3: flatten each example and apply a fully connected layer with 128 units
    with tf.variable_scope('local3') as scope:
        reshape = tf.reshape(pool2, shape=[batch_size, -1])
        dim = reshape.get_shape()[1].value
        weights = tf.get_variable('weights',
                                  shape=[dim, 128],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[128],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)

    # local4: second fully connected layer with 128 units
    with tf.variable_scope('local4') as scope:
        weights = tf.get_variable('weights',
                                  shape=[128, 128],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[128],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name='local4')

    # softmax_linear: linear output layer; the softmax itself is applied inside the loss
    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.get_variable('softmax_linear',
                                  shape=[128, n_classes],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[n_classes],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        softmax_linear = tf.add(tf.matmul(local4, weights), biases, name='softmax_linear')

    return softmax_linear


def losses(logits, labels):
    """Mean softmax cross-entropy over the batch; labels are integer class ids."""
    with tf.variable_scope('loss') as scope:
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
            logits=logits, labels=labels, name='xentropy_per_example')
        loss = tf.reduce_mean(cross_entropy, name='loss')
        tf.summary.scalar(scope.name + '/loss', loss)
    return loss


def training(loss, learning_rate):
    """Create the op that minimizes the loss and increments global_step."""
    with tf.name_scope('optimizer'):
        # The original Adadelta formulation corresponds to learning_rate=1.0; with a very
        # small value such as 1e-4 training may progress slowly (AdamOptimizer is a common alternative).
        optimizer = tf.train.AdadeltaOptimizer(learning_rate=learning_rate)
        global_step = tf.Variable(0, name='global_step', trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op


def evaluation(logits, labels):
    """Fraction (0 to 1) of the batch whose top-1 prediction matches the label."""
    with tf.variable_scope('accuracy') as scope:
        correct = tf.nn.in_top_k(logits, labels, 1)
        correct = tf.cast(correct, tf.float32)
        accuracy = tf.reduce_mean(correct)
        tf.summary.scalar(scope.name + '/accuracy', accuracy)
    return accuracy
```

7. training.py: the training script

```python
import os

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

import input_data
import model

N_CLASSES = 2      # cat and dog
IMG_W = 208        # images are cropped/padded to 208 x 208
IMG_H = 208
BATCH_SIZE = 16
CAPACITY = 2000    # maximum number of elements held in the batch queue
MAX_STEP = 15000
learning_rate = 0.0001


def run_training():
    train_dir = 'D:/software/mycodes/python3/524/image/train/train/'
    logs_train_dir = 'D:/software/mycodes/python3/524/logs/train/'

    train, train_label = input_data.get_files(train_dir)
    train_batch, train_label_batch = input_data.get_batch(train, train_label,
                                                          IMG_W, IMG_H,
                                                          BATCH_SIZE, CAPACITY)

    train_logits = model.inference(train_batch, BATCH_SIZE, N_CLASSES)
    train_loss = model.losses(train_logits, train_label_batch)
    train_op = model.training(train_loss, learning_rate)
    train_acc = model.evaluation(train_logits, train_label_batch)
    summary_op = tf.summary.merge_all()

    sess = tf.Session()
    train_writer = tf.summary.FileWriter(logs_train_dir, sess.graph)
    saver = tf.train.Saver()
    sess.run(tf.global_variables_initializer())

    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    try:
        for step in np.arange(MAX_STEP):
            if coord.should_stop():
                break
            _, tra_loss, tra_acc = sess.run([train_op, train_loss, train_acc])

            if step % 50 == 0:
                # tra_acc is a fraction in [0, 1]; multiply by 100 to print a percentage.
                print('step %d, train loss = %.2f, train accuracy = %.2f%%'
                      % (step, tra_loss, tra_acc * 100.0))
                # This extra run dequeues a new batch, so the summary describes a different batch.
                summary_str = sess.run(summary_op)
                train_writer.add_summary(summary_str, step)

            # Save a checkpoint every 2000 steps and at the final step.
            if step % 2000 == 0 or (step + 1) == MAX_STEP:
                checkpoint_path = os.path.join(logs_train_dir, 'model.ckpt')
                saver.save(sess, checkpoint_path, global_step=step)
    except tf.errors.OutOfRangeError:
        print('Done training -- epoch limit reached')
    finally:
        coord.request_stop()
    coord.join(threads)
    sess.close()


if __name__ == '__main__':
    run_training()
```
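The loss and accuracy printed during training can be reproduced in NumPy for a handful of hypothetical logits, which helps when checking whether the logged numbers are plausible. The sketch computes the per-example value of `-log(softmax(logits)[label])` that `tf.nn.sparse_softmax_cross_entropy_with_logits` returns, and a top-1 accuracy equivalent to `tf.nn.in_top_k(logits, labels, 1)` except for ties (which `in_top_k` counts as correct). The function names and numbers are illustrative only. Because `evaluation` returns a fraction between 0 and 1, it has to be multiplied by 100 before being printed as a percentage.

```python
import numpy as np


def sparse_softmax_cross_entropy(logits, labels):
    """Per-example -log softmax(logits)[label], computed in a numerically stable way."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]


def top1_accuracy(logits, labels):
    """Fraction of examples whose highest logit is the true class."""
    return float(np.mean(np.argmax(logits, axis=1) == labels))


logits = np.array([[2.0, 0.0], [0.5, 1.5], [1.0, 3.0], [0.2, 0.1]])
labels = np.array([0, 1, 0, 1])  # 0 = cat, 1 = dog
loss = sparse_softmax_cross_entropy(logits, labels).mean()
acc = top1_accuracy(logits, labels)
print('train loss=%.2f, train accuracy=%.2f%%' % (loss, acc * 100.0))
# train loss=0.83, train accuracy=50.00%
```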

8. Test script:

from PIL import Image

import matplotlib.pyplot as plt

import numpy as np

import input_data

import tensorflow as tf

import model

def get_one_image(train):

n=len(train)

ind=np.random.randint(0,n)

img_dir=train[ind]

image=Image.open(img_dir)

plt.imshow(image)

image=image.resize([208,208])

image=np.array(image)

return image

def evaluate_one_image():

train_dir='D:/software/mycodes/python3/524/image/train/train/'

train,train_label=input_data.get_files(train_dir)

image_array=get_one_image(train)

with tf.Graph().as_default():

BATCH_SIZE=1

N_CLASSES=2

image=tf.cast(image_array,tf.float32)

image=tf.reshape(image,[1,208,208,3])

logit=model.inference(image,BATCH_SIZE,N_CLASSES)

logit=tf.nn.softmax(logit)

x=tf.placeholder(tf.float32,shape=[208,208,3])

logs_train_dir='D:/software/mycodes/python3/524/logs/train/'

saver=tf.train.Saver()

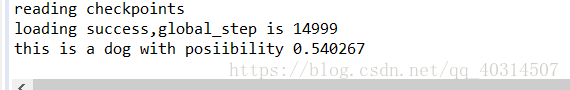

with tf.Session() as sess:

print("reading checkpoints")

ckpt=tf.train.get_checkpoint_state(logs_train_dir)

if ckpt and ckpt.model_checkpoint_path:

global_step=ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]

saver.restore(sess, ckpt.model_checkpoint_path)

print('loading success,global_step is %s'% global_step)

else:

print('no checkpoint file found')

prediction=sess.run(logit,feed_dict={x:image_array})

max_index=np.argmax(prediction)

if max_index==0:

print("this is a cat with possibility %.6f"%prediction[:,0])

else:

print("this is a dog with posiibility %.6f"%prediction[:,1])

evaluate_one_image()

evaluate_one_image()

evaluate_one_image()
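The test script restores whatever checkpoint `tf.train.get_checkpoint_state` reports as the latest one written by `run_training`. `saver.save(..., global_step=step)` appends `-<step>` to the file name, and `tf.train.Saver` keeps only the five most recent checkpoints by default (`max_to_keep=5`). The sketch below lists which steps are saved with `MAX_STEP = 15000` and parses the step back out of a path; the helper names and the example path are hypothetical.

```python
import os


def checkpoint_steps(max_step, every=2000):
    """Steps at which run_training saves: every `every` steps plus the final step."""
    return [s for s in range(max_step) if s % every == 0 or s + 1 == max_step]


def step_from_checkpoint_path(path):
    """Parse the global step from a checkpoint prefix such as .../model.ckpt-14999."""
    return int(os.path.basename(path).split('-')[-1])


steps = checkpoint_steps(15000)
print(steps[-5:])  # [8000, 10000, 12000, 14000, 14999], the ones left on disk by default
print(step_from_checkpoint_path('D:/logs/train/model.ckpt-14999'))  # 14999
```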
