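Siamese Network Experiment Log for Image Matching

Ⅰ. Introduction to Siamese Networks

Siamese networks are covered in Andrew Ng's deep learning course.

Notes written by other bloggers: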

https://www.cnblogs.com/xiaojianliu/articles/9938388.html

https://www.pytorchtutorial.com/pytorch-one-shot-learning/#Contrastive_Loss_function

https://www.cnblogs.com/king-lps/p/8342452.html
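The tutorial linked above is anchored at its contrastive loss section, and the experiments below report Euclidean distances per label. As a rough sketch only, assuming one common form of the contrastive loss, with label 0 for a similar pair and label 1 for a dissimilar pair, and a hypothetical margin of 2.0 that is not stated anywhere in this log, the per-pair loss could be written as:

```python
def contrastive_loss(distance, label, margin=2.0):
    """Contrastive loss for a single pair (illustrative form).

    distance: Euclidean distance between the two embeddings (>= 0).
    label:    0 for a similar pair, 1 for a dissimilar pair.
    margin:   hypothetical value; dissimilar pairs that are farther apart
              than this contribute no loss.
    """
    similar_term = (1 - label) * distance ** 2
    dissimilar_term = label * max(margin - distance, 0.0) ** 2
    return similar_term + dissimilar_term


if __name__ == "__main__":
    print(contrastive_loss(0.5, 0))  # similar pair: penalized by its distance
    print(contrastive_loss(0.5, 1))  # dissimilar pair that is too close
    print(contrastive_loss(3.0, 1))  # dissimilar pair beyond the margin
```

With this form, similar pairs are pulled together and dissimilar pairs are pushed apart only until they reach the margin.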

Ⅱ. Datasets

AT&T

Loading the dataset

    import random

    import PIL.ImageOps
    from PIL import Image


    class SiameseNetworkDataset():
        __epoch_size__ = 200

        def __init__(self, transform=None, should_invert=False):
            self.imageFolderDataset = []
            self.train_dataloader = []
            self.transform = transform
            self.should_invert = should_invert

        def __getitem__(self):
            '''
            Return one image pair. The label is 0 if the two images come
            from the same class, and 1 otherwise.
            '''
            img0_class = random.randint(0, 40 - 1)
            # we need to make sure approx 50% of images are in the same class
            should_get_same_class = random.randint(0, 1)
            if should_get_same_class:
                # two distinct images of the same class
                temp = random.sample(list(range(0, 10)), 2)
                img0_tuple = (self.imageFolderDataset[img0_class][temp[0]], img0_class)
                img1_tuple = (self.imageFolderDataset[img0_class][temp[1]], img0_class)
            else:
                img1_class = random.randint(0, 40 - 1)
                # make sure the two classes differ
                while img1_class == img0_class:
                    img1_class = random.randint(0, 40 - 1)
                img0_tuple = (self.imageFolderDataset[img0_class][random.randint(0, 9)], img0_class)
                img1_tuple = (self.imageFolderDataset[img1_class][random.randint(0, 9)], img1_class)

            img0 = Image.open(img0_tuple[0])
            img1 = Image.open(img1_tuple[0])

            if self.should_invert:
                # invert black and white
                img0 = PIL.ImageOps.invert(img0)
                img1 = PIL.ImageOps.invert(img1)

            # 0 = same class, 1 = different class, as stated in the docstring
            # (the original returned should_get_same_class, i.e. the opposite)
            return img0, img1, should_get_same_class ^ 1

        def att_face_data(self):
            ''' AT&T dataset: 40 classes, 10 images per class '''
            local = 'D:/MINE_FILE/dataSet/att_faces/'
            self.imageFolderDataset = []
            for i in range(1, 40 + 1):
                temp = []
                sub_floder = local + 's' + str(i) + '/'
                for j in range(1, 10 + 1):
                    temp.append(sub_floder + str(j) + '.pgm')
                self.imageFolderDataset.append(temp)

            # populate the dataset with sampled pairs
            for i in range(self.__epoch_size__):
                img0, img1, label = self.__getitem__()
                self.train_dataloader.append((img0, img1, label))

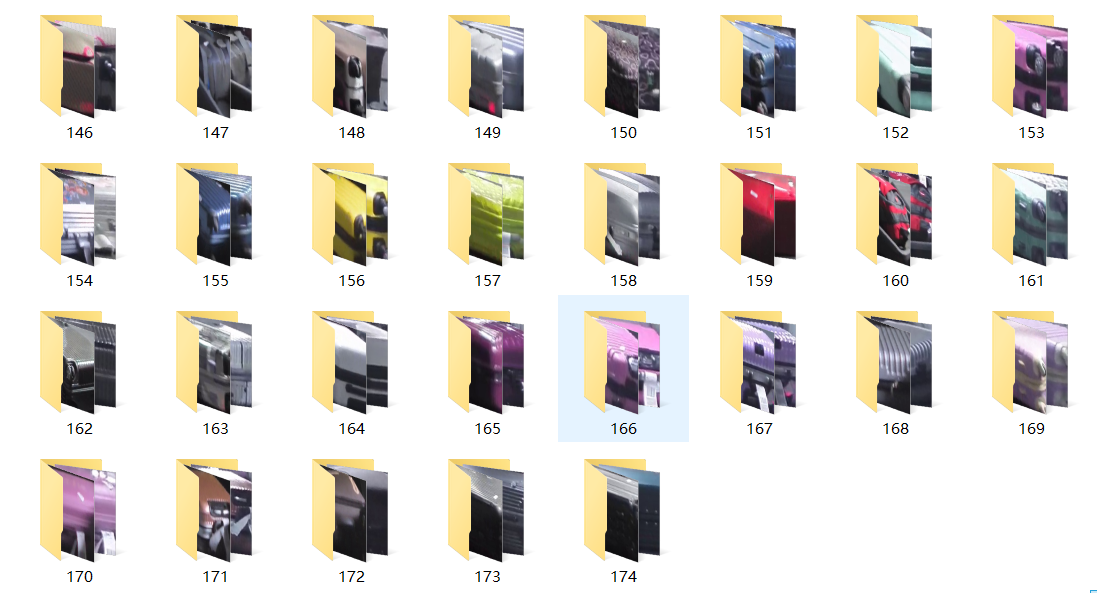

Matching images for baggage sorting

    import os
    import random

    import numpy as np
    import PIL.ImageOps
    import torch
    import torchvision.transforms as tfs
    from PIL import Image

    # Input size; not shown in the original snippet, set per experiment
    # (200 or 100 in the runs below).
    image_width = 200
    image_height = 200


    class SiameseNetworkDataset():
        __set_size__ = 90
        __batch_size__ = 10

        def __init__(self, set_size=90, batch_size=10, transform=None, should_invert=False):
            self.imageFolderDataset = []
            self.train_dataloader = []
            self.__set_size__ = set_size
            self.__batch_size__ = batch_size
            # note: the `transform` argument is ignored; a fixed pipeline is used
            self.transform = tfs.Compose([
                tfs.Resize((image_width, image_height)),
                # tfs.RandomHorizontalFlip(),
                # tfs.RandomCrop(128),
                tfs.ToTensor()
            ])
            self.should_invert = should_invert

        def __getitem__(self, class_num=40):
            '''
            Return one batch of image pairs with a single label: 0 if the
            images of each pair come from the same class, 1 otherwise
            (this matches the value returned below).
            TODO: classes in classed_pack may hold only 2-3 images each, so a
            fixed item_num parameter is meaningless and was removed from the
            signature.
            '''
            data0 = torch.empty(0, 3, image_width, image_height)
            data1 = torch.empty(0, 3, image_width, image_height)
            # drawn once per batch: every pair in a batch shares the same label
            should_get_same_class = random.randint(0, 1)
            for i in range(self.__batch_size__):
                img0_class = random.randint(0, class_num - 1)
                # we need to make sure approx 50% of images are in the same class
                if should_get_same_class:
                    item_num = len(self.imageFolderDataset[img0_class])
                    # temp = random.sample(list(range(0,item_num)), 2)
                    # slightly changed strategy: an image may be paired with itself
                    img0_tuple = (self.imageFolderDataset[img0_class][random.randint(0, item_num - 1)], img0_class)
                    img1_tuple = (self.imageFolderDataset[img0_class][random.randint(0, item_num - 1)], img0_class)
                else:
                    img1_class = random.randint(0, class_num - 1)
                    # make sure the two classes differ
                    while img1_class == img0_class:
                        img1_class = random.randint(0, class_num - 1)
                    item_num = len(self.imageFolderDataset[img0_class])
                    img0_tuple = (self.imageFolderDataset[img0_class][random.randint(0, item_num - 1)], img0_class)
                    item_num = len(self.imageFolderDataset[img1_class])
                    img1_tuple = (self.imageFolderDataset[img1_class][random.randint(0, item_num - 1)], img1_class)

                img0 = Image.open(img0_tuple[0])
                img1 = Image.open(img1_tuple[0])

                # optionally convert to mode "L" (8-bit pixels, black and white)
                # img0 = img0.convert("L")
                # img1 = img1.convert("L")
                # img0 = img0.resize((100,100),Image.BILINEAR)
                # img1 = img1.resize((100,100),Image.BILINEAR)

                if self.should_invert:
                    # invert black and white; off by default
                    img0 = PIL.ImageOps.invert(img0)
                    img1 = PIL.ImageOps.invert(img1)

                if self.transform is not None:
                    img0 = self.transform(img0)
                    img1 = self.transform(img1)

                # add a batch dimension and append to the batch tensors
                img0 = torch.unsqueeze(img0, dim=0).float()
                img1 = torch.unsqueeze(img1, dim=0).float()
                data0 = torch.cat((data0, img0), dim=0)
                data1 = torch.cat((data1, img1), dim=0)

            # return data0, data1, should_get_same_class
            # XXX:torch.from_numpy(np.array([int(img1_tuple[1]!=img0_tuple[1])],dtype=np.float32))
            return data0, data1, torch.from_numpy(np.array([should_get_same_class ^ 1], dtype=np.float32))

        def classed_pack(self):
            roots = [
                'image/classed_pack/2019-03-14 22-19-img/',
                'image/classed_pack/2019-03-14 16-30-img/',
                'image/classed_pack/2019-03-15 13-10-img/',
            ]
            self.imageFolderDataset = []
            # each sub-folder of each root folder is one class
            for root in roots:
                for i in os.listdir(root):
                    sub_dir = root + i + '/'
                    temp = [sub_dir + j for j in os.listdir(sub_dir)]
                    self.imageFolderDataset.append(temp)

            # populate the dataset with sampled batches
            for i in range(self.__set_size__):
                img0, img1, label = self.__getitem__(len(self.imageFolderDataset))
                self.train_dataloader.append((img0, img1, label))

Ⅲ. Experiment Log

A. Model 1

Dataset: AT&T

reference: https://www.pytorchtutorial.com/pytorch-one-shot-learning/#Contrastive_Loss_function

1. Experiment 1

Dataset: AT&T

    # Network structure
    SiameseNetwork(
      (cnn1): Sequential(
        (0): ReflectionPad2d((1, 1, 1, 1))
        (1): Conv2d(1, 4, kernel_size=(3, 3), stride=(1, 1))
        (2): ReLU(inplace=True)
        (3): BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (4): Dropout2d(p=0.2, inplace=False)
        (5): ReflectionPad2d((1, 1, 1, 1))
        (6): Conv2d(4, 8, kernel_size=(3, 3), stride=(1, 1))
        (7): ReLU(inplace=True)
        (8): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (9): Dropout2d(p=0.2, inplace=False)
        (10): ReflectionPad2d((1, 1, 1, 1))
        (11): Conv2d(8, 8, kernel_size=(3, 3), stride=(1, 1))
        (12): ReLU(inplace=True)
        (13): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (14): Dropout2d(p=0.2, inplace=False)
      )
      (fc1): Sequential(
        (0): Linear(in_features=80000, out_features=500, bias=True)
        (1): ReLU(inplace=True)
        (2): Linear(in_features=500, out_features=500, bias=True)
        (3): ReLU(inplace=True)
        (4): Linear(in_features=500, out_features=5, bias=True)
      )
    )

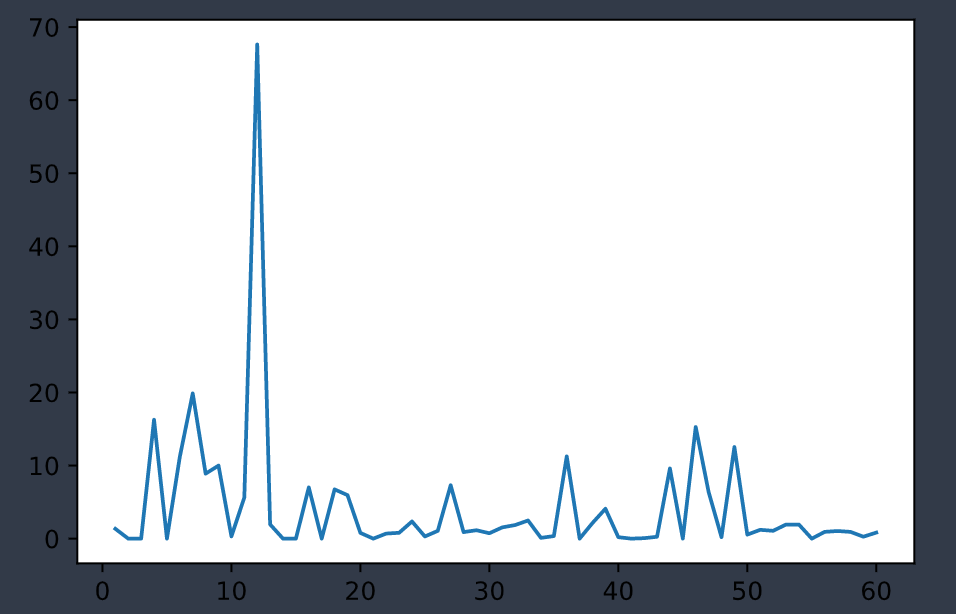

2. Experiment 2

reference: https://www.cnblogs.com/king-lps/p/8342452.html

Dataset: AT&T

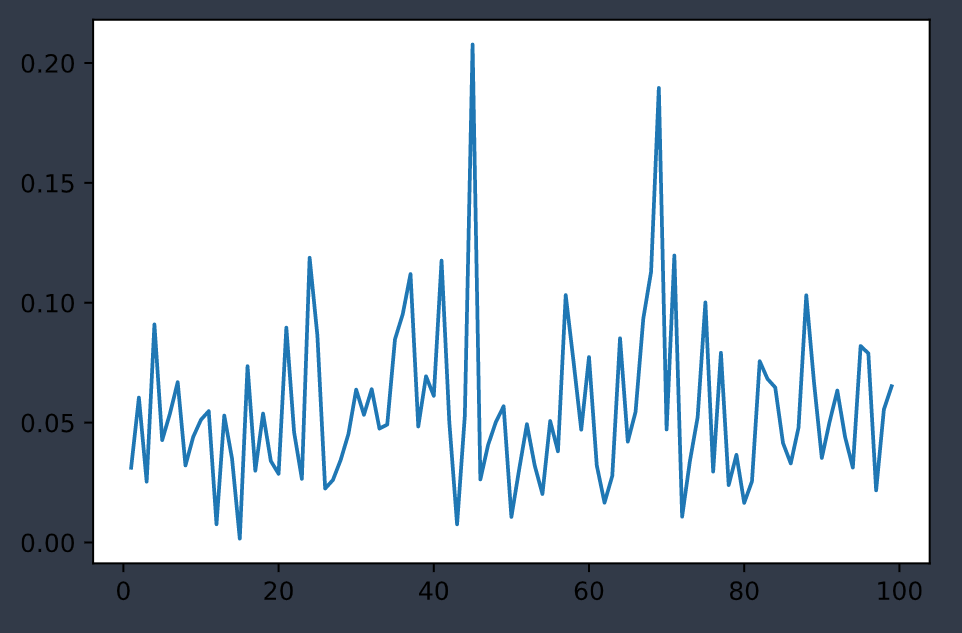

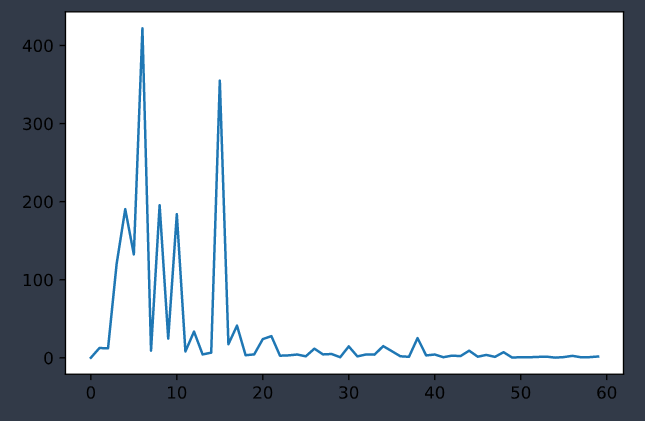

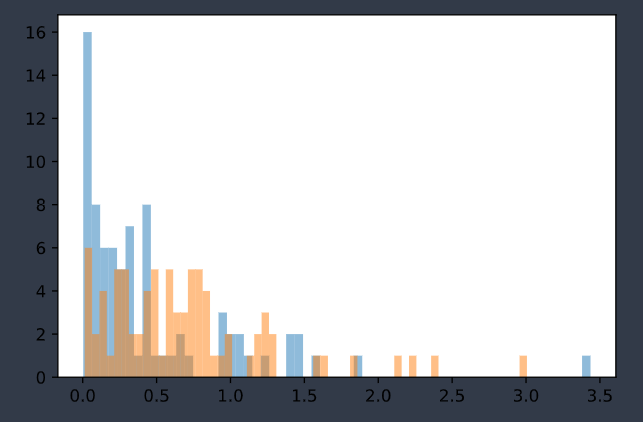

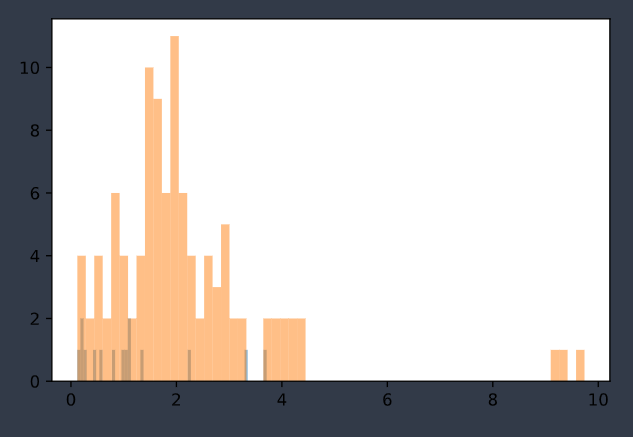

误差曲线为:

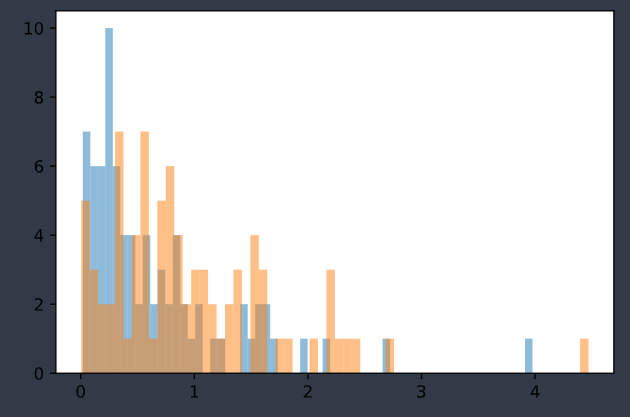

显示训练后的模型对同类和不同类图片的误差:

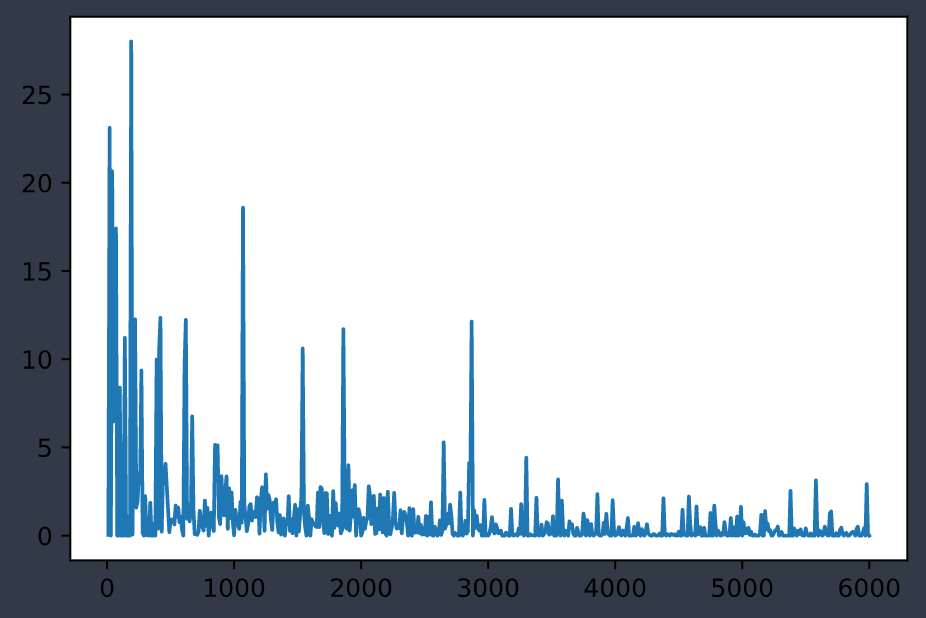

3. Experiment 3

Dataset: pack (self-made)

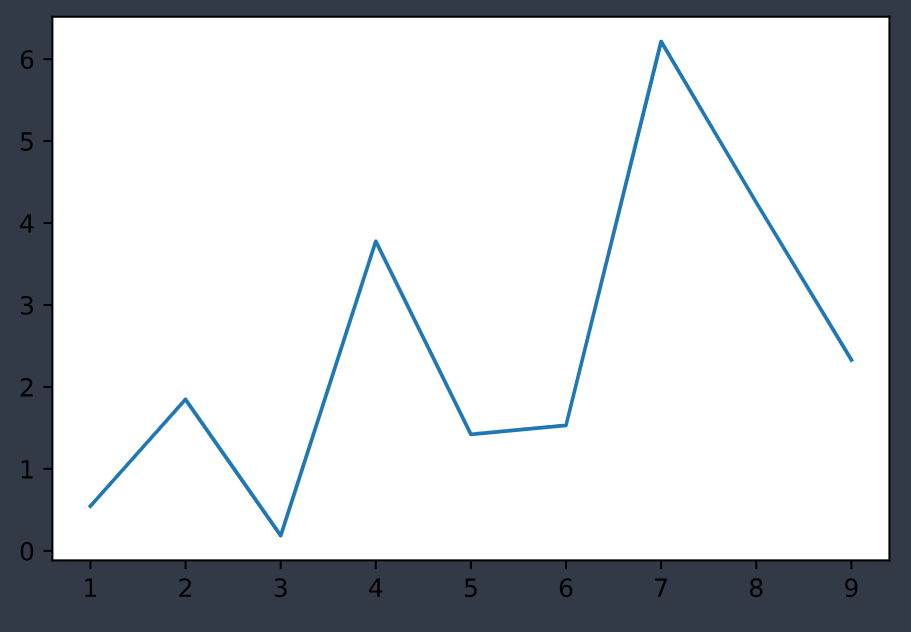

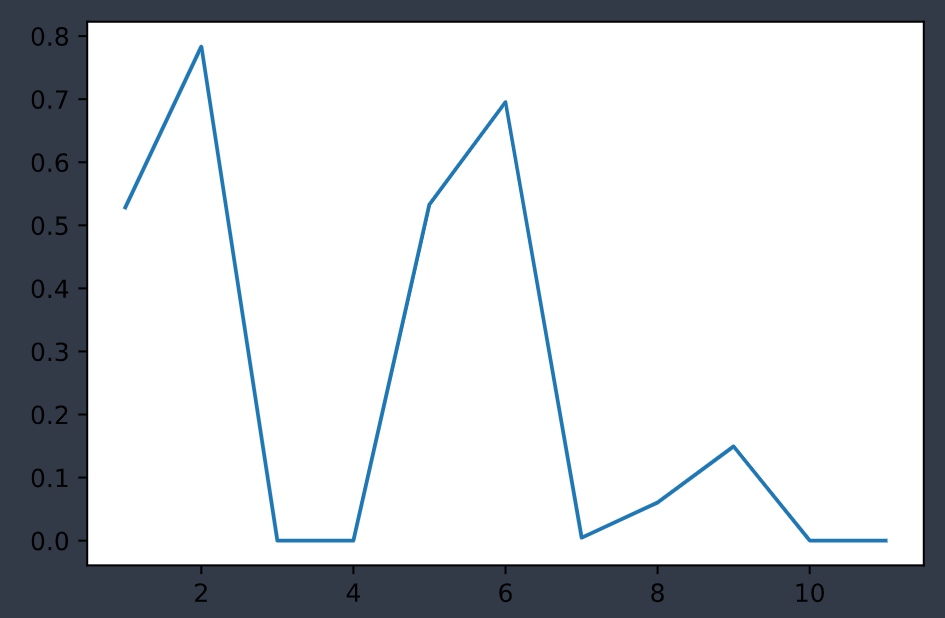

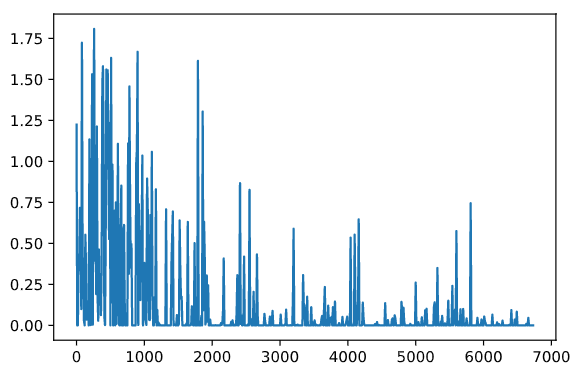

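The error curves computed with the model before training are shown below; the left and right plots are for label 0 and label 1, respectively.

The error curve is as follows:

B. Model 2

Dataset: baggage-sorting images

In the baggage-sorting dataset, each class has 2-3 images.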

    SiameseNetwork(
      (cnn1): Sequential(
        (0): ReflectionPad2d((1, 1, 1, 1))
        (1): Conv2d(3, 4, kernel_size=(3, 3), stride=(1, 1))
        (2): ReLU(inplace=True)
        (3): BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (4): Dropout2d(p=0.2, inplace=False)
        (5): ReflectionPad2d((1, 1, 1, 1))
        (6): Conv2d(4, 8, kernel_size=(3, 3), stride=(1, 1))
        (7): ReLU(inplace=True)
        (8): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (9): Dropout2d(p=0.2, inplace=False)
        (10): ReflectionPad2d((1, 1, 1, 1))
        (11): Conv2d(8, 8, kernel_size=(3, 3), stride=(1, 1))
        (12): ReLU(inplace=True)
        (13): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (14): Dropout2d(p=0.2, inplace=False)
      )
      (fc1): Sequential(
        (0): Linear(in_features=320000, out_features=512, bias=True)
        (1): ReLU(inplace=True)
        (2): Linear(in_features=512, out_features=512, bias=True)
        (3): ReLU(inplace=True)
        (4): Linear(in_features=512, out_features=5, bias=True)
      )
    )
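The `in_features=320000` of the first linear layer can be checked by hand. A `ReflectionPad2d` of 1 followed by a 3×3, stride-1 convolution keeps the spatial size unchanged, so the flattened feature count is the last conv layer's channel count times height times width. The helper names below are made up for illustration; the 200 × 200 input is the image size listed for the experiments that follow, and the 100 × 100 case is only an assumption that would be consistent with the `in_features=80000` of Model 1.

```python
def conv_output_size(size, kernel, padding, stride=1):
    """Spatial size after padding both sides by `padding` and convolving."""
    return (size + 2 * padding - kernel) // stride + 1


def flattened_features(height, width, out_channels=8, num_blocks=3):
    """Flattened size after `num_blocks` blocks of pad(1) + 3x3 conv."""
    for _ in range(num_blocks):
        height = conv_output_size(height, kernel=3, padding=1)
        width = conv_output_size(width, kernel=3, padding=1)
    return out_channels * height * width


if __name__ == "__main__":
    print(flattened_features(200, 200))  # 320000
    print(flattened_features(100, 100))  # 80000
```

Because the network has no pooling or striding, the first fully connected layer grows with the square of the image side, which is why it dominates the parameter count.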

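Experiment 1 (3.17)

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200

Only the test-set portion of the output is copied to this blog post: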

# temp = np.percentile(e_dis[0], (75,78,80,82,85,90,95,97), interpolation='midpoint')

# print('label 0 quartile:', temp)

# temp = np.percentile(e_dis[1], (3,5,10,15,18,20,22,25), interpolation='midpoint')

# print('label 1 quartile:', temp)

# 0,5,10,14

Epoch Test 0: label0 83->0.1620, label1 77->0.6271

label 0 quartile: [0.7158902 0.73214555 0.74398038 0.7569533 0.76356792 0.82454967 1.07923743 1.27540636]

label 1 quartile: [0.1817814 0.2308277 0.37762058 0.45172186 0.50063759 0.55775705 0.59324759 0.6341216 ]

error numbers for label 0 and 1: [5, 44]

Epoch Test 5: label0 83->0.2521, label1 77->0.5287

label 0 quartile: [0.88209683 0.93045935 0.94216055 0.97645894 0.99700648 1.13626337 1.37563056 1.46406162]

label 1 quartile: [0.39171842 0.39903614 0.43926243 0.47115058 0.49870707 0.57160473 0.60444131 0.66674411]

error numbers for label 0 and 1: [13, 38]

Epoch Test 10: label0 83->0.3732, label1 77->0.4769

label 0 quartile: [0.92170364 0.98256665 1.03442353 1.12832326 1.26045287 1.47625029 1.85940778 1.94125605]

label 1 quartile: [0.26481998 0.31101069 0.49074917 0.52606165 0.54815516 0.60736233 0.62225154 0.67621607]

error numbers for label 0 and 1: [19, 33]

Epoch Test 14: label0 83->0.3739, label1 77->0.4549

label 0 quartile: [0.9747808 1.08377302 1.12426609 1.23155147 1.36393613 1.47681826 1.64795393 1.77929193]

label 1 quartile: [0.23954196 0.2733479 0.47021253 0.64109564 0.65909216 0.69494125 0.71807566 0.76516104]

error numbers for label 0 and 1: [21, 30]

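More and more training pairs satisfy the condition, but the total number of misjudged pairs in the test set (160 pairs) stays almost unchanged, and the errors of the label-0 (similar) pairs become increasingly spread out.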
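How the "error numbers" in the log are computed is not shown in this post. One plausible reading, sketched below under that assumption, is a fixed distance threshold: a label-0 (similar) pair counts as an error when its distance exceeds the threshold, and a label-1 pair counts as an error when its distance does not. The function name, the sample data, and the threshold value are all hypothetical.

```python
def count_errors(distances, labels, threshold):
    """Return [errors_label0, errors_label1] for a distance threshold."""
    errors = [0, 0]
    for distance, label in zip(distances, labels):
        if label == 0 and distance > threshold:
            errors[0] += 1  # similar pair judged as different
        elif label == 1 and distance <= threshold:
            errors[1] += 1  # different pair judged as similar
    return errors


if __name__ == "__main__":
    # made-up distances: three similar pairs followed by three different pairs
    distances = [0.2, 0.9, 0.4, 1.5, 0.3, 0.7]
    labels = [0, 0, 0, 1, 1, 1]
    print(count_errors(distances, labels, threshold=0.6))
```

Under this reading, errors that shift from label 1 toward label 0 while their total stays flat would suggest that both distance distributions are moving in the same direction rather than apart.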

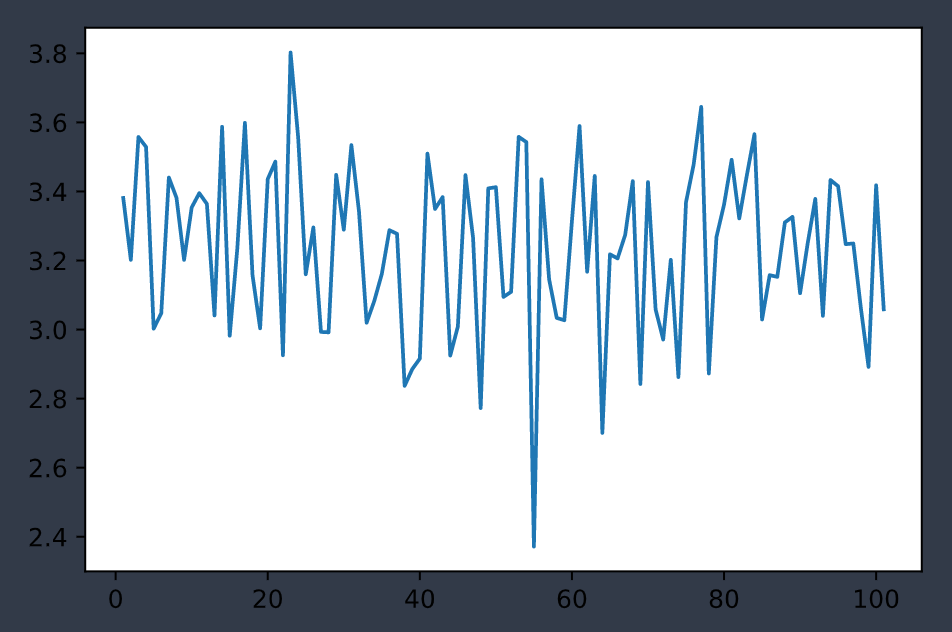

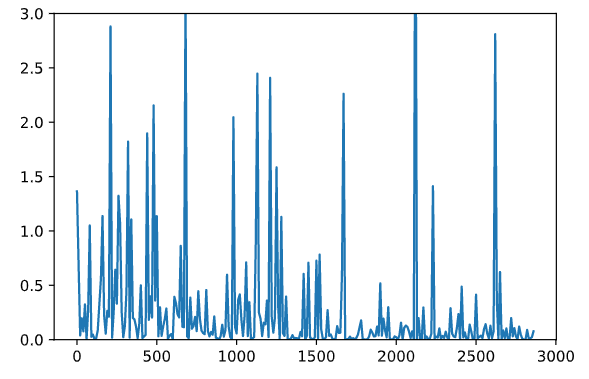

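The training-set error curves for label 0 and label 1 are as follows:

Experiment 2

Image set: 1000 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 100; image_height = 100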

# temp = np.percentile(e_dis[0], (75,78,80,82,85,90,95,97), interpolation='midpoint')

# print('label 0 quartile:', temp)

# temp = np.percentile(e_dis[1], (3,5,10,15,18,20,22,25), interpolation='midpoint')

# print('label 1 quartile:', temp)

# 0,10,20,30,50,80

Epoch Test 0: label0 91->1.5374, label1 109->0.7805

label 0 quartile: [1.72765619 1.82872415 1.86390436 2.12885189 2.36031544 3.11245394 3.97622979 4.65814257]

label 1 quartile: [7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 1.08779006e-01 1.48222767e-01 2.64381945e-01]

error numbers for label 0 and 1: [36, 56]

Epoch Test 10: label0 91->0.3374, label1 109->0.8303

label 0 quartile: [0.95802465 1.04538548 1.06486011 1.0855754 1.13269544 1.32654285 1.52294904 1.68308794]

label 1 quartile: [7.99999816e-06 1.26058787e-01 2.33688205e-01 3.27700123e-01 3.52225974e-01 3.58412251e-01 3.72223631e-01 4.17031437e-01]

error numbers for label 0 and 1: [23, 76]

Epoch Test 20: label0 91->0.4392, label1 109->0.8419

label 0 quartile: [1.13361007 1.1974858 1.21589446 1.22746861 1.26535058 1.42446542 1.65094739 1.86934656]

label 1 quartile: [7.99999816e-06 7.99999816e-06 2.34756984e-01 3.43695953e-01 3.81314784e-01 4.08363506e-01 4.18087393e-01 4.57673758e-01]

error numbers for label 0 and 1: [30, 77]

Epoch Test 30: label0 91->0.4715, label1 109->0.8054

label 0 quartile: [1.21624649 1.25916255 1.2650578 1.32205606 1.45206922 1.63812661 1.75634962 1.85559589]

label 1 quartile: [7.99999816e-06 1.31768096e-01 2.99624026e-01 3.58396113e-01 4.09164563e-01 4.11337093e-01 4.18671578e-01 4.34955269e-01]

error numbers for label 0 and 1: [30, 75]

Epoch Test 50: label0 91->0.4023, label1 109->0.8966

label 0 quartile: [0.99017602 1.0637424 1.12643278 1.15265942 1.23145729 1.54070365 1.76694596 1.85873866]

label 1 quartile: [0.0578028 0.21815401 0.29163927 0.36389756 0.39364646 0.39939454 0.40634869 0.43026939]

error numbers for label 0 and 1: [23, 89]

Epoch Test 80: label0 91->0.5454, label1 109->0.6308

label 0 quartile: [1.07125872 1.10748285 1.24689937 1.3042506 1.41496533 1.60847652 2.01995593 2.34613466]

label 1 quartile: [0.26057182 0.28916954 0.49482587 0.56373966 0.58854628 0.59898874 0.60584956 0.67787558]

error numbers for label 0 and 1: [27, 65]

As the number of training epochs increases, the results barely change.

Experiment 3 (fail)

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200

    # Model structure
    SiameseNetwork(
      (cnn1): Sequential(
        (0): ReflectionPad2d((2, 2, 2, 2))
        (1): Conv2d(3, 4, kernel_size=(5, 5), stride=(1, 1))
        (2): ReLU(inplace=True)
        (3): BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (4): Dropout2d(p=0.1, inplace=False)
        (5): ReflectionPad2d((1, 1, 1, 1))
        (6): Conv2d(4, 8, kernel_size=(3, 3), stride=(1, 1))
        (7): ReLU(inplace=True)
        (8): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (9): Dropout2d(p=0.1, inplace=False)
        (10): ReflectionPad2d((1, 1, 1, 1))
        (11): Conv2d(8, 8, kernel_size=(3, 3), stride=(1, 1))
        (12): ReLU(inplace=True)
        (13): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (14): Dropout2d(p=0.1, inplace=False)
      )
      (fc1): Sequential(
        (0): Linear(in_features=320000, out_features=512, bias=True)
        (1): ReLU(inplace=True)
        (2): Linear(in_features=512, out_features=256, bias=True)
        (3): ReLU(inplace=True)
        (4): Linear(in_features=256, out_features=64, bias=True)
      )
    )

Training results:

# temp = np.percentile(e_dis[0], (75,78,80,82,85,90,95,97), interpolation='midpoint')

# print('label 0 quartile:', temp)

# temp = np.percentile(e_dis[1], (3,5,10,15,18,20,22,25), interpolation='midpoint')

# print('label 1 quartile:', temp)

Epoch Test 0: label0 82->18.8394, label1 78->1.6166

label 0 quartile: [7.99999816e-06 7.99999816e-06 9.07898759e-02 1.08435822e+00 1.32852960e+00 3.38537025e+00 6.28398037e+00 1.55585589e+01]

label 1 quartile: [7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06]

error numbers for label 0 and 1: [15, 66]

Epoch Test 2: label0 82->1.0190, label1 78->1.8004

label 0 quartile: [7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 6.31472066e-01 2.42083299e+00]

label 1 quartile: [7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06 7.99999816e-06]

error numbers for label 0 and 1: [4, 72]

...

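The model fails to converge.

Experiment 4

After failed attempts at changing some conv kernel sizes from 3 to 5, enlarging the MLP output from 5, removing the random channel dropout, and shrinking the image size, I went back to the original model.

This time, to get closer to the real scenario, the training pairs are formed by matching images that are near each other in the sequence, with the neighbor chosen according to a normal distribution.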
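The pairing code for this experiment is not included in the post. As a rough sketch of what matching nearby images in a sequence with normally distributed probability could look like, the example below picks a partner index by adding a rounded Gaussian offset to the current index. `pick_neighbor`, `sigma`, and the rejection of zero or out-of-range offsets are all assumptions made for illustration.

```python
import random


def pick_neighbor(index, n_items, sigma=2.0, rng=random):
    """Pick a partner index near `index` using a Gaussian-distributed offset.

    Offsets of zero and positions outside [0, n_items) are rejected and
    redrawn, so the partner is always a different, valid index.
    """
    if n_items < 2:
        raise ValueError("need at least two items to form a pair")
    while True:
        offset = round(rng.gauss(0.0, sigma))
        partner = index + offset
        if offset != 0 and 0 <= partner < n_items:
            return partner


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the demo repeatable
    print([pick_neighbor(50, 100, sigma=2.0, rng=rng) for _ in range(10)])
```

A small `sigma` keeps partners close to the anchor image; a larger one makes the pairing approach uniform random sampling.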

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200; Epoch = 20

    SiameseNetwork(
      (cnn1): Sequential(
        (0): ReflectionPad2d((1, 1, 1, 1))
        (1): Conv2d(3, 4, kernel_size=(3, 3), stride=(1, 1))
        (2): ReLU(inplace=True)
        (3): BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (4): Dropout2d(p=0.2, inplace=False)
        (5): ReflectionPad2d((1, 1, 1, 1))
        (6): Conv2d(4, 8, kernel_size=(3, 3), stride=(1, 1))
        (7): ReLU(inplace=True)
        (8): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (9): Dropout2d(p=0.2, inplace=False)
        (10): ReflectionPad2d((1, 1, 1, 1))
        (11): Conv2d(8, 8, kernel_size=(3, 3), stride=(1, 1))
        (12): ReLU(inplace=True)
        (13): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (14): Dropout2d(p=0.2, inplace=False)
      )
      (fc1): Sequential(
        (0): Linear(in_features=320000, out_features=512, bias=True)
        (1): ReLU(inplace=True)
        (2): Linear(in_features=512, out_features=512, bias=True)
        (3): ReLU(inplace=True)
        (4): Linear(in_features=512, out_features=5, bias=True)
      )
    )

Training continues from the parameters of Experiment 1.

# temp = np.percentile(e_dis[0], (75,78,80,82,85,90,95,97), interpolation='midpoint')

# print('label 0 quartile:', temp)

# temp = np.percentile(e_dis[1], (3,5,10,15,18,20,22,25), interpolation='midpoint')

# print('label 1 quartile:', temp)

Epoch Test 0: label0 78->1.3743, label1 82->1.2640

label 0 quartile: [0.55888975 0.67027643 0.68802303 0.76952583 0.96229041 1.36388028 4.0104413 4.01044226]

label 1 quartile: [0.02888928 0.03946535 0.05059958 0.0807505 0.08576608 0.09473649 0.097671 0.10426903]

error numbers for label 0 and 1: [12, 68]

Epoch Test 5: label0 78->0.3444, label1 82->0.8430

label 0 quartile: [0.75159624 0.87311718 0.89756691 0.96464625 1.07016551 1.24671048 1.57037711 1.6506992 ]

label 1 quartile: [0.03501491 0.04280457 0.12707485 0.19185039 0.19620433 0.20249537 0.20828354 0.27016349]

error numbers for label 0 and 1: [14, 49]

Epoch Test 10: label0 78->1.1436, label1 82->0.6885

label 0 quartile: [1.30594879 1.45608526 1.51971132 1.63586009 1.74043304 1.88373029 2.64578724 2.9188813 ]

label 1 quartile: [0.08070351 0.12424976 0.17298107 0.22679571 0.23469673 0.2476977 0.25094841 0.308386 ]

error numbers for label 0 and 1: [26, 40]

Epoch Test 15: label0 78->0.8272, label1 82->0.5488

label 0 quartile: [1.26610523 1.29454911 1.31922746 1.49084806 1.68135875 1.96677649 2.36984169 2.58422446]

label 1 quartile: [0.06601212 0.12309504 0.27127041 0.3877487 0.47448578 0.5336878 0.54738459 0.61449504]

error numbers for label 0 and 1: [28, 33]

Epoch Test 19: label0 78->0.8046, label1 82->0.5215

label 0 quartile: [1.29342777 1.34785849 1.36026883 1.47410375 1.73619092 2.0540995 2.50330627 2.92953098]

label 1 quartile: [0.09626893 0.18224674 0.31161426 0.4375957 0.53488852 0.57985544 0.58656853 0.62558031]

error numbers for label 0 and 1: [27, 33]

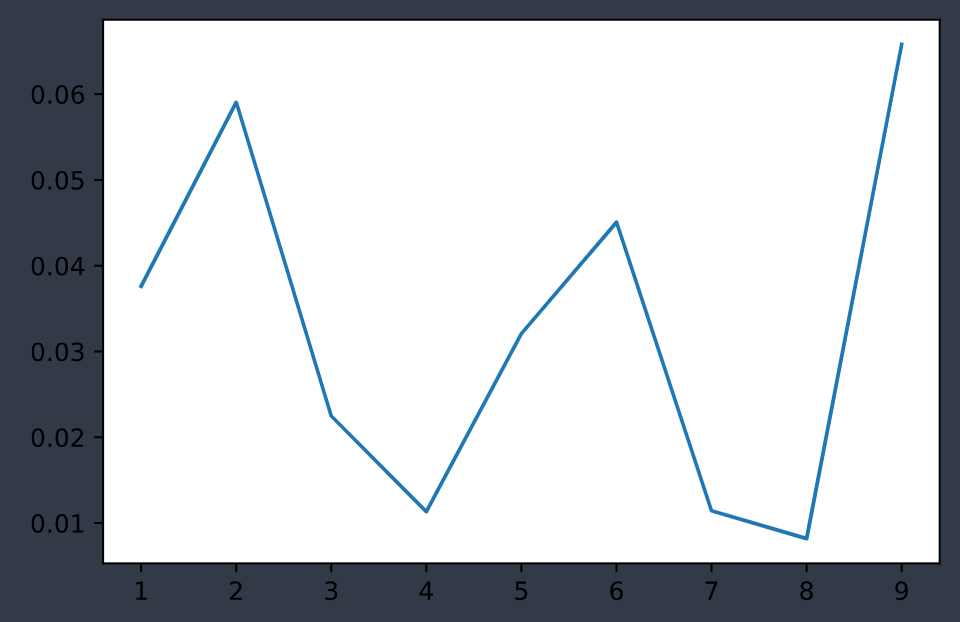

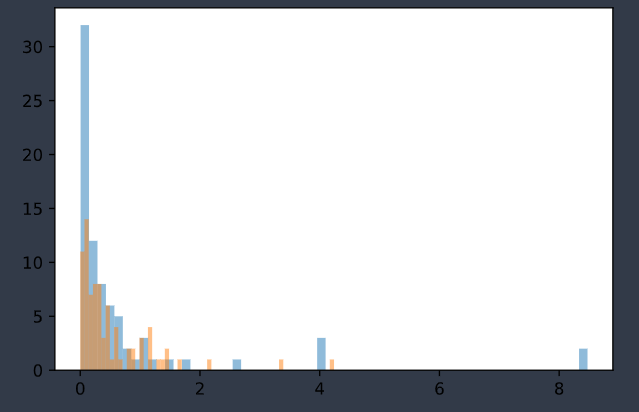

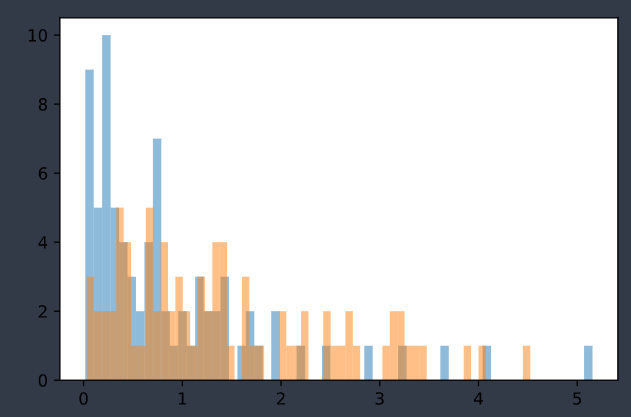

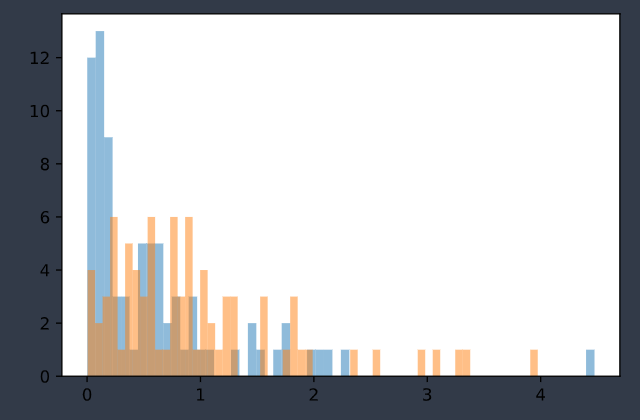

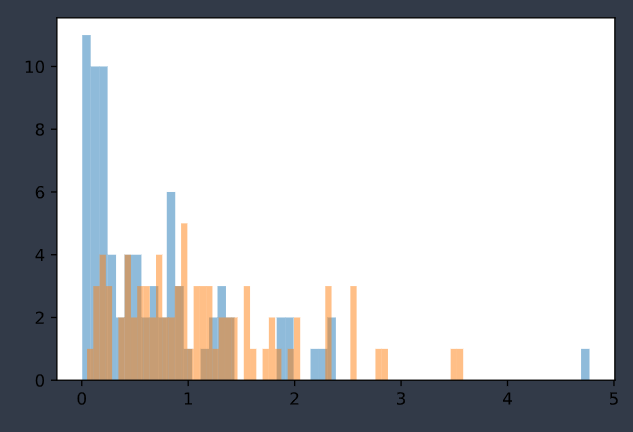

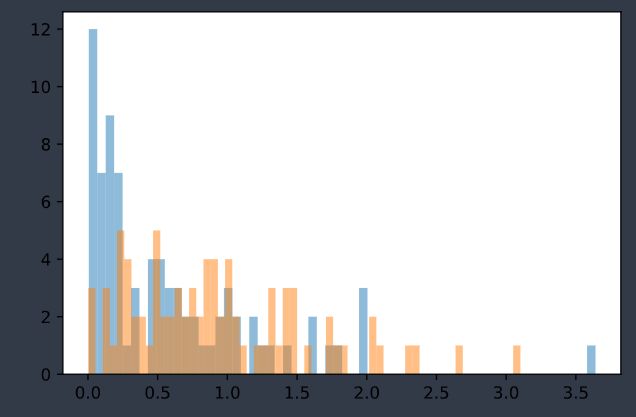

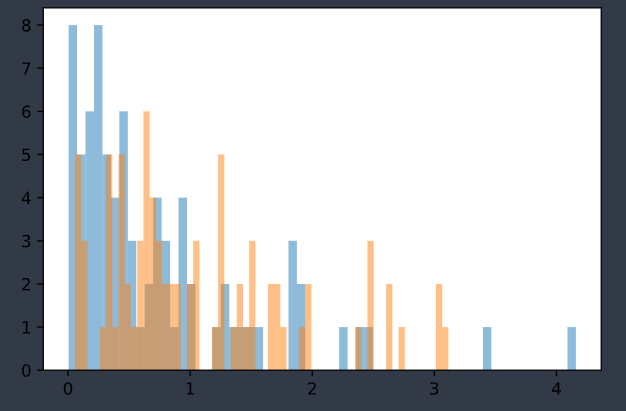

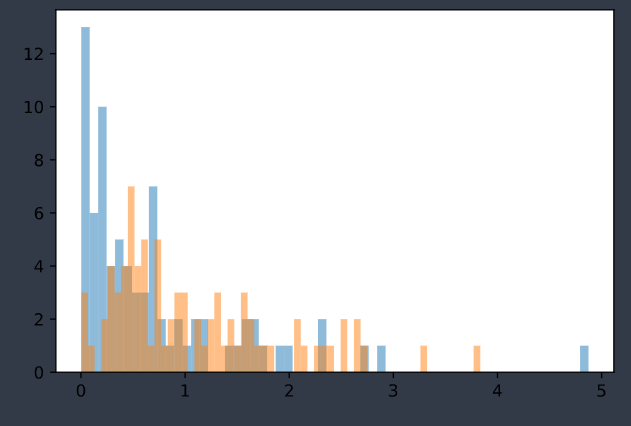

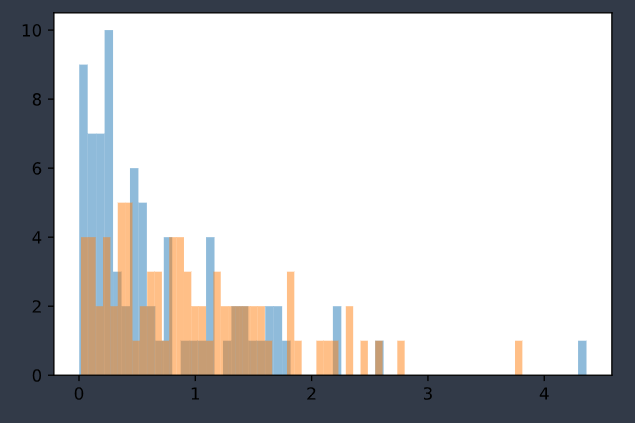

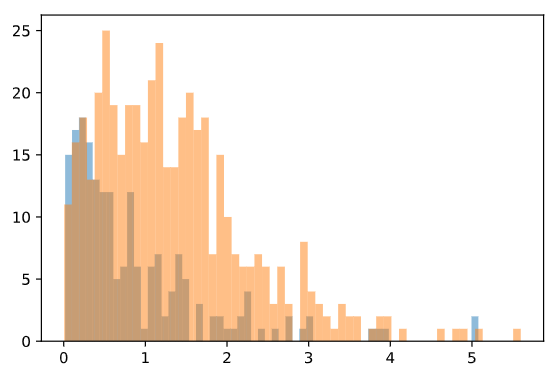

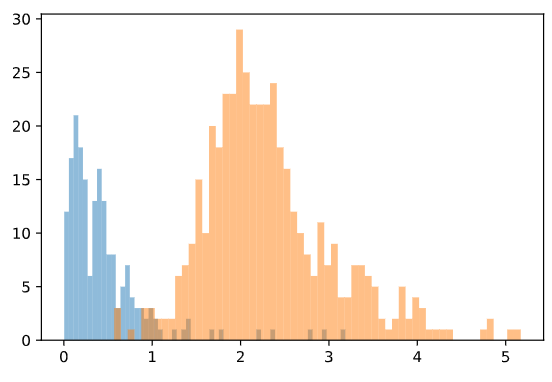

Below are the distributions of $distance_{euclidean}$ over the test-set samples after selected epochs. The blue and orange distributions are the $distance_{euclidean}$ distributions of label 0 and label 1 in the test set, respectively.


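The two distributions seem to be drifting apart, but they are not effectively separated. Perhaps the dataset needs to change: it should not be regenerated randomly on every run.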
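One way to avoid regenerating the pairs on every run is to build the pair list once from a seeded random generator and reuse it. The sketch below is an assumption about how that could look, not the code used in these experiments; `make_fixed_pairs`, its arguments, and the seed are hypothetical, and it keeps the label convention 0 = same class, 1 = different class.

```python
import random


def make_fixed_pairs(class_sizes, n_pairs, seed=0):
    """Build a reproducible list of ((class, item), (class, item), label).

    class_sizes[c] is the number of images in class c. The label is 0 when
    both images come from the same class and 1 otherwise.
    """
    if len(class_sizes) < 2:
        raise ValueError("need at least two classes")
    rng = random.Random(seed)  # private generator, so results are repeatable
    pairs = []
    for _ in range(n_pairs):
        class0 = rng.randrange(len(class_sizes))
        if rng.random() < 0.5:
            class1 = class0  # same class, label 0
        else:
            # draw the second class from the remaining classes only
            class1 = rng.choice([c for c in range(len(class_sizes)) if c != class0])
        item0 = rng.randrange(class_sizes[class0])
        item1 = rng.randrange(class_sizes[class1])
        pairs.append(((class0, item0), (class1, item1), int(class0 != class1)))
    return pairs


if __name__ == "__main__":
    pairs = make_fixed_pairs([3, 2, 3, 2], n_pairs=5, seed=1)
    for pair in pairs:
        print(pair)
```

Calling the function again with the same arguments and seed yields the same pairs, so a later run can continue training on exactly the data used before.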

The model is saved as net032001_normal_params.

Experiment 5


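This experiment continues from the net032001_normal_params parameters. The dataset, however, is still randomly generated.

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200; Epoch = 30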

# temp = np.percentile(e_dis[0], (75,78,80,82,85,90,95,97), interpolation='midpoint')

# print('label 0 quartile:', temp)

# temp = np.percentile(e_dis[1], (3,5,10,15,18,20,22,25), interpolation='midpoint')

# print('label 1 quartile:', temp)

error numbers for train label 0 and 1: [67, 227]

Epoch Test 0: label0 79->0.2560, label1 81->0.8807

label 0 quartile: [0.60331309 0.67048407 0.70267773 0.75424519 0.93925929 1.10876143 1.61191624 1.7277019 ]

label 1 quartile: [0.05190407 0.10190329 0.12947555 0.19093691 0.24671859 0.2600964 0.31019682 0.33293331]

error numbers for label 0 and 1: [11, 57]

error numbers for train label 0 and 1: [14, 11]

Epoch Test 10: label0 79->0.4022, label1 81->0.8745

label 0 quartile: [0.79908943 0.92039618 1.05769122 1.10196811 1.17286581 1.29391021 1.78889161 2.18571639]

label 1 quartile: [0.03328206 0.04366843 0.12533523 0.21696787 0.267791 0.33897641 0.35664593 0.39555448]

error numbers for label 0 and 1: [18, 53]

error numbers for train label 0 and 1: [12, 4]

Epoch Test 20: label0 79->0.3793, label1 81->0.8402

label 0 quartile: [0.74877748 0.81421864 0.9199762 1.01927397 1.1587559 1.41632444 1.7376833 1.79076648]

label 1 quartile: [0.02023832 0.03976901 0.0858559 0.18339764 0.1933112 0.25657022 0.33203998 0.37356466]

error numbers for label 0 and 1: [15, 52]

error numbers for train label 0 and 1: [12, 13]

Epoch Test 27: label0 79->0.4369, label1 81->0.8026

label 0 quartile: [0.77139834 0.81222135 0.92736983 0.94037005 1.18054146 1.6120491 2.01404649 2.12090063]

label 1 quartile: [0.04182272 0.09833772 0.18364981 0.24191757 0.28822963 0.3422049 0.36599465 0.40026259]

error numbers for label 0 and 1: [14, 52]

error numbers for train label 0 and 1: [11, 4]

Epoch Test 28: label0 79->0.2590, label1 81->0.9194

label 0 quartile: [0.5558812 0.63718623 0.70639083 0.72341552 0.98395523 1.26406902 1.53598577 1.58981997]

label 1 quartile: [0.06412696 0.08869804 0.20952427 0.25327516 0.26638275 0.26753896 0.28075959 0.32243639]

error numbers for label 0 and 1: [12, 62]

error numbers for train label 0 and 1: [7, 5]

Epoch Test 29: label0 79->0.4499, label1 81->0.7754

label 0 quartile: [0.80306715 0.93761033 1.01173496 1.07229686 1.37811863 1.6002214 2.04968387 2.13516295]

label 1 quartile: [0.07622917 0.0914327 0.15927666 0.21650432 0.25096866 0.31578162 0.33560939 0.44944227]

error numbers for label 0 and 1: [17, 47]

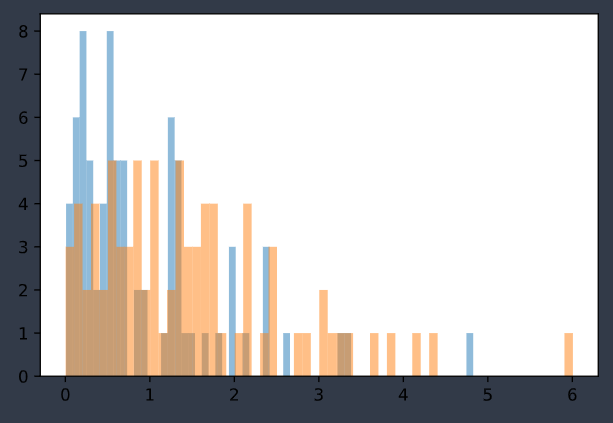

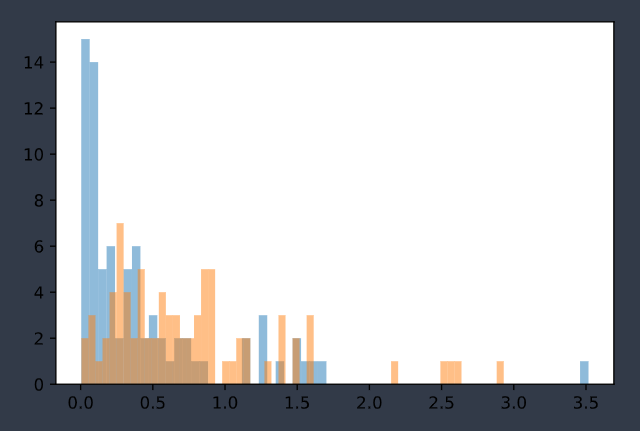

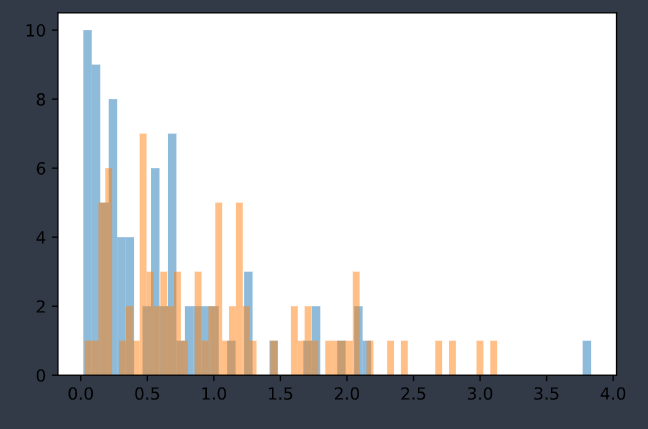

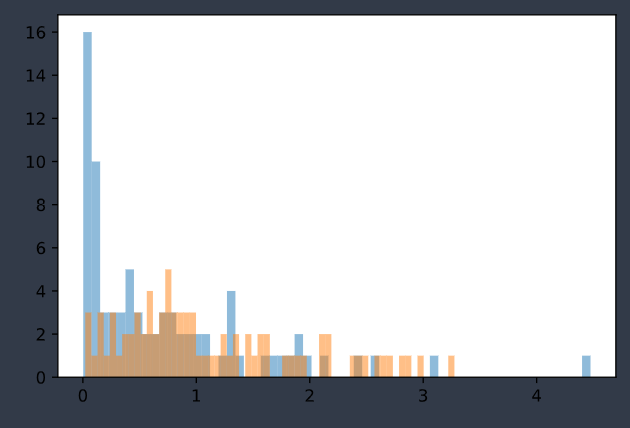

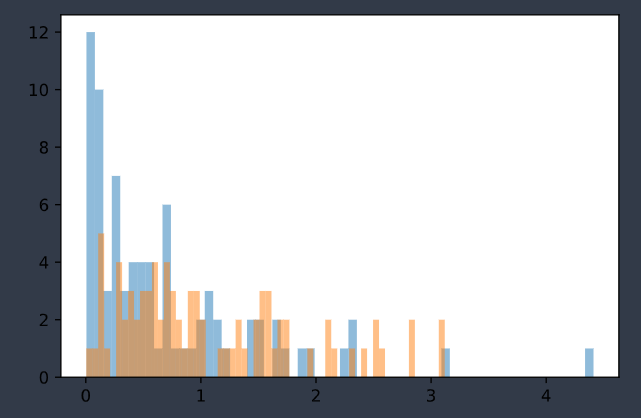

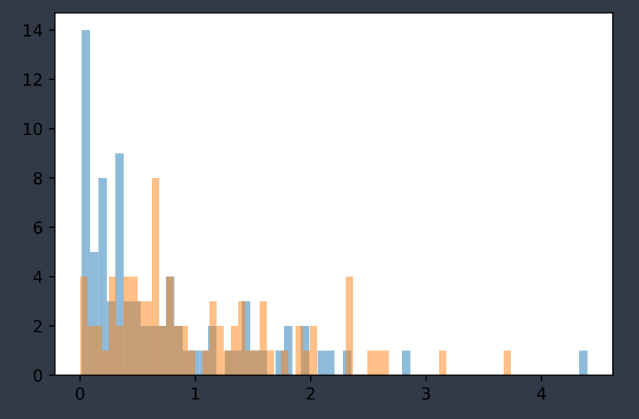

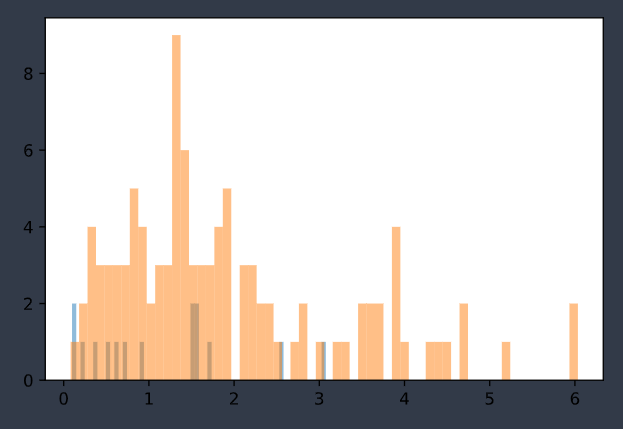

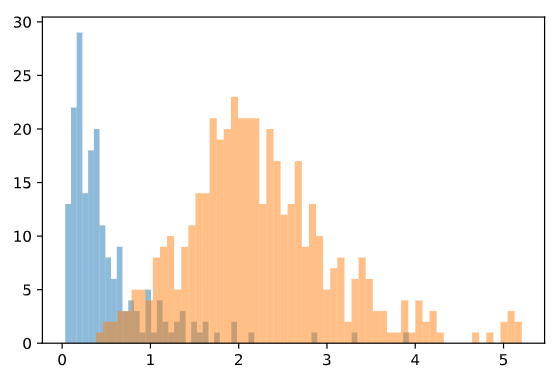


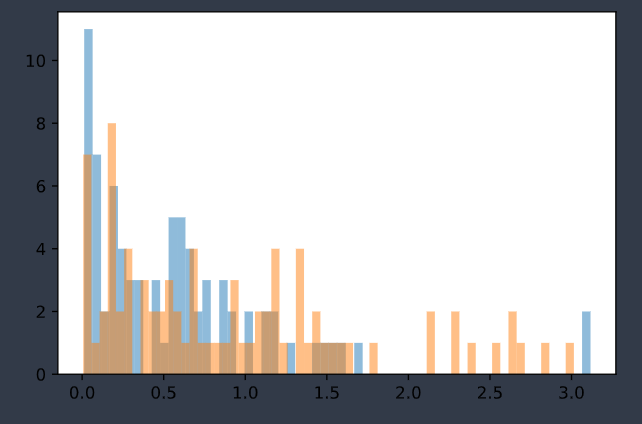

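The results are not very satisfactory; label 0 and label 1 still do not seem to be effectively separated.

The model parameters are saved as net032101_normal_params.

Experiment 6

This experiment continues from the net032101_normal_params parameters. This time, however, the dataset is carried over from Experiment 5.

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200; Epoch = 15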

# temp = np.percentile(e_dis[0], (75,78,80,82,85,90,95,97), interpolation='midpoint')

# print('label 0 quartile:', temp)

# temp = np.percentile(e_dis[1], (3,5,10,15,18,20,22,25), interpolation='midpoint')

# print('label 1 quartile:', temp)

error numbers for train label 0 and 1: [8, 3]

Epoch Test 0: label0 79->0.4023, label1 81->0.7622

label 0 quartile: [0.69246459 0.74032906 0.91863462 1.11276823 1.23893011 1.63581121 1.8707599 1.93956631]

label 1 quartile: [0.1035036 0.1527216 0.21822351 0.26092896 0.3003215 0.32645234 0.34282559 0.37721297]

error numbers for label 0 and 1: [16, 49]

error numbers for train label 0 and 1: [8, 2]

Epoch Test 5: label0 79->0.2584, label1 81->0.9940

label 0 quartile: [0.58564723 0.64023229 0.83082703 0.93379942 0.9818148 1.16805738 1.46112651 1.529733 ]

label 1 quartile: [0.02504645 0.05261827 0.12172495 0.16706192 0.22925539 0.23751174 0.26844838 0.29307562]

error numbers for label 0 and 1: [11, 65]

error numbers for train label 0 and 1: [9, 3]

Epoch Test 10: label0 79->0.5338, label1 81->0.6357

label 0 quartile: [0.92618981 0.95579913 1.18949121 1.22778493 1.32882214 1.85181946 2.19969416 2.27952528]

label 1 quartile: [0.15716235 0.18070176 0.23116393 0.40238249 0.42398745 0.45305049 0.48435326 0.55087805]

error numbers for label 0 and 1: [17, 43]

error numbers for train label 0 and 1: [9, 2]

Epoch Test 12: label0 79->0.4039, label1 81->0.7110

label 0 quartile: [0.81291312 0.87639785 0.960069 0.97132063 1.18621689 1.5761227 2.03127581 2.08241963]

label 1 quartile: [0.15037677 0.16647227 0.19394311 0.22793521 0.37454613 0.42542025 0.45675254 0.46767271]

error numbers for label 0 and 1: [13, 44]

error numbers for train label 0 and 1: [12, 0]

Epoch Test 13: label0 79->0.4833, label1 81->0.6586

label 0 quartile: [0.93557674 1.0485518 1.12070984 1.18872541 1.35504472 1.74693 2.11255693 2.16595805]

label 1 quartile: [0.15101928 0.17144679 0.27649784 0.33131468 0.44687305 0.4724685 0.48356323 0.52775037]

error numbers for label 0 and 1: [19, 43]

error numbers for train label 0 and 1: [5, 2]

Epoch Test 14: label0 79->0.4065, label1 81->0.7917

label 0 quartile: [0.82375449 0.89473799 0.95594129 0.99628985 1.19545197 1.58538914 1.96402109 2.05216646]

label 1 quartile: [0.06293369 0.08791102 0.17698388 0.19833633 0.28865082 0.33140871 0.36708578 0.44745922]

error numbers for label 0 and 1: [15, 48]

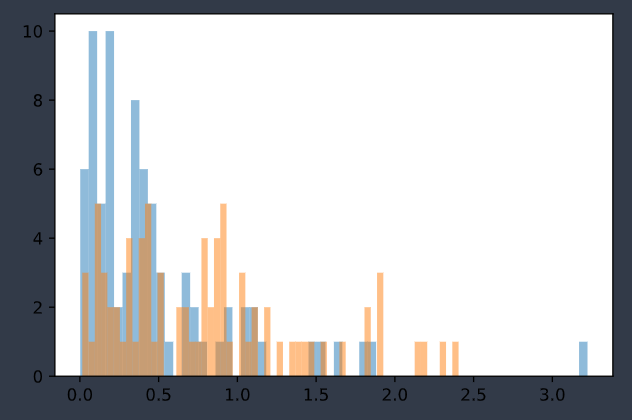

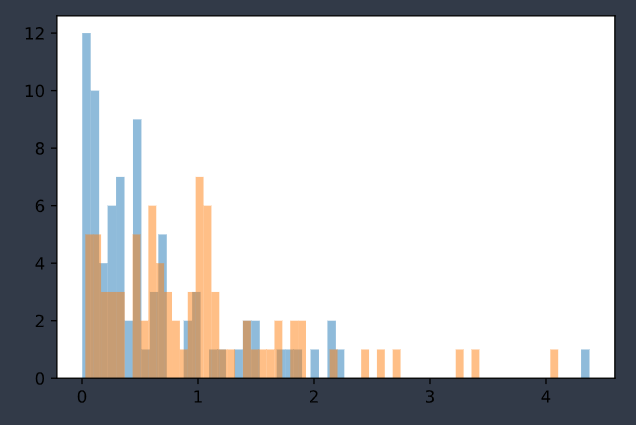

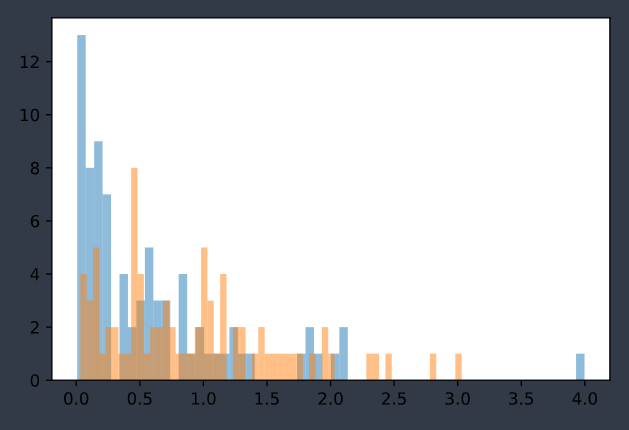

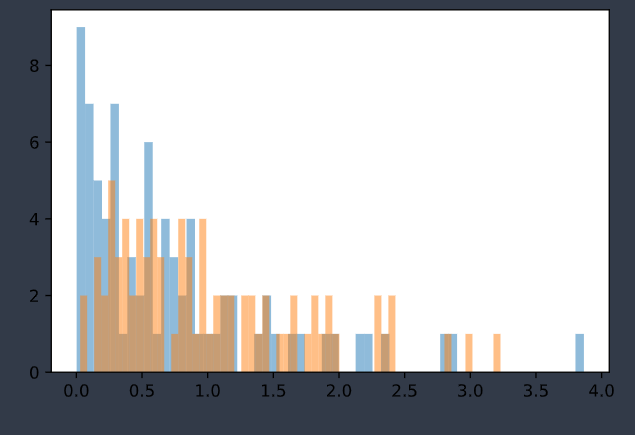

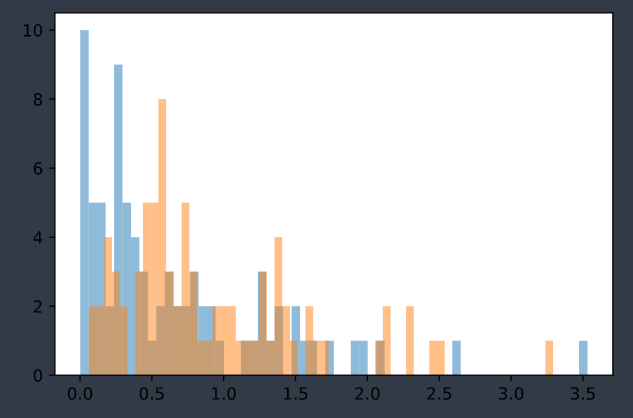

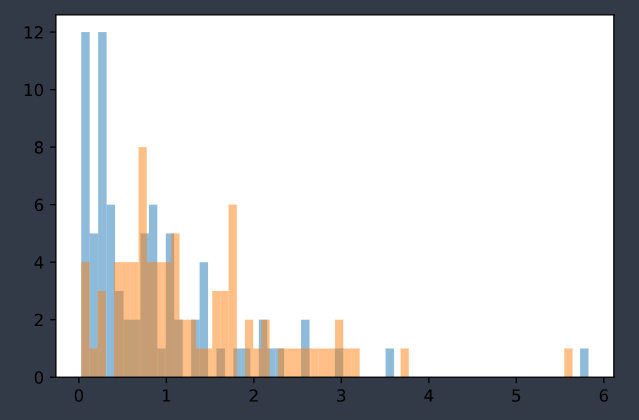

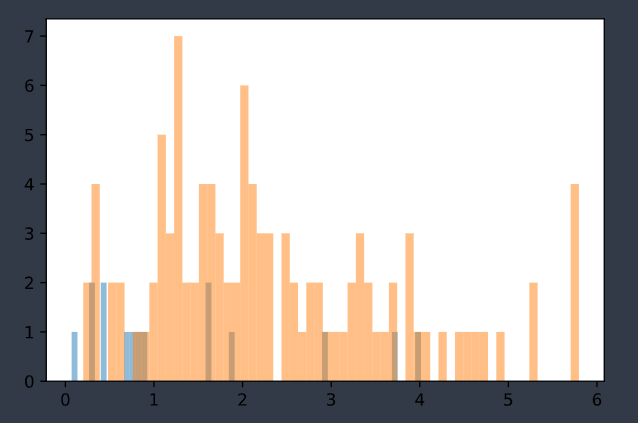

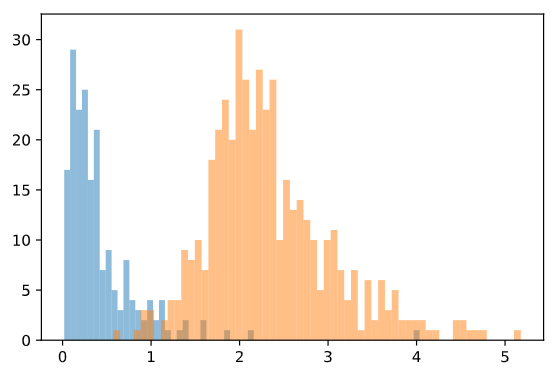


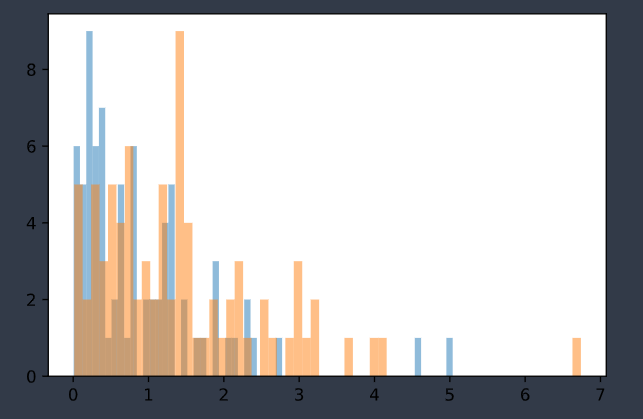

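Experiment 7

This experiment continues directly from the data and parameters of Experiment 6.

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200; Epoch = 20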

error numbers for train label 0 and 1: [7, 0]

Epoch Test 0: label0 79->0.3761, label1 81->0.7653

label 0 quartile: [0.89393926 0.96582121 1.03093511 1.04356456 1.17976797 1.5213176 1.86971921 1.96648115]

label 1 quartile: [0.08603884 0.13261268 0.21826732 0.27556634 0.31136476 0.34251082 0.37249841 0.45366183]

error numbers for label 0 and 1: [18, 51]

error numbers for train label 0 and 1: [4, 0]

Epoch Test 5: label0 79->0.5902, label1 81->0.6598

label 0 quartile: [1.044231 1.17529702 1.2864421 1.30294365 1.48336458 1.86360157 2.25020027 2.46973515]

label 1 quartile: [0.09173935 0.13112041 0.24333405 0.35825726 0.42598081 0.47376618 0.48820314 0.54829991]

error numbers for label 0 and 1: [21, 47]

error numbers for train label 0 and 1: [10, 4]

Epoch Test 10: label0 79->0.5514, label1 81->0.7197

label 0 quartile: [0.99609572 1.09103167 1.16610312 1.1867283 1.46719247 1.78704429 2.28552854 2.58264136]

label 1 quartile: [0.16793605 0.18841334 0.25241742 0.29652849 0.33984348 0.36072731 0.39049183 0.44435391]

error numbers for label 0 and 1: [20, 48]

error numbers for train label 0 and 1: [7, 1]

Epoch Test 15: label0 79->0.5650, label1 81->0.6864

label 0 quartile: [0.99759865 1.04919064 1.10271281 1.1186952 1.22750658 1.80494195 2.30130291 2.58294487]

label 1 quartile: [0.16522489 0.17096621 0.21290109 0.31546679 0.3603361 0.38599303 0.43037912 0.46336949]

error numbers for label 0 and 1: [20, 45]

error numbers for train label 0 and 1: [6, 0]

Epoch Test 18: label0 79->0.5754, label1 81->0.6975

label 0 quartile: [0.95451638 1.00537312 1.249861 1.2947166 1.45793611 1.83815819 2.30322134 2.41481721]

label 1 quartile: [0.09741778 0.10728455 0.26628441 0.3574965 0.39470373 0.42439529 0.44379365 0.48788974]

error numbers for label 0 and 1: [18, 46]

error numbers for train label 0 and 1: [5, 1]

Epoch Test 19: label0 79->0.5526, label1 81->0.6824

label 0 quartile: [1.01957476 1.09638369 1.13573027 1.20510721 1.49413604 1.69552016 2.25972855 2.28826094]

label 1 quartile: [0.1264741 0.13522775 0.27547583 0.34817949 0.37942898 0.41483384 0.45755075 0.50345212]

error numbers for label 0 and 1: [21, 48]

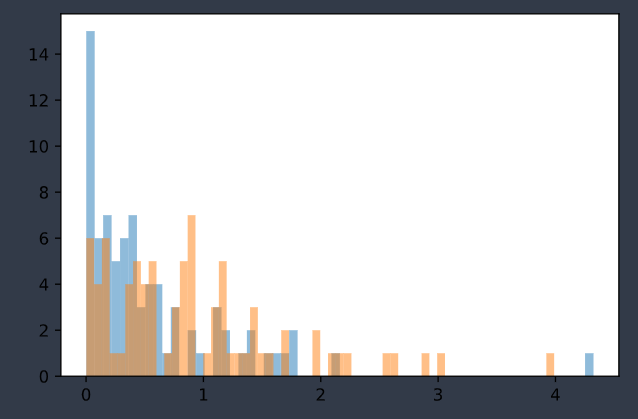

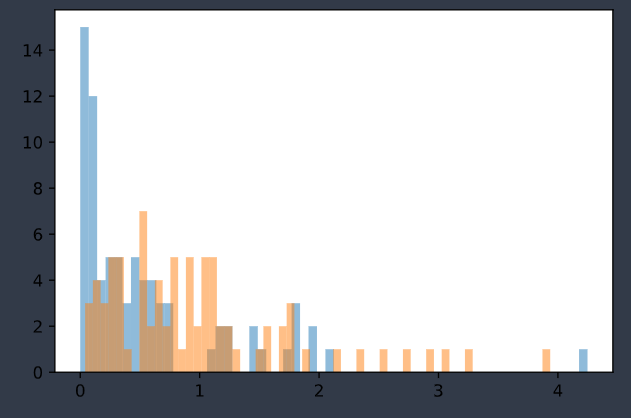

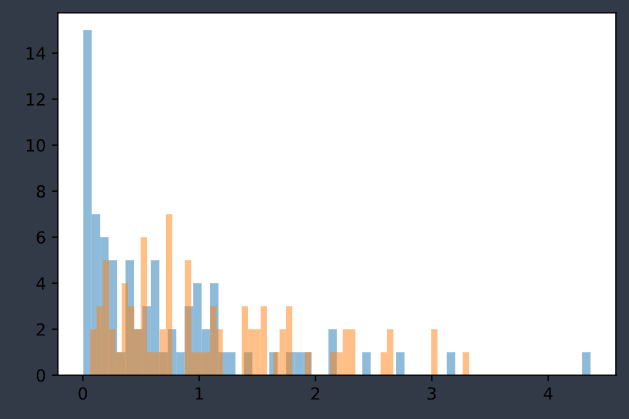

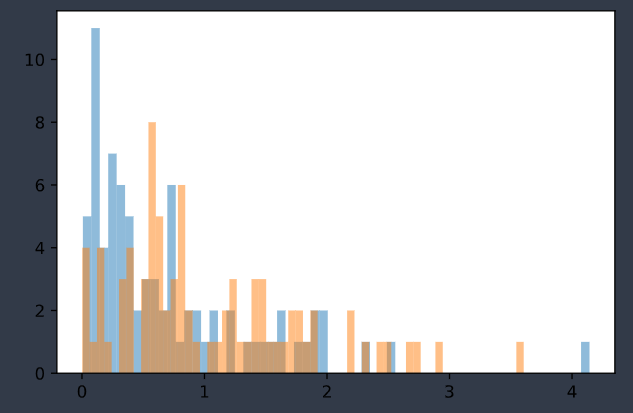

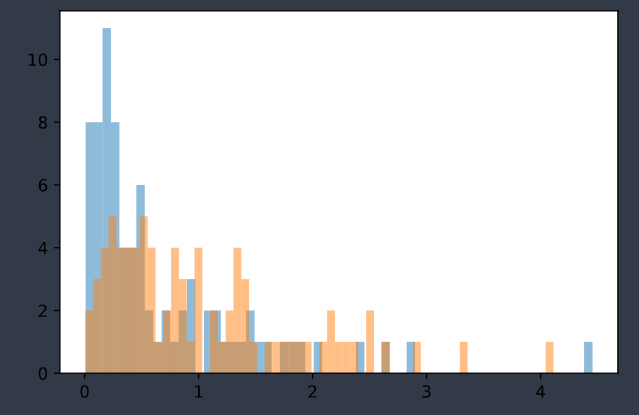


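Experiment 8

This experiment continues directly from the data and parameters of Experiment 7.

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200; Epoch = 15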

error numbers for train label 0 and 1: [6, 2]

Epoch Test 0: label0 79->0.4334, label1 81->0.7721

label 0 quartile: [0.89374313 1.06467685 1.2197789 1.25286847 1.34939414 1.53840846 1.95286632 2.04250455]

label 1 quartile: [0.15104765 0.17765567 0.23427919 0.31105825 0.41078641 0.44090548 0.46284164 0.48704115]

error numbers for label 0 and 1: [18, 52]

error numbers for train label 0 and 1: [4, 0]

Epoch Test 10: label0 79->0.5187, label1 81->0.7081

label 0 quartile: [0.98957491 1.04676408 1.19469124 1.28860301 1.55173004 1.79972738 1.9783237 2.15031385]

label 1 quartile: [0.03410132 0.09130815 0.17003278 0.3616192 0.3887203 0.39985594 0.50048135 0.55006576]

error numbers for label 0 and 1: [20, 47]

error numbers for train label 0 and 1: [3, 1]

Epoch Test 13: label0 79->0.5847, label1 81->0.6859

label 0 quartile: [0.92322093 1.03832927 1.14118958 1.1529218 1.54671413 1.69326305 2.3048296 2.52410257]

label 1 quartile: [0.08837533 0.23071352 0.30661783 0.38556689 0.41956697 0.4417038 0.4640647 0.48072389]

error numbers for label 0 and 1: [18, 46]

error numbers for train label 0 and 1: [3, 0]

Epoch Test 14: label0 79->0.5526, label1 81->0.7289

label 0 quartile: [1.05860943 1.13867849 1.3090204 1.39532441 1.50129932 1.78771698 2.09872198 2.24260676]

label 1 quartile: [0.06067828 0.08352163 0.22187527 0.30638948 0.37312871 0.41378871 0.41858131 0.48028305]

error numbers for label 0 and 1: [21, 48]


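Experiment 9

This experiment continues directly from the data and parameters of Experiment 8.

Image set: 800 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200; Epoch = 15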

error numbers for train label 0 and 1: [5, 1]

Epoch Test 5: label0 79->0.8081, label1 81->0.5434

label 0 quartile: [1.08758575 1.20923483 1.37556028 1.40186632 1.53811985 2.1157403 2.56878078 2.7914598 ]

label 1 quartile: [0.09427275 0.18274984 0.40251073 0.49672776 0.5654636 0.59302223 0.60262138 0.68739736]

error numbers for label 0 and 1: [25, 37]

error numbers for train label 0 and 1: [2, 0]

Epoch Test 10: label0 79->0.5303, label1 81->0.7543

label 0 quartile: [0.91848856 1.0940994 1.18456084 1.20639038 1.41123503 1.67969847 2.23161256 2.51136136]

label 1 quartile: [0.12790693 0.14078884 0.20908813 0.26888508 0.29797232 0.3100414 0.33898377 0.39921615]

error numbers for label 0 and 1: [19, 46]

error numbers for train label 0 and 1: [1, 0]

Epoch Test 13: label0 79->0.4367, label1 81->0.7632

label 0 quartile: [0.87195212 0.91697699 0.99581736 1.02042693 1.31616199 1.58652586 1.80915433 2.04822183]

label 1 quartile: [0.0214555 0.04897951 0.19923984 0.30436152 0.33816271 0.35774031 0.36638524 0.4719438 ]

error numbers for label 0 and 1: [16, 51]

error numbers for train label 0 and 1: [5, 0]

Epoch Test 14: label0 79->0.4971, label1 81->0.7573

label 0 quartile: [1.08221239 1.0930267 1.19179648 1.29330713 1.38970578 1.65953499 1.96719754 2.1984868 ]

label 1 quartile: [0.0738718 0.08653293 0.16189066 0.24867384 0.28027287 0.33906442 0.36545858 0.39421245]

error numbers for label 0 and 1: [21, 47]


Experiment 10

Image set: 600 pairs; training set: 80%; test set: 20%; learning rate: 0.0005; image_width = 200; image_height = 200; Epoch = 13

error numbers for train label 0 and 1: [30, 120]

Epoch Test 0: label0 15->0.9580, label1 105->0.3320

label 0 quartile: [1.55858916 1.55858916 1.63404727 1.63404727 1.63404727 2.13353235 2.81705296 2.81705296]

label 1 quartile: [0.34235817 0.37326908 0.51667082 0.65802711 0.76551357 0.83827007 0.86783218 0.90244752]

error numbers for label 0 and 1: [7, 28]

error numbers for train label 0 and 1: [15, 10]

Epoch Test 4: label0 15->1.5734, label1 105->0.2028

label 0 quartile: [1.73943979 1.73943979 2.39483321 2.39483321 2.39483321 3.32768321 3.87932324 3.87932324]

label 1 quartile: [0.35556974 0.44483256 0.83594504 1.04952377 1.12071306 1.21353734 1.24750531 1.26633072]

error numbers for label 0 and 1: [6, 14]

error numbers for train label 0 and 1: [4, 0]

Epoch Test 12: label0 15->1.1651, label1 105->0.2404

label 0 quartile: [1.22568351 1.22568351 1.79384065 1.79384065 1.79384065 2.77050042 3.51659358 3.51659358]

label 1 quartile: [0.27338409 0.3918646 0.69325978 0.84318715 0.9394435 1.02456802 1.15641832 1.28566813]

error numbers for label 0 and 1: [7, 20]


Other data

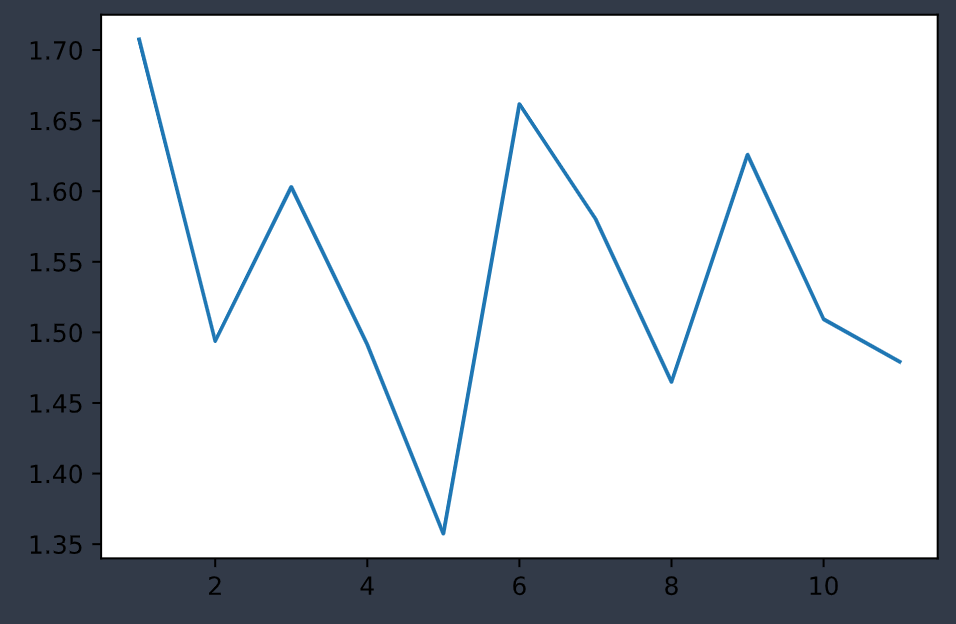

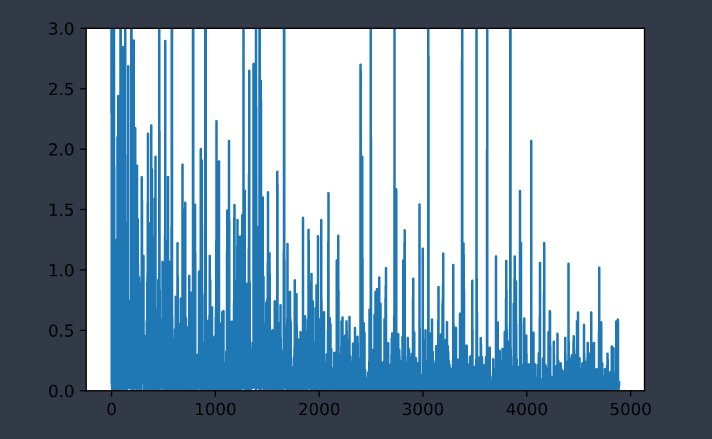

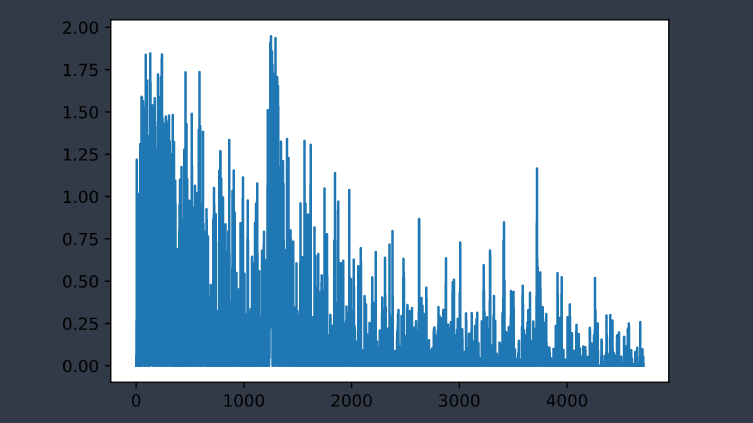

Change in the training-set distribution
