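This is a problem I ran into while debugging handwritten digit recognition code. After switching the CPU code to GPU training (see my previous post, "Mnist手写数字识别cpu训练与gpu训练", on MNIST digit recognition with CPU and GPU training), the model did train, but an Error was still raised. Below is how I resolved it after looking through some references.

Conclusion first: this error is caused by running out of GPU memory, and physically getting more GPU memory is the most effective fix. If that is not an option, try the methods below; I hope they help. 🧐

I. Code before and after the change

1. Before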

    import torch
    from torchvision import datasets, transforms
    import torch.nn as nn
    import torch.optim as optim
    from datetime import datetime

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")


    class Config:
        batch_size = 64
        epoch = 10
        momentum = 0.9
        alpha = 1e-3
        print_per_step = 100


    class LeNet(nn.Module):
        def __init__(self):
            super(LeNet, self).__init__()
            # 3x3 convolution
            self.conv1 = nn.Sequential(
                # args: in_channels, out_channels, kernel_size, stride, padding
                nn.Conv2d(1, 32, 3, 1, 2),
                nn.ReLU(),
                nn.MaxPool2d(2, 2)
            )
            self.conv2 = nn.Sequential(
                nn.Conv2d(32, 64, 5),
                nn.ReLU(),
                nn.MaxPool2d(2, 2)  # 2x2 max pooling
            )
            self.fc1 = nn.Sequential(
                nn.Linear(64 * 5 * 5, 128),
                nn.BatchNorm1d(128),
                nn.ReLU()
            )
            self.fc2 = nn.Sequential(
                nn.Linear(128, 64),
                # Speeds up convergence (note: batch normalization usually goes
                # after the fully connected layer and before the activation)
                nn.BatchNorm1d(64),
                nn.ReLU()
            )
            self.fc3 = nn.Linear(64, 10)

        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            x = x.view(x.size()[0], -1)
            x = self.fc1(x)
            x = self.fc2(x)
            x = self.fc3(x)
            return x


    class TrainProcess:
        def __init__(self):
            self.train, self.test = self.load_data()
            # Modified: move the network to the GPU
            self.net = LeNet().to(device)
            # Modified
            self.criterion = nn.CrossEntropyLoss()  # define the loss function
            self.optimizer = optim.SGD(self.net.parameters(), lr=Config.alpha, momentum=Config.momentum)

        @staticmethod
        def load_data():
            """Load the MNIST dataset; it is downloaded automatically if not found locally."""
            print("Loading Data......")
            train_data = datasets.MNIST(root='./data/',
                                        train=True,
                                        transform=transforms.ToTensor(),
                                        download=True)
            test_data = datasets.MNIST(root='./data/',
                                       train=False,
                                       transform=transforms.ToTensor())

            # Return data iterators
            # shuffle: whether to shuffle the samples
            train_loader = torch.utils.data.DataLoader(dataset=train_data,
                                                       batch_size=Config.batch_size,
                                                       shuffle=True)
            test_loader = torch.utils.data.DataLoader(dataset=test_data,
                                                      batch_size=Config.batch_size,
                                                      shuffle=False)
            return train_loader, test_loader

        def train_step(self):
            steps = 0
            start_time = datetime.now()

            print("Training & Evaluating......")
            for epoch in range(Config.epoch):
                print("Epoch {:3}".format(epoch + 1))

                for data, label in self.train:
                    data, label = data.to(device), label.to(device)
                    self.optimizer.zero_grad()  # reset the gradients to zero
                    outputs = self.net(data)  # forward pass through the network
                    loss = self.criterion(outputs, label)  # compute the loss
                    loss.backward()  # backpropagation
                    self.optimizer.step()  # one parameter update from the gradients

                    # Print the result every 100 steps
                    if steps % Config.print_per_step == 0:
                        _, predicted = torch.max(outputs, 1)
                        correct = int(sum(predicted == label))
                        accuracy = correct / Config.batch_size  # compute the accuracy
                        end_time = datetime.now()
                        time_diff = (end_time - start_time).seconds
                        time_usage = '{:3}m{:3}s'.format(int(time_diff / 60), time_diff % 60)
                        msg = "Step {:5}, Loss:{:6.2f}, Accuracy:{:8.2%}, Time usage:{:9}."
                        print(msg.format(steps, loss, accuracy, time_usage))

                    steps += 1

            test_loss = 0.
            test_correct = 0
            for data, label in self.test:
                data, label = data.to(device), label.to(device)
                outputs = self.net(data)
                loss = self.criterion(outputs, label)
                test_loss += loss * Config.batch_size
                _, predicted = torch.max(outputs, 1)
                correct = int(sum(predicted == label))
                test_correct += correct

            accuracy = test_correct / len(self.test.dataset)
            loss = test_loss / len(self.test.dataset)
            print("Test Loss: {:5.2f}, Accuracy: {:6.2%}".format(loss, accuracy))

            end_time = datetime.now()
            time_diff = (end_time - start_time).seconds
            print("Time Usage: {:5.2f} mins.".format(time_diff / 60.))


    if __name__ == "__main__":
        p = TrainProcess()
        p.train_step()

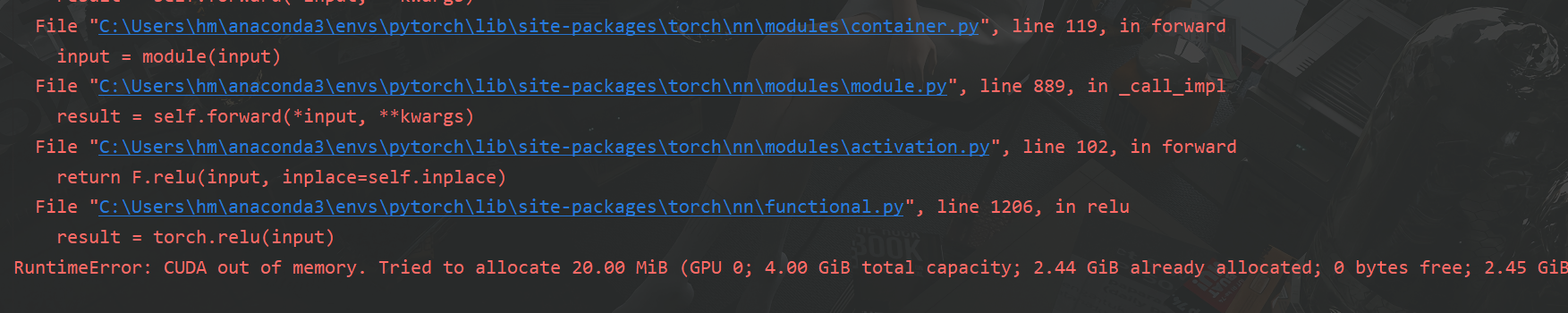

Error: RuntimeError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 4.00 GiB total capacity; 2.44 GiB already allocated; 0 bytes free; 2.45 GiB reserved in total by PyTorch)
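
A likely explanation, given the test loop above: outside torch.no_grad(), each loss tensor keeps a reference to the autograd graph of its batch, and test_loss += loss * Config.batch_size chains those graphs together, so the activations of every test batch can stay in GPU memory until the loop ends. The sketch below reproduces the pattern on the CPU. The tiny nn.Linear model and the random batches are stand-ins chosen for illustration, not the setup used in this post.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
net = nn.Linear(4, 2)  # tiny stand-in for LeNet (assumption, for illustration)
criterion = nn.CrossEntropyLoss()
# Three random batches of 8 samples each, standing in for the test loader
batches = [(torch.randn(8, 4), torch.randint(0, 2, (8,))) for _ in range(3)]

# Pattern from the "before" code: loss tensors are summed with autograd enabled
tracked_total = 0.
for data, label in batches:
    loss = criterion(net(data), label)
    tracked_total += loss * len(data)

# Same loop, but with gradient tracking disabled
untracked_total = 0.
with torch.no_grad():
    for data, label in batches:
        loss = criterion(net(data), label)
        untracked_total += loss * len(data)

# The first sum is still attached to a graph; the second is a plain value
print("tracked:   requires_grad =", tracked_total.requires_grad,
      "| has grad_fn =", tracked_total.grad_fn is not None)
print("untracked: requires_grad =", untracked_total.requires_grad,
      "| has grad_fn =", untracked_total.grad_fn is not None)
```

Both sums hold the same number, but only the first one keeps the computation graph of every batch reachable, which is the kind of growth that can end in an out-of-memory error on a 4 GiB card.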

2. After

    import torch
    from torchvision import datasets, transforms
    import torch.nn as nn
    import torch.optim as optim
    from datetime import datetime

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")


    class Config:
        batch_size = 64
        epoch = 10
        momentum = 0.9
        alpha = 1e-3
        print_per_step = 100


    class LeNet(nn.Module):
        def __init__(self):
            super(LeNet, self).__init__()
            # 3x3 convolution
            self.conv1 = nn.Sequential(
                # args: in_channels, out_channels, kernel_size, stride, padding
                nn.Conv2d(1, 32, 3, 1, 2),
                nn.ReLU(),
                nn.MaxPool2d(2, 2)
            )
            self.conv2 = nn.Sequential(
                nn.Conv2d(32, 64, 5),
                nn.ReLU(),
                nn.MaxPool2d(2, 2)  # 2x2 max pooling
            )
            self.fc1 = nn.Sequential(
                nn.Linear(64 * 5 * 5, 128),
                nn.BatchNorm1d(128),
                nn.ReLU()
            )
            self.fc2 = nn.Sequential(
                nn.Linear(128, 64),
                # Speeds up convergence (note: batch normalization usually goes
                # after the fully connected layer and before the activation)
                nn.BatchNorm1d(64),
                nn.ReLU()
            )
            self.fc3 = nn.Linear(64, 10)

        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            x = x.view(x.size()[0], -1)
            x = self.fc1(x)
            x = self.fc2(x)
            x = self.fc3(x)
            return x


    class TrainProcess:
        def __init__(self):
            self.train, self.test = self.load_data()
            self.net = LeNet().to(device)
            self.criterion = nn.CrossEntropyLoss()  # define the loss function
            self.optimizer = optim.SGD(self.net.parameters(), lr=Config.alpha, momentum=Config.momentum)

        @staticmethod
        def load_data():
            """Load the MNIST dataset; it is downloaded automatically if not found locally."""
            print("Loading Data......")
            train_data = datasets.MNIST(root='./data/',
                                        train=True,
                                        transform=transforms.ToTensor(),
                                        download=True)
            test_data = datasets.MNIST(root='./data/',
                                       train=False,
                                       transform=transforms.ToTensor())

            # Return data iterators
            # shuffle: whether to shuffle the samples
            train_loader = torch.utils.data.DataLoader(dataset=train_data,
                                                       batch_size=Config.batch_size,
                                                       shuffle=True)
            test_loader = torch.utils.data.DataLoader(dataset=test_data,
                                                      batch_size=Config.batch_size,
                                                      shuffle=False)
            return train_loader, test_loader

        def train_step(self):
            steps = 0
            start_time = datetime.now()

            print("Training & Evaluating......")
            for epoch in range(Config.epoch):
                print("Epoch {:3}".format(epoch + 1))

                for data, label in self.train:
                    data, label = data.to(device), label.to(device)
                    self.optimizer.zero_grad()  # reset the gradients to zero
                    outputs = self.net(data)  # forward pass through the network
                    loss = self.criterion(outputs, label)  # compute the loss
                    loss.backward()  # backpropagation
                    self.optimizer.step()  # one parameter update from the gradients

                    # Print the result every 100 steps
                    if steps % Config.print_per_step == 0:
                        _, predicted = torch.max(outputs, 1)
                        correct = int(sum(predicted == label))
                        accuracy = correct / Config.batch_size  # compute the accuracy
                        end_time = datetime.now()
                        time_diff = (end_time - start_time).seconds
                        time_usage = '{:3}m{:3}s'.format(int(time_diff / 60), time_diff % 60)
                        msg = "Step {:5}, Loss:{:6.2f}, Accuracy:{:8.2%}, Time usage:{:9}."
                        print(msg.format(steps, loss, accuracy, time_usage))

                    steps += 1

            test_loss = 0.
            test_correct = 0
            for data, label in self.test:
                with torch.no_grad():  # ***** modified: no gradient tracking during testing *****
                    data, label = data.to(device), label.to(device)
                    outputs = self.net(data)
                    loss = self.criterion(outputs, label)
                    test_loss += loss * Config.batch_size
                    _, predicted = torch.max(outputs, 1)
                    correct = int(sum(predicted == label))
                    test_correct += correct

            accuracy = test_correct / len(self.test.dataset)
            loss = test_loss / len(self.test.dataset)
            print("Test Loss: {:5.2f}, Accuracy: {:6.2%}".format(loss, accuracy))

            end_time = datetime.now()
            time_diff = (end_time - start_time).seconds
            print("Time Usage: {:5.2f} mins.".format(time_diff / 60.))


    if __name__ == "__main__":
        print(device)
        p = TrainProcess()
        p.train_step()

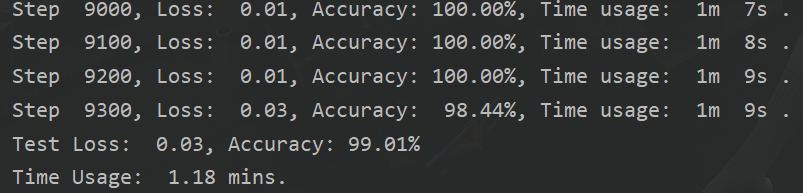

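Result:

After this change, the error no longer occurs.

II. Solutions

Method 1: Adjust batch_size

Most of the solutions I found online suggest adjusting (usually reducing) batch_size, but in my case changing it did not solve the problem.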
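
One possible reason batch_size made no difference here, assuming the memory is held by graphs retained across the whole test loop: the total amount retained scales with the number of test samples, not with the batch size. The arithmetic sketch below uses a made-up per-sample figure; BYTES_PER_SAMPLE is a hypothetical value for illustration, not a measurement, and retained_bytes is a helper name introduced only for this example.

```python
def retained_bytes(dataset_size, batch_size, bytes_per_sample):
    """Total activation bytes kept alive if every batch's graph is retained."""
    total = 0
    for start in range(0, dataset_size, batch_size):
        # The last batch may be smaller than batch_size
        samples_in_batch = min(batch_size, dataset_size - start)
        total += samples_in_batch * bytes_per_sample
    return total


TEST_SET_SIZE = 10_000         # size of the MNIST test split
BYTES_PER_SAMPLE = 200 * 1024  # hypothetical figure, for illustration only

totals = {bs: retained_bytes(TEST_SET_SIZE, bs, BYTES_PER_SAMPLE)
          for bs in (16, 64, 256)}
for bs, total in totals.items():
    print("batch_size={:3d}: {:.2f} GiB retained".format(bs, total / 2**30))
```

Under this assumption every batch size ends at the same total, which would be consistent with what I observed. A smaller batch_size does help when the peak comes from a single forward and backward pass, so it is still worth trying first.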

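Method 2: Do not compute gradients

Wrap the code that does not need gradients, here the test loop, in with torch.no_grad():. Inside that block PyTorch does not record the computation graph, so the forward pass holds much less GPU memory.

For more detail, see this blog post by another author: "pytorch运行错误:CUDA out of memory."

Note: Method 2 is what solved the problem in this post.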
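
As a minimal sketch of Method 2, the test loop could be pulled into a helper like the one below. The evaluate function, the toy linear model, and the random data are all assumptions made for this example; the code in this post keeps the loop inline instead. Compared with the inline version, the sketch also switches the network to eval mode and accumulates Python numbers via .item() rather than tensors.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(net, loader, criterion, device):
    """Return (mean loss, accuracy) over loader without building autograd graphs."""
    net.eval()  # BatchNorm layers use their running statistics
    total_loss, total_correct, total_seen = 0.0, 0, 0
    with torch.no_grad():  # no graph is recorded inside this block
        for data, label in loader:
            data, label = data.to(device), label.to(device)
            outputs = net(data)
            n = label.size(0)  # actual batch size; the last batch may be smaller
            total_loss += criterion(outputs, label).item() * n
            total_correct += (outputs.argmax(dim=1) == label).sum().item()
            total_seen += n
    return total_loss / total_seen, total_correct / total_seen


# Usage on random MNIST-shaped data with a toy model (illustration only)
torch.manual_seed(0)
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
toy_net = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10)).to(device)
toy_data = TensorDataset(torch.randn(100, 1, 28, 28), torch.randint(0, 10, (100,)))
toy_loader = DataLoader(toy_data, batch_size=64, shuffle=False)

mean_loss, accuracy = evaluate(toy_net, toy_loader, nn.CrossEntropyLoss(), device)
print("Test Loss: {:5.2f}, Accuracy: {:6.2%}".format(mean_loss, accuracy))
```

The helper leaves the network in eval mode, so call net.train() again before resuming training.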

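Method 3: Free memory

Add the following code just before the line that raises the error to release memory that is no longer needed:

    if hasattr(torch.cuda, 'empty_cache'):
        torch.cuda.empty_cache()

Note that torch.cuda.empty_cache() only returns cached blocks that no live tensor is using; it cannot free memory that is still referenced by tensors or by a retained computation graph.

Reference blog: "解决:RuntimeError: CUDA out of memory. Tried to allocate 2.00 MiB"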
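
To check whether Method 3 actually frees anything, it can help to compare PyTorch's memory counters before and after the call. This is a small sketch; cuda_memory_report is a helper name made up for this example, and on a machine without CUDA it simply returns None.

```python
import torch


def cuda_memory_report(device=None):
    """Return allocated/reserved bytes for device, or None if CUDA is unavailable."""
    if not torch.cuda.is_available():
        return None
    return {
        "allocated": torch.cuda.memory_allocated(device),  # held by live tensors
        "reserved": torch.cuda.memory_reserved(device),    # held by the caching allocator
    }


before = cuda_memory_report()
if torch.cuda.is_available():
    torch.cuda.empty_cache()  # hand unused cached blocks back to the driver
after = cuda_memory_report()

print("before:", before)
print("after: ", after)
```

If "allocated" is already close to the card's capacity, empty_cache() has little to give back, and the fix has to come from holding fewer tensors, as in Method 2.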
