import requests

from bs4 import BeautifulSoup

import re

import sys

import csv

import time

url ="http://detail.zol.com.cn"

funList = ["server"]

cookie = "zolapp-float-qrcode-closed=1; ip_ck=58eG4vvzj7QuMDA3NTE0LjE1ODc2MTEyMDk%3D; __gads=ID=5a4b998771bfa249:T=1589598862:S=ALNI_Mb32C6_R60loyJB1QNenHhdmFxtNw; __utma=139727160.1786308364.1590110116.1590110116.1590110116.1; __utmz=139727160.1590110116.1.1.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; listSubcateId=31; realLocationId=115487; userFidLocationId=115487; Hm_lvt_ae5edc2bc4fc71370807f6187f0a2dd0=1593996619; z_pro_city=s_provice%3Dhebei%26s_city%3Dshijiazhuang; userProvinceId=8; userCityId=6; userCountyId=139; userLocationId=115487; Adshow=1; Hm_lpvt_ae5edc2bc4fc71370807f6187f0a2dd0=1593996634; z_day=ixgo20=1&rdetail=9; lv=1594014942; vn=8; visited_subcateProId=31-1178761; questionnaire_pv=1593993627"

cookie_dict = {i.split("=")[0]:i.split("=")[-1] for i in cookie.split("; ")}

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.100 Safari/537.36",

"Content-Type": "text/html; charset=GBK"

}

zolConLists = []

infoDicts = []

def RequestsZol(url):

response=requests.get(url=url,headers=headers,cookies=cookie_dict)

return response.text

def RequestsZolCon(response):

soup = BeautifulSoup(response, "html.parser")

a = soup.find_all("a","more")

for soupAUrl in a:

if re.findall(".*?更多参数.*?",str(soupAUrl)):

zolConLists.append(str(soupAUrl).split('"')[3])

def Subpage(conList):

print(conList)

conUrl = url + conList

response = RequestsZol(conUrl)

soup = BeautifulSoup(response, "html.parser")

subUrl = conUrl

name = soup.find("h3","goods-card__title").a.get_text()

price = soup.find("div","goods-card__price").span.get_text()[1:]

try:

score = soup.find("span","score").get_text()

except Exception as e:

score = 0

try:

scoreNum = soup.find("a","j_praise").get_text().split("条")[0]

except Exception as e:

scoreNum = 0

content = str(soup.find("ul","product-param-item pi-31 clearfix param-important"))

contents = re.findall('.*?<p title="(.*?)">',content)

try:

productCategory = contents[0]

productMix = contents[1]

CPUModel = contents[2]

standardCPUQuantity = contents[3]

memoryType = contents[4]

memoryCapacity = contents[5]

hardDiskInterfaceType = contents[6]

standardHardDiskCapacity = contents[7]

except Exception as e:

productCategory = 0

productMix = 0

CPUModel = 0

standardCPUQuantity = 0

memoryType = 0

memoryCapacity = 0

hardDiskInterfaceType = 0

standardHardDiskCapacity = 0

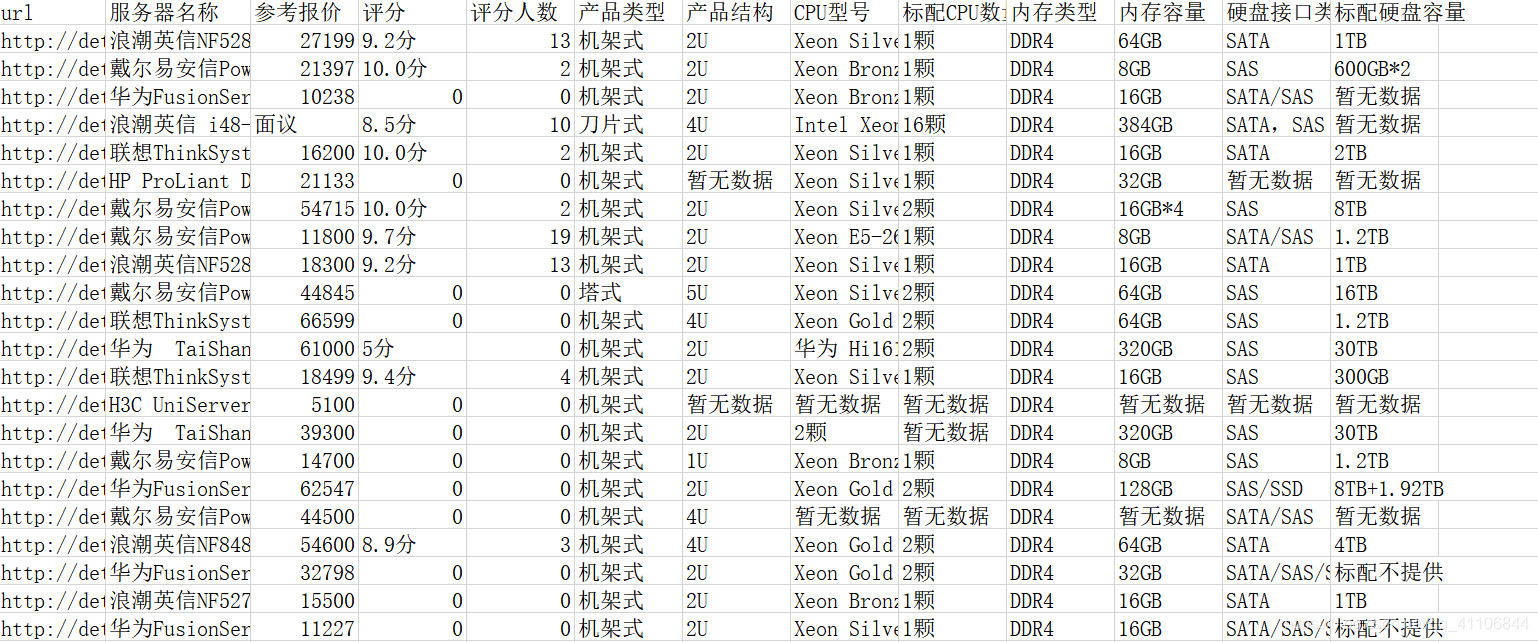

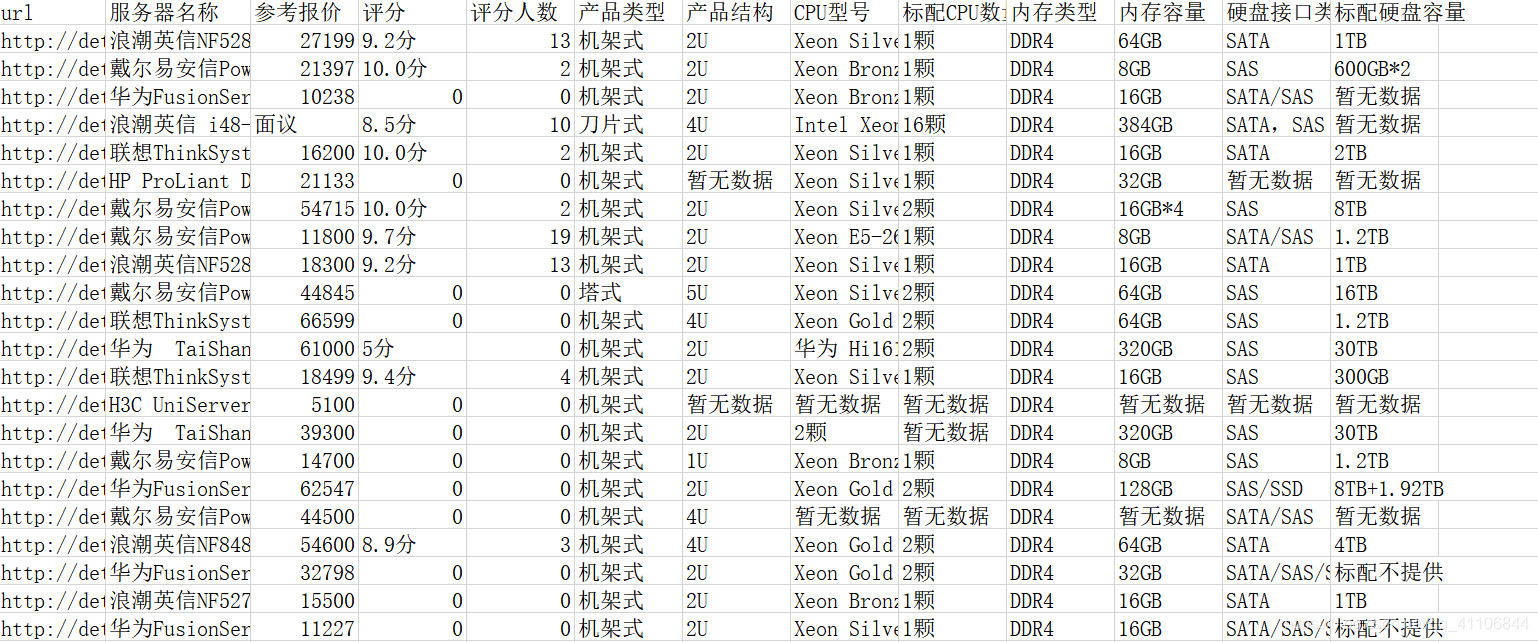

infoDict = {

"url":subUrl,

"服务器名称":name,

"参考报价":price,

"评分":score,

"评分人数":scoreNum,

"产品类型":productCategory,

"产品结构":productMix,

"CPU型号":CPUModel,

"标配CPU数量":standardCPUQuantity,

"内存类型":memoryType,

"内存容量":memoryCapacity,

"硬盘接口类型":hardDiskInterfaceType,

"标配硬盘容量":standardHardDiskCapacity

}

infoDicts.append(infoDict)

def ToCsv(infoDicts):

print("开始写入")

keys = []

for key in infoDicts[0].keys():

keys.append(key)

filename = './country_a.csv'

with open(filename, 'a', newline='',encoding='utf-8') as f:

writer = csv.DictWriter(f, keys)

writer.writeheader()

for row in infoDicts:

writer.writerow(row)

def InputStrJu(inputStr):

if inputStr in funList:

print("可以爬取")

else:

raise Exception('inputStr 还不是支持的功能。inputStr 的值为: {},目前只支持:{}'.format(inputStr,funList))

def PageIntJu(pageInt):

if pageInt > 0 and type(pageInt) == type(1):

print("完成信息确认")

else:

raise Exception('pageInt必须是比0大的整数。pageInt 的值为: {}'.format(pageInt))

if __name__ == "__main__":

print('''

目前只支持爬取服务器相关信息,请输入server

''')

try:

inputStr = input ("想要爬取哪类信息:")

InputStrJu(inputStr)

pageInt = eval(input("想要爬取多少页(1页20条信息):"))

PageIntJu(pageInt)

for page in range(1,pageInt+1):

conLists = []

requestsUrl = url + "/" + inputStr + "/" + str(page) + ".html"

response = RequestsZol(requestsUrl)

RequestsZolCon(response)

for zolConList in zolConLists:

Subpage(zolConList)

ToCsv(infoDicts)

except Exception as e:

print(e)

sys.exit(0)

1007

1007

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?