Original program:

template_ftp_server_old.py:

import socket

import json

import struct

import os

import time

import pymysql.cursors

soc = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

HOST = '192.168.31.111'

PORT = 4101

soc.bind((HOST,PORT))

port_str = str(PORT)

slice_id = int(port_str[:2]) - 20

ueX_str = 'user'

ue_id = int(port_str[3])

ueX_delay = ueX_str + str(ue_id) + '_delay' #

ueX_v = ueX_str + str(ue_id) + '_v' #

ueX_loss = ueX_str + str(ue_id) + '_loss' #

soc.listen(5)

cnx = pymysql.connect(host='localhost', port=3306, user='root', password='123456', db='qt', charset='utf8mb4', connect_timeout=20)

cursor = cnx.cursor()

# Upload function
def uploading_file():
    ftp_dir = r'C:/Users/yinzhao/Desktop/ftp/ftpseverfile'  # save the transferred name.txt in this folder
    if not os.path.isdir(ftp_dir):
        os.mkdir(ftp_dir)
    head_bytes_len = conn.recv(4)  # struct-packed header length
    head_len = struct.unpack('i', head_bytes_len)[0]  # the actual header length
    # Receive the header itself
    conn.send("1".encode('utf8'))
    head_bytes = conn.recv(head_len)
    conn.send("1".encode('utf8'))
    # Deserialize the header
    head = json.loads(head_bytes)
    file_name = head['file_name']
    print(file_name)
    file_path = os.path.join(ftp_dir, file_name)
    data_len = head['data_len']
    received_size = 0
    data = b''
    # ***** Old-program issue 1: the larger the maximum size per send, the faster the transfer *****
    max_size = 65536*4
    while received_size < data_len:
        # ***** Old-program issue 2: the receive loop holds a lot of statistics, calculation and database code, which takes time *****
        # ftp_v = open('E:\FTP_test\FTP_v.txt', 'w', encoding='utf-8')
        # ftp_delay = open('E:\FTP_test\FTP_delay.txt', 'w', encoding='utf-8')
        if data_len - received_size > max_size:
            size = max_size
        else:
            size = data_len - received_size
        receive_data = conn.recv(size)  # receive file content
        # ***** Old-program issue 3: socket-based TCP needs no manual ACK from the server, so the server need not reply unless the application requires it *****
        # conn.send("1".encode('utf8'))
        # delay=conn.recv(1024).decode('utf8')  # receive delay
        # conn.send(str(size).encode('utf-8'))
        # v = conn.recv(1024).decode('utf8')  # receive rate
        # conn.send("1".encode('utf-8'))
        # loss = conn.recv(1024).decode('utf8')  # receive loss
        # conn.send("1".encode('utf-8'))
        # print("delay:",delay)
        # delay_float = float(delay)
        # v_float = float(v)
        # loss_float = float(loss)
        # formatted_delay = "{:.2f}".format(delay_float)
        # formatted_v = "{:.2f}".format(v_float)
        # formatted_loss = "{:.2f}".format(loss_float)
        # cursor.execute("UPDATE slice_v2 SET " + ueX_v + "= %s," + ueX_delay + "=%s, " + ueX_loss + " =%s WHERE slice_id = %s", [str(formatted_v), str(formatted_delay), str(formatted_loss), slice_id])
        # cnx.commit()
        data += receive_data
        receive_datalen = len(receive_data)
        received_size += receive_datalen
        # print(delay, file=ftp_delay)
        # print(v, file=ftp_v)
        # print(loss)
        # ftp_delay.close()
        # ftp_v.close()
    # ***** Old-program issue 4: the file is assembled after receipt and written in one go, all in a single thread, which is slow *****
    with open(file_path, 'wb') as fw:
        fw.write(data)
    # print("上传文件 " + str(file_name) + " 成功")  # "File uploaded successfully"

# uploading_file()
while True:
    encoding = 'utf-8'
    print('等待客户端连接...')  # "Waiting for client connection..."
    conn, addr = soc.accept()
    print('客户端已连接:', addr)  # "Client connected:"
    uploading_file()
    conn.close()

client_renew_old.py

# coding: UTF-8

import socket

import os

import struct

import json

import hashlib

import time

# lient_soc.connect(('192.168.1.101', 8025))
# Upload function
def uploading_file(filepath, filename):
    client_soc = socket.socket()
    client_soc.connect(('192.168.31.111', 4101))
    try:
        encoding = 'utf-8'
        with open(filepath+filename, 'rb') as fr:  # send a fixed file
            data = fr.read()
        head = {'data_len': len(data), 'file_name': filename}  # custom header
        head_bytes = json.dumps(head).encode('utf8')
        head_bytes_len = struct.pack('i', len(head_bytes))
        client_soc.send(head_bytes_len)
        client_soc.recv(20).decode('utf8')
        client_soc.send(head_bytes)
        client_soc.recv(20).decode('utf8')
        print(str(int(len(data))/1024)+'KB')
        # client_soc.send(data)
        max_size = 65536*4
        # max_size = 1024
        # Work out how many chunks the data must be split into
        num_packets = len(data) // max_size
        if len(data) % max_size > 0:
            num_packets += 1
        # Send the data chunk by chunk
        for i in range(num_packets):
            # Write delay and rate to a txt file
            start = i * max_size
            end = start + max_size
            # if(i==num_packets-1):
            #     end=len(data)
            packet = data[start:end]
            # Start timing here
            start = time.perf_counter()
            client_soc.sendall(packet)
            # client_soc.recv(1024).decode('utf8')  # measure the interval
            # delay=float(time.perf_counter() - start)  # s
            # v=round(len(packet)/1048576/delay,2)  # MB/s
            # delay=int(delay*1000)
            # print("延时:",delay)  # "Delay:"
            # print("传输速率:",v)  # "Transfer rate:"
            # client_soc.send(str(delay).encode('utf8'))
            # rev_size=client_soc.recv(1024).decode('utf8')
            # loss=(len(packet)-int(rev_size))/len(packet)
            # client_soc.send(str(v).encode('utf8'))
            # client_soc.recv(1024).decode('utf8')
            # client_soc.send(str(loss).encode('utf8'))
            # client_soc.recv(1024).decode('utf8')
            # print("丢包率:",loss)  # "Packet loss rate:"
        print("发送完毕")  # "Send complete"
        # uploading_file()
    except socket.error as e:
        print("发生错误:", str(e))  # "Error occurred:"
    finally:
        # Close the connection
        client_soc.close()

if __name__ == '__main__':
    filepath = 'C:/Users/yinzhao/Desktop/ftp/'
    filename = 'test.7z'
    i = 0
    while i < 2:
        encoding = "utf8"
        uploading_file(filepath, filename)
        i = i+1
        print(i)
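The header exchange in the two listings above (a 4-byte length, then a JSON header) can be sketched without the "1" acknowledgements. This is a minimal sketch, not the original program: the helper names `recv_exact`, `send_header` and `recv_header` are hypothetical, and it uses network byte order (`!i`) instead of the native `i` so that both ends agree regardless of platform. It also loops on `recv`, since a single `recv(n)` call may return fewer than `n` bytes.

```python
import json
import socket
import struct


def recv_exact(sock, n):
    """Read exactly n bytes; a single recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed before the full message arrived")
        buf.extend(chunk)
    return bytes(buf)


def send_header(sock, file_name, data_len):
    """Send a 4-byte length prefix followed by the JSON header, with no ACK round trip."""
    head_bytes = json.dumps({"data_len": data_len, "file_name": file_name}).encode("utf8")
    sock.sendall(struct.pack("!i", len(head_bytes)) + head_bytes)


def recv_header(sock):
    """Read the length prefix, then exactly that many header bytes, and decode them."""
    (head_len,) = struct.unpack("!i", recv_exact(sock, 4))
    return json.loads(recv_exact(sock, head_len))


if __name__ == "__main__":
    # A connected socket pair stands in for the client and server sockets.
    a, b = socket.socketpair()
    send_header(a, "test.7z", 12345)
    print(recv_header(b))
    a.close()
    b.close()
```

Because the length prefix already tells the receiver where the header ends, the two one-byte replies in the original handshake are not needed for framing.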

Currently supported:

- Any file can be transferred; if the transfer to the server succeeds, the file is not corrupted.

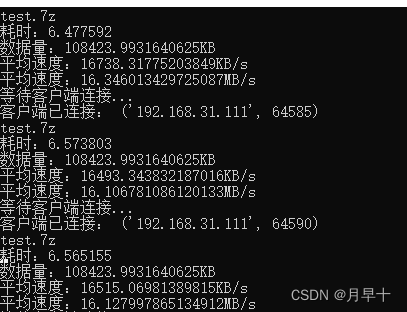

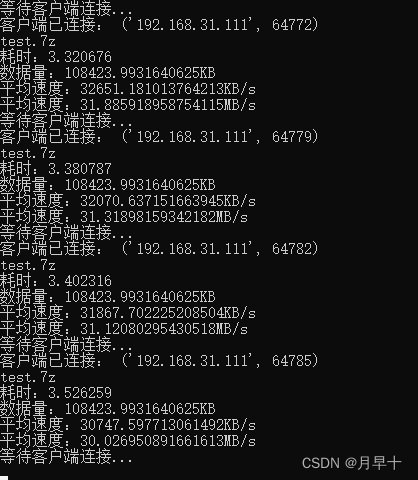

- Transfer rate:

`max_size = 65536*4`

`max_size = 65536*8`

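Testing this code revealed the following problems and characteristics:

1. The larger the maximum amount of data per send, the faster the transfer. Each send used to carry 1024 bytes; it is now `65536*4` bytes.

2. The receive loop contained a lot of statistics, calculation and database code, which takes time.

3. Socket-based TCP does not need manual ACKs from the server, so the server does not have to send replies unless the application requires them. The original program performed many manual confirmations, which took a lot of time.

4. The file is assembled only after it has been fully received and is then written in one go; it could instead be written each time a chunk is received.

5. Everything runs in a single thread; data transfer, statistics and data writing can be split into separate threads to improve execution speed.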
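The suggestion above to write each chunk as it is received, rather than assembling the whole file in memory first, can be sketched as follows. This is an illustrative sketch under assumptions: `receive_to_file` is a hypothetical helper name, and the demo uses a local socket pair and a temporary directory instead of the real client and FTP folder.

```python
import os
import socket
import tempfile
import threading


def receive_to_file(conn, file_path, data_len, max_size=65536 * 8):
    """Receive data_len bytes from conn, writing each chunk to disk as it arrives."""
    received_size = 0
    with open(file_path, "wb") as fw:
        while received_size < data_len:
            chunk = conn.recv(min(max_size, data_len - received_size))
            if not chunk:
                raise ConnectionError("peer closed before the whole file arrived")
            fw.write(chunk)  # no growing in-memory buffer
            received_size += len(chunk)
    return received_size


if __name__ == "__main__":
    payload = os.urandom(1_000_000)
    sender, receiver = socket.socketpair()
    # Send from a second thread so the receiver can drain the socket concurrently.
    t = threading.Thread(target=sender.sendall, args=(payload,))
    t.start()
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "out.bin")
        n = receive_to_file(receiver, path, len(payload))
        with open(path, "rb") as fr:
            assert fr.read() == payload
    t.join()
    sender.close()
    receiver.close()
    print(n)
```

Writing per chunk keeps memory use bounded by `max_size` and avoids repeatedly extending one large `bytes` object.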

Modified code

import socket

import json

import struct

import os

import time

import pymysql.cursors

import datetime

import threading

soc = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

HOST = '192.168.84.1'

PORT = 4101

soc.bind((HOST,PORT))

port_str = str(PORT)

slice_id = int(port_str[:2]) - 20

ueX_str = 'user'

ue_id = int(port_str[3])

ueX_delay = ueX_str + str(ue_id) + '_delay' #

ueX_v = ueX_str + str(ue_id) + '_v' #

ueX_loss = ueX_str + str(ue_id) + '_loss' #

soc.listen(5)

cnx = pymysql.connect(host='localhost', port=3306, user='root', password='123456', db='qt', charset='utf8mb4', connect_timeout=20)

cursor = cnx.cursor()

def up_to_mysql(v, delay, loss):
    # Format each metric to two decimals and update this user's columns
    delay_float = float(delay)
    v_float = float(v)
    loss_float = float(loss)
    formatted_delay = "{:.2f}".format(delay_float)
    formatted_v = "{:.2f}".format(v_float)
    formatted_loss = "{:.2f}".format(loss_float)
    cursor.execute("UPDATE slice_v2 SET " + ueX_v + "= %s," + ueX_delay + "=%s, " + ueX_loss + " =%s WHERE slice_id = %s", [str(formatted_v), str(formatted_delay), str(formatted_loss), slice_id])
    cnx.commit()

def write_file(file_path, data):
    with open(file_path, 'wb') as fw:
        fw.write(data)

# Upload function
def uploading_file():
    ftp_dir = r'/home/lab/changeyaml/test'  # save the transferred name.txt in this folder
    if not os.path.isdir(ftp_dir):
        os.mkdir(ftp_dir)
    head_bytes_len = conn.recv(4)  # struct-packed header length
    head_len = struct.unpack('i', head_bytes_len)[0]  # the actual header length
    # Receive the header itself
    conn.send("1".encode('utf8'))
    head_bytes = conn.recv(head_len)
    conn.send("1".encode('utf8'))
    # Deserialize the header
    head = json.loads(head_bytes)
    file_name = head['file_name']
    print(file_name)
    file_path = os.path.join(ftp_dir, file_name)
    data_len = head['data_len']
    received_size = 0
    data = b''
    max_size = 65536*8
    time_begin = datetime.datetime.now()
    time_rev_begin = datetime.datetime.now()
    time_rev_end = datetime.datetime.now()
    datasize_rev_last = 0
    while received_size < data_len:
        if data_len - received_size > max_size:
            size = max_size
        else:
            size = data_len - received_size
        receive_data = conn.recv(size)  # receive file content
        if not receive_data:
            break  # peer closed the connection early; avoid spinning forever
        data += receive_data
        receive_datalen = len(receive_data)
        received_size += receive_datalen
        time_rev_end = datetime.datetime.now()
        time_gap = time_rev_end - time_rev_begin
        if time_gap.total_seconds() >= 1:
            # Sample the statistics roughly once per second
            time_rev_begin = time_rev_end
            # print((time_rev_end-time_rev_begin).total_seconds)
            data_rev_1s_KB = (received_size-datasize_rev_last)/1024  # KB received in this interval
            # print("data:"+str(data_rev_1s_KB)+'KB')
            # print("speed:"+str(data_rev_1s_KB)+'KB/s')
            # print("speed:"+str(data_rev_1s_KB/1024)+'MB/s')
            # Delay is estimated as the time to receive one max_size chunk at the current rate
            delay = 1/(data_rev_1s_KB*1024/max_size)
            # print("delay:"+str(delay)+'s')
            delay_ms = delay*1000
            print("delay:"+str(delay_ms)+'ms')
            datasize_rev_last = received_size
            # Rate is passed in Mbit/s; the database update runs in its own thread
            up_mysql_thread = threading.Thread(target=up_to_mysql, args=(8*data_rev_1s_KB/1024, delay_ms, 0))
            up_mysql_thread.start()
    time_end = datetime.datetime.now()
    time_transport = time_end-time_begin
    time_transport_s = time_transport.total_seconds()
    print("耗时:"+str(time_transport_s))  # "Elapsed time (s):"
    data_len_KB = data_len/1024
    data_len_MB = data_len/(1024*1024)
    print("数据量:"+str(data_len_KB)+"KB")  # "Data size:"
    v_average = data_len_KB/time_transport_s
    # print("平均速度:"+str(v_average)+'KB/s')
    print("平均速度:"+str(data_len_MB/time_transport_s)+'MB/s')  # "Average speed:"
    # Write the file in a separate thread so the next connection can be accepted sooner
    write_file_thread = threading.Thread(target=write_file, args=(file_path, data))
    write_file_thread.start()

while True:
    encoding = 'utf-8'
    print('等待客户端连接...')  # "Waiting for client connection..."
    conn, addr = soc.accept()
    print('客户端已连接:', addr)  # "Client connected:"
    uploading_file()
    conn.close()

# coding: UTF-8

import socket

import os

import struct

import json

import hashlib

import time

# Upload function
def uploading_file(filepath, filename):
    client_soc = socket.socket()
    client_soc.connect(('192.168.1.21', 4101))
    try:
        encoding = 'utf-8'
        with open(filepath+filename, 'rb') as fr:  # send a fixed file
            data = fr.read()
        head = {'data_len': len(data), 'file_name': filename}  # custom header
        head_bytes = json.dumps(head).encode('utf8')
        head_bytes_len = struct.pack('i', len(head_bytes))
        client_soc.send(head_bytes_len)
        client_soc.recv(20).decode('utf8')
        client_soc.send(head_bytes)
        client_soc.recv(20).decode('utf8')
        print(str(int(len(data))/1024)+'KB')
        # client_soc.send(data)
        max_size = 65536*8
        # max_size = 1024
        # Work out how many chunks the data must be split into
        num_packets = len(data) // max_size
        if len(data) % max_size > 0:
            num_packets += 1
        # Send the data chunk by chunk
        for i in range(num_packets):
            start = i * max_size
            end = start + max_size
            # if(i==num_packets-1):
            #     end=len(data)
            packet = data[start:end]
            # Start timing here
            start = time.perf_counter()
            client_soc.sendall(packet)
        print("发送完毕")  # "Send complete"
        # uploading_file()
    except socket.error as e:
        print("发生错误:", str(e))  # "Error occurred:"
    finally:
        # Close the connection
        client_soc.close()

if __name__ == '__main__':
    filepath = 'C:/Users/QD689/Desktop/ftp1.01/'
    filename = 'name.txt'
    i = 0
    while i < 3:
        encoding = "utf8"
        uploading_file(filepath, filename)
        uploading_file(filepath, filename)
        i = i+1
        print(i)

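After trimming the code, moving work into threads, and choosing a sensible amount of data per send, the FTP file transfer rate improved greatly, and the rate and delay can now be measured correctly.

Test data: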

等待客户端连接...

客户端已连接: ('192.168.31.111', 54250)

name.txt

data:56703.759765625KB

speed:56703.759765625KB/s

max_size=524288

delay:9.029383626698861ms

data:24576.0KB

speed:24576.0KB/s

max_size=524288

delay:20.833333333333332ms

data:19456.0KB

speed:19456.0KB/s

max_size=524288

delay:26.31578947368421ms

data:16384.0KB

speed:16384.0KB/s

max_size=524288

delay:31.25ms

data:13824.0KB

speed:13824.0KB/s

max_size=524288

delay:37.03703703703704ms

data:12800.0KB

speed:12800.0KB/s

max_size=524288

delay:40.0ms

data:11776.0KB

speed:11776.0KB/s

max_size=524288

delay:43.47826086956522ms

data:10752.0KB

speed:10752.0KB/s

max_size=524288

delay:47.61904761904761ms

data:10240.0KB

speed:10240.0KB/s

max_size=524288

delay:50.0ms

data:9728.0KB

speed:9728.0KB/s

max_size=524288

delay:52.63157894736842ms

data:9216.0KB

speed:9216.0KB/s

max_size=524288

delay:55.55555555555555ms

data:8704.0KB

speed:8704.0KB/s

max_size=524288

delay:58.8235294117647ms

data:8704.0KB

speed:8704.0KB/s

max_size=524288

delay:58.8235294117647ms

data:8192.0KB

speed:8192.0KB/s

max_size=524288

delay:62.5ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7168.0KB

speed:7168.0KB/s

max_size=524288

delay:71.42857142857143ms

data:7168.0KB

speed:7168.0KB/s

max_size=524288

delay:71.42857142857143ms

data:7168.0KB

speed:7168.0KB/s

max_size=524288

delay:71.42857142857143ms

data:6656.0KB

speed:6656.0KB/s

max_size=524288

delay:76.92307692307693ms

data:6656.0KB

speed:6656.0KB/s

max_size=524288

delay:76.92307692307693ms

data:6144.0KB

speed:6144.0KB/s

max_size=524288

delay:83.33333333333333ms

耗时:24.30927

数据量:288512.255859375KB

平均速度:11.590239026621157MB/s

等待客户端连接...

客户端已连接: ('192.168.31.111', 54267)

name.txt

data:56832.0KB

speed:56832.0KB/s

max_size=524288

delay:9.00900900900901ms

data:25088.0KB

speed:25088.0KB/s

max_size=524288

delay:20.408163265306122ms

data:19456.0KB

speed:19456.0KB/s

max_size=524288

delay:26.31578947368421ms

data:16384.0KB

speed:16384.0KB/s

max_size=524288

delay:31.25ms

data:14336.0KB

speed:14336.0KB/s

max_size=524288

delay:35.714285714285715ms

data:12288.0KB

speed:12288.0KB/s

max_size=524288

delay:41.666666666666664ms

data:11776.0KB

speed:11776.0KB/s

max_size=524288

delay:43.47826086956522ms

data:11264.0KB

speed:11264.0KB/s

max_size=524288

delay:45.45454545454545ms

data:10240.0KB

speed:10240.0KB/s

max_size=524288

delay:50.0ms

data:9728.0KB

speed:9728.0KB/s

max_size=524288

delay:52.63157894736842ms

data:9216.0KB

speed:9216.0KB/s

max_size=524288

delay:55.55555555555555ms

data:8704.0KB

speed:8704.0KB/s

max_size=524288

delay:58.8235294117647ms

data:8704.0KB

speed:8704.0KB/s

max_size=524288

delay:58.8235294117647ms

data:8192.0KB

speed:8192.0KB/s

max_size=524288

delay:62.5ms

data:8192.0KB

speed:8192.0KB/s

max_size=524288

delay:62.5ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7168.0KB

speed:7168.0KB/s

max_size=524288

delay:71.42857142857143ms

data:7168.0KB

speed:7168.0KB/s

max_size=524288

delay:71.42857142857143ms

data:6656.0KB

speed:6656.0KB/s

max_size=524288

delay:76.92307692307693ms

data:6656.0KB

speed:6656.0KB/s

max_size=524288

delay:76.92307692307693ms

data:6656.0KB

speed:6656.0KB/s

max_size=524288

delay:76.92307692307693ms

data:6144.0KB

speed:6144.0KB/s

max_size=524288

delay:83.33333333333333ms

耗时:24.16348

数据量:288512.255859375KB

平均速度:11.660168562751346MB/s

等待客户端连接...

客户端已连接: ('192.168.31.111', 54284)

name.txt

data:56895.9599609375KB

speed:56895.9599609375KB/s

max_size=524288

delay:8.99888147333341ms

data:25088.0KB

speed:25088.0KB/s

max_size=524288

delay:20.408163265306122ms

data:19456.0KB

speed:19456.0KB/s

max_size=524288

delay:26.31578947368421ms

data:16384.0KB

speed:16384.0KB/s

max_size=524288

delay:31.25ms

data:14336.0KB

speed:14336.0KB/s

max_size=524288

delay:35.714285714285715ms

data:12800.0KB

speed:12800.0KB/s

max_size=524288

delay:40.0ms

data:11776.0KB

speed:11776.0KB/s

max_size=524288

delay:43.47826086956522ms

data:10752.0KB

speed:10752.0KB/s

max_size=524288

delay:47.61904761904761ms

data:10240.0KB

speed:10240.0KB/s

max_size=524288

delay:50.0ms

data:9728.0KB

speed:9728.0KB/s

max_size=524288

delay:52.63157894736842ms

data:9216.0KB

speed:9216.0KB/s

max_size=524288

delay:55.55555555555555ms

data:8704.0KB

speed:8704.0KB/s

max_size=524288

delay:58.8235294117647ms

data:8704.0KB

speed:8704.0KB/s

max_size=524288

delay:58.8235294117647ms

data:8192.0KB

speed:8192.0KB/s

max_size=524288

delay:62.5ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7680.0KB

speed:7680.0KB/s

max_size=524288

delay:66.66666666666667ms

data:7168.0KB

speed:7168.0KB/s

max_size=524288

delay:71.42857142857143ms

data:512.0KB

speed:512.0KB/s

max_size=524288

delay:1000.0ms

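Problem: the reported delay keeps growing over time.

Cause: the file used in this test was a 281 MB text document. When a large file is transferred, the transfer rate drops as time goes on, and because this method derives delay from the amount of data received per second, the reported delay rises accordingly. The effect is more pronounced when the link bandwidth is limited.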
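The delay in the log is derived from throughput rather than measured directly: the server code computes `delay = 1/(data_rev_1s_KB*1024/max_size)`, which is `max_size` divided by the bytes received in the last second. A small sketch of that arithmetic follows; the function name `estimate_delay_ms`, the `window_s` parameter and the zero guard are additions for illustration and are not part of the original program.

```python
def estimate_delay_ms(bytes_in_window, window_s=1.0, max_size=65536 * 8):
    """Time needed to receive one max_size chunk at the measured rate, in ms.

    Returns None when nothing was received, instead of dividing by zero.
    """
    if bytes_in_window <= 0 or window_s <= 0:
        return None
    bytes_per_s = bytes_in_window / window_s
    return max_size / bytes_per_s * 1000


if __name__ == "__main__":
    # Per-second amounts taken from the test log, in KB
    for kb in (56832.0, 16384.0, 6144.0):
        print(kb, estimate_delay_ms(kb * 1024))
```

For the logged rates of 56832 KB/s, 16384 KB/s and 6144 KB/s this gives roughly 9.01 ms, 31.25 ms and 83.33 ms, the same values as in the log. The reported delay is therefore inversely proportional to throughput, so it rises whenever the rate falls.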

- Moving the print calls and related statistics into a separate thread gives almost the same result:

import socket

import json

import struct

import os

import time

import pymysql.cursors

import datetime

import threading

soc = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

HOST = '192.168.31.111'

PORT = 4101

soc.bind((HOST,PORT))

port_str = str(PORT)

slice_id = int(port_str[:2]) - 20

ueX_str = 'user'

ue_id = int(port_str[3])

ueX_delay = ueX_str + str(ue_id) + '_delay' #

ueX_v = ueX_str + str(ue_id) + '_v' #

ueX_loss = ueX_str + str(ue_id) + '_loss' #

soc.listen(5)

cnx = pymysql.connect(host='localhost', port=3306, user='root', password='123456', db='qt', charset='utf8mb4', connect_timeout=20)

cursor = cnx.cursor()

def up_to_mysql(v, delay, loss):
    # Format each metric to two decimals and update this user's columns
    delay_float = float(delay)
    v_float = float(v)
    loss_float = float(loss)
    formatted_delay = "{:.2f}".format(delay_float)
    formatted_v = "{:.2f}".format(v_float)
    formatted_loss = "{:.2f}".format(loss_float)
    cursor.execute("UPDATE slice_v2 SET " + ueX_v + "= %s," + ueX_delay + "=%s, " + ueX_loss + " =%s WHERE slice_id = %s", [str(formatted_v), str(formatted_delay), str(formatted_loss), slice_id])
    cnx.commit()

def write_file(file_path, data):
    with open(file_path, 'wb') as fw:
        fw.write(data)

# Globals shared between threads
data_rev_1s_KB = 0
delay_ms = 0
datasize_rev_last = 0
max_size = 65536*8
time_rev_begin = datetime.datetime.now()
time_rev_end = datetime.datetime.now()
received_size = 0

def get_information():
    global time_rev_begin, time_rev_end
    global data_rev_1s_KB, delay_ms
    global datasize_rev_last
    global max_size
    global received_size
    time_rev_begin = time_rev_end
    # print((time_rev_end-time_rev_begin).total_seconds)
    data_rev_1s_KB = (received_size-datasize_rev_last)/1024  # KB received in this interval
    print("data:"+str(data_rev_1s_KB)+'KB')
    print("speed:"+str(data_rev_1s_KB)+'KB/s')
    print("max_size="+str(max_size))
    # print("speed:"+str(data_rev_1s_KB/1024)+'MB/s')
    # Delay is estimated as the time to receive one max_size chunk at the current rate
    delay = 1/(data_rev_1s_KB*1024/max_size)
    # print("delay:"+str(delay)+'s')
    delay_ms = delay*1000
    print("delay:"+str(delay_ms)+'ms')
    datasize_rev_last = received_size

# Upload function
def uploading_file():
    global time_rev_begin, time_rev_end
    global data_rev_1s_KB, delay_ms
    global datasize_rev_last
    global max_size
    global received_size
    ftp_dir = r'C:\Users\yinzhao\Desktop\ftp\ftpseverfile'
    # ftp_dir = r'/home/lab/changeyaml/test'  # save the transferred name.txt in this folder
    if not os.path.isdir(ftp_dir):
        os.mkdir(ftp_dir)
    head_bytes_len = conn.recv(4)  # struct-packed header length
    head_len = struct.unpack('i', head_bytes_len)[0]  # the actual header length
    # Receive the header itself
    conn.send("1".encode('utf8'))
    head_bytes = conn.recv(head_len)
    conn.send("1".encode('utf8'))
    # Deserialize the header
    head = json.loads(head_bytes)
    file_name = head['file_name']
    print(file_name)
    file_path = os.path.join(ftp_dir, file_name)
    data_len = head['data_len']
    received_size = 0
    data = b''
    max_size = 65536*8
    time_begin = datetime.datetime.now()
    time_rev_begin = datetime.datetime.now()
    time_rev_end = datetime.datetime.now()
    datasize_rev_last = 0  # reset per upload so the first sample is not skewed by the previous transfer
    while received_size < data_len:
        if data_len - received_size > max_size:
            size = max_size
        else:
            size = data_len - received_size
        receive_data = conn.recv(size)  # receive file content
        if not receive_data:
            break  # peer closed the connection early; avoid spinning forever
        data += receive_data
        receive_datalen = len(receive_data)
        received_size += receive_datalen
        time_rev_end = datetime.datetime.now()
        time_gap = time_rev_end-time_rev_begin
        if time_gap.total_seconds() >= 1:
            get_information_thread = threading.Thread(target=get_information)
            get_information_thread.start()
            # Note: the arguments below are read right after the thread starts,
            # so they may still hold the previous interval's values.
            up_mysql_thread = threading.Thread(target=up_to_mysql, args=(8*data_rev_1s_KB/1024, delay_ms, 0))
            up_mysql_thread.start()
    time_end = datetime.datetime.now()
    time_transport = time_end-time_begin
    time_transport_s = time_transport.total_seconds()
    print("耗时:"+str(time_transport_s))  # "Elapsed time (s):"
    data_len_KB = data_len/1024
    data_len_MB = data_len/(1024*1024)
    print("数据量:"+str(data_len_KB)+"KB")  # "Data size:"
    v_average = data_len_KB/time_transport_s
    # print("平均速度:"+str(v_average)+'KB/s')
    print("平均速度:"+str(data_len_MB/time_transport_s)+'MB/s')  # "Average speed:"
    write_file_thread = threading.Thread(target=write_file, args=(file_path, data))
    write_file_thread.start()

while True:
    encoding = 'utf-8'
    print('等待客户端连接...')  # "Waiting for client connection..."
    conn, addr = soc.accept()
    print('客户端已连接:', addr)  # "Client connected:"
    uploading_file()
    conn.close()
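The listing above starts a new thread for every sample, and those threads share one database cursor. One alternative, shown here only as a sketch, is a single long-lived worker thread that takes samples from a `queue.Queue`, so that all handling happens in order on one thread. The names `start_worker` and `handle_sample` are hypothetical; in the real program `handle_sample` would be something like `up_to_mysql`, and the sharing concern assumes the driver reports DB-API thread-safety level 1 (connections not shareable between threads), which is what PyMySQL declares.

```python
import queue
import threading


def start_worker(handle_sample):
    """Start one daemon thread that calls handle_sample(*item) for each queued item.

    Putting None on the queue stops the worker.
    """
    q = queue.Queue()

    def run():
        while True:
            item = q.get()
            if item is None:  # sentinel: shut down
                q.task_done()
                break
            try:
                handle_sample(*item)
            finally:
                q.task_done()

    t = threading.Thread(target=run, daemon=True)
    t.start()
    return q, t


if __name__ == "__main__":
    seen = []
    # The lambda stands in for a database update such as up_to_mysql(v, delay, loss).
    q, t = start_worker(lambda v, delay, loss: seen.append((v, delay, loss)))
    q.put((444.0, 9.01, 0))
    q.put((128.0, 31.25, 0))
    q.put(None)
    t.join()
    print(seen)
```

The receive loop then only calls `q.put(...)`, which is cheap, and each sample carries its own values instead of reading shared globals that another thread may not have updated yet.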
