Scraping all data from the official website of the School of Mathematics and Computational Science, Huaihua University

import os
import random
import re
import time

import requests
from bs4 import BeautifulSoup

# Links that have already been visited
pages = set()

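# Complete a relative link into an absolute URL
def completion(newUrl):
    # Absolute links are returned unchanged; relative ones get the site root prepended
    if not newUrl.startswith("http"):
        newUrl = "http://math.hhtc.edu.cn/" + newUrl.lstrip("/")
    return newUrl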

def downloadImg(html):
    # Save every image whose src contains "uploads" to the local image folder
    soup = BeautifulSoup(html, 'lxml')
    folder = os.path.join(os.getcwd(), "coment", "图片")
    os.makedirs(folder, exist_ok=True)
    for tag in soup.find_all(src=re.compile("uploads")):
        src = tag.get('src')
        # Relative paths are resolved against the site root
        if 'http://' in src:
            img = src
        else:
            img = 'http://math.hhtc.edu.cn/' + src.lstrip('/')
        try:
            # The timeout keeps one slow image from stalling the crawl
            pic = requests.get(img, timeout=10)
        except requests.exceptions.RequestException:
            # Skip this image instead of writing an undefined response
            print("Could not download image: " + img)
            continue
        # Use the last path segment of the URL as the file name
        with open(os.path.join(folder, img.split('/')[-1]), 'wb') as fp:
            fp.write(pic.content)

def downloadTxt(html, title0):
    """
    title0: the page title, used as the file name. A title that contains
    double quotes, such as "不忘初心", produces an invalid save path, so the
    double quotes are replaced with single quotes.
    """
    title0 = str(title0).replace("\"", "'")
    # Blank pages raise an exception below and are reported instead of saved
    try:
        soup = BeautifulSoup(html, 'lxml')
        # 1. Get the title; some pages have none
        title = soup.find_all(name='td', attrs={"style": "text-align:center; font-weight:bold; font-size:24px; padding-top:2px;"})
        if len(title) != 0:
            title1 = "Title: " + title[0].get_text()
        else:
            title1 = "Title: Null"
        # 2. Get the author, view count, publication time and source
        author = soup.find_all(name="td", attrs={"style": "text-align:center; border-bottom:1px dotted #0B476C"})
        if len(author) != 0:
            author1 = "".join(author[0].get_text().split())
        else:
            author1 = "Author, views, publication time, source: Null"
        # 3. Get the body text, keeping only Chinese characters, common punctuation and numbers
        text = soup.select("#maindiv > table:nth-child(4) > tr")
        pattern = re.compile(r"[\u4e00-\u9fa5]+|[\(\)\《\》\——\;\,\。\“\”\!]+|-?\d+")
        text1 = ''.join(pattern.findall(text[0].get_text()))
        print(text1)
        # Break the text into lines of at most 60 characters
        text1 = "\n".join(text1[i:i + 60] for i in range(0, len(text1), 60))
        # Combine the title, author line and body into one string
        word = title1 + "\n" + author1 + "\n" + text1
        # Write the text file; word is a str, so text mode is used instead of "wb"
        folder = os.path.join(os.getcwd(), "coment", "源码和内容")
        os.makedirs(folder, exist_ok=True)
        with open(os.path.join(folder, title0 + ".txt"), "w", encoding="utf-8") as file1:
            file1.write(word)
    except Exception as exc:
        print("This page is blank or could not be parsed:", exc)

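def downloadHtml(html, title):
    """
    html: the raw page source as bytes.
    title: the page title, used as the file name. A title that contains
    double quotes, such as "不忘初心", produces an invalid save path, so the
    double quotes are replaced with single quotes.
    """
    title = str(title).replace("\"", "'")
    folder = os.path.join(os.getcwd(), "coment", "源码和内容")
    os.makedirs(folder, exist_ok=True)
    # html is bytes, so the file is opened in binary mode
    with open(os.path.join(folder, title + ".html"), "wb") as file1:
        file1.write(html)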
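The two download functions above only replace double quotes in the title before using it as a file name. Other characters that Windows rejects in file names (such as `/`, `:` or `?`) would still make `open` fail. A minimal sketch of a more general cleanup follows; `safe_filename`, `replacement` and `max_length` are hypothetical names that do not appear in the original script.

```python
import re

# Characters that Windows does not allow in file names, plus line breaks and tabs.
_INVALID = re.compile(r'[\\/:*?"<>|\r\n\t]')


def safe_filename(title, replacement="_", max_length=100):
    """Return title with characters that are invalid in Windows file names replaced.

    Hypothetical helper, not part of the original script.
    """
    cleaned = _INVALID.sub(replacement, str(title)).strip(" .")
    # Fall back to a fixed name when nothing usable is left.
    return cleaned[:max_length] or "untitled"
```

Under that assumption, `downloadHtml` and `downloadTxt` could call `safe_filename(title)` in place of the `replace` call.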

# Scrape the data of one specific page
def specificPage(newUrl):
    print(newUrl + ": scraping data")
    try:
        agentsList = [
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
            "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0E; .NET4.0C)"
        ]
        # Pick a random User-Agent header
        user_agent = random.choice(agentsList)
        headers = {"User-Agent": user_agent}
        # Fetch the page source
        html_data = requests.get(url=newUrl, headers=headers, timeout=10)
        time.sleep(0.5)
        html = html_data.content
        soup = BeautifulSoup(html, 'lxml')
        try:
            title = soup.title.get_text()
            # The title looks like "学术交流 » 怀化学院数学与计算科学学院", so keep the part before "»".
            # Special case: the home page title is just "怀化学院数学与计算科学学院".
            if "»" in title:
                title = title.split("»")[0]
            title = title.replace(" ", "")
            # Two pages can share a title, so the query part of the URL
            # (for example "list-??") is appended to keep file names unique.
            try:
                url = newUrl.split("?")[1]
            except IndexError:
                url = ""
            url = url.split(".")[0]
            title = title + url
            # Save the page source first, then the images, then the text
            downloadHtml(html, title)
            downloadImg(html)
            downloadTxt(html, title)
        except Exception as a:
            print(a)
    except Exception as exc:
        print('There was a problem: %s' % (exc))

# Collect every link on a page, complete the relative ones, and crawl them recursively
def hrefList(url):
    global pages
    try:
        rule = "index.php"
        agentsList = [
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
            "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0E; .NET4.0C)"
        ]
        # Pick a random User-Agent header
        user_agent = random.choice(agentsList)
        headers = {"User-Agent": user_agent}
        # Fetch the page source
        html_data = requests.get(url=url, headers=headers, timeout=10)
        # Pause briefly between requests to imitate a human visitor
        time.sleep(0.5)
        # Only parse the page if the request succeeded
        if html_data.status_code == 200:
            html = html_data.content
            h = BeautifulSoup(html, 'lxml')
            for a in h.find_all('a', href=re.compile(rule)):
                href = a.get('href')
                # Skip empty links, the "首 页" (home) link, and links already visited
                if not href or a.string == "首 页" or href in pages:
                    continue
                # Skip download pages and picture-list pages
                if "down" in href or "piclist" in href:
                    continue
                if "http://" in href:
                    # Absolute links must belong to the school's site, whose URLs contain "math"
                    if "math" not in href:
                        continue
                    newUrl = href
                else:
                    # Complete relative links with the site root
                    newUrl = "http://math.hhtc.edu.cn/" + href
                pages.add(href)
                # Scrape the page itself, then follow its links
                specificPage(newUrl)
                hrefList(newUrl)
        else:
            print("Page did not respond, status code: %s" % html_data.status_code)
    except Exception as a:
        print("Crawl stopped:", a)

# Create the folders that store the scraped data
path = os.path.join(os.getcwd(), "coment")
print(path)
for name in ["图片", "源码和内容"]:
    print(os.path.join(path, name))
    # exist_ok avoids an error when the folder already exists
    os.makedirs(os.path.join(path, name), exist_ok=True)

specificPage('http://math.hhtc.edu.cn')
hrefList('http://math.hhtc.edu.cn')
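`hrefList` calls itself for every new link, so a long chain of pages can reach Python's recursion limit, and relative links are completed by plain string concatenation. The sketch below shows one possible alternative: a breadth-first crawl with an explicit queue, with links resolved by `urllib.parse.urljoin`. The names `crawl`, `extract_links`, `fetch` and `max_pages` are assumptions made for illustration, and the regular expression is a simplified stand-in for the BeautifulSoup link extraction used in the script.

```python
import re
from collections import deque
from urllib.parse import urljoin, urlparse


def extract_links(base_url, html):
    """Return absolute URLs for every href in html, resolved against base_url."""
    hrefs = re.findall(r'href=["\']([^"\']+)["\']', html)
    return [urljoin(base_url, h) for h in hrefs]


def crawl(start_url, fetch, max_pages=500):
    """Breadth-first crawl restricted to the host of start_url.

    fetch is any callable that maps a URL to HTML text, or None on failure.
    Returns the successfully fetched URLs in visiting order.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        for link in extract_links(url, html):
            # Stay on the same host and never queue a URL twice.
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited
```

With `requests`, `fetch` could be something like `lambda u: requests.get(u, timeout=10).text`, and the per-page work done by `specificPage` would move into the loop body. Because `fetch` is passed in, the traversal can be tried against a dictionary of fake pages before touching the real site.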

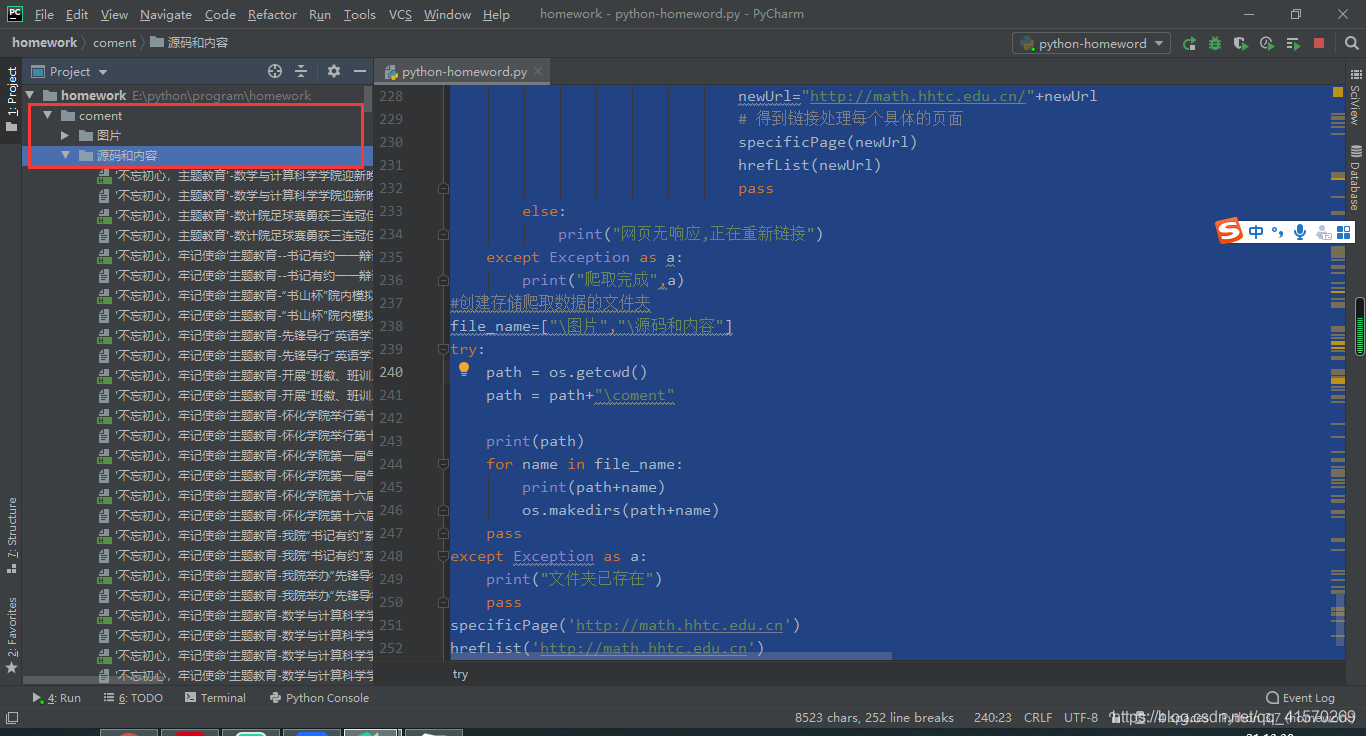

The final result looks like this (all images, text, and downloaded files are included):
