转换和增强图像https://pytorch.org/vision/stable/transforms.html

变换是常见的图像变换,torchvision.transforms module转换模块。可以使用Compose将它们链接在一起。大多数变换同时接受PIL像和张量像,尽管有些变换是只PIL的,有些是只张量的。转换变换可用于转换到和从PIL图像。

接受张量像的变换也接受成批的张量像。张量图像是一个形状为(C, H, W)的张量,其中C是通道数,H和W是图像的高度和宽度。一批张量图像是(B, C, H, W)形状的张量,其中B是批图像的个数。

随机转换将对给定批处理的所有图像应用相同的转换,但它们将在调用之间产生不同的转换。对于跨调用的可重现转换,可以使用函数转换。

编写脚本的转换

In order to script the transformations, please use torch.nn.Sequential instead of Compose.

transforms = torch.nn.Sequential(

transforms.CenterCrop(10),

transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),

)

scripted_transforms = torch.jit.script(transforms)

Make sure to use only scriptable transformations, i.e. that work with torch.Tensor and does not require lambda functions or PIL.Image.

For any custom transformations to be used with torch.jit.script, they should be derived from torch.nn.Module

Compositions of transforms

torchvision.transforms.Compose(transforms)

将几个变换组合在一起。这个转换不支持torchscript。请参阅下面的说明。

Parameters

transforms (list of Transform objects) – list of transforms to compose.

>>> transforms.Compose([

>>> transforms.CenterCrop(10),

>>> transforms.PILToTensor(),

>>> transforms.ConvertImageDtype(torch.float),

>>> ])

Transforms on PIL Image and torch.*Tensor

1、CenterCrop(size),Crops the given image at the center,对给定的图像进行中间裁切

torchvision.transforms.CenterCrop(size)

Parameters

size (sequence or int) – Desired output size of the crop. If size is an int instead of sequence like (h, w), a square crop (size, size) is made. If provided a sequence of length 1, it will be interpreted as (size[0], size[0]).

forward(img)[SOURCE]

Parameters

img (PIL Image or Tensor) – Image to be cropped.

Returns

Cropped image.

Return type

PIL Image or Tensor

import torch.nn as nn

transforms = torch.nn.Sequential(

T.RandomCrop(224),

T.RandomHorizontalFlip(p=0.3),

)

device = 'cuda' if torch.cuda.is_available() else 'cpu'

dog1 = dog1.to(device)

dog2 = dog2.to(device)

transformed_dog1 = transforms(dog1)

transformed_dog2 = transforms(dog2)

show([transformed_dog1, transformed_dog2])

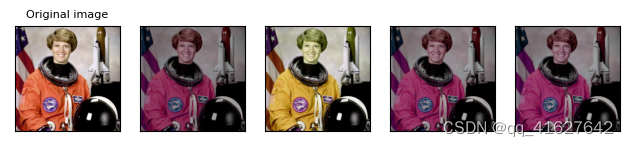

2、ColorJitter([brightness, contrast, …]),Randomly change the brightness, contrast, saturation and hue of an image.随机改变亮度,对比度,饱和度和色调的图像

torchvision.transforms.ColorJitter(brightness=0, contrast=0, saturation=0, hue=0)

Parameters

brightness (float or tuple of python:float (min, max)) – How much to jitter brightness. brightness_factor is chosen uniformly from [max(0, 1 - brightness), 1 + brightness] or the given [min, max]. Should be non negative numbers.

contrast (float or tuple of python:float (min, max)) – How much to jitter contrast. contrast_factor is chosen uniformly from [max(0, 1 - contrast), 1 + contrast] or the given [min, max]. Should be non negative numbers.

saturation (float or tuple of python:float (min, max)) – How much to jitter saturation. saturation_factor is chosen uniformly from [max(0, 1 - saturation), 1 + saturation] or the given [min, max]. Should be non negative numbers.

hue (float or tuple of python:float (min, max)) – How much to jitter hue. hue_factor is chosen uniformly from [-hue, hue] or the given [min, max]. Should have 0<= hue <= 0.5 or -0.5 <= min <= max <= 0.5.

jitter = T.ColorJitter(brightness=.5, hue=.3)

jitted_imgs = [jitter(orig_img) for _ in range(4)]

plot(jitted_imgs)

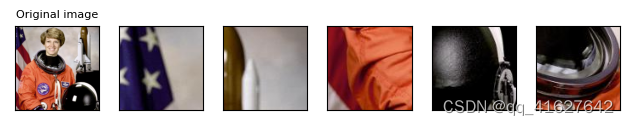

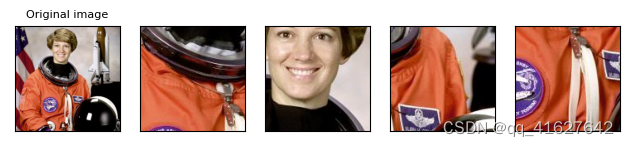

3、FiveCrop(size),Crop the given image into four corners and the central crop,将给定的图像裁剪成四个角和中心裁剪

torchvision.transforms.FiveCrop(size)

top_left, top_right, bottom_left, bottom_right, center) = T.FiveCrop(size=(100, 100))(orig_img)

plot([top_left, top_right, bottom_left, bottom_right, center])

4、Grayscale([num_output_channels]),Convert image to grayscale,将图像转换为灰度

gray_img = T.Grayscale()(orig_img)

plot([gray_img], cmap='gray')

5、Pad(padding[, fill, padding_mode]),Pad the given image on all sides with the given “pad” value.用给定的“填充”值在所有的边填充给定的图像

padded_imgs = [T.Pad(padding=padding)(orig_img) for padding in (3, 10, 30, 50)]

plot(padded_imgs)

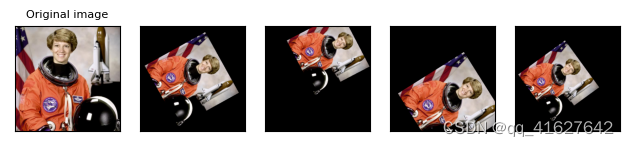

6、RandomAffine(degrees[, translate, scale, …]),Random affine transformation of the image keeping center invariant.图像中心保持不变的随机仿射变换

torchvision.transforms.RandomAffine(degrees, translate=None, scale=None, shear=None, interpolation=<InterpolationMode.NEAREST: 'nearest'>, fill=0, fillcolor=None, resample=None, center=None)

Parameters

degrees (sequence or number) – Range of degrees to select from. If degrees is a number instead of sequence like (min, max), the range of degrees will be (-degrees, +degrees). Set to 0 to deactivate rotations.

translate (tuple, optional) – tuple of maximum absolute fraction for horizontal and vertical translations. For example translate=(a, b), then horizontal shift is randomly sampled in the range -img_width * a < dx < img_width * a and vertical shift is randomly sampled in the range -img_height * b < dy < img_height * b. Will not translate by default.

scale (tuple, optional) – scaling factor interval, e.g (a, b), then scale is randomly sampled from the range a <= scale <= b. Will keep original scale by default.

shear (sequence or number, optional) – Range of degrees to select from. If shear is a number, a shear parallel to the x axis in the range (-shear, +shear) will be applied. Else if shear is a sequence of 2 values a shear parallel to the x axis in the range (shear[0], shear[1]) will be applied. Else if shear is a sequence of 4 values, a x-axis shear in (shear[0], shear[1]) and y-axis shear in (shear[2], shear[3]) will be applied. Will not apply shear by default.

interpolation (InterpolationMode) – Desired interpolation enum defined by torchvision.transforms.InterpolationMode. Default is InterpolationMode.NEAREST. If input is Tensor, only InterpolationMode.NEAREST, InterpolationMode.BILINEAR are supported. For backward compatibility integer values (e.g. PIL.Image.NEAREST) are still acceptable.

fill (sequence or number) – Pixel fill value for the area outside the transformed image. Default is 0. If given a number, the value is used for all bands respectively.

fillcolor (sequence or number, optional) –

affine_transfomer = T.RandomAffine(degrees=(30, 70), translate=(0.1, 0.3), scale=(0.5, 0.75))

affine_imgs = [affine_transfomer(orig_img) for _ in range(4)]

plot(affine_imgs)

7、 RandomApply(transforms[, p]),Apply randomly a list of transformations with a given probability.以给定的概率随机应用一组转换。

torchvision.transforms.RandomApply(transforms, p=0.5)

applier = T.RandomApply(transforms=[T.RandomCrop(size=(64, 64))], p=0.5)

transformed_imgs = [applier(orig_img) for _ in range(4)]

plot(transformed_imgs)

RandomCrop(size[, padding, pad_if_needed, …]),Crop the given image at a random location.在一个随机的位置裁剪给定的图像。

torchvision.transforms.RandomCrop(size, padding=None, pad_if_needed=False, fill=0, padding_mode=‘constant’)

Parameters

size (sequence or int) – Desired output size of the crop. If size is an int instead of sequence like (h, w), a square crop (size, size) is made. If provided a sequence of length 1, it will be interpreted as (size[0], size[0]).

padding (int or sequence, optional) –

Optional padding on each border of the image. Default is None. If a single int is provided this is used to pad all borders. If sequence of length 2 is provided this is the padding on left/right and top/bottom respectively. If a sequence of length 4 is provided this is the padding for the left, top, right and bottom borders respectively.

NOTE

In torchscript mode padding as single int is not supported, use a sequence of length 1: [padding, ].

pad_if_needed (boolean) – It will pad the image if smaller than the desired size to avoid raising an exception. Since cropping is done after padding, the padding seems to be done at a random offset.

fill (number or str or tuple) – Pixel fill value for constant fill. Default is 0. If a tuple of length 3, it is used to fill R, G, B channels respectively. This value is only used when the padding_mode is constant. Only number is supported for torch Tensor. Only int or str or tuple value is supported for PIL Image.

padding_mode (str) –

Type of padding. Should be: constant, edge, reflect or symmetric. Default is constant.

constant: pads with a constant value, this value is specified with fill

edge: pads with the last value at the edge of the image. If input a 5D torch Tensor, the last 3 dimensions will be padded instead of the last 2

reflect: pads with reflection of image without repeating the last value on the edge. For example, padding [1, 2, 3, 4] with 2 elements on both sides in reflect mode will result in [3, 2, 1, 2, 3, 4, 3, 2]

symmetric: pads with reflection of image repeating the last value on the edge. For example, padding [1, 2, 3, 4] with 2 elements on both sides in symmetric mode will result in [2, 1, 1, 2, 3, 4, 4, 3]

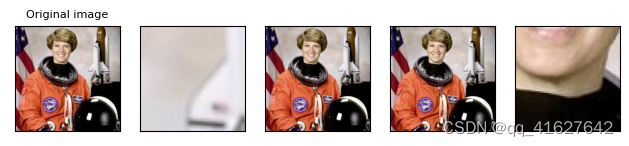

cropper = T.RandomCrop(size=(128, 128))

crops = [cropper(orig_img) for _ in range(4)]

plot(crops)

RandomGrayscale([p]),Randomly convert image to grayscale with a probability of p (default 0.1).随机将图像转换为灰度,概率为p(默认为0.1)。

RandomHorizontalFlip([p]),Horizontally flip the given image randomly with a given probability.以给定的概率水平随机翻转给定的图像。

RandomPerspective([distortion_scale, p, …]),Performs a random perspective transformation of the given image with a given probability.以给定的概率对给定的图像进行随机的透视变换。

RandomResizedCrop(size[, scale, ratio, …]),Crop a random portion of image and resize it to a given size.裁剪图像的随机部分,并将其调整到给定的大小。

RandomRotation(degrees[, interpolation, …]),Rotate the image by angle.旋转图像的角度。

RandomVerticalFlip([p]),Vertically flip the given image randomly with a given probability.按照给定的概率,垂直随机翻转给定的图像。

Resize(size[, interpolation, max_size, …]),Resize the input image to the given size.将输入图像的大小调整为给定的大小。

TenCrop(size[, vertical_flip]),Crop the given image into four corners and the central crop plus the flipped version of these (horizontal flipping is used by default).将给定的图像裁剪为四个角,中央裁剪加上这些角的翻转版本(默认使用水平翻转)。

torchvision.transforms.TenCrop(size, vertical_flip=False)

>>> transform = Compose([

>>> TenCrop(size), # this is a list of PIL Images

>>> Lambda(lambda crops: torch.stack([ToTensor()(crop) for crop in crops])) # returns a 4D tensor

>>> ])

>>> #In your test loop you can do the following:

>>> input, target = batch # input is a 5d tensor, target is 2d

>>> bs, ncrops, c, h, w = input.size()

>>> result = model(input.view(-1, c, h, w)) # fuse batch size and ncrops

>>> result_avg = result.view(bs, ncrops, -1).mean(1) # avg over crops

GaussianBlur(kernel_size[, sigma]),Blurs image with randomly chosen Gaussian blur.模糊图像随机选择高斯模糊

RandomInvert([p]),Inverts the colors of the given image randomly with a given probability.以给定的概率随机反转给定图像的颜色。

RandomPosterize(bits[, p]),Posterize the image randomly with a given probability by reducing the number of bits for each color channel.通过减少每个颜色通道的比特数,以给定的概率随机分离图像。

RandomSolarize(threshold[, p]),Solarize the image randomly with a given probability by inverting all pixel values above a threshold.通过倒置高于阈值的所有像素值,以给定的概率随机地对图像进行日光浴。

RandomAdjustSharpness(sharpness_factor[, p]),Adjust the sharpness of the image randomly with a given probability.根据给定的概率随机调整图像的锐度。

RandomAutocontrast([p]),Autocontrast the pixels of the given image randomly with a given probability.以给定的概率随机地自动对比给定图像的像素。

RandomEqualize([p]),Equalize the histogram of the given image randomly with a given probability.以给定的概率随机均衡给定图像的直方图。

Transforms on PIL Image only,RandomChoice(transforms[, p])

Apply single transformation randomly picked from a list.,RandomOrder(transforms)

Apply a list of transformations in a random order.

Transforms on torch.*Tensor only

LinearTransformation(transformation_matrix, …)Transform a tensor image with a square transformation matrix and a mean_vector computed offline.用平方变换矩阵和脱机计算的均值向量对张量图像进行变换。

torchvision.transforms.LinearTransformation(transformation_matrix, mean_vector)

Parameters

transformation_matrix (Tensor) – tensor [D x D], D = C x H x W

mean_vector (Tensor) – tensor [D], D = C x H x W

forward(tensor: torch.Tensor) → torch.Tensor[SOURCE]

Parameters

tensor (Tensor) – Tensor image to be whitened.

Returns

Transformed image.

Return type

Tensor

Normalize(mean, std[, inplace]),Normalize a tensor image with mean and standard deviation.,用均值和标准差归一化张量像。

orchvision.transforms.Normalize(mean, std, inplace=False)

Parameters

mean (sequence) – Sequence of means for each channel.

std (sequence) – Sequence of standard deviations for each channel.

inplace (bool,optional) – Bool to make this operation in-place.

def preprocess(batch):

transforms = T.Compose(

[

T.ConvertImageDtype(torch.float32),

T.Normalize(mean=0.5, std=0.5), # map [0, 1] into [-1, 1]

T.Resize(size=(520, 960)),

]

)

batch = transforms(batch)

return batch

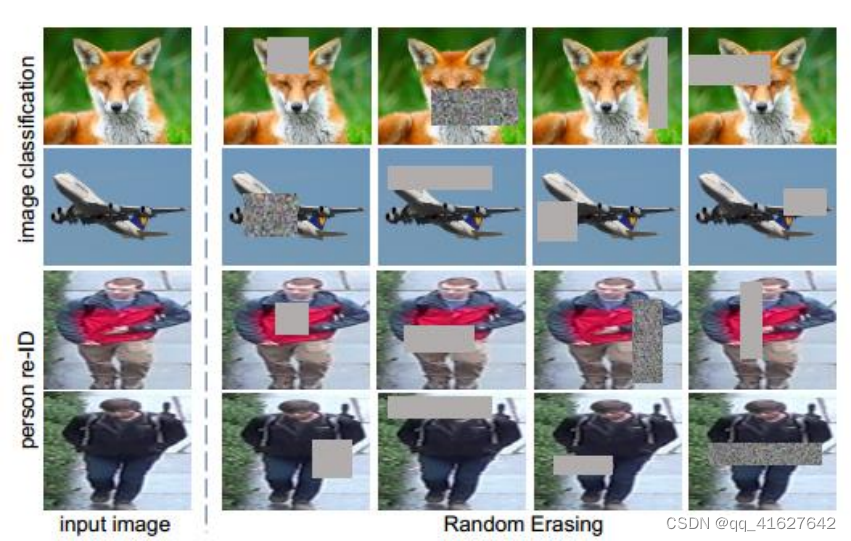

RandomErasing([p, scale, ratio, value, inplace]),Randomly selects a rectangle region in an torch Tensor image and erases its pixels.在火炬张量图像中随机选择一个矩形区域并擦除其像素。

功能:对图像进行随机遮挡

• p:概率值,执行该操作的概率

• scale:遮挡区域的面积

• ratio:遮挡区域长宽比

• value:设置遮挡区域的像素值,(R, G, B) or (Gray)

torchvision.transforms.RandomErasing(p=0.5, scale=(0.02, 0.33), ratio=(0.3, 3.3), value=0, inplace=False)

Parameters

p – probability that the random erasing operation will be performed.执行随机擦除操作的概率。

scale – range of proportion of erased area against input image.擦除区域与输入图像的比例范围。

ratio – range of aspect ratio of erased area.擦除面积的纵横比范围。

value – erasing value. Default is 0. If a single int, it is used to erase all pixels. If a tuple of length 3, it is used to erase R, G, B channels respectively. If a str of ‘random’, erasing each pixel with random values.

inplace – boolean to make this transform inplace. Default set to False.

>>> transform = transforms.Compose([

>>> transforms.RandomHorizontalFlip(),

>>> transforms.PILToTensor(),

>>> transforms.ConvertImageDtype(torch.float),

>>> transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),

>>> transforms.RandomErasing(),

>>> ])

RandomErasing(p=0.5,

scale=(0.02, 0.33),

ratio=(0.3, 3.3),

value=0,

inplace=False)

ConvertImageDtype(dtype),Convert a tensor image to the given dtype and scale the values accordingly This function does not support PIL Image.将张量图像转换为给定的dtype,并相应地缩放值

torchvision.transforms.ConvertImageDtype(dtype: torch.dtype)

258

258

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?