SIFT图像拼接

前言

结合SIFT、单应性变换、Ransac和SVD等算法实现指定几幅图像之间的拼接,并理解其原理。实验用图如图1,我们需要将图像按照(a)-(b)-©-(d)的顺序拼接起来,将图像都变换到同一个像素坐标系。实验原理如图所示:

实验原理图:

二、相关工作

1.SIFT

SIFT即尺度不变特征变换,是用于图像处理领域的一种描述。这种描述具有尺度不变性,可在图像中检测出关键点,是一种局部特征描述子。SIFT算法具有稳定性、不变性、区分性多量性、高速性和可扩展性等优良特点,因此在图像特征提取方面广泛。

SIFT特征检测主要包括以下5个基本步骤:

⦁ 生成高斯金字塔:生成高斯金字塔可以分为两个过程,一是高斯模糊,二是降采样。如图2,Octave表示一幅图像可产生的图像组数。另外,降采样时,高斯金字塔上一组图像的初始图像(底层图像)是由前一组图像的倒数第三张图像隔点采样得到的。

⦁ 尺度空间极值检测:搜索所有尺度上的图像位置。通过高斯微分函数来识别潜在的对于尺度和旋转不变的兴趣点。

⦁ 关键点定位:在每个候选的位置上,通过一个拟合精细的模型来确定位置和尺度。关键点的选择依据于它们的稳定程度。

⦁ 方向确定:基于图像局部的梯度方向,分配给每个关键点位置一个或多个方向。所有后面的对图像数据的操作都相对于关键点的方向、尺度和位置进行变换,从而提供对于这些变换的不变性。

⦁ 关键点描述:在每个关键点周围的邻域内,在选定的尺度上测量图像局部的梯度。这些梯度被变换成一种表示,这种表示允许比较大的局部形状的变形和光照变化。

如果要实现图像之间的特征点匹配,要通过特征描述子集之间比对完成。常见的匹配器有暴力匹配器和快速近似最邻近算法匹配器。暴力匹配器就是将两幅图像中的特征描述子全都匹配一遍,得到最优的结果,它的优点是精度高,但是缺点也是显而易见的,在大量的匹配时,匹配时间会很长。快速近似最邻近算法匹配器,故名思意,它只搜索邻近的点,找到邻近的最优匹配,它的匹配准确度会比暴力匹配器低,但是它的匹配时间大大的缩减了,可用于对匹配准确度要求不高的场合。

网图,金字塔模型

2.RANSAC拟合

RANSAC是Random Sample Consensus的缩写,其基本操作是通过迭代的方法得出一组包含噪声数据的数学模型参数。其基本的思想如下:

1)选定数学模型,准备足够多的点。

2)根据点确定模型参数。

3)判定数据集中其他的点是否符合模型,并进行统计。

4)反复以上操作,直到模型满足最多的点符合模型为止。

RANSAC算法不是一种确定的算法,只能在一定概率下产生结果,这个概率是会随着数据集的增大而增加的。

在RANSAC拟合过程中选取的点不能太过于靠近。理论上说迭代的次数越大它的获取好样本概率就越大,但是考虑到算法力求简单快捷,我们可以根据需要的样本概率等参数来决定迭代次数。

其中,K为需要采样的次数;z为获取一个好样本的概率,一般设为99%; w为点集中内点的比例,一般可以在初始时设置一个较小值,如0.1,然后迭代更新; n为模型参数估计需要的最小点个数,直线拟合最少需要2个点。除了迭代次数之外,我们还要设置一个距离阀值,当点距直线距离小于这个值时,被即为内点(即符合要求的点),当点距离直线的距离大于这个点就称为外点。

在实验中, SIFT算法得到的匹配点中还是存在比较多的误匹配点,我们需要利用RANSAC算法剔除误差比较大的匹配点。

3单应性变换

单应性变换,也常被称为射影变换,可简单理解为世界坐标系中一平面上的点在不同像素坐标系之间的映射关系,对应的矩阵称为单应性矩阵,是一个3*3的非奇异矩阵,经常用H表示。单应性变换用H可表示为:

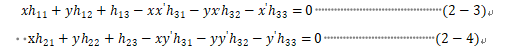

可简要表示为,其中,表示第一幅图像中一点的齐次坐标,表示该点在另一幅图像中的对应点,H为第一幅图到第二幅图的单应性变换矩阵,值得注意的是第二幅图到第一幅图的单应性变换矩阵为。为了求解出单应性矩阵的各个元素,我们将式(1-1)展开,可得到以下两个方程:

由于H是一个齐次方程,我们只需知道他的比率即可。因此将归一化之后,我们只需要求8个未知元素,需要8个非线性方程。一组对应点可以分解出两组方程,应此我们至少需要四组对应点,但是要注意,我们取的四组对应点必须三三不共线,否则方程组没有唯一解。

4.SVD分解

在H的求解过程中,通过RANSAC筛选到的点对不可能完全匹配,所以我们要尽可能把所有筛选到的点都使用上,减少随机误差。假设我们筛选出n对匹配点,那我们需要列出2n个方程,表示为AX=B的形式有:

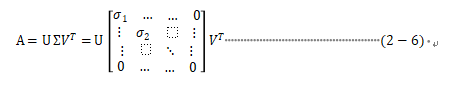

理想状态下,方程应该有唯一解,但是由于随机误差的影响方程很可能无解。因此,我们应该求其极小范数最小二乘解。。通过SVD分解 (2-3)

其中U是一个nn的正交阵,V为一个88的正交阵为一个n*8的矩阵。

表示A的广义逆。可以求解x:

三、实现步骤

1)利用SIFT算法,提取四幅图的特征点和特征描述子,相邻两个图用暴力匹配器进行匹配,生成3个特征点对集合;

2)RANSAC筛选可靠点对;

3)SVD分解求极小范数最小二乘解,分别解出一二幅图,二三幅图,三四幅图的单应性矩阵H12、H23、H34;

将所有图片转换到第三幅图像所在像素平面,进行图像拼接

四、效果

SIFT提取特征点,再通过暴力匹配器匹配后的结果:

RANSAC筛选后的点:

拼接后的图像:

总结

通过实验得到的拼接后的图像效果还行,但是仍然存在两个问题:一是照片的亮度不协调;另一个是还是会有部分拼接的不到位。照片亮度不协调是由于,相机为了呈现好的拍照效果,自动调节参数,导致有的相片亮有的相片暗。拼接部分不到位可能会有两个原因:求解的H有误差,还有就是场景深度差异大,用一个H难以描述。

代码

#include <iostream>

#include <stdio.h>

#include "opencv2/core.hpp"

#include "opencv2/core/utility.hpp"

#include "opencv2/core/ocl.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/features2d.hpp"

#include "opencv2/calib3d.hpp"

#include "opencv2/imgproc.hpp"

#include"opencv2/flann.hpp"

#include"opencv2/features2d.hpp"

#include"opencv2/ml.hpp"

#include <opencv2/imgproc/types_c.h>

#include "opencv2/core/operations.hpp"

#include "opencv2/core/core.hpp"

#include<cmath>

#include <opencv.hpp>

#include "opencv2/features2d.hpp"

#include "opencv2/core/types_c.h"

using namespace cv;

using namespace std;

typedef struct

{

Point2f left_top;

Point2f left_bottom;

Point2f right_top;

Point2f right_bottom;

}four_corners_t;

four_corners_t corners[10];

void goodMatches(vector<DMatch>& matches, Mat& imageDesc1, vector< DMatch >& good_matches, int n);

void fitLineRansac(vector<KeyPoint>& keyPoints1,vector<KeyPoint>& keyPoints2,vector<DMatch>& matches,vector<DMatch>& inlierMatch,int iterations, double sigma);

void FITSVD(vector<KeyPoint>& keyPoints1, vector<KeyPoint>& keyPoints2, vector<DMatch>& matches, Mat& H);

void CalcCorners(const Mat& H, const Mat& src,int i)

{

double v2[] = { 0, 0, 1 };//左上角

double v1[3];//变换后的坐标值

Mat V2 = Mat(3, 1, CV_64FC1, v2); //列向量

Mat V1 = Mat(3, 1, CV_64FC1, v1); //列向量

V1 = H * V2;

//左上角(0,0,1)

cout << "V2: " << V2 << endl;

cout << "V1: " << V1 << endl;

corners[i].left_top.x = v1[0] / v1[2];

corners[i].left_top.y = v1[1] / v1[2];

//左下角(0,src.rows,1)

v2[0] = 0;

v2[1] = src.rows;

v2[2] = 1;

V2 = Mat(3, 1, CV_64FC1, v2); //列向量

V1 = Mat(3, 1, CV_64FC1, v1); //列向量

V1 = H * V2;

corners[i].left_bottom.x = v1[0] / v1[2];

corners[i].left_bottom.y = v1[1] / v1[2];

//右上角(src.cols,0,1)

v2[0] = src.cols;

v2[1] = 0;

v2[2] = 1;

V2 = Mat(3, 1, CV_64FC1, v2); //列向量

V1 = Mat(3, 1, CV_64FC1, v1); //列向量

V1 = H * V2;

corners[i].right_top.x = v1[0] / v1[2];

corners[i].right_top.y = v1[1] / v1[2];

//右下角(src.cols,src.rows,1)

v2[0] = src.cols;

v2[1] = src.rows;

v2[2] = 1;

V2 = Mat(3, 1, CV_64FC1, v2); //列向量

V1 = Mat(3, 1, CV_64FC1, v1); //列向量

V1 = H * V2;

corners[i].right_bottom.x = v1[0] / v1[2];

corners[i].right_bottom.y = v1[1] / v1[2];

}

int main(int argc, char* argv)

{

//读取图像

Mat image01 = imread("01.jpg", 1);

Mat image02 = imread("02.jpg", 1);

Mat image03 = imread("03.jpg", 1);

Mat image04 = imread("04.jpg", 1);

if (image01.empty() || image02.empty()||image03.empty()||image04.empty())

{

cout << "Can't read image" << endl;

return -1;

}

//缩减图像大小并灰度化

Mat img1, img2,img3,img4,img1_gray,img2_gray,img3_gray,img4_gray;

resize(image01, img1, Size(image01.rows * 0.5, image01.cols * 0.5));

resize(image02, img2, Size(image02.rows * 0.5, image02.cols * 0.5));

resize(image03, img3, Size(image03.rows * 0.5, image03.cols * 0.5));

resize(image04, img4, Size(image04.rows * 0.5, image04.cols * 0.5));

cvtColor(img1, img1_gray, CV_BGR2GRAY);

cvtColor(img2, img2_gray, CV_BGR2GRAY);

cvtColor(img3, img3_gray, CV_BGR2GRAY);

cvtColor(img4, img4_gray, CV_BGR2GRAY);

vector<KeyPoint>keyPoints1;

vector<KeyPoint>keyPoints2;

vector<KeyPoint>keyPoints3;

vector<KeyPoint>keyPoints4;

int numFeatures = 800;

Ptr <cv::SIFT> sift = sift->create(numFeatures);;

sift->detect(img1_gray, keyPoints1);

sift->detect(img2_gray, keyPoints2);

sift->detect(img3_gray, keyPoints3);

sift->detect(img4_gray, keyPoints4);

//提取特征点

Mat outimg1,outimg2,outimg3,outimg4;

drawKeypoints(img1, keyPoints1, outimg1);

imshow("image01 keypoints", outimg1);

drawKeypoints(img2, keyPoints2, outimg2);

imshow("image02 keypoints", outimg2);

drawKeypoints(img1, keyPoints1, outimg3);

imshow("image03 keypoints", outimg3);

drawKeypoints(img2, keyPoints2, outimg4);

imshow("image04 keypoints", outimg4);

//提取特征描述子

Mat imageDesc1, imageDesc2, imageDesc3, imageDesc4;

cv::Ptr<SiftDescriptorExtractor>extractor = SiftDescriptorExtractor::create(1800);

extractor->compute(img1_gray, keyPoints1, imageDesc1);

extractor->compute(img2_gray, keyPoints2, imageDesc2);

extractor->compute(img3_gray, keyPoints3, imageDesc3);

extractor->compute(img4_gray, keyPoints4, imageDesc4);

//特征点匹配

BFMatcher matcher;

vector<DMatch> matches12,matches23,matches34;

matcher.match(imageDesc1, imageDesc2,matches12);

matcher.match(imageDesc2, imageDesc3, matches23);

matcher.match(imageDesc3, imageDesc4, matches34);

/* Mat image_match2;

drawMatches(img1, keyPoints1, img2, keyPoints2, matches, image_match2);

imshow("没有经过Rancsanc处理后图片", image_match2);

imwrite("E:/A/没有经过Rancsanc处理后图片.jpg", image_match2);*/

/* FlannBasedMatcher matcher1;

vector< DMatch > matches1;

matcher1.match(imageDesc1, imageDesc2, matches1);*/

//去除匹配误差比较大的点

vector<DMatch> good_matches12, good_matches23, good_matches34;

goodMatches(matches12, imageDesc1, good_matches12, 5);

goodMatches(matches23, imageDesc2, good_matches23, 6);

goodMatches(matches34, imageDesc3, good_matches34,5);

Mat image_match12;

drawMatches(img1, keyPoints1, img2, keyPoints2, good_matches12, image_match12);

imshow("match12后图片", image_match12);

imwrite("E:/A/image_match12.jpg", image_match12);

Mat image_match23;

drawMatches(img2, keyPoints2, img3, keyPoints3, good_matches23, image_match23);

imshow("match23", image_match23);

imwrite("E:/A/image_match23.jpg", image_match23);

Mat image_match34;

drawMatches(img3, keyPoints3, img4, keyPoints4, good_matches34, image_match34);

imshow("match43", image_match34);

imwrite("E:/A/image_match34.jpg", image_match34);

/*sort(inlinerMatch12.begin(), inlinerMatch12.end());*/

Mat image_match3;

vector<DMatch> inlinerMatch12, inlinerMatch23, inlinerMatch34;

fitLineRansac(keyPoints1, keyPoints2, good_matches12, inlinerMatch12, 3000, 10);

fitLineRansac(keyPoints2, keyPoints3, good_matches23, inlinerMatch23, 3000, 10);

fitLineRansac(keyPoints3, keyPoints4, good_matches34, inlinerMatch34, 3000, 10);

/* Mat image_linermatch12;

drawMatches(img1, keyPoints1, img2, keyPoints2, inlinerMatch12, image_linermatch12);

imshow("linermatch12", image_linermatch12);

imwrite("E:/A/image_matchliner12.jpg", image_linermatch12);

Mat image_linermatch23;

drawMatches(img2, keyPoints2, img3, keyPoints3, inlinerMatch23, image_linermatch23);

imshow("linermatch23", image_linermatch23);

imwrite("E:/A/image_matchliner23.jpg", image_linermatch23);

Mat image_linermatch34;

drawMatches(img3, keyPoints3, img4, keyPoints4, inlinerMatch34, image_linermatch34);

imshow("linermatch34", image_linermatch34);

imwrite("E:/A/image_linermatch34.jpg", image_linermatch34);*/

/*int a12_1=img1.at<cv::Vec3b>(keyPoints1[inlinerMatch12[1].queryIdx].pt.x, keyPoints1[inlinerMatch12[1].queryIdx].pt.y)[0] / img2.at<cv::Vec3b>(keyPoints2[inlinerMatch12[1].trainIdx].pt.x, keyPoints2[inlinerMatch12[1].trainIdx].pt.y)[0];

int a12_2 = img1.at<cv::Vec3b>(keyPoints1[inlinerMatch12[1].queryIdx].pt.x, keyPoints1[inlinerMatch12[1].queryIdx].pt.y)[1] / img2.at<cv::Vec3b>(keyPoints2[inlinerMatch12[1].trainIdx].pt.x, keyPoints2[inlinerMatch12[1].trainIdx].pt.y)[1];

int a12_3 = img1.at<cv::Vec3b>(keyPoints1[inlinerMatch12[1].queryIdx].pt.x, keyPoints1[inlinerMatch12[1].queryIdx].pt.y)[2] / img2.at<cv::Vec3b>(keyPoints2[inlinerMatch12[1].trainIdx].pt.x, keyPoints2[inlinerMatch12[1].trainIdx].pt.y)[2];

int b23_1= img2.at<cv::Vec3b>(keyPoints1[inlinerMatch23[1].queryIdx].pt.x, keyPoints1[inlinerMatch23[1].queryIdx].pt.y)[0] / img3.at<cv::Vec3b>(keyPoints2[inlinerMatch23[1].trainIdx].pt.x, keyPoints2[inlinerMatch23[1].trainIdx].pt.y)[0];

int b23_2 = img2.at<cv::Vec3b>(keyPoints1[inlinerMatch23[1].queryIdx].pt.x, keyPoints1[inlinerMatch23[1].queryIdx].pt.y)[1] / img3.at<cv::Vec3b>(keyPoints2[inlinerMatch23[1].trainIdx].pt.x, keyPoints2[inlinerMatch23[1].trainIdx].pt.y)[1];

int b23_3 = img2.at<cv::Vec3b>(keyPoints1[inlinerMatch23[1].queryIdx].pt.x, keyPoints1[inlinerMatch23[1].queryIdx].pt.y)[2] / img3.at<cv::Vec3b>(keyPoints2[inlinerMatch23[1].trainIdx].pt.x, keyPoints2[inlinerMatch23[1].trainIdx].pt.y)[2];

int c34_1= img3.at<cv::Vec3b>(keyPoints1[inlinerMatch23[1].queryIdx].pt.x, keyPoints1[inlinerMatch23[1].queryIdx].pt.y)[0] / img4.at<cv::Vec3b>(keyPoints2[inlinerMatch23[1].trainIdx].pt.x, keyPoints2[inlinerMatch23[1].trainIdx].pt.y)[0];

int c34_2 = img3.at<cv::Vec3b>(keyPoints1[inlinerMatch23[1].queryIdx].pt.x, keyPoints1[inlinerMatch23[1].queryIdx].pt.y)[1] / img4.at<cv::Vec3b>(keyPoints2[inlinerMatch23[1].trainIdx].pt.x, keyPoints2[inlinerMatch23[1].trainIdx].pt.y)[1];

int c34_3 = img3.at<cv::Vec3b>(keyPoints1[inlinerMatch23[1].queryIdx].pt.x, keyPoints1[inlinerMatch23[1].queryIdx].pt.y)[2] / img4.at<cv::Vec3b>(keyPoints2[inlinerMatch23[1].trainIdx].pt.x, keyPoints2[inlinerMatch23[1].trainIdx].pt.y)[2];*/

Mat H12, H23, H34;

FITSVD(keyPoints1, keyPoints2, inlinerMatch12, H12);

FITSVD(keyPoints2, keyPoints3, inlinerMatch23, H23);

FITSVD(keyPoints3, keyPoints4, inlinerMatch34, H34);

//没有平移时图一变换后左角点的位置

CalcCorners(H12*H23, img1, 0);

Mat adjustMat = (Mat_<double>(3, 3) << 1.0, 0,MIN(abs(corners[0].left_top.x), abs(corners[0].left_bottom.x)), 0, 1.0, corners[0].left_top.x, 0, 0, 1.0);

Mat adjustHomo13= adjustMat * H23 * H12;

Mat adjustHomo23 = adjustMat * H23;

Mat adjustHomo43 = adjustMat*H34.inv();

CalcCorners(adjustHomo13, img1,1);

CalcCorners(adjustHomo23, img2,2);

CalcCorners(adjustMat, img3, 3);

CalcCorners(adjustHomo43, img4,4);

// cout << "left_top:" << corners.left_top << endl;

// cout << "left_bottom:" << corners.left_bottom << endl;

// cout << "right_top:" << corners.right_top << endl;

// cout << "right_bottom:" << corners.right_bottom << endl;

Mat imageTransform1, imageTransform2, imageTransform3, imageTransform4;

float trans_bottom = MIN(corners[1].right_bottom.y, corners[1].left_bottom.y, corners[2].right_bottom.y, corners[2].left_bottom.y, corners[3].right_bottom.y, corners[3].left_bottom.y, corners[4].right_bottom.y, corners[4].left_bottom.y);

warpPerspective(img1, imageTransform1, adjustHomo13, Size(MIN(corners[1].right_top.x, corners[1].right_bottom.x), trans_bottom));

warpPerspective(img3, imageTransform3, adjustMat, Size(MIN(corners[3].right_top.x, corners[3].right_bottom.x), trans_bottom));

warpPerspective(img2, imageTransform2, adjustHomo23, Size(MIN(corners[2].right_top.x, corners[2].right_bottom.x),trans_bottom));

warpPerspective(img4, imageTransform4, adjustHomo43, Size(MIN(corners[4].right_top.x, corners[4].right_bottom.x),trans_bottom));

imshow("trans1", imageTransform1);

imshow("trans2", imageTransform2);

imwrite("E:/A/trans2.jpg", imageTransform2);

imwrite("E:/A/trans1.jpg", imageTransform1);

imshow("trans3", imageTransform3);

imwrite("E:/A/trans3.jpg", imageTransform3);

imshow("trans4", imageTransform4);

imwrite("E:/A/trans4.jpg", imageTransform4);

Mat imageTransform12(Size(imageTransform2.cols, img2.rows), CV_8UC3);

imageTransform2.copyTo(imageTransform12(Rect(0, 0, imageTransform2.cols, imageTransform2.rows)));

imageTransform1.copyTo(imageTransform12(Rect(0, 0, imageTransform1.cols, imageTransform1.rows)));

Mat imageTransform123(Size(imageTransform3.cols, img2.rows), CV_8UC3);

imageTransform3.copyTo(imageTransform123(Rect(0, 0, imageTransform3.cols, imageTransform3.rows)));

imageTransform12.copyTo(imageTransform123(Rect(0, 0,imageTransform12.cols, imageTransform12.rows)));

Mat imageTransform1234(Size(imageTransform4.cols, img2.rows), CV_8UC3);

imageTransform4.copyTo(imageTransform1234(Rect(0, 0, imageTransform4.cols, imageTransform4.rows)));

imageTransform123.copyTo(imageTransform1234(Rect(0, 0, imageTransform123.cols, imageTransform123.rows)));

imshow("trans1234", imageTransform1234);

imwrite("E:/A/trans121234.jpg", imageTransform1234);

waitKey(0);

return 0;

}

//去除误差比较大的点

void goodMatches(vector<DMatch>& matches, Mat& imageDesc1, vector< DMatch >& good_matches, int n)

{

double max_dist = 0; double min_dist = 100;//最小距离和最大距离

for (int i = 0; i < imageDesc1.rows; i++)

{

double dist = matches[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

//【7】存下匹配距离小于n*min_dist的点对

for (int i = 0; i < matches.size(); i++)

{

if (matches[i].distance < n* min_dist)

{

good_matches.push_back(matches[i]);

}

}

}

//Ransanc

void fitLineRansac( vector<KeyPoint>& keyPoints1,vector<KeyPoint>& keyPoints2, vector<DMatch>&matches,vector<DMatch>&inlierMatch,int iterations ,double sigma)

{

unsigned int n = matches.size();

if (n < 4)//如果点数小于4则不能实现拟合,四对点才能确定一H矩阵

{

fprintf(stderr, "Warning: too few points in Matches(), %s line %d\n", __FILE__, __LINE__);

}

double bestScore = -1.;

cv::RNG rng;

for (int k = 0; k < iterations; k++)

{

int i1 = 0, i2 = 0, i3 = 0, i4 = 0;

i1 =rand()% matches.size();

i2 = rand() % matches.size();

i3 = rand() % matches.size();

i4 = rand() % matches.size();

double score = 0;

vector<Point2f> imagePoints12_1, imagePoints12_2;

imagePoints12_1.push_back(keyPoints1[matches[i1].queryIdx].pt);

imagePoints12_1.push_back(keyPoints1[matches[i2].queryIdx].pt);

imagePoints12_1.push_back(keyPoints1[matches[i3].queryIdx].pt);

imagePoints12_1.push_back(keyPoints1[matches[i4].queryIdx].pt);

imagePoints12_2.push_back((keyPoints2[matches[i1].trainIdx].pt));

imagePoints12_2.push_back((keyPoints2[matches[i2].trainIdx].pt));

imagePoints12_2.push_back((keyPoints2[matches[i3].trainIdx].pt));

imagePoints12_2.push_back((keyPoints2[matches[i4].trainIdx].pt));

Mat homo1to2 = findHomography(imagePoints12_1, imagePoints12_2);

vector<DMatch> inlierMatch12;

for (int i = 0; i < n; i++)

{

Mat kp2 = Mat::zeros(3, 1, CV_64FC1);

Mat kp1 = (Mat_<double>(3, 1) << keyPoints1[matches[i].queryIdx].pt.x, keyPoints1[matches[i].queryIdx].pt.y, 1);

kp2 = homo1to2 * kp1;

float x = 0, x1 = 0, x2 = 0, y = 0, y1 = 0, y2 = 0, d;

x1 = kp2.at<double>(0, 0) / kp2.at<double>(2, 0);

y1 = kp2.at<double>(1, 0) / kp2.at<double>(2, 0);

x2 = keyPoints2[matches[i].trainIdx].pt.x;

y2= keyPoints2[matches[i].trainIdx].pt.y;

x = fabs(x2 - x1);

y = fabs(y2 - y1);

d = sqrt(x * x + y * y );

if (d < sigma)

{

score += 1;

inlierMatch12.push_back(matches[i]);

}

}

if (score > bestScore)

{

vector<DMatch>().swap(inlierMatch);

inlierMatch = inlierMatch12;

bestScore = score;

}

else {

vector<DMatch>().swap(inlierMatch12);

}

}

}

//SVD分解求H矩阵

void FITSVD(vector<KeyPoint>& keyPoints1,vector<KeyPoint>& keyPoints2, vector<DMatch>&matches, Mat&H )

{

int n = matches.size();

if (n < 4)

{

fprintf(stderr, "Warning: too few points in lsq_homog(), %s line %d\n", __FILE__, __LINE__);

return ;

}

Mat A = Mat::zeros(2 * n, 8, CV_64FC1);//2*n行,8列

Mat B = Mat::zeros(2 * n, 1, CV_64FC1);

for (int i = 0; i < n; i++)

{

A.at<double>(i, 0) = keyPoints1[matches[i].queryIdx].pt.x;

A.at<double>(i + n, 3) = keyPoints1[matches[i].queryIdx].pt.x;

A.at<double>(i, 1) = keyPoints1[matches[i].queryIdx].pt.y;

A.at<double>(i + n, 4) = keyPoints1[matches[i].queryIdx].pt.y;

A.at<double>(i, 2) = 1.0;

A.at<double>(i + n, 5) = 1.0;

A.at<double>(i, 6) = -keyPoints1[matches[i].queryIdx].pt.x * keyPoints2[matches[i].trainIdx].pt.x;

A.at<double>(i, 7) = -keyPoints1[matches[i].queryIdx].pt.y * keyPoints2[matches[i].trainIdx].pt.x;

A.at<double>(i + n, 6) = -keyPoints1[matches[i].queryIdx].pt.x * keyPoints2[matches[i].trainIdx].pt.y;

A.at<double>(i + n, 7) = -keyPoints1[matches[i].queryIdx].pt.y * keyPoints2[matches[i].trainIdx].pt.y;

B.at<double>(i, 0) = keyPoints2[matches[i].trainIdx].pt.x;

B.at<double>(i + n, 0) = keyPoints2[matches[i].trainIdx].pt.y;

}

Mat S = Mat::zeros(8, 1, CV_64FC1);

Mat W = Mat::zeros(8, 2 * n, CV_64FC1);

Mat VT = Mat::zeros(8, 8, CV_64FC1);

Mat U = Mat::zeros(2 * n, 2 * n, CV_64FC1);

Mat X = Mat::zeros(8, 1, CV_64FC1);

H = Mat::zeros(3, 3, CV_64FC1);

SVD thissvd;

thissvd.compute(A, S, U, VT, SVD::FULL_UV);

for (int j = 0; j < 8; j++)

W.at<double>(j, j) = 1 / S.at<double>(j, 0);

X = VT.t() * W * U.t() * B;

H.at<double>(0, 0) = X.at<double>(0, 0);

H.at<double>(0, 1) = X.at<double>(1, 0);

H.at<double>(0, 2) = X.at<double>(2, 0);

H.at<double>(1, 0) = X.at<double>(3, 0);

H.at<double>(1, 1) = X.at<double>(4, 0);

H.at<double>(1, 2) = X.at<double>(5, 0);

H.at<double>(2, 0) = X.at<double>(6, 0);

H.at<double>(2, 1) = X.at<double>(7, 0);

H.at<double>(2, 2) = 1;

}

2569

2569

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?