论文解读与个人理解(欢迎小伙伴们指正):Spatial-Temporal Multi-Cue Network for Continuous Sign Language Recognition

-

十分感谢小伙伴的鼓励,我会持续更新此论文后续的补充内容。

-

非常感谢此论文的一作,给了我一些很 nice 的建议。作者的实验室因为合作方的要求不允许公开代码,所以很遗憾要复现此论文有难度。虽然作者分享了论文中模型的搭建代码,但是作者说:“从过去一些人经验来看,基于这个复现我的结果是有一定困难的,主要难点在于如何得到迭代的伪标签。”同时一作大佬推了 3 个 GitHub 上的代码,可能有帮助。还建议尝试复现ECCV2020上的那篇 fully convolutional network 做手语识别的代码,可以只实现主体部分,基于这一部分也是可以达到目前sota的性能。后续我会复现大佬建议的部分。再次感谢大佬的建议,指出了一个方向。🙏🙏🙏代码、论文链接在文末

-

(2020-连续-RGB-手形-头-关节/RGB-CSL/PHOENIX2014/PHOENIX204T)

| 年份 | 识别类型 | 输入数据类型 | 手动特征 | 非手动特征 | Fullframe | 数据集 | 识别对象 |

|---|---|---|---|---|---|---|---|

| 2020 | 连续语句 | RGB | Shape(手形) | Head(头) | Bodyjoints(身体关节)、RGB | Phoenix14、Phoenix14-T、CSL | DGS(德语)、CSL(汉语) |

Abstract

Despite the recent success of deep learning in continuous sign language recognition (CSLR), deep models typically focus on the most discriminative features, ignoring other potentially non-trivial and informative contents. Such characteristic heavily constrains their capability to learn implicit visual grammars behind the collaboration of different visual cues (i,e., hand shape, facial expression and body posture). By injecting multi-cue learning into neural network design, we propose a spatial-temporal multi-cue (STMC) network to solve the vision-based sequence learning problem. Our STMC network consists of a spatial multi-cue (SMC) module and a temporal multi-cue (TMC) module. The SMC module is dedicated to spatial representation and explicitly decomposes visual features of different cues with the aid of a self-contained pose estimation branch. The TMC module models temporal correlations along two parallel paths, i.e., intra-cue and inter-cue, which aims to preserve the uniqueness and explore the collaboration of multiple cues. Finally, we design a joint optimization strategy to achieve the end-to-end sequence learning of the STMC network. To validate the effectiveness, we perform experiments on three large-scale CSLR benchmarks: PHOENIX-2014, CSL and PHOENIX-2014-T. Experimental results demonstrate that the proposed method achieves new state-of-the-art performance on all three benchmarks.

尽管深度学习最近在连续手语识别(CSLR)方面取得了成功,但深度模型通常专注于最具区别性的特征,而忽略了其他潜在的非琐碎和信息内容。这种特征严重限制了他们在不同视觉线索协同作用下学习内隐视觉语法的能力。(比如手的形状、面部表情和身体姿势)。通过在神经网络设计中注入多线索学习,我们提出了一种 spatial-temporal multi-cue (STMC) 网络来解决基于视觉的 sequence 学习问题。STMC网络由 spatial multi-cue (SMC) 模块和 temporal multi-cue (TMC) 模块组成。SMC 模块致力于 spatial representation,并借助 self-contained pose estimation branch 明确分解不同 cues 的视觉特征。TMC模块沿两条平行 paths ( intra-cue 和 inter-cue)建立时间相关性模型,旨在保持线索的独特性,并探讨多种线索之间的协作关系。最后,我们设计了一个联合优化策略来实现STMC网络的端到端的序列学习。为了验证该方法的有效性,在PHOENIX-2014、CSL和PHOENIX-2014-T三种大规模CSLR基准上进行了实验。实验结果表明,该方法在这三个数据集上都达到了目前最高的性能水平。

| 研究对象 | 手型、面部表情、身体姿势 |

|---|---|

| 研究方法 | spatial-temporal multi-cue (STMC) 网络(由 spatial multi-cue (SMC) 模块和temporal multi-cue (TMC) 模块组成) |

| STMC网络 | spatial-temporal multi-cue 时空多线索,将多线索学习和神经网络相结合 |

| SMC模块 | Spatial Multi-Cue 空间多线索,用于空间表示,并借助一个独立的姿势估计模块来分解不同线索的视觉特征 |

| TMC模块 | Temporal Multi-Cue 时间多线索,沿两个平行路径对时间相关性进行建模 |

| STMC端到端学习 | 联合优化策略 |

| 数据集 | PHOENIX-2014 、PHOENIX- 2014-T 、CSL |

| 数据集 | 验证集(Dev)WER(Word Error Rate) | 测试集(Test) WER(越低越好) |

|---|---|---|

| PHOENIX-2014 | 21.1 | 20.7 |

| PHOENIX- 2014-T | 19.6 | 21.0 |

| 数据集 | Split I | Split II |

|---|---|---|

| CSL | 2.1 | 28.6 |

在CSL上的评估标准和其他两个数据集不一样,猜测:可能在CSL上的效果不理想,就换了评价标准。需要在源码上验证。

训练集、验证集(dev)和测试集

- 在模型训练的时候通常将我们所得的数据分成3部分:训练集、dev验证集和测试集

- dev用来统计的那一评估指标、调节参数,选择算法;而test用来在最后整体评估模型性能

- dev和训练集一起被输入到模型算法中,但又不参与模型训练,可以一边训练一边根据dev查看指标

- dev和测试集都是用来评估模型好坏,但dev只能用来统计单一评估指标;而测试集能够提供更多的评估模型指标,如混淆矩阵、roc、召回率、F1 Score等

- dev可以用来快速评估指标的,并及时做出参数调整,但不全面;而测试集能提供一个模型的完整评估报告,能更好的从多个角度评价模型的性能,缺点是比较费时,一般在dev把参数调整差不多后,才会用到测试集

- dev和测试集要保持同一分布

- 大数据时代以前,通常将数据按照8:1:1划分数据集,大数据时代(百万数量级),通常可以将数据按照98:1:1的比例划分

————————————————

原文链接:https://blog.csdn.net/weixin_43821376/article/details/103777454

1 Introduction

Sign language is the primary language of the deaf community. To facilitate the daily communication between the deaf-mute and the hearing people, it is significant to develop sign language recognition (SLR) techniques. Recently, SLR has gained considerable attention for its abundant visual information and systematic grammar rules (Cui, Liu, and Zhang 2017; Huang et al. 2018; Koller et al. 2019; Pu, Zhou, and Li 2019; Li et al. 2019). In this paper, we concentrate on continuous SLR (CSLR), which aims to translate a series of signs to the corresponding sign gloss sentence.

手语是聋人群体的主要语言。为了方便聋哑人与听障人的日常交流,发展手语识别技术具有重要意义。近年来,手语识别因其丰富的视觉信息和系统的语法规则而受到广泛关注(Cui, Liu, and Zhang 2017;黄等2018;Koller等人2019;普,周,李2019;Li et al. 2019)。本文主要研究连续手语识别(CSLR),它的目的是将一系列的手语翻译成相应的口语。

- CSLR:continuous sign language recognition 连续手语识别

Sign language mainly relies on, but not limits to, hand gestures. To effectively and accurately express the desired idea, sign language simultaneously leverages both manual elements from hands and non-manual elements from the face and upper-body posture (Koller, Forster, and Ney 2015). To be specific, manual elements include the shape, position, orientation and movement of both hands, while non-manual elements include the eye gaze, mouth shape, facial expression and body pose. Human visual perception allows us to process and analyze these simultaneous yet complex information without much effort. However, with no expert knowledge, it is difficult for a deep neural network to discover the implicit collaboration of multiple visual cues automatically. Especially for CSLR, the transitions between sign glosses may come with temporal variations and switches of different cues.

手语主要依靠手势但不仅仅只依靠手势。为了准确有效地表达思想,手语同时利用了手部的手动特征和面部与上半身姿势的非手动特征(Koller, Forster, and Ney 2015)。具体来说,手动特征包括双手的形状、位置、方向和运动,非手动元素包括眼睛注视、嘴型、面部表情和身体姿势。人类的视觉感知使我们能够轻而易举地同时处理和分析这些复杂的信息。然而,在缺乏专业知识的情况下,深度神经网络很难自动发现多种视觉线索的隐式协作。尤其对于 CSLR 来说,sign glosses 的先后顺序和不同部位的相互切换深深影响着手语翻译的效果。

- Sign glosses:手势光泽???

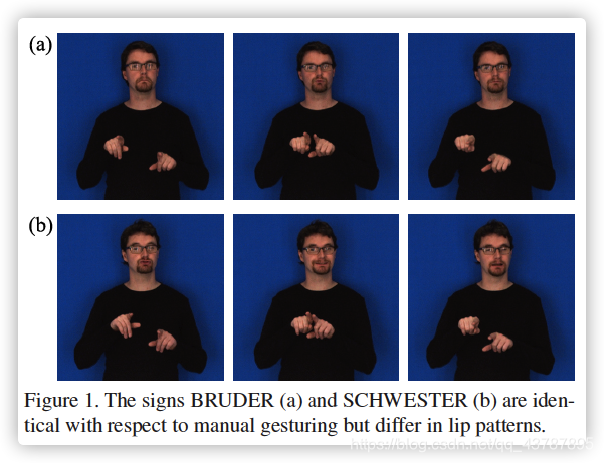

阐明CSRL的难度:我可以借鉴"The Significance of Facial Features For Automatic Sign Language Recognition”中用图片的方式来说明

To explore multi-cue information, some methods rely on external tools. For example, an off-the-shelf detector is utilized for hand detection, together with a tracker to cope with shape variation and occlusion (Cihan Camgoz et al. 2017; Huang et al. 2018). Some methods adopt multi-stream networks with inferred labels (i.e., mouth shape labels, hand shape labels) to guide each stream to focus on individual visual cue (Koller et al. 2019). Despite their improvement, they mostly suffer two limitations: First, external tools impede the end-to-end learning on the differentiable structure of neural networks. Second, off-the-shelf tools and multi-stream networks bring repetitive feature extraction of the same region, incurring expensive computational overhead for such a video-based translation task.

为了探索多线索信息,一些依赖于外部工具的方法。例如,一种现成的检测器被用于手部检测,同时还有一个跟踪器来处理形状变化和遮挡(Cihan Camgoz et al. 2017;黄等,2018)。一些方法采用带有推测标签的多流网络(如口形标签、手形标签)引导每个流关注手语视觉线索(Koller et al. 2019)。尽管有所改进,但它们大多存在两个局限性:一是外部工具阻碍了对神经网络可微结构的端到端学习。其次,现成的工具和多流网络带来了同一区域的重复特征提取,提高了计算开销。

举其他方法的例子:要最近几年的研究,不可是太久以前的研究,没说服力

| 年份 | 论文 | 方法 | 缺点 |

|---|---|---|---|

| 2017 | Cihan Camgoz, N.; Hadfield, S.; Koller, O.; and Bowden, R. 2017.Subunets: end-to-end hand shape and continuous sign language recognition. In ICCV | 现成的检测器被用于手部检测 | 外部工具阻碍了对神经网络可微结构的端到端学习 |

| 2018 | Cihan Camgoz, N.; Hadfield, S.; Koller, O.; Ney, H.; and Bowden,R. 2018. Neural sign language translation. In CVPR. | 跟踪器来处理形状变化和遮挡 | 外部工具阻碍了对神经网络可微结构的端到端学习 |

| 2019 | Koller, O.; Camgoz, C.; Ney, H.; and Bowden, R. 2019. Weakly supervised learning with multi-stream cnn-lstm-hmms to discover sequential parallelism in sign language videos. TPAMI. | 用带有推测标签的多流网络(如口形标签、手形标签)引导每个流关注手语视觉线索 | 多流网络带来了同一区域的重复特征提取,提高了计算开销。 |

To temporally exploit multi-cue features, an intuitive idea is to concatenate features and feed them into a temporal fusion module. In action recognition, two-stream fusion shows significant performance improvement by fusing temporal features of RGB and optical flow (Simonyan and Zisserman 2014; Feichtenhofer, Pinz, and Zisserman 2016). Nevertheless, the aforementioned fusion approaches are based on two counterpart features in terms of the representation capability. But when it turns to multiple diverse cues with unequal feature importance, how to fully exploit the synergy between strong features and weak features still leaves a challenge. Moreover, for deep learning based methods, neural networks tend to merely focus on strong features for quick convergence, potentially omitting other informative cues, which limits the further performance improvement.

为了利用短暂的多线索特征,一种直观的想法是将特征连接起来,并将它们输入一个时间融合模块。在动作识别方面,通过融合RGB和 optical flow 的时间特征,双流融合显著提高了性能(Simonyan and Zisserman 2014;Feichtenhofer, Pinz和Zisserman 2016)。然而,上述的融合方法在表示能力方面是基于两个对等的特征。但当它转向多个不同的特征重要性不等的线索时,如何充分利用强特征和弱特征之间的协同作用仍然是一个挑战。此外,对于基于深度学习的方法,神经网络往往只关注强特征以快速收敛,可能忽略其他信息线索,这限制了进一步的性能改进。

- optical flow:光流???

同一时间段里多个部位如何协同问题:比如中国手语中的“聚餐”,在一个短暂的时间段里要识别 双手,头,嘴。

| 问题 | 年份 | 论文 | 方法 | 优点 | 缺点 |

|---|---|---|---|---|---|

| 同一时间段里多个部位如何协同 | 2008 | The Significance of Facial Features for Automatic Sign Language Recognition | 将手动特征和面部特征合并到一个特征向量 z t = [ x t , y t ] \boldsymbol{z}_{t}=\left[\boldsymbol{x}_{t}, \boldsymbol{y}_{t}\right] zt=[xt,yt] | 暂不知,猜:用此方法来构建特征,再用深度学习来训练会不会有效果 | 猜:需要把手动特征和面部特征用公式表示,计算难,难以实现 |

| 2014 | Simonyan, K., and Zisserman, A. 2014. Two-stream convolutional networks for action recognition in videos. In NeurIPS. | 将特征连接起来,通过融合RGB和 optical flow 的时间特征 | 性能显著提高 | 此融合方法在表达能力方面是基于两个对等的特征,但当它转向多个不同的特征重要性不等的线索时,强特征和弱特征之间的协同作用仍然是一个挑战 | |

| 同上 | 2016 | Feichtenhofer, C.; Pinz, A.; and Zisserman, A. 2016. Convolutional two-stream network fusion for video action recognition. In CVPR. | 同上 | 同上 | 同上 |

基于深度学习的方法,神经网络往往只关注强特征以快速收敛,可能忽略其他信息线索,这限制了进一步的性能改进。

To address the above difficulties, we propose a novel spatial-temporal multi-cue (STMC) framework. In the SMC module, we add two extra deconvolutional layers (Zeiler et al. 2010; Xiao, Wu, and Wei 2018) for pose estimation on the top layer of our backbone. A soft-argmax trick (Chapelle and Wu 2010) is utilized to regress the positions of keypoints and make it differentiable for subsequent operations in the temporal part. The spatial representations of other cues are acquired by the reuse of feature maps from the middle layer. Based on the learned spatial representations, we decompose the temporal modelling part into the intra-cue path and inter-cue path in the TMC module. The inter-cue path fuses the temporal correlations between different cues with temporal convolutional (TCOV) layers. The intra-cue path models the internal temporal dependency of each cue and feeds them to the inter-cue path at different time scales. To fully exploit the potential of STMC network, we design a joint optimization strategy with connectionist temporal classification (CTC) (Graves et al. 2006) and keypoint regression, making the whole structure end-to-end trainable.

针对上述问题,我们提出了一种新的时空多线索(STMC)框架。在 SMC 模块中,我们增加了两个额外的反卷积层(Zeiler et al. 2010;Xiao, Wu, and Wei 2018),用于框架顶层的姿态估计。利用一种 soft-argmax 技巧(Chapelle and Wu 2010)对关键点的位置进行回归,使其在短暂的时间内可微。通过对中间层特征图的重复使用,获得其他线索的空间表示。基于学习到的空间表示,我们在 TMC 模块中将时间建模部分分解为线索内路径和线索间路径。线索间路径利用时间卷积(TCOV)层融合不同线索之间的时间相关性。线索内路径对每个线索的内部时间依赖性进行建模,并以不同的时间尺度将它们提供给线索间路径。为了充分挖掘 STMC 网络的潜力,我们设计了一个结合 connectionist temporal classification (CTC) (Graves et al. 2006)和关键点回归的联合优化策略,使整个结构端到端可训练。

| 问题 | 年份 | 论文 | 方法 |

|---|---|---|---|

| 框架顶层的姿态估计 | 2010 | 在 SMC 模块中加两个反卷积层 | Zeiler, M. D.; Krishnan, D.; Taylor, G. W.; and Fergus, R. 2010. Deconvolutional networks. In CVPR |

| 同上 | 2018 | Xiao, B.; Wu, H.; and Wei, Y. 2018. Simple baselines for human pose estimation and tracking. In ECCV. | 同上 |

| 对关键点的位置进行回归,使其在短暂的时间内可微 | 2010 | Chapelle, O., and Wu, M. 2010. Gradient descent optimization of smoothed information retrieval metrics. Information Retrieval. | soft-argmax |

| 获得其他线索的空间表示 | 对中间层特征图的重复使用 | ||

| 融合不同线索之间的时间相关性 | 时间卷积层 TCOV (temporal convolutional) | ||

| 可端到端训练 | 2006 | Graves, A.; Fern´andez, S.; Gomez, F.; and Schmidhuber, J. 2006. Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks. In ICML. | 将connectionist temporal classification (CTC) 与关键点回归结合的联合优化策略 |

用以前(2012年之前)的方法或结合以前的方法来解决新问题

Our main contributions are summarized as follows:

- We design an SMC module with a self-contained pose estimation branch. It provides multi-cue features in an end-to-end fashion and maintains efficiency at the same time.

- We propose a TMC module composed of stacked TMC blocks. Each block includes intra-cue and inter-cue paths to preserve the uniqueness and explore the synergy of different cues at the same time.

- A joint optimization strategy is proposed for the end-to-end sequence learning of our STMC network.

- Through extensive experiments, we demonstrate that our STMC network surpasses previous state-of-the-art models on three publicly available CSLR benchmarks.

我们的主要贡献总结如下: - 设计了一个具有自包含姿态估计分支的SMC模块。它以端到端的方式提供多线索特性,同时保持效率。

- 我们提议一个由堆叠的TMC块组成的TMC模块。每个块包括线索内路径和线索间路径,以保持不同线索的独特性,同时探索不同线索的协同性。

- 对STMC网络的端到端序列学习提出了一种联合优化策略。

- 通过广泛的实验,我们证明了我们的STMC网络在三个公开可用的CSLR基准上超越了以前最先进的模型。

2 Related Work

In this section, we briefly review the related work on sign language recognition and multi-cue fusion.

在本节中,我们简要回顾了手语识别和多线索融合的相关工作。

A CSLR system usually consists of two parts: video representation and sequence learning. Early works utilize handcrafted features (Cooper and Bowden 2009; Buehler, Zisser-man, and Everingham 2009; Yin, Chai, and Chen 2016) for SLR. Recently, deep learning based methods have been applied to SLR for their strong representation capability. 2D convolutional neural networks (2D-CNN) and 3D convolutional neural networks (3D-CNN) (Ji et al. 2013; Qiu, Yao, and Mei 2017) are employed for modelling the appearance and motion in sign language videos. In (Cui, Liu, and Zhang 2017), Cui et al. propose to combine 2D-CNN with temporal convolutional layers for spatial-temporal representation. In (Molchanov et al. 2016; Pu, Zhou, and Li 2018; Zhou, Zhou, and Li 2019; Wei et al. 2019), 3D-CNN is adopted to learn motion features in sign language.

一个CSLR系统通常由两个部分组成:视频表示和序列学习。早期作品使用手工制作的特征(Cooper and Bowden 2009;Buehler, Zisser-man,和Everingham 2009;Yin, Chai, Chen(2016)。近年来,基于深度学习的方法因其较强的表示能力而被应用到手语识别中。2D卷积神经网络(2D-CNN)和3D卷积神经网络(3D-CNN) (Ji et al. 2013;Qiu, Yao, and Mei 2017)在手语视频中使用外观和动作作为特征的模型。在(Cui, Liu, and Zhang 2017)中,Cui等人提出将2D-CNN与时间卷积层相结合进行时空表示。在(Molchanov等人2016;Pu, Zhou, and Li 2018; Zhou, Zhou, and Li 2019; Wei et al. 2019),采用3D-CNN学习手语的动作特征。

本段首句:一个CSLR系统通常由两个部分组成:视频表示和序列学习。承接本章首段“在本节中,我们简要回顾了手语识别和多线索融合的相关工作。”然后,介绍了以往对 CSRL 系统中视频表示对研究工作。

| 年份 | 论文 | 方法 |

|---|---|---|

| 2009 | Cooper, H., and Bowden, R. 2009. Learning signs from subtitles: A weakly supervised approach to sign language recognition. In CVPR. | 使用手工制作的特征 |

| 2009 | Buehler, P.; Zisserman, A.; and Everingham, M. 2009. Learning sign language by watching tv (using weakly aligned subtitles). In CVPR. | 使用手工制作的特征 |

| 2016 | Yin, F.; Chai, X.; and Chen, X. 2016. Iterative reference driven metric learning for signer independent isolated sign language recognition. In ECCV. | 使用手工制作的特征 |

| 2013 | Ji, S.; Xu,W.; Yang, M.; and Yu, K. 2013. 3D convolutional neural networks for human action recognition. TPAMI 35(1):221–231. | 使用2D卷积神经网络(2D-CNN)和3D卷积神经网络搭建以外观和动作为特征的模型 |

| 2017 | Qiu, Z.; Yao, T.; and Mei, T. 2017. Learning spatio-temporal representation with pseudo-3d residual networks. In ICCV. | 使用2D卷积神经网络(2D-CNN)和3D卷积神经网络搭建以外观和动作为特征的模型 |

| 2017 | Cui, R.; Liu, H.; and Zhang, C. 2017. Recurrent convolutional neural networks for continuous sign language recognition by staged optimization. In CVPR. | 将2D-CNN与时间卷积层相结合进行时空表示 |

| 2016 | Molchanov, P.; Yang, X.; Gupta, S.; Kim, K.; Tyree, S.; and Kautz, J. 2016. Online detection and classification of dynamic hand gestures with recurrent 3d convolutional neural network. In CVPR. | 采用3D-CNN学习手语的动作特征 |

| 2018 | Pu, J.; Zhou, W.; and Li, H. 2018. Dilated convolutional network with iterative optimization for continuous sign language recognition. In IJCAI. | 采用3D-CNN学习手语的动作特征 |

| 2019 | Zhou, H.; Zhou, W.; and Li, H. 2019. Dynamic pseudo label decoding for continuous sign language recognition. In ICME. | 采用3D-CNN学习手语的动作特征 |

Sequence learning in CSLR is to learn the correspondence between video sequence and sign gloss sequence. Koller et al. (Koller, Ney, and Bowden 2016; Koller, Zargaran, and Ney 2017; Koller et al. 2018) propose to integrate 2D-CNNs with hidden markov models (HMM) to model the state transitions. In (Cihan Camgoz et al. 2017; Wang et al. 2018;Cui, Liu, and Zhang 2017; Cui, Liu, and Zhang 2019), connectionist temporal classification (CTC) (Graves et al. 2006) algorithm is employed as a cost function for CSLR, which is able to process unsegmented input data. In (Huang et al. 2018; Guo et al. 2018), the attention-based encoder-decoder model (Bahdanau, Cho, and Bengio 2014) is adopted to deal with CSLR in the way of neural machine translation.

CSLR中的序列学习是学习视频序列和 sign gloss 序列之间的对应关系。Koller等人(Koller, Ney和Bowden 2016;Koller, Zargaran和Ney 2017;Koller等人2018年)提出将2D-CNNs与隐马尔可夫模型(HMM)集成,以对状态转换进行建模。在 (Cihan Camgoz et al. 2017; Wang et al. 2018;Cui, Liu, and Zhang 2017; Cui, Liu, and Zhang 2019)CSLR 采用 connectionist temporal classification (CTC) (Graves et al. 2006)算法作为代价函数,能够处理未分割的输入数据。在 (Huang et al. 2018;Guo et al. 2018),中采用基于注意力机制的编码-解码器模型(Bahdanau, Cho, and Bengio 2014)的神经机器翻译方式处理CSLR。

本段首句:CSLR 中的序列学习是学习视频序列和 sign gloss 序列之间的对应关系。承接上一段首句“一个CSLR系统通常由两个部分组成:视频表示和序列学习。”然后,介绍了 以往对 CSRL 中序列学习的研究工作。

| 年份 | 论文 | 方法 |

|---|---|---|

| 2016 | Koller, O.; Zargaran, O.; Ney, H.; and Bowden, R. 2016. Deep sign: hybrid cnn-hmm for continuous sign language recognition. In BMVC. | 将2D-CNNs与隐马尔可夫模型(HMM)集成,以对状态转换进行建模 |

| 2017 | Koller, O.; Zargaran, S.; and Ney, H. 2017. Re-sign: re-aligned end-to-end sequence modelling with deep recurrent cnn-hmms. In CVPR. | 将2D-CNNs与隐马尔可夫模型(HMM)集成,以对状态转换进行建模 |

| 2018 | Koller, O.; Zargaran, S.; Ney, H.; and Bowden, R. 2018. Deep sign: enabling robust statistical continuous sign language recognition via hybrid cnn-hmms. IJCV 126(12):1311–1325. | 将2D-CNNs与隐马尔可夫模型(HMM)集成,以对状态转换进行建模 |

| 2017 | Cui, R.; Liu, H.; and Zhang, C. 2017. Recurrent convolutional neural networks for continuous sign language recognition by staged optimization. In CVPR. | 在 CSRL 中采用 connectionist temporal classification (CTC) 算法作为代价函数,能够处理未分割的输入数据。 |

| 2017 | Cihan Camgoz, N.; Hadfield, S.; Koller, O.; and Bowden, R. 2017. Subunets: end-to-end hand shape and continuous sign language recognition. In ICCV. | 在 CSRL 中采用 connectionist temporal classification (CTC) 算法作为代价函数,能够处理未分割的输入数据。 |

| 2018 | Wang, S.; Guo, D.; Zhou, W.-g.; Zha, Z.-J.; and Wang, M. 2018. Connectionist temporal fusion for sign language translation. In ACM MM. | 在 CSRL 中采用 connectionist temporal classification (CTC) 算法作为代价函数,能够处理未分割的输入数据。 |

| 2019 | Cui, R.; Liu, H.; and Zhang, C. 2019. A deep neural framework for continuous sign language recognition by iterative training. TMM 21(7):1880–1891. | 在 CSRL 中采用 connectionist temporal classification (CTC) 算法作为代价函数,能够处理未分割的输入数据。 |

| 2006 | Graves, A.; Fern´andez, S.; Gomez, F.; and Schmidhuber, J. 2006. Connectionist temporal classification: labelling unsegmented sequence data with recurrent neural networks. In ICML. | 提出connectionist temporal classification (CTC) |

| 2014 | Bahdanau, D.; Cho, K.; and Bengio, Y. 2014. Neural machine translation by jointly learning to align and translate. In ICLR. | 提出基于注意力机制的编码-解码器模型 |

| 2018 | Huang, J.; Zhou, W.; Zhang, Q.; Li, H.; and Li, W. 2018. Videobased sign language recognition without temporal segmentation. In AAAI. | 采用基于注意力机制的编码-解码器模型的神经机器翻译方式处 CSLR |

| 2018 | Guo, D.; Zhou, W.; Li, H.; and Wang, M. 2018. Hierarchical lstm for sign language translation. In AAAI. | 采用基于注意力机制的编码-解码器模型的神经机器翻译方式处 CSLR |

The multiple cues of sign language can be separated into categories of multi-modality and multi-semantic. Early works about multi-modality utilize physical sensors to collect the 3D space information, such as depth and infrared maps (Molchanov et al. 2016; Liu et al. 2017). With the development of flow estimation, Cui et al. (Cui, Liu, and Zhang 2019) explore the multi-modality fusion of RGB and optical flow and achieve state-of-the-art performance on PHOENIX-2014 database. In contrast, multi-semantic refers to human body parts with different semantics. Early works use hand-crafted features from segmented hands, tracked body-parts and trajectories for recognition (Buehler, Zisser-man, and Everingham 2009; Pfister, Charles, and Zisserman 2013; Koller, Forster, and Ney 2015). In (Cihan Camgoz et al. 2017; Huang et al. 2018), feature sequence of hand patches captured by a tracker is fused with feature sequence of full-frames for further sequence prediction. In (Koller et al. 2019), Koller et al. propose to infer weak mouth labels from spoken German annotations and weak hand labels from SL dictionaries. These weak labels are used to establish the state synchronization in HMM of different cues, including full-frame, hand shape and mouth shape. Unlike previous methods, we propose an end-to-end differentiable network for multi-cue fusion with joint optimization, which achieves excellent performance.

手语的多种线索可分为多模态和多语义两大类。关于多模态的早期工作是利用物理传感器来收集三维空间信息,如深度和红外地图(Molchanov et al. 2016; Liu et al. 2017)。随着流量估计技术的发展,Cui等(Cui, Liu, and Zhang 2019)探索了RGB光流的多模态融合,并在PHOENIX-2014数据库上实现了最先进的性能。而多语义指的是具有不同语义的人体部位。早期的研究使用了 hand-crafted features :分割手部,跟踪身体的部分肢体和轨迹识别(Buehler, Zisser-man, and Everingham 2009;Pfister, Charles和Zisserman 2013;Koller, Forster和Ney, 2015年)。在(Cihan Camgoz et al. 2017;(Huang et al. 2018),将跟踪器捕获的手部贴片特征序列与全帧特征序列融合,进一步进行序列预测。在(Koller et al. 2019)中,Koller et al.提出从德语口语注释推断弱口标签,从SL词典推断弱手标签。这些弱标签用于在HMM中建立不同线索的状态同步,包括全帧、手形和嘴形。与以往的方法不同,我们提出了一种基于联合优化的端到端可微网络用于多线索融合,取得了良好的性能。

本段首句:手语的多种线索可分为多模态和多语义两大类。承接本章首段“在本节中,我们简要回顾了手语识别和多线索融合的相关工作。”然后,介绍了以往对手语识别中多线索融合的研究工作。

| 年份 | 论文 | 方法(解决 SRL 多线索中的多模态问题) |

|---|---|---|

| 2016 | Molchanov, P.; Yang, X.; Gupta, S.; Kim, K.; Tyree, S.; and Kautz, J. 2016. Online detection and classification of dynamic hand gestures with recurrent 3d convolutional neural network. In CVPR. | 利用物理传感器来收集三维空间信息,比如:depth and infrared maps |

| 2017 | Liu, Z.; Chai, X.; Liu, Z.; and Chen, X. 2017. Continuous gesture recognition with hand-oriented spatiotemporal feature. In ICCV. | 利用物理传感器来收集三维空间信息,比如:depth and infrared maps |

| 2019 | Cui, R.; Liu, H.; and Zhang, C. 2019. A deep neural framework for continuous sign language recognition by iterative training. TMM 21(7):1880–1891. | 探索了RGB光流的多模态融合,并在PHOENIX-2014数据库上实现了最先进的性能 |

| 年份 | 论文 | 方法(解决 SRL 多线索中的多语义问题) |

|---|---|---|

| 2009 | Buehler, P.; Zisserman, A.; and Everingham, M. 2009. Learning sign language by watching tv (using weakly aligned subtitles). In CVPR. | 使用了 hand-crafted features :分割手部,跟踪身体的部分肢体和轨迹识别 |

| 2013 | Pfister, T.; Charles, J.; and Zisserman, A. 2013. Large-scale learning of sign language by watching tv (using co-occurrences). In BMVC. | 使用了 hand-crafted features :分割手部,跟踪身体的部分肢体和轨迹识别 |

| 2015 | Koller, O.; Forster, J.; and Ney, H. 2015. Continuous sign language recognition: Towards large vocabulary statistical recognition systems handling multiple signers. CVIU 141:108–125. | 使用了 hand-crafted features :分割手部,跟踪身体的部分肢体和轨迹识别 |

| 2017 | Cihan Camgoz, N.; Hadfield, S.; Koller, O.; and Bowden, R. 2017. Subunets: end-to-end hand shape and continuous sign language recognition. In ICCV. | 将跟踪器捕获的手部贴片特征序列与全帧特征序列融合,进一步进行序列预测 |

| 2018 | Huang, J.; Zhou, W.; Zhang, Q.; Li, H.; and Li, W. 2018. Videobased sign language recognition without temporal segmentation. In AAAI. | 将跟踪器捕获的手部贴片特征序列与全帧特征序列融合,进一步进行序列预测 |

| 2019 | Koller, O.; Camgoz, C.; Ney, H.; and Bowden, R. 2019. Weakly supervised learning with multi-stream cnn-lstm-hmms to discover sequential parallelism in sign language videos. TPAMI. | 从德语口语注释推断弱口标签,从SL词典推断弱手标签,这些弱标签用于在HMM中建立不同线索的状态同步,包括全帧、手形和嘴形 |

3 Proposed Approach

In this section, we first introduce the overall architecture of the proposed method. Then we elaborate the key components in our framework, including the spatial multi-cue (SMC) module and temporal multi-cue (TMC) module. Finally, we detail the sequence learning part and the joint loss optimization of our spatial-temporal multi-cue (STMC) framework.

在本节中,我们首先介绍所提方法的整体架构。然后详细阐述了该框架的关键组成部分,包括空间多线索模块和时间多线索模块。最后,我们详细介绍了序列学习部分和联合损失优化的时空多线索(STMC)框架。

3.1 Framework Overview

Given a video x = { x t } t = 1 T \mathbf{x}=\left\{x_{t}\right\}_{t=1}^{T} x={

xt}t=1Twith T frames, the target of CSLR task is to predict its corresponding sign gloss sequence ℓ = { ℓ i } i = 1 L \ell=\left\{\ell_{i}\right\}_{i=1}^{L} ℓ={

ℓi}i=1L with L words. As illustrated in Figure 1, our framework consists of three key modules, i.e., spatial representation, temporal modelling and sequence learning. First, each frame is processed by an SMC module to generate spatial features of multiple cues, including full-frame, hand, face and pose. Then, a TMC module is leveraged to capture the temporal correlations of intra-cue features and inter-cue features at different time steps and time scales. Finally, the whole STMC network equipped with bidirectional Long-Short Term Memory (BLSTM) (Hochreiter and Schmidhuber 1997) encoders utilizes connectionist temporal classification (CTC) for sequence learning and inference.

CSLR 的目的是使视频帧 X 与手语字 L 相对应。如图1所示,我们的框架由三个关键模块组成,即空间表示、时间建模和序列学习。首先,利用 SMC 模块对每一帧进行处理,生成包括全帧、手、脸和姿态等多种线索的空间特征; 然后,利用TMC模块捕捉线索内特征和线索间特征在不同时间步长和时间尺度上的时间相关性。最后,整个 STMC 网络配备了双向长期记忆(BLSTM) (Hochreiter and Schmidhuber 1997)编码器,利用连接主义者时间分类(CTC)进行序列学习和推理。  Figure 1: An overview of the proposed STMC framework. The SMC module is firstly utilized to decompose spatial features of visual cues for T frames in a video. Strips with different colors represent feature sequences of different cues. Then, the feature sequences of cues are fed into the TMC module with stacked TMC blocks and temporal pooling (TP) layers. The output of TMC module consists of feature sequence in the inter-cue path and feature sequences of N cues in the intra-cue path, which are processed by BLSTM encoders and CTC layers for sequence learning and inference. Here, N denotes the number of cues.

Figure 1: An overview of the proposed STMC framework. The SMC module is firstly utilized to decompose spatial features of visual cues for T frames in a video. Strips with different colors represent feature sequences of different cues. Then, the feature sequences of cues are fed into the TMC module with stacked TMC blocks and temporal pooling (TP) layers. The output of TMC module consists of feature sequence in the inter-cue path and feature sequences of N cues in the intra-cue path, which are processed by BLSTM encoders and CTC layers for sequence learning and inference. Here, N denotes the number of cues.

图1:建议的 STMC 框架的概述。首先利用 SMC 模块对视频 T 帧的视觉线索进行空间特征分解。不同颜色的条带代表不同线索的特征序列。然后,将线索特征序列输入基于 temporal pooling (TP) 层和 TMC blocks 的 TMC 模块。TMC模块的输出由线索间路径的特征序列和线索内路径的 N 个线索的特征序列组成,通过 BLSTM 编码器和 CTC 层进行序列学习和推理。这里,N 表示线索的数量。

T × H × W × 3 \mathrm{T} \times \mathrm{H} \times \mathrm{W} \times 3 T×H×W×3:T帧 × H高 × W宽 × 3通道(RGB

SMC:Spatial Multi-Cue (SMC) 空间多线索

T × ( C 1 + ⋯ + C N ) \mathrm{T} \times\left(C_{1}+\cdots+C_{N}\right) T×(C1+⋯+CN):T帧 × (线索1 + 线索2 +…+ 线索N);本文的线索可以理解为特征部位。比如,线索1是 Full-frame;线索2是 手形 Shape;线索3是 面部表情 Head;线索4是 姿势 Bodyjoints。

-

TMC Block:Temporal Multi-Cue Block 时间多线索块

-

TP:Temporal Pooling 时序池化

2 × T 2 \times \mathrm{T} 2×T :2 × T帧 ???

2 × C 2 \times \mathrm{C} 2×C:2 × C个线索 ???

4 × C 4 \times \mathrm{C} 4×C:4 × C个线索 ???

BLSTM:bidirectional Long-Short Term Memory 双向长短时记忆。详情参考 作者: herosunly

CTC:connectionist temporal classification 连接主义时间分类 ,用来解决输入序列和输出序列难以一一对应的问题。详情参考 作者:yudonglee

-

Inference:Friday is expected with sunshine

识别出:周五有可能出太阳。 -

Optimization:Joint Loss

优化:加入损失函数

3.2 Spatial Multi-Cue Representation

In spatial representation module, 2D-CNN is adopted to generate multi-cue features of full-frame, hands, face and pose. Here, we select VGG-11 model (Simonyan and Zisserman 2015) as the backbone network, considering its simple but effective neural architecture design. As depicted in Figure 2, the operations in SMC are composed of three steps: pose estimation, patch cropping and feature generation.

在空间表示模块中,采用 2D-CNN 生成全帧、手、脸、姿态的多线索特征。在这里,我们选择 VGG-11 模型(Simonyan and Zisserman 2015)作为骨干网,考虑到其简单而有效的神经结构设计。如图2所示,SMC 的操作包括姿态估计、patch 裁剪和 feature 生成三个步骤。

Figure 2: The SMC Module. The keypoints are estimated for patch cropping of face and hands. The output of SMC includes features from full-frame, hands, face and pose.

图2:SMC 模块。估计出人脸和手的关键点并进行 patch 裁剪。SMC 的输出包括全帧图、手、脸、姿态等特征。

模型选择:VGG-11(2015年 Simonyan, K., and Zisserman, A. 2015. Very deep convolutional networks for large-scale image recognition. In ICLR.)作为骨干网络

- crop:修剪

Pose Estimation. Deconvolutional networks (Zeiler et al. 2010) are widely used in pixel-wise prediction. For pose estimation, two deconvolutional layers are added after the 7-th convolutional layer of VGG-11. The stride of each layer is 2. So, the feature maps are 4× upsampled from the resolution 14×14 to 56×56. The output is fed into a point-wise convolutional layer to generate K predicted heat maps. In each heat map, the position of its corresponding keypoint is expected to show the highest response value. Here, K is set to 7 for keypoints at the upper body, including the nose, both shoulders, both elbows and both wrists.

姿态估计. 反卷积网络(Zeiler et al. 2010)广泛应用于像素级预测。对于姿态估计,在VGG-11的第7个卷积层之后增加了2个反卷积层。每一层的步长为2。因此,从14×14到56×56的分辨率对特征图进行了4倍的更新采样。输出反馈入各个点的卷积层,以生成K个预测热图。在每一张热图中,期望其对应的关键点的位置表现出最高的响应值。在这里,上半身的关键部位K值设为7,包括鼻子、肩膀、肘部和手腕。

To make the keypoint prediction differentiable for subsequent sequence learning, a soft-argmax layer is applied on K these heat maps. Denoting K heat maps as h = { h k } k = 1 K \mathbf{h}=\left\{h_{k}\right\}_{k=1}^{K} h={

hk}k=1K, each heat map h k ∈ R H × W h_{k} \in \mathbb{R}^{H \times W} hk∈RH×W is passed through a spatial softmax function as follows,

p i , j , k = e h i , j , k ∑ i = 1 H ∑ j = 1 W e h i , j , k p_{i, j, k}=\frac{e^{h_{i, j, k}}}{\sum_{i=1}^{H} \sum_{j=1}^{W} e^{h_{i, j, k}}} pi,j,k=∑i=1H∑j=1Wehi,j,kehi,j,k, (1)

where h i , j , k h_{i, j, k} hi,j,k is the value of heat map h k h_{k} hk at position ( i , j ) (i, j) (i,j) and p i , j , k p_{i, j, k} pi,j,k is the probability of keypoint k at position ( i , j ) (i, j) (i,j). Afterwards, the expected values of coordinates along x-axis and y-axis over the whole probability map are calculated as follows,

( x ^ , y ^ ) k = ( ∑ i = 1 H ∑ j = 1 W i − 1 H − 1 p i , j , k , ∑ i = 1 H ∑ j = 1 W j − 1 W − 1 p i , j , k ) (\hat{x}, \hat{y})_{k}=\left(\sum_{i=1}^{H} \sum_{j=1}^{W} \frac{i-1}{H-1} p_{i, j, k}, \sum_{i=1}^{H} \sum_{j=1}^{W} \frac{j-1}{W-1} p_{i, j, k}\right) (x^,y^)k=(∑i=1H∑j=1WH−1i−1pi,j,k,∑i=1H∑j=1WW−1j−1pi,j,k), (2)

Here, J k = ( x ^ , y ^ ) k ∈ [ 0 , 1 ] J_{k}=(\hat{x}, \hat{y})_{k} \in[0,1] Jk=(x^,y^)k∈[0,1] is the normalized predicted position of keypoint k. The corresponding position of ( x , y ) (x, y) (x,y) in a H × W H \times W H×W feature map is ( x ^ ( H − 1 ) + 1 , y ^ ( W − 1 ) + 1 ) (\hat{x}(H-1)+1, \hat{y}(W-1)+1) (x^(H−1)+1,y^(W−1)+1).

为了使关键点预测可微,便于后续序列学习,在这些K个预测热图上应用了soft-argmax层。K个预测的热图表示为 h = { h k } k = 1 K \mathbf{h}=\left\{h_{k}\right\}_{k=1}^{K} h={

hk}k=1K,每张热图的 h k ∈ R H × W h_{k} \in \mathbb{R}^{H \times W} hk∈RH×W 是通过如下的 空间 softmax 函数计算的,

p i , j , k = e h i , j , k ∑ i = 1 H ∑ j = 1 W e h i , j , k p_{i, j, k}=\frac{e^{h_{i, j, k}}}{\sum_{i=1}^{H} \sum_{j=1}^{W} e^{h_{i, j, k}}} pi,j,k=∑i=1H∑j=1W<

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

6927

6927

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?