Deploying ELK and Filebeat with Docker

I. Deploy Elasticsearch

1. Run a temporary Elasticsearch container to generate the default configuration

docker run -it -p 9200:9200 -p 9300:9300 --name elasticsearch --net elastic -e ES_JAVA_OPTS="-Xms1g -Xmx1g" -e "discovery.type=single-node" -e LANG=C.UTF-8 -e LC_ALL=C.UTF-8 elasticsearch:8.4.3
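The command above attaches the container to a user-defined network named elastic, which none of the steps here show being created. A minimal sketch of creating it beforehand, assuming the network does not exist yet and that Docker's default bridge driver is acceptable; ensure_network is a hypothetical helper name, not a Docker command:

```shell
#!/bin/sh
# Create a user-defined Docker network only if it is missing.
# ensure_network is a hypothetical helper for this walkthrough.
ensure_network() {
    name="$1"
    if docker network inspect "$name" >/dev/null 2>&1; then
        echo "network $name already exists"
    else
        docker network create "$name" >/dev/null && echo "network $name created"
    fi
}

# Usage (run once, before the first docker run):
#   ensure_network elastic
```

Checking with docker network inspect first keeps the helper safe to rerun, since docker network create fails when the name is already taken.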

2. Copy the directories to the host

mkdir -p /u01/elk/{elasticsearch,filebeat,kibana,logstash}

cd /u01/elk/elasticsearch/

docker cp elasticsearch:/usr/share/elasticsearch/config .

docker cp elasticsearch:/usr/share/elasticsearch/data .

docker cp elasticsearch:/usr/share/elasticsearch/plugins .

docker cp elasticsearch:/usr/share/elasticsearch/logs .

3. Check elasticsearch.yml

config/elasticsearch.yml should contain the following line:

network.host: 0.0.0.0

4. Remove the temporary container, then start the long-running container

docker rm -f elasticsearch

docker run -it \
  -d \
  -p 9200:9200 \
  -p 9300:9300 \
  --name elasticsearch \
  --net elastic \
  -e ES_JAVA_OPTS="-Xms1g -Xmx1g" \
  -e "discovery.type=single-node" \
  -e LANG=C.UTF-8 \
  -e LC_ALL=C.UTF-8 \
  -v /u01/elk/elasticsearch/config:/usr/share/elasticsearch/config \
  -v /u01/elk/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /u01/elk/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
  -v /u01/elk/elasticsearch/logs:/usr/share/elasticsearch/logs \
  elasticsearch:8.4.3

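5. Save the startup log with docker logs

The password, CA certificate fingerprint, and enrollment tokens used in later steps can be found in this output: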

✅ Elasticsearch security features have been automatically configured!

✅ Authentication is enabled and cluster connections are encrypted.

ℹ️ Password for the elastic user (reset with `bin/elasticsearch-reset-password -u elastic`):

BtFaekYKKP6m0_pp+s9g

ℹ️ HTTP CA certificate SHA-256 fingerprint:

ab72993f131f9bae496f4f4b35f955f4909557e13da751bd5f2d1198c2695af6

ℹ️ Configure Kibana to use this cluster:

• Run Kibana and click the configuration link in the terminal when Kibana starts.

• Copy the following enrollment token and paste it into Kibana in your browser (valid for the next 30 minutes):

eyJ2ZXIiOiI4LjQuMyIsImFkciI6WyIxNzIuMTguMC4yOjkyMDAiXSwiZmdyIjoiYWI3Mjk5M2YxMzFmOWJhZTQ5NmY0ZjRiMzVmOTU1ZjQ5MDk1NTdlMTNkYTc1MWJkNWYyZDExOThjMjY5NWFmNiIsImtleSI6ImtWZFZJbzhCbjJVRjUtRUxmektrOjFva29XRktxUlBxYkh4UmdXWWhhMkEifQ==

ℹ️ Configure other nodes to join this cluster:

• Copy the following enrollment token and start new Elasticsearch nodes with `bin/elasticsearch --enrollment-token <token>` (valid for the next 30 minutes):

eyJ2ZXIiOiI4LjQuMyIsImFkciI6WyIxNzIuMTguMC4yOjkyMDAiXSwiZmdyIjoiYWI3Mjk5M2YxMzFmOWJhZTQ5NmY0ZjRiMzVmOTU1ZjQ5MDk1NTdlMTNkYTc1MWJkNWYyZDExOThjMjY5NWFmNiIsImtleSI6ImsxZFZJbzhCbjJVRjUtRUxmekttOnJxY0NUekM0VGR5YlRVaXF4dDRRQ1EifQ==

If you're running in Docker, copy the enrollment token and run:

`docker run -e "ENROLLMENT_TOKEN=<token>" docker.elastic.co/elasticsearch/elasticsearch:8.4.3`
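An enrollment token like the ones printed above is base64-encoded JSON, so decoding it shows which Elasticsearch address and CA fingerprint it carries. The sketch below decodes the Kibana token from this log; decode_token is a hypothetical helper name, and base64 -d assumes a GNU, BusyBox, or recent macOS base64:

```shell
#!/bin/sh
# Decode an Elasticsearch enrollment token (base64-encoded JSON).
# decode_token is a hypothetical helper for this walkthrough.
decode_token() {
    printf '%s' "$1" | base64 -d
}

# Kibana enrollment token copied from the startup log above.
token='eyJ2ZXIiOiI4LjQuMyIsImFkciI6WyIxNzIuMTguMC4yOjkyMDAiXSwiZmdyIjoiYWI3Mjk5M2YxMzFmOWJhZTQ5NmY0ZjRiMzVmOTU1ZjQ5MDk1NTdlMTNkYTc1MWJkNWYyZDExOThjMjY5NWFmNiIsImtleSI6ImtWZFZJbzhCbjJVRjUtRUxmektrOjFva29XRktxUlBxYkh4UmdXWWhhMkEifQ=='

# Prints a JSON object with the fields ver, adr, fgr and key.
decode_token "$token"
echo
```

The adr field holds the container address 172.18.0.2:9200 and fgr holds the CA fingerprint; both values reappear in the Logstash configuration in part III. The key field is a credential, so a decoded token should be handled like a password.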

II. Deploy Kibana

1. Run a temporary Kibana container to generate the default configuration

docker run -it -d --restart=always --log-driver json-file --log-opt max-size=100m --log-opt max-file=2 --name kibana -p 5601:5601 --net elastic kibana:8.4.3

2. Copy the configuration files to the host with docker cp

cd /u01/elk/kibana

docker cp kibana:/usr/share/kibana/config/ .

docker cp kibana:/usr/share/kibana/data/ .

docker cp kibana:/usr/share/kibana/plugins/ .

docker cp kibana:/usr/share/kibana/logs/ .

3. Remove the temporary Kibana container, then start the long-running container

docker rm -f kibana

docker run -it \
  -d \
  --restart=always \
  --log-driver json-file \
  --log-opt max-size=100m \
  --log-opt max-file=2 \
  --name kibana \
  -p 5601:5601 \
  --net elastic \
  -v /u01/elk/kibana/config:/usr/share/kibana/config \
  -v /u01/elk/kibana/data:/usr/share/kibana/data \
  -v /u01/elk/kibana/plugins:/usr/share/kibana/plugins \
  -v /u01/elk/kibana/logs:/usr/share/kibana/logs \
  kibana:8.4.3

III. Deploy Logstash

1. Run a temporary Logstash container to generate the default configuration

docker run -it -d --name logstash -p 9600:9600 -p 5044:5044 --net elastic logstash:8.4.3

2. Copy the configuration files to the host with docker cp

cd /u01/elk/logstash

docker cp does not expand wildcards such as config/*, so copy each directory as a whole:

docker cp logstash:/usr/share/logstash/config ./

docker cp logstash:/usr/share/logstash/pipeline ./

3. Edit the Logstash configuration, part 1: logstash.yml

cat config/logstash.yml

http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.hosts: [ "https://172.18.0.2:9200" ]
xpack.monitoring.elasticsearch.username: "elastic"
xpack.monitoring.elasticsearch.password: "BtFaekYKKP6m0_pp+s9g"  # from the Elasticsearch startup log above
xpack.monitoring.elasticsearch.ssl.certificate_authority: "/usr/share/logstash/config/certs/http_ca.crt"
xpack.monitoring.elasticsearch.ssl.ca_trusted_fingerprint: "ab72993f131f9bae496f4f4b35f955f4909557e13da751bd5f2d1198c2695af6"  # from the Elasticsearch startup log above
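The settings above point at /usr/share/logstash/config/certs/http_ca.crt, but the steps so far do not show that file being put in place. A minimal sketch, assuming the CA certificate generated by Elasticsearch sits under config/certs/http_ca.crt inside the directory copied in part I (the location used by the official Elasticsearch 8 Docker image); install_ca is a hypothetical helper name:

```shell
#!/bin/sh
# Copy the Elasticsearch HTTP CA certificate into a certs directory
# that will be bind-mounted into the Logstash container.
# install_ca is a hypothetical helper for this walkthrough.
install_ca() {
    src="$1"
    dst_dir="$2"
    if [ ! -f "$src" ]; then
        echo "CA certificate not found: $src" >&2
        return 1
    fi
    mkdir -p "$dst_dir" && cp "$src" "$dst_dir/http_ca.crt"
}

# Usage (paths follow the layout used in this article):
#   install_ca /u01/elk/elasticsearch/config/certs/http_ca.crt /u01/elk/logstash/config/certs
#   openssl x509 -fingerprint -sha256 -noout -in /u01/elk/logstash/config/certs/http_ca.crt
```

The openssl command prints the SHA-256 fingerprint as colon-separated uppercase hex; with the colons removed and lowercased it should equal the fingerprint in the startup log.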

4. Edit the Logstash configuration, part 2: pipeline/logstash.conf

cat pipeline/logstash.conf

input {
  beats {
    port => 5044
  }
}

filter {
}

output {
  # Route events by the fields.project value set in filebeat.yml.
  if [fields][project] == "nginx-accesslog" {
    elasticsearch {
      hosts => ["https://172.18.0.2:9200"]
      index => "server-%{+YYYY.MM.dd}"
      ssl => true
      # Certificate verification is switched off here, so cacert is not enforced.
      ssl_certificate_verification => false
      cacert => "/usr/share/logstash/config/certs/http_ca.crt"
      ca_trusted_fingerprint => "ab72993f131f9bae496f4f4b35f955f4909557e13da751bd5f2d1198c2695af6"
      user => "elastic"
      password => "BtFaekYKKP6m0_pp+s9g"
    }
  }
  if [fields][project] == "nginx-errorlog" {
    elasticsearch {
      hosts => ["https://172.18.0.2:9200"]
      index => "error-%{+YYYY.MM.dd}"
      ssl => true
      ssl_certificate_verification => false
      cacert => "/usr/share/logstash/config/certs/http_ca.crt"
      ca_trusted_fingerprint => "ab72993f131f9bae496f4f4b35f955f4909557e13da751bd5f2d1198c2695af6"
      user => "elastic"
      password => "BtFaekYKKP6m0_pp+s9g"
    }
  }
}

5. Remove the temporary Logstash container, then start the long-running container

docker rm -f logstash

docker run -it \
  -d \
  --name logstash \
  -p 9600:9600 \
  -p 5044:5044 \
  --net elastic \
  -v /u01/elk/logstash/config:/usr/share/logstash/config \
  -v /u01/elk/logstash/pipeline:/usr/share/logstash/pipeline \
  logstash:8.4.3

IV. Deploy Filebeat

1. Run a temporary Filebeat container to generate the default configuration

docker run -it -d --name filebeat --network host -e TZ=Asia/Shanghai elastic/filebeat:8.4.3 filebeat -e -c /usr/share/filebeat/filebeat.yml

2. Copy the configuration files to the host with docker cp

cd /u01/elk/filebeat

docker cp filebeat:/usr/share/filebeat/filebeat.yml .

docker cp filebeat:/usr/share/filebeat/data .

docker cp filebeat:/usr/share/filebeat/logs .

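3. Edit the Filebeat configuration to handle nginx access/error logs that are not JSON-formatted

Whenever possible, have nginx write its logs as JSON yourself; the configuration below covers the case where the logs are in the default, unformatted layout.

cat filebeat.yml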

filebeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml
    reload.enabled: false

processors:
  - add_host_metadata: ~
    #when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~

#output.elasticsearch:
#  hosts: '${ELASTICSEARCH_HOSTS:elasticsearch:9200}'
#  username: '${ELASTICSEARCH_USERNAME:}'
#  password: '${ELASTICSEARCH_PASSWORD:}'

filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /usr/share/filebeat/target/access.log  # log file to collect; this is the path inside the container
    fields:
      project: nginx-accesslog
    scan_frequency: 10s
    #multiline.pattern: '((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})(\.((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})){3}'
    multiline.pattern: '^\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}'
    # negate: true with match: after appends lines that do NOT match the
    # pattern to the preceding line that does, so each event starts at an IP.
    multiline.negate: true
    multiline.match: after
    processors:
      - dissect:
          # Quotes are written literally: inside YAML single quotes a
          # backslash is not an escape character.
          tokenizer: '%{ip} - - [%{timestamp}] "%{method} %{url} HTTP/%{http_version}" %{response_code} %{bytes} "%{referrer}" "%{user_agent}"'
          field: "message"
          target_prefix: ""
          ignore_missing: true
    # '^[0-9]{4}-[0-9]{2}-[0-9]{2}'

  - type: log
    enabled: true
    paths:
      - /usr/share/filebeat/target/error.log  # log file to collect; this is the path inside the container
    fields:
      project: nginx-errorlog
    scan_frequency: 10s
    #multiline.pattern: '((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})(\.((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})){3}'
    multiline.pattern: '^[0-9]{4}/[0-9]{2}/[0-9]{2} '
    # Each event starts at a date; non-matching lines join the previous event.
    multiline.negate: true
    multiline.match: after
    processors:
      - dissect:
          tokenizer: '%{timestamp} [%{log.level}] %{nginx.error}: %{error_message}, client: %{client_ip}, server: %{server_name}, request: "%{request}", host: "%{host}"'
          field: "message"
          target_prefix: ""
          ignore_missing: true
    # '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
    json.keys_under_root: true  # JSON decoding; only relevant when the log lines are JSON
    json.overwrite_keys: true

#- type: log
#  enabled: true
#  paths:
#    - /usr/share/filebeat/target/error.log.  # log file to collect; this is the path inside the container
#  scan_frequency: 10s
#  exclude_lines: ['error']
#  multiline.pattern: '^[0-9]{4}/[0-9]{2}/[0-9]{2}'
#  multiline.negate: false
#  multiline.match: after
#

output.logstash:
  enabled: true
  # The Logstash hosts
  hosts: ["192.168.10.222:5044"]
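Before restarting Filebeat, the two multiline.pattern values above can be sanity-checked against sample log lines. The sketch below uses grep -E, which does not understand \d, so the access-log pattern is rewritten with [0-9]; the sample lines are shortened from the nginx samples in part V, and is_event_start is a hypothetical helper name. This only checks the regular expressions, not Filebeat's own multiline handling:

```shell
#!/bin/sh
# The two multiline patterns from filebeat.yml, with \d written as [0-9] for grep -E.
ACCESS_RE='^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}'
ERROR_RE='^[0-9]{4}/[0-9]{2}/[0-9]{2} '

# Succeeds when the line ($2) matches the pattern ($1), i.e. starts a new event.
is_event_start() {
    printf '%s\n' "$2" | grep -Eq "$1"
}

access_line='192.168.10.69 - - [08/May/2024:01:07:20 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0"'
error_line='2024/05/08 01:07:17 [error] 2966095#0: *49 open() "/etc/nginx/html/asaa" failed (2: No such file or directory), client: 192.168.10.69'

is_event_start "$ACCESS_RE" "$access_line" && echo "access pattern matches"
is_event_start "$ERROR_RE" "$error_line" && echo "error pattern matches"
is_event_start "$ACCESS_RE" "    an indented continuation line" || echo "continuation line does not match"
```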

4. Remove the temporary Filebeat container, then start the long-running container

docker rm -f filebeat

docker run --name=filebeat \
  --hostname=ubuntu \
  --user=filebeat \
  --env=TZ=Asia/Shanghai \
  --volume=/etc/nginx/logs/:/usr/share/filebeat/target/ \
  --volume=/u01/elk/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml \
  --volume=/u01/elk/filebeat/data:/usr/share/filebeat/data \
  --volume=/u01/elk/filebeat/logs:/usr/share/filebeat/logs \
  --network=host \
  --workdir=/usr/share/filebeat \
  --restart=no \
  --runtime=runc \
  --detach=true \
  -t \
  elastic/filebeat:8.4.3 \
  filebeat -e -c /usr/share/filebeat/filebeat.yml

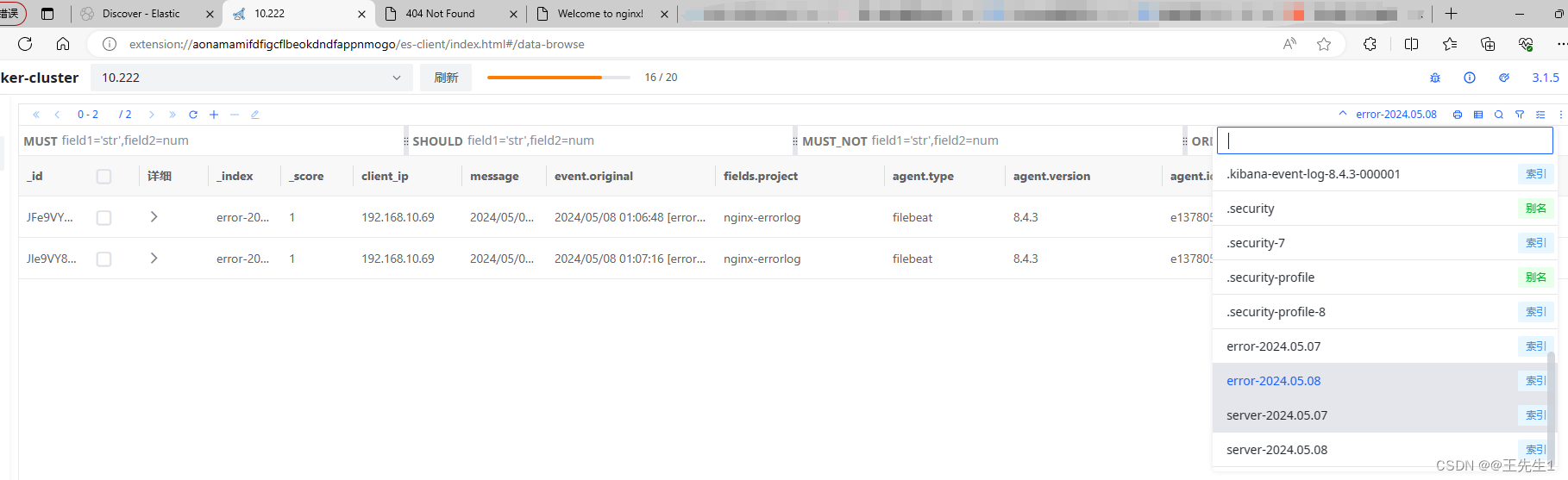

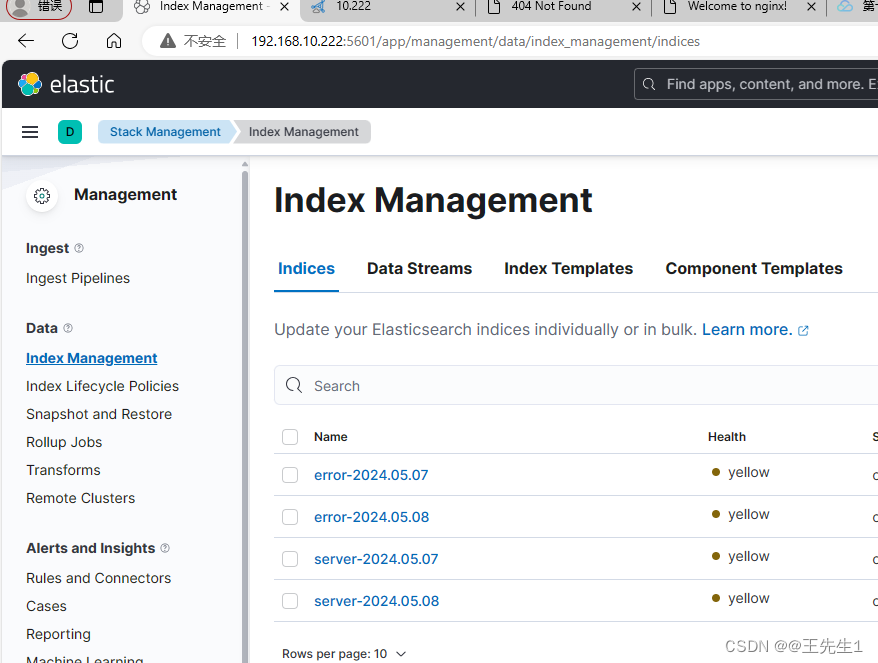

V. Final result

Original nginx log formats

access.log

192.168.10.69 - - [08/May/2024:01:07:20 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 Edg/123.0.0.0"

192.168.10.69 - - [08/May/2024:01:07:20 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 Edg/123.0.0.0"

error.log

2024/05/08 01:07:17 [error] 2966095#0: *49 open() "/etc/nginx/html/asaa" failed (2: No such file or directory), client: 192.168.10.69, server: localhost, request: "GET /asaa HTTP/1.1", host: "192.168.10.222"

2024/05/08 01:07:17 [error] 2966095#0: *49 open() "/etc/nginx/html/asaa" failed (2: No such file or directory), client: 192.168.10.69, server: localhost, request: "GET /asaa HTTP/1.1", host: "192.168.10.222"
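To see which fields the access-log dissect tokenizer in part IV is meant to pull out of a line like the ones above, the same split can be approximated with sed. This is only a regex stand-in for illustration, not how Filebeat implements dissect; parse_access is a hypothetical helper name and the field names are taken from the tokenizer:

```shell
#!/bin/sh
# Approximate the access-log dissect tokenizer with a sed -E regular expression.
# parse_access is a hypothetical helper; prints nothing if the line does not match.
parse_access() {
    printf '%s\n' "$1" | sed -nE 's|^([^ ]+) - - \[([^]]+)\] "([^ ]+) ([^ ]+) HTTP/([^"]+)" ([0-9]+) ([0-9]+) "([^"]*)" "([^"]*)"$|ip=\1 method=\3 url=\4 http_version=\5 response_code=\6 bytes=\7|p'
}

line='192.168.10.69 - - [08/May/2024:01:07:20 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 Edg/123.0.0.0"'

parse_access "$line"
# prints: ip=192.168.10.69 method=GET url=/ http_version=1.1 response_code=304 bytes=0
```

The timestamp, referrer, and user agent are captured as groups 2, 8, and 9 but left out of the printed summary to keep the output short.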
