Installing Scrapy and Basic Usage

Installation

👉 https://blog.csdn.net/qq_44766883/article/details/107790504

Basic usage

import scrapy

class QuoteSpider(scrapy.Spider):
    name = "quote"
    start_urls = ["http://quotes.toscrape.com/"]

    def parse(self, response):
        # quotes = response.css("div.quote")  # CSS equivalent of the XPath below
        quotes = response.xpath("//div[@class='quote']")
        for quote in quotes:
            # Each quote becomes one scraped item (a plain dict)
            yield {
                "text": quote.css("span.text::text").extract_first(),
                "author": quote.xpath("./span/small/text()").extract_first(),
            }

        # Follow the "Next" link, if there is one, and parse it with this same method
        next_page = response.xpath("//li[@class='next']/a/@href").extract_first()
        if next_page:
            yield response.follow(next_page, self.parse)

Running the spider

Print to the console

scrapy runspider quotes_spider.py

Save to a specified file

scrapy runspider scrapy_learn/quotes_spider.py -o ./scrapy_learn/quotes.json

Specify the file format

scrapy runspider scrapy_learn/quotes_spider.py -o ./scrapy_learn/quotes.csv -t csv

Common commands

Create a project

scrapy startproject qianmu

Generate a spider file

# scrapy genspider [spider name] [target domain]
scrapy genspider qianmu_new qianmu.iguye.com

Run a spider

# Run the spider named qianmu_new (must be run inside the project directory)
scrapy crawl qianmu_new
scrapy crawl qianmu_new -o qianmu_new.json
scrapy crawl qianmu_new -o qianmu_new.csv -t csv
# Run a standalone spider file (no project required)
scrapy runspider quotes_spider.py
scrapy runspider scrapy_learn/quotes_spider.py -o ./scrapy_learn/quotes.json
scrapy runspider scrapy_learn/quotes_spider.py -o ./scrapy_learn/quotes.csv -t csv

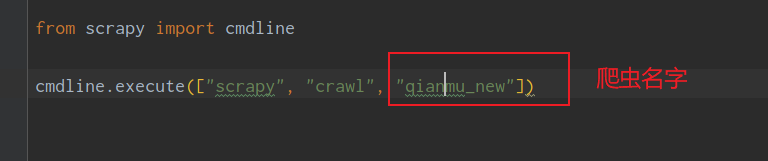

Create the following file so that the spider can be run directly.

Debugging a spider

# Open the Scrapy shell; inside a project it uses the project's settings
scrapy shell

# With a URL argument, the shell requests that URL and drops into the console once the request succeeds
scrapy shell http://www.qianmu.org/ranking/1528.html

# Call the spider's parse method on the current response
result = spider.parse(response)

# result is a generator, no surprise there
result  # <generator object QianmuNewSpider.parse at 0x0000025096AEF200>

# one is a Request object
one = next(result)
one  # <GET http://www.qianmu.org/%E9%BA%BB%E7%9C%81%E7%90%86%E5%B7%A5%E5%AD%A6%E9%99%A2>
type(one)  # <class 'scrapy.http.request.Request'>

# callback is the parse_university passed in yield response.follow(link, self.parse_university)
one.callback  # <bound method QianmuNewSpider.parse_university of <QianmuNewSpider 'qianmu_new' at 0x25096aa3640>>

# Download that request; fetch() rebinds request and response in the shell
fetch(one)  # log: 2020-08-04 20:54:46 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://www.qianmu.org/%E9%BA%BB%E7%9C%81%E7%90%86%E5%B7%A5%E5%AD%A6%E9%99%A2> (referer: None) ['cached']

# Run the callback on the new response to get the scraped data
data = next(one.callback(response))  # printed: 18 26
data  # the data scraped from this request

# Fetch the remaining requests in a loop
for req in result:
    fetch(req)

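Inside the shell, the following functions and objects are available:

| Name | Description |
|---|---|
| fetch | Requests a URL or a Request object. Note: after a successful request, the request and response objects in the current scope are rebound automatically |
| view | Opens the web page held in a response object in the browser |
| shelp | Prints the help information |
| spider | An instance of the corresponding Spider class |
| settings | The Settings object holding all configuration |
| crawler | The current Crawler object |
| scrapy | The scrapy module |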

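# Download a page using the project's settings, then open it in a browser
scrapy view <url>

# Download a page using the project's settings, then print it to the console
scrapy fetch <url>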

The architecture diagram

Commit this diagram to memory!

The data flow in Scrapy is controlled by the execution engine, and goes like this:

1. The Engine gets the initial Requests to crawl from the Spider.
2. The Engine schedules the Requests in the Scheduler and asks for the next Requests to crawl.
3. The Scheduler returns the next Requests to the Engine.
4. The Engine sends the Requests to the Downloader, passing through the Downloader Middlewares (see process_request()).
5. Once the page finishes downloading, the Downloader generates a Response (with that page) and sends it to the Engine, passing through the Downloader Middlewares (see process_response()).
6. The Engine receives the Response from the Downloader and sends it to the Spider for processing, passing through the Spider Middleware (see process_spider_input()).
7. The Spider processes the Response and returns scraped items and new Requests (to follow) to the Engine, passing through the Spider Middleware (see process_spider_output()).
8. The Engine sends processed items to Item Pipelines, then sends processed Requests to the Scheduler and asks for possible next Requests to crawl.
9. The process repeats (from step 1) until there are no more requests from the Scheduler.
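To make the nine steps concrete, here is a toy model of the loop in plain Python. It is not Scrapy's implementation: there is no concurrency, the middlewares are left out, and the "web" is a hypothetical dictionary of pages. It only shows which component hands what to which:

```python
from collections import deque

# Hypothetical pages: url -> (items on the page, links on the page)
FAKE_WEB = {
    "/page/1": (["quote A", "quote B"], ["/page/2"]),
    "/page/2": (["quote C"], []),
}


def downloader(url):
    """Stand-in for the Downloader: turns a request (a URL) into a response."""
    return {"url": url, "content": FAKE_WEB[url]}


def spider_parse(response):
    """Stand-in for the Spider: yields ("item", x) and ("request", url) tuples."""
    items, links = response["content"]
    for item in items:
        yield ("item", item)
    for link in links:
        yield ("request", link)


def run_engine(start_urls):
    scheduler = deque(start_urls)  # steps 1-2: initial requests go to the Scheduler
    seen = set(start_urls)
    pipeline = []                  # stand-in for the Item Pipelines
    while scheduler:               # step 9: repeat until the Scheduler is empty
        url = scheduler.popleft()  # step 3: the Scheduler hands back the next request
        response = downloader(url)  # steps 4-5: download and return a response
        for kind, value in spider_parse(response):  # steps 6-7: the Spider parses it
            if kind == "item":
                pipeline.append(value)   # step 8: items go to the pipelines
            elif value not in seen:      # drop duplicates, like Scrapy's default dupe filter
                seen.add(value)
                scheduler.append(value)  # step 8: new requests go to the Scheduler
    return pipeline


print(run_engine(["/page/1"]))  # ['quote A', 'quote B', 'quote C']
```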
