1. 双目测距基础

本项目是在YOLOV8 + 双目测距的基础上实现的,测距原理可见上边文章

2. 计数功能

2.1 计数模块

在指定区域内计数模块

region_points = [(20, 20), (20, 600), (1200, 600), (1200, 20)]

count_class1 = 0

count_class2 = 0

for i, box in enumerate(boxes):

x1, y1, x2, y2 = box[:4]

x_center = (x1 + x2) / 2

y_center = (y1 + y2) / 2

center_point = (int(x_center), int(y_center))

if is_inside_region(center_point, region_points):

if box[-1] == 0: # 类别1的标签.人

count_class1 += 1

elif box[-1] == 2: # 类别2的标签,车

count_class2 += 1

2.2 判断模块

def is_inside_region(point, region_points):

"""

判断点是否在指定区域内

"""

return cv2.pointPolygonTest(np.array(region_points), point, False) >= 0

3. 初始代码

import time

import cv2

import numpy as np

from ultralytics import YOLO

from stereo import stereoconfig_040_2

from stereo.stereo import stereo_40

from stereo.stereo import stereo_threading, MyThread

from stereo.dianyuntu_yolo import preprocess, undistortion, getRectifyTransform, draw_line, rectifyImage, \

stereoMatchSGBM

def is_inside_region(point, region_points):

"""

判断点是否在指定区域内

"""

return cv2.pointPolygonTest(np.array(region_points), point, False) >= 0

def count_objects_in_region(boxes, region_points):

"""

在指定区域内计数物体

"""

count = 0

for box in boxes:

x_center, y_center, width, height = box

center_point = (int(x_center), int(y_center))

if is_inside_region(center_point, region_points):

count += 1

return count

def detect():

model = YOLO("yolov8n.pt")

cv2.namedWindow('region couter', cv2.WINDOW_NORMAL)

cv2.resizeWindow('region couter', 1280, 360) # 设置宽高

cap = cv2.VideoCapture('ultralytics/assets/a3.mp4')

out_video = cv2.VideoWriter('output.avi', cv2.VideoWriter_fourcc(*'XVID'), 30, (2560, 720))

region_points = [(20, 20), (20, 600), (1200, 600), (1200, 20)]

region_points_np = np.array(region_points)

assert cap.isOpened(), "Error reading video file"

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

config = stereoconfig_040_2.stereoCamera()

map1x, map1y, map2x, map2y, Q = getRectifyTransform(720, 1280, config)

thread = MyThread(stereo_threading, args=(config, im0, map1x, map1y, map2x, map2y, Q))

thread.start()

tracks = model.track(im0, persist=True)

annotated_frame = tracks[0].plot()

boxes = tracks[0].boxes.data

count_class1 = 0

count_class2 = 0

for i, box in enumerate(boxes):

x1, y1, x2, y2 = box[:4]

x_center = (x1 + x2) / 2

y_center = (y1 + y2) / 2

center_point = (int(x_center), int(y_center))

if is_inside_region(center_point, region_points):

if box[-1] == 0: # 类别1的标签.人

count_class1 += 1

elif box[-1] == 2: # 类别2的标签,车

count_class2 += 1

if (0 < x2 < 1280):

thread.join()

points_3d = thread.get_result()

a = points_3d[int(y_center), int(x_center), 0] / 1000

b = points_3d[int(y_center), int(x_center), 1] / 1000

c = points_3d[int(y_center), int(x_center), 2] / 1000

distance = ((a ** 2 + b ** 2 + c ** 2) ** 0.5)

if (distance != 0):

text_dis_avg = "dis:%0.2fm" % distance

cv2.putText(annotated_frame, text_dis_avg, (int(x2 + 5), int(y1 + 30)), cv2.FONT_ITALIC, 1.0,

(0, 255, 255), 2)

cv2.polylines(annotated_frame, [region_points_np], isClosed=True, color=(255, 0, 0), thickness=2)

print("Number of objects in class 1:", count_class1)

print("Number of objects in class 2:", count_class2)

cv2.imshow("region couter", annotated_frame)

out_video.write(annotated_frame)

if cv2.waitKey(1) == ord('q'):

break

out_video.release()

cap.release()

cv2.destroyAllWindows()

if __name__ == '__main__':

detect()

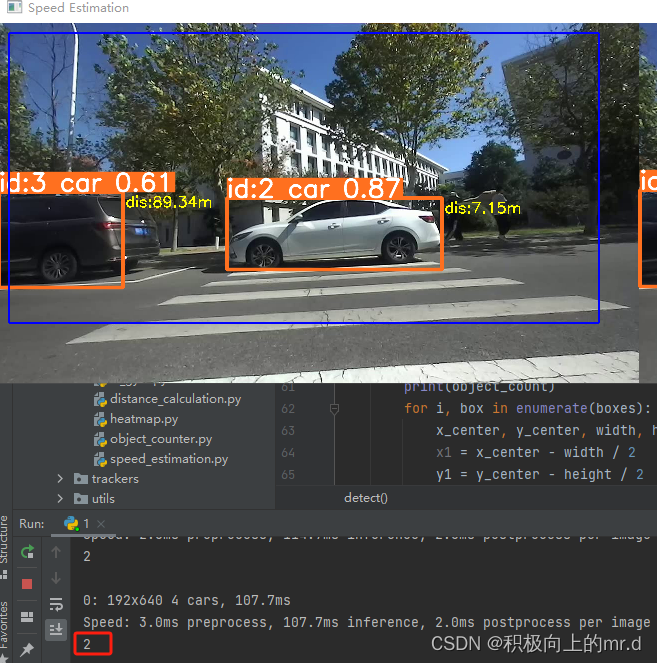

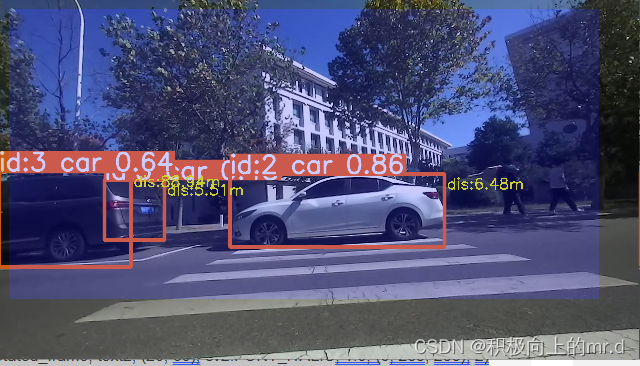

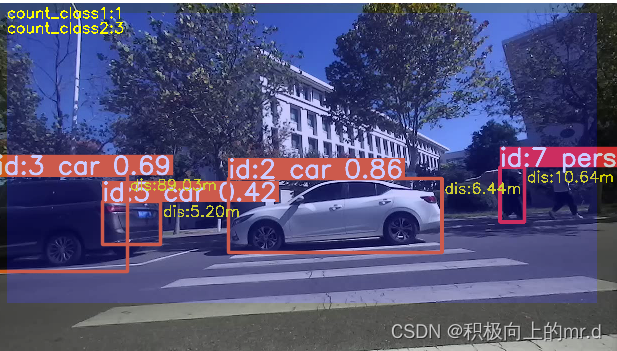

4. 实验结果

本实验可以实现区域多类别计数

(1)区域计数实验

(2)如需把区域背景换成填充色,可将上边代码里的

cv2.polylines(annotated_frame, [region_points_np], isClosed=True, color=(255, 0, 0), thickness=2)

替换为

region_mask = np.zeros_like(annotated_frame)

cv2.fillPoly(region_mask, [region_points_np], color=(255, 0, 0))

# 使用透明度将填充后的区域与原始帧混合

alpha = 0.2 # 调整透明度

annotated_frame = cv2.addWeighted(annotated_frame, 1 - alpha, region_mask, alpha, 0)

(3)计数在图上显示

在annotated_frame = cv2.addWeighted(annotated_frame, 1 - alpha, region_mask, alpha, 0)添加

text1 = "count_class1:%d" % count_class1

text2 = "count_class2:%d" % count_class2

cv2.putText(annotated_frame, text1, (20, 30), cv2.FONT_ITALIC, 1.0, (0, 255, 255), 2)

cv2.putText(annotated_frame, text2, (20, 60), cv2.FONT_ITALIC, 1.0, (0, 255, 255), 2)

5. 完整代码

import time

import cv2

import numpy as np

from ultralytics import YOLO

from stereo import stereoconfig_040_2

from stereo.stereo import stereo_40

from stereo.stereo import stereo_threading, MyThread

from stereo.dianyuntu_yolo import preprocess, undistortion, getRectifyTransform, draw_line, rectifyImage, \

stereoMatchSGBM

def is_inside_region(point, region_points):

"""

判断点是否在指定区域内

"""

return cv2.pointPolygonTest(np.array(region_points), point, False) >= 0

def count_objects_in_region(boxes, region_points):

"""

在指定区域内计数物体

"""

count = 0

for box in boxes:

x_center, y_center, width, height = box

center_point = (int(x_center), int(y_center))

if is_inside_region(center_point, region_points):

count += 1

return count

def detect():

model = YOLO("yolov8n.pt")

cv2.namedWindow('region couter', cv2.WINDOW_NORMAL)

cv2.resizeWindow('region couter', 1280, 360) # 设置宽高

cap = cv2.VideoCapture('ultralytics/assets/a3.mp4')

out_video = cv2.VideoWriter('output.avi', cv2.VideoWriter_fourcc(*'XVID'), 30, (2560, 720))

region_points = [(20, 20), (20, 600), (1200, 600), (1200, 20)]

region_points_np = np.array(region_points)

assert cap.isOpened(), "Error reading video file"

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

config = stereoconfig_040_2.stereoCamera()

map1x, map1y, map2x, map2y, Q = getRectifyTransform(720, 1280, config)

thread = MyThread(stereo_threading, args=(config, im0, map1x, map1y, map2x, map2y, Q))

thread.start()

tracks = model.track(im0, persist=True)

annotated_frame = tracks[0].plot()

boxes = tracks[0].boxes.data

count_class1 = 0

count_class2 = 0

for i, box in enumerate(boxes):

x1, y1, x2, y2 = box[:4]

x_center = (x1 + x2) / 2

y_center = (y1 + y2) / 2

center_point = (int(x_center), int(y_center))

if is_inside_region(center_point, region_points):

if box[-1] == 0: # 类别1的标签.人

count_class1 += 1

elif box[-1] == 2: # 类别2的标签,车

count_class2 += 1

if (0 < x2 < 1280):

thread.join()

points_3d = thread.get_result()

a = points_3d[int(y_center), int(x_center), 0] / 1000

b = points_3d[int(y_center), int(x_center), 1] / 1000

c = points_3d[int(y_center), int(x_center), 2] / 1000

distance = ((a ** 2 + b ** 2 + c ** 2) ** 0.5)

if (distance != 0):

text_dis_avg = "dis:%0.2fm" % distance

cv2.putText(annotated_frame, text_dis_avg, (int(x2 + 5), int(y1 + 30)), cv2.FONT_ITALIC, 1.0,

(0, 255, 255), 2)

# cv2.polylines(annotated_frame, [region_points_np], isClosed=True, color=(255, 0, 0), thickness=2)

region_mask = np.zeros_like(annotated_frame)

cv2.fillPoly(region_mask, [region_points_np], color=(255, 0, 0))

# 使用透明度将填充后的区域与原始帧混合

alpha = 0.2 # 调整透明度

annotated_frame = cv2.addWeighted(annotated_frame, 1 - alpha, region_mask, alpha, 0)

text1 = "count_class1:%d" % count_class1

text2 = "count_class2:%d" % count_class2

cv2.putText(annotated_frame, text1, (20, 30), cv2.FONT_ITALIC, 1.0, (0, 255, 255), 2)

cv2.putText(annotated_frame, text2, (20, 60), cv2.FONT_ITALIC, 1.0, (0, 255, 255), 2)

print("Number of objects in class 1:", count_class1)

print("Number of objects in class 2:", count_class2)

cv2.imshow("region couter", annotated_frame)

out_video.write(annotated_frame)

if cv2.waitKey(1) == ord('q'):

break

out_video.release()

cap.release()

cv2.destroyAllWindows()

if __name__ == '__main__':

detect()

6. 源码

可以去 YOLOV8 + 双目测距 下载源码,然后把上边主代码贴进去运行即可

本文介绍了如何在YOLOV8基础上结合双目测距技术,实现在指定区域对人和车辆进行多类别的计数,并展示了计数模块和判断模块的代码示例。实验结果显示了在视频流中实时计数的效果。

本文介绍了如何在YOLOV8基础上结合双目测距技术,实现在指定区域对人和车辆进行多类别的计数,并展示了计数模块和判断模块的代码示例。实验结果显示了在视频流中实时计数的效果。

2292

2292

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?