Hadoop Configuration

Docker

Install Docker

$ yum install docker

Enable Docker to start at boot

$ systemctl enable docker

Start Docker

$ systemctl start docker

Check the Docker version

[root@VM-16-13-centos ~]$ docker -v

"Docker version 1.13.1, build 0be3e21/1.13.1"

Configure the Alibaba Cloud registry mirror for Docker

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://i05bzcuy.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
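To confirm that the daemon picked up the mirror after the restart, one option is to look for it in the output of docker info, which lists configured mirrors under a "Registry Mirrors:" heading. The helper below is only a sketch built on that assumption; the name list_mirrors is made up for this post and is not a Docker command.

```shell
# list_mirrors: read `docker info` output on stdin and print the URLs that
# follow the "Registry Mirrors:" heading. Hypothetical helper name.
list_mirrors() {
    awk '
        /^ *Registry Mirrors:/ { in_block = 1; next }
        in_block && /^ *https?:\/\// { gsub(/^ +| +$/, ""); print; next }
        { in_block = 0 }
    '
}
```

Running `docker info | list_mirrors` on the host should then print the URL from /etc/docker/daemon.json; empty output suggests the file was not read.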

Java environment setup

First, run the java command in a terminal to see whether Java is already installed:

[root@localhost ~]$ java

-bash: java: command not found

Download the JDK with wget, here from the Huawei Cloud JDK mirror:

https://repo.huaweicloud.com/java/jdk/

If wget is not installed, install it with yum install wget.

$ wget https://repo.huaweicloud.com/java/jdk/12.0.1+12/jdk-12.0.1_linux-x64_bin.tar.gz

[root@localhost ~]# ll

total 185540

-rw-------. 1 root root 993 Jan 22 2018 anaconda-ks.cfg

-rw-r--r--. 1 root root 189981475 Apr 2 2019 jdk-12.0.1_linux-x64_bin.tar.gz

drwxr-xr-x. 2 root root 4096 Jun 6 2020 test

Configure JAVA_HOME

$ cd /usr/local

$ mkdir java

$ mv ~/jdk-12.0.1_linux-x64_bin.tar.gz /usr/local/java

$ cd java/

$ tar -zxvf jdk-12.0.1_linux-x64_bin.tar.gz

[root@localhost java]$ ll

total 185532

drwxr-xr-x. 9 root root 99 Dec 31 00:45 jdk-12.0.1

-rw-r--r--. 1 root root 189981475 Apr 2 2019 jdk-12.0.1_linux-x64_bin.tar.gz

$ cd jdk-12.0.1

$ vim /etc/profile

Append the following to the end of the file:

# config the java home

JAVA_HOME=/usr/local/java/jdk-12.0.1

CLASSPATH=$JAVA_HOME/lib/

PATH=$PATH:$JAVA_HOME/bin

export PATH JAVA_HOME CLASSPATH

Apply the configuration:

source /etc/profile

Verify the installation:

$ java -version

java version "12.0.1" 2019-04-16

Java(TM) SE Runtime Environment (build 12.0.1+12)

Java HotSpot(TM) 64-Bit Server VM (build 12.0.1+12, mixed mode, sharing)
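If java -version still fails after sourcing /etc/profile, the usual cause is a JAVA_HOME that does not point at the unpacked JDK. A minimal check, assuming nothing beyond the variables set above (the function name check_java_home is hypothetical), could look like this:

```shell
# check_java_home: succeed if $JAVA_HOME is set and contains an executable
# bin/java; otherwise print a short diagnostic to stderr and fail.
check_java_home() {
    if [ -z "${JAVA_HOME:-}" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$JAVA_HOME/bin/java" ]; then
        echo "no executable java under $JAVA_HOME/bin" >&2
        return 1
    fi
    echo "JAVA_HOME looks usable: $JAVA_HOME"
}
```

With the profile above loaded, `check_java_home` should report /usr/local/java/jdk-12.0.1.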

Pull the CentOS base image with Docker

$ docker search centos

$ docker pull centos

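After pulling the CentOS image, run docker images to list local images; the centos image should now be present.

Next, we use this base image to build a container that includes Hadoop.

Run a container with Docker, configure Hadoop, and build the image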

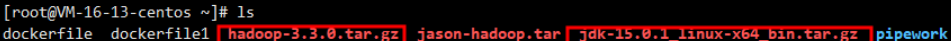

First, prepare two installation packages on the host:

- jdk-15.0.1_linux-x64_bin.tar.gz: the JDK

- hadoop-3.3.0.tar.gz: the Hadoop distribution

Create a file named dockerfile in the current directory:

- dockerfile

FROM centos

MAINTAINER lengdanran

RUN yum install -y openssh-server sudo

RUN sed -i 's/UsePAM yes/UsePAM no/g' /etc/ssh/sshd_config

RUN yum install -y openssh-clients

# The root password inside the container is "root"; change it as needed

RUN echo "root:root" | chpasswd

# Grant the root user full sudo rights inside the container

RUN echo "root ALL=(ALL) ALL" >> /etc/sudoers

# Generate the SSH host keys that sshd needs before it can accept logins

RUN ssh-keygen -t dsa -f /etc/ssh/ssh_host_dsa_key

RUN ssh-keygen -t rsa -f /etc/ssh/ssh_host_rsa_key

RUN mkdir /var/run/sshd

# Expose port 22 for SSH

EXPOSE 22

# Add the JDK (ADD unpacks a local tar archive); adjust the file name to match your download

ADD jdk-15.0.1_linux-x64_bin.tar.gz /usr/local/

RUN mv /usr/local/jdk-15.0.1 /usr/local/jdk

ENV JAVA_HOME /usr/local/jdk

# Put the Java binaries on the PATH

ENV PATH $JAVA_HOME/bin:$PATH

# Add Hadoop

ADD hadoop-3.3.0.tar.gz /usr/local

RUN mv /usr/local/hadoop-3.3.0 /usr/local/hadoop

ENV HADOOP_HOME /usr/local/hadoop

# Put the Hadoop binaries on the PATH

ENV PATH $HADOOP_HOME/bin:$PATH

RUN yum install -y which sudo

CMD ["/usr/sbin/sshd", "-D"]

- Run the build command:

docker build -f dockerfile -t <image-name>:<tag> .

The trailing . at the end of the command is the build context and must not be omitted. Build log:

[root@VM-16-13-centos ~]# docker build -f dockerfile -t hadoop-test:latest .

Sending build context to Docker daemon 3.828 GB

Step 1/21 : FROM centos

---> 300e315adb2f

Step 2/21 : MAINTAINER lengdanran

---> Using cache

---> 7198b4e040b2

Step 3/21 : RUN yum install -y openssh-server sudo

---> Using cache

---> 1a3815a5dd0b

Step 4/21 : RUN sed -i 's/UsePAM yes/UsePAM no/g' /etc/ssh/sshd_config

---> Using cache

---> 9d8dc6f36c65

Step 5/21 : RUN yum install -y openssh-clients

---> Using cache

---> 803631d92101

Step 6/21 : RUN echo "root:root" | chpasswd

---> Using cache

---> 7fab3380d558

Step 7/21 : RUN echo "root ALL=(ALL) ALL" >> /etc/sudoers

---> Using cache

---> dabf65730596

Step 8/21 : RUN ssh-keygen -t dsa -f /etc/ssh/ssh_host_dsa_key

---> Using cache

---> 6851eaeedcb4

Step 9/21 : RUN ssh-keygen -t rsa -f /etc/ssh/ssh_host_rsa_key

---> Using cache

---> ff8db8a0f8d1

Step 10/21 : RUN mkdir /var/run/sshd

---> Using cache

---> 97d0860dc9e0

Step 11/21 : EXPOSE 22

---> Using cache

---> 1804eda26ce4

Step 12/21 : ADD jdk-15.0.1_linux-x64_bin.tar.gz /usr/local/

---> e517aae234dc

Removing intermediate container b22ad6966bb5

Step 13/21 : RUN mv /usr/local/jdk-15.0.1 /usr/local/jdk

---> Running in 279abadd65e7

---> 83f122a4e1b9

Removing intermediate container 279abadd65e7

Step 14/21 : ENV JAVA_HOME /usr/local/jdk

---> Running in 6e9cec742432

---> 3ba9e11ef03b

Removing intermediate container 6e9cec742432

Step 15/21 : ENV PATH $JAVA_HOME/bin:$PATH

---> Running in a820f79eab34

---> 173170e955c0

Removing intermediate container a820f79eab34

Step 16/21 : ADD hadoop-3.3.0.tar.gz /usr/local

---> 422eca6291b1

Removing intermediate container ec817f7145ea

Step 17/21 : RUN mv /usr/local/hadoop-3.3.0 /usr/local/hadoop

---> Running in 95aebc52deef

---> ea2ce61953f2

Removing intermediate container 95aebc52deef

Step 18/21 : ENV HADOOP_HOME /usr/local/hadoop

---> Running in 921481584b81

---> d3acd5a99a43

Removing intermediate container 921481584b81

Step 19/21 : ENV PATH $HADOOP_HOME/bin:$PATH

---> Running in e13e1cb5e9a0

---> 006151a93144

Removing intermediate container e13e1cb5e9a0

Step 20/21 : RUN yum install -y which sudo

---> Running in 76041da5815b

Last metadata expiration check: 1 day, 1:11:23 ago on Sun Jan 3 01:28:51 2021.

Package sudo-1.8.29-6.el8.x86_64 is already installed.

Dependencies resolved.

================================================================================

Package Architecture Version Repository Size

================================================================================

Installing:

which x86_64 2.21-12.el8 baseos 49 k

Transaction Summary

================================================================================

Install 1 Package

Total download size: 49 k

Installed size: 81 k

Downloading Packages:

which-2.21-12.el8.x86_64.rpm 121 kB/s | 49 kB 00:00

--------------------------------------------------------------------------------

Total 43 kB/s | 49 kB 00:01

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : which-2.21-12.el8.x86_64 1/1

Running scriptlet: which-2.21-12.el8.x86_64 1/1

Verifying : which-2.21-12.el8.x86_64 1/1

Installed:

which-2.21-12.el8.x86_64

Complete!

---> 9678194a27c5

Removing intermediate container 76041da5815b

Step 21/21 : CMD /usr/sbin/sshd -D

---> Running in 46d340f4fce8

---> 58aea5225dca

Removing intermediate container 46d340f4fce8

Successfully built 58aea5225dca

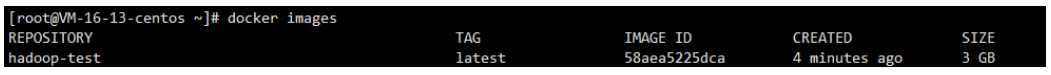

Once the build succeeds, the new image appears in the local image list.
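The build log above reports a build context of 3.828 GB, because docker build sends everything in the context directory to the daemon. One way to shrink it, sketched here on the assumption that the dockerfile and both archives sit in the same directory, is to stage only those three files in a dedicated directory first. The function name and the ~/hadoop-build directory used below are hypothetical.

```shell
# stage_build_context SRC DEST: copy only the files the dockerfile needs
# from SRC into DEST, so that DEST can serve as a small build context.
stage_build_context() {
    src=$1
    dest=$2
    mkdir -p "$dest" || return 1
    for f in dockerfile jdk-15.0.1_linux-x64_bin.tar.gz hadoop-3.3.0.tar.gz; do
        if [ ! -f "$src/$f" ]; then
            echo "missing $src/$f" >&2
            return 1
        fi
        cp "$src/$f" "$dest/" || return 1
    done
}
```

For example: `stage_build_context . ~/hadoop-build && docker build -f ~/hadoop-build/dockerfile -t hadoop-test:latest ~/hadoop-build`.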

启动构建好的镜像

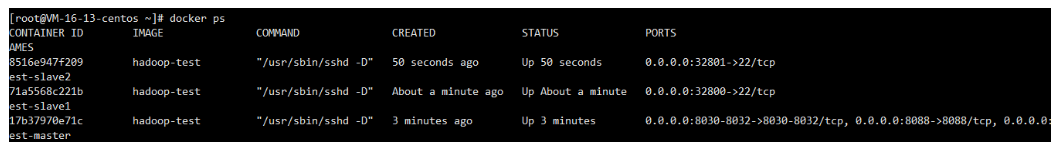

使用如下命令启动3个容器

$ docker run --name test-master --hostname test-master -d -P -p 50070:50070 -p 8088:8088 -p 9001:9001 -p 8030:8030 -p 8031:8031 -p 8032:8032 hadoop-test

$ docker run --name test-slave1 --hostname test-slave1 -d -P hadoop-test

$ docker run --name test-slave2 --hostname test-slave2 -d -P hadoop-test

Enter the containers and configure the Hadoop cluster

Open three terminals and enter the three containers with the following commands:

$ docker exec -it test-master bash

$ docker exec -it test-slave1 bash

$ docker exec -it test-slave2 bash

Because the image is built on the bare CentOS base image, common tools such as vim are not installed. Install what you need with the following commands:

$ yum install vim

$ yum install ncurses

In each container, run the commands shown below to check that the environment is set up correctly

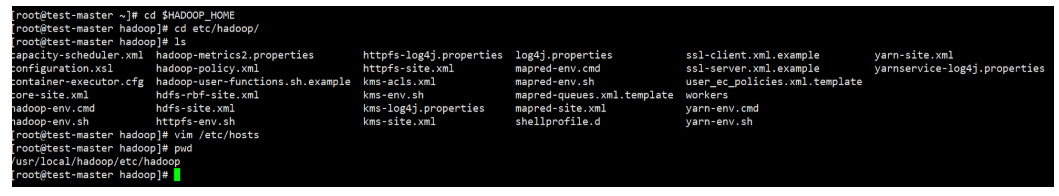

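Go to the Hadoop configuration directory

Use docker cp to copy this directory to the host

This only makes the configuration files easier to edit; you can also edit them with vim inside the container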

$ docker cp test-master:/usr/local/hadoop/etc/hadoop ~/

Download the directory with xftp and edit the configuration files in it

- Edit core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:50070</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/hadoop/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131702</value>

</property>

</configuration>

- Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

- Edit mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

- Edit yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>

- Edit slaves (the file is named workers in Hadoop 3.x)

slave1

slave2

- Edit hadoop-env.sh

Add the following:

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export JAVA_HOME=/usr/local/jdk

- Upload the edited files back to the host, then use docker cp to update them in the container

$ docker cp ~/hadoop test-master:/usr/local/hadoop/etc
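Hadoop generally ignores a property whose name it does not recognize, so a typo in one of the files above tends to show up only later as odd runtime behavior. It can be worth reading a few values back from the edited files. The helper below is a rough sketch that assumes the one-element-per-line layout used in this post; it is not a general XML parser, and the name xml_prop is hypothetical.

```shell
# xml_prop FILE NAME: print the <value> that follows <name>NAME</name>.
# Assumes each <name> and <value> element sits on its own line.
xml_prop() {
    awk -v want="$2" '
        /<name>/ {
            line = $0
            sub(/.*<name>/, "", line); sub(/<\/name>.*/, "", line)
            found = (line == want)
            next
        }
        found && /<value>/ {
            line = $0
            sub(/.*<value>/, "", line); sub(/<\/value>.*/, "", line)
            print line
            exit
        }
    ' "$1"
}
```

For example, `xml_prop ~/hadoop/core-site.xml fs.defaultFS` should print hdfs://master:50070. Inside a container, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.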

Build the image

Commit the running test-master container as an image

$ docker commit test-master centos-hadoop

$ docker stop test-master test-slave1 test-slave2 # stop the running containers

Configure and start the Hadoop cluster

$ docker run --name hadoop-master --hostname hadoop-master -d -P -p 50070:50070 -p 8088:8088 -p 9001:9001 -p 8030:8030 -p 8031:8031 -p 8032:8032 centos-hadoop

$ docker run --name hadoop-slave1 --hostname hadoop-slave1 -d -P centos-hadoop

$ docker run --name hadoop-slave2 --hostname hadoop-slave2 -d -P centos-hadoop

Again, open three terminals and enter the three running containers

$ docker exec -it hadoop-master bash

$ docker exec -it hadoop-slave1 bash

$ docker exec -it hadoop-slave2 bash

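In each container, run vim /etc/hosts to find that container's IP address

Then add the following entries to /etc/hosts in every container (use the addresses your own containers report)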

172.18.0.2 master

172.18.0.3 slave1

172.18.0.4 slave2
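The addresses above are whatever Docker happened to assign on this host, so yours may differ. As a sketch, the entries can be generated on the host from docker inspect rather than read off by hand. The function names are hypothetical, and the mapping from container name to alias follows the names used in this post.

```shell
# container_ip NAME: print the container's IP address on its Docker network(s).
container_ip() {
    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# hosts_entry IP ALIAS: format one /etc/hosts line.
hosts_entry() {
    printf '%s %s\n' "$1" "$2"
}

# cluster_hosts: print one entry per container started above.
cluster_hosts() {
    for pair in hadoop-master:master hadoop-slave1:slave1 hadoop-slave2:slave2; do
        name=${pair%%:*}
        alias_name=${pair##*:}
        hosts_entry "$(container_ip "$name")" "$alias_name"
    done
}
```

Running `cluster_hosts` on the host prints the three lines; something like `cluster_hosts | docker exec -i hadoop-master sh -c 'cat >> /etc/hosts'`, repeated for each container, would append them.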

Configure passwordless SSH login

Run the following commands in every container

$ ssh-keygen

(You will be prompted several times; press Enter each time without typing anything.)

$ ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@master

$ ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@slave1

$ ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@slave2
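Since the same four commands run in all three containers, they can be wrapped in a small function. This is only a sketch: the function names and the DRY_RUN switch are invented for illustration, and ssh-copy-id will still ask for the root password of each target once.

```shell
# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# setup_ssh HOST...: create an RSA key pair without a passphrase if none
# exists yet, then install the public key on each HOST for root.
setup_ssh() {
    key=/root/.ssh/id_rsa
    if [ ! -f "$key" ]; then
        run ssh-keygen -t rsa -N "" -f "$key"
    fi
    for host in "$@"; do
        run ssh-copy-id -i "$key" -p 22 "root@$host"
    done
}
```

Calling `setup_ssh master slave1 slave2` in each container replaces the interactive ssh-keygen prompts; setting DRY_RUN=1 first shows what would be run.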

Output of ssh-keygen, run in each container:

[root@hadoop-master /]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:0gySLsZy/97FMoRM1DBZLwdRC+14P1NKouLklyICsag root@hadoop-master

The key's randomart image is:

+---[RSA 3072]----+

| +==+. |

| o...+.. |

| o o .o+ |

|.. . + =.o+ . . |

|oo= . + So + o |

|++ o oo.. = |

|.. .+ .o.o o |

|E . ..+.o+ |

| . ooo. |

+----[SHA256]-----+

[root@hadoop-master /]#

Set up passwordless login in each container; you will be asked for the root user's password along the way

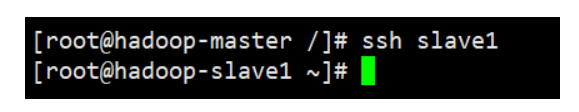

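Once this succeeds, you should be able to log in to the other nodes without a password; for example, ssh slave1 on the master node should open a shell on slave1 without a password prompt

On the master node, go to the HADOOP_HOME directory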

$ cd $HADOOP_HOME

Go to its sbin directory (cd sbin)

Run the following commands:

$ hdfs namenode -format


$ hdfs --daemon start namenode

$ hdfs --daemon start datanode
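The format step above is meant to run once. Re-formatting a NameNode that already holds metadata assigns a new cluster ID, after which DataNodes that registered earlier typically refuse to join. A small guard, sketched here on the assumption that a formatted name directory contains current/VERSION (function names are hypothetical), avoids doing that by accident:

```shell
# namenode_formatted DIR: succeed if DIR already holds NameNode metadata,
# i.e. a current/VERSION file written by an earlier format.
namenode_formatted() {
    [ -f "$1/current/VERSION" ]
}

# format_once DIR: run the format only when DIR holds no metadata yet.
format_once() {
    if namenode_formatted "$1"; then
        echo "NameNode directory $1 is already formatted; skipping"
    else
        hdfs namenode -format
    fi
}
```

With the hdfs-site.xml from this post, the call would be `format_once /home/hadoop/hdfs/name`.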

Finally, start the cluster

$ ./start-all.sh # start the cluster

$ ./stop-all.sh # stop the cluster when you are done

Output of a successful start:

[root@hadoop-master sbin]# ./start-all.sh

Starting namenodes on [master]

master: namenode is running as process 343. Stop it first.

Starting datanodes

Last login: Mon Jan 4 04:31:52 UTC 2021

slave2: WARNING: /usr/local/hadoop/logs does not exist. Creating.

slave1: WARNING: /usr/local/hadoop/logs does not exist. Creating.

Starting secondary namenodes [master]

Last login: Mon Jan 4 04:31:52 UTC 2021

Starting resourcemanager

Last login: Mon Jan 4 04:32:00 UTC 2021

Starting nodemanagers

Last login: Mon Jan 4 04:32:11 UTC 2021

[root@hadoop-master sbin]# jps

1344 Jps

448 DataNode

343 NameNode

925 SecondaryNameNode

1183 ResourceManager

[root@hadoop-master sbin]#

#############################

[root@hadoop-slave2 /]# jps

296 DataNode

506 Jps

412 NodeManager

[root@hadoop-slave2 /]#

##########################

[root@hadoop-slave1 /]# jps

448 NodeManager

332 DataNode

541 Jps

[root@hadoop-slave1 /]#
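Reading three jps listings by eye gets tedious. As a sketch, the check can be scripted by comparing the jps output against the daemons expected on each node. The function name missing_daemons is hypothetical; the expected daemon names are the ones shown in the listings above.

```shell
# missing_daemons NAME...: read `jps` output on stdin and print every NAME
# that is not listed as a running Java process; fail if any is missing.
missing_daemons() {
    listing=$(cat)
    status=0
    for name in "$@"; do
        # Compare whole process names so that NameNode does not match
        # SecondaryNameNode.
        if ! printf '%s\n' "$listing" | awk '{ print $2 }' | grep -q -x -- "$name"; then
            echo "$name"
            status=1
        fi
    done
    return $status
}
```

On the master, `jps | missing_daemons NameNode SecondaryNameNode ResourceManager` should print nothing; on each worker the equivalent call is `jps | missing_daemons DataNode NodeManager`.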
