from datasets import load_dataset, DatasetDict

from transformers import AutoTokenizer, AutoModelForQuestionAnswering, TrainingArguments, Trainer, DefaultDataCollator

import nltk

nltk.download('punkt')

datasets = DatasetDict.load_from_disk("/remote-home/cs_tcci_huangyuqian/code/transformer-code-master/02-NLP Tasks/10-question_answering/mrc_data")

tokenizer = AutoTokenizer.from_pretrained("/remote-home/cs_tcci_huangyuqian/code/bert/chinese-macbert-base")

model = AutoModelForQuestionAnswering.from_pretrained("/remote-home/cs_tcci_huangyuqian/code/bert/chinese-macbert-base")

def process_func(examples):
    # Tokenize question/context pairs; long contexts are split into overlapping windows.
    tokenized_examples = tokenizer(text=examples["question"],
                                   text_pair=examples["context"],
                                   return_offsets_mapping=True,
                                   return_overflowing_tokens=True,
                                   stride=128,
                                   max_length=384, truncation="only_second", padding="max_length")
    # Maps each window (feature) back to the index of the example it came from.
    sample_mapping = tokenized_examples.pop("overflow_to_sample_mapping")
    start_positions = []
    end_positions = []
    example_ids = []
    for idx, _ in enumerate(sample_mapping):
        answer = examples["answers"][sample_mapping[idx]]
        start_char = answer["answer_start"][0]
        end_char = start_char + len(answer["text"][0])
        # Locate the answer's start and end positions in token space.
        # Strategy: find the first and last context tokens, then close in on the answer from both sides.
        context_start = tokenized_examples.sequence_ids(idx).index(1)
        context_end = tokenized_examples.sequence_ids(idx).index(None, context_start) - 1
        offset = tokenized_examples.get("offset_mapping")[idx]
        # Check whether the answer lies inside this window's context.
        if offset[context_end][1] < start_char or offset[context_start][0] > end_char:
            start_token_pos = 0
            end_token_pos = 0
        else:
            token_id = context_start
            while token_id <= context_end and offset[token_id][0] < start_char:
                token_id += 1
            start_token_pos = token_id
            token_id = context_end
            while token_id >= context_start and offset[token_id][1] > end_char:
                token_id -= 1
            end_token_pos = token_id
        start_positions.append(start_token_pos)
        end_positions.append(end_token_pos)
        example_ids.append(examples["id"][sample_mapping[idx]])
        # Keep offsets only for context tokens; question and special tokens become None.
        tokenized_examples["offset_mapping"][idx] = [
            (o if tokenized_examples.sequence_ids(idx)[k] == 1 else None)
            for k, o in enumerate(tokenized_examples["offset_mapping"][idx])
        ]
    tokenized_examples["example_ids"] = example_ids
    tokenized_examples["start_positions"] = start_positions
    tokenized_examples["end_positions"] = end_positions
    return tokenized_examples
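
The two-sided search inside `process_func` can be tried without loading a tokenizer. The sketch below is only an illustration: the helper name `locate_answer_tokens` and the hand-written offsets are assumptions, not part of the original script. It mimics one window with one character per context token.

```python
def locate_answer_tokens(offsets, context_start, context_end, start_char, end_char):
    """Map the character span [start_char, end_char) to inclusive token indices.

    Returns (0, 0) when the answer lies entirely outside this window.
    """
    if offsets[context_end][1] < start_char or offsets[context_start][0] > end_char:
        return 0, 0
    # Move right until a token starts at or after the answer start.
    token_id = context_start
    while token_id <= context_end and offsets[token_id][0] < start_char:
        token_id += 1
    start_token = token_id
    # Move left until a token ends at or before the answer end.
    token_id = context_end
    while token_id >= context_start and offsets[token_id][1] > end_char:
        token_id -= 1
    return start_token, token_id


# Hypothetical window: [CLS] q q [SEP] + 7 context characters + [SEP]
offsets = [(0, 0), (0, 1), (1, 2), (0, 0)] + [(i, i + 1) for i in range(7)] + [(0, 0)]
context_start, context_end = 4, 10

# Answer "北京" occupies characters 3..5 of "小明在北京上班".
inside = locate_answer_tokens(offsets, context_start, context_end, 3, 5)
# A window that only covers characters 0..3 does not contain an answer starting at 4.
outside = locate_answer_tokens(offsets[:7], 4, 6, 4, 6)
print(inside, outside)
```

Labelling out-of-window answers as `(0, 0)` points both positions at the `[CLS]` token, which matches what the original function does for windows that miss the answer.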

tokenied_datasets = datasets.map(process_func, batched=True, remove_columns=datasets["train"].column_names)

import collections

# Mapping from each example id to the indices of its features (windows)

example_to_feature = collections.defaultdict(list)

for idx, example_id in enumerate(tokenied_datasets["train"]["example_ids"][:10]):
    example_to_feature[example_id].append(idx)

import numpy as np

def get_result(start_logits, end_logits, examples, features):
    predictions = {}
    references = {}
    # Mapping from each example id to the indices of its features (windows)
    example_to_feature = collections.defaultdict(list)
    for idx, example_id in enumerate(features["example_ids"]):
        example_to_feature[example_id].append(idx)
    # Number of top start/end candidates to consider
    n_best = 20
    # Maximum answer length, in tokens
    max_answer_length = 30
    for example in examples:
        example_id = example["id"]
        context = example["context"]
        answers = []
        for feature_idx in example_to_feature[example_id]:
            start_logit = start_logits[feature_idx]
            end_logit = end_logits[feature_idx]
            offset = features[feature_idx]["offset_mapping"]
            start_indexes = np.argsort(start_logit)[::-1][:n_best].tolist()
            end_indexes = np.argsort(end_logit)[::-1][:n_best].tolist()
            for start_index in start_indexes:
                for end_index in end_indexes:
                    # Skip positions outside the context (offsets were set to None).
                    if offset[start_index] is None or offset[end_index] is None:
                        continue
                    # Skip reversed spans and spans longer than the limit.
                    if end_index < start_index or end_index - start_index + 1 > max_answer_length:
                        continue
                    answers.append({
                        "text": context[offset[start_index][0]: offset[end_index][1]],
                        "score": start_logit[start_index] + end_logit[end_index]
                    })
        if len(answers) > 0:
            best_answer = max(answers, key=lambda x: x["score"])
            predictions[example_id] = best_answer["text"]
        else:
            predictions[example_id] = ""
        references[example_id] = example["answers"]["text"]
    return predictions, references
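
To see how the candidate filtering in `get_result` behaves, here is a minimal sketch on made-up logits for a single feature. The function name `best_span`, the logits, and the offsets are all assumptions for illustration, and `sorted` stands in for `np.argsort` so the snippet needs no dependencies.

```python
def best_span(start_logit, end_logit, offsets, context, n_best=20, max_answer_length=30):
    """Return the highest-scoring valid span for one feature, or None."""
    order = lambda logits: sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:n_best]
    best = None
    for s in order(start_logit):
        for e in order(end_logit):
            # Positions outside the context carry a None offset.
            if offsets[s] is None or offsets[e] is None:
                continue
            # Reject reversed spans and spans longer than the limit.
            if e < s or e - s + 1 > max_answer_length:
                continue
            score = start_logit[s] + end_logit[e]
            if best is None or score > best["score"]:
                best = {"text": context[offsets[s][0]: offsets[e][1]], "score": score}
    return best


context = "小明在北京上班"
# 4 non-context positions, 7 one-character context tokens, 1 trailing special token
offsets = [None] * 4 + [(i, i + 1) for i in range(7)] + [None]
# The largest start logit sits on a non-context token and must be ignored.
start_logit = [5.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 4.0, 0.0, 0.0, 0.0, 0.0]
# The largest end logit (index 5) precedes the best start (index 7).
end_logit = [0.0, 0.0, 0.0, 0.0, 0.0, 3.5, 0.0, 0.0, 3.0, 0.0, 0.0, 0.0]

result = best_span(start_logit, end_logit, offsets, context)
print(result)
```

The example is built so that neither the top start logit nor the top end logit ends up in the answer, which is why both filters are needed before scores are compared.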

from cmrc_eval import evaluate_cmrc

def metirc(pred):
    start_logits, end_logits = pred[0]
    # Pick the split whose feature count matches the number of logit rows.
    if start_logits.shape[0] == len(tokenied_datasets["validation"]):
        p, r = get_result(start_logits, end_logits, datasets["validation"], tokenied_datasets["validation"])
    else:
        p, r = get_result(start_logits, end_logits, datasets["test"], tokenied_datasets["test"])
    return evaluate_cmrc(p, r)

args = TrainingArguments(
    output_dir="models_for_qa",
    per_device_train_batch_size=32,
    per_device_eval_batch_size=32,
    evaluation_strategy="steps",  # newer transformers releases name this argument eval_strategy
    eval_steps=200,
    save_strategy="epoch",
    logging_steps=50,
    num_train_epochs=3
)

trainer = Trainer(
    model=model,
    args=args,
    train_dataset=tokenied_datasets["train"],
    eval_dataset=tokenied_datasets["validation"],
    data_collator=DefaultDataCollator(),
    compute_metrics=metirc
)

trainer.train()

from transformers import pipeline

pipe = pipeline("question-answering", model=model, tokenizer=tokenizer, device=0)

pipe(question="小明在哪里上班?", context="小明在北京上班")

https://www.bilibili.com/video/BV1rs4y1k7FX/?spm_id_from=333.788&vd_source=c88ccb26cdf0efeef470b1ac71b5deff

Download the punkt package: (link), extraction code: lgs1

After the download finishes, unzip it into the tokenizers directory under any of the nltk_data paths.
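
One way to confirm the archive landed in the right place is to look for a `tokenizers/punkt` folder under each candidate directory. The helper below is a sketch with a hypothetical name, `find_punkt`, and it assumes the archive unpacks to a folder called `punkt`. In a real session the list to pass would be `nltk.data.path`, which holds the directories NLTK searches.

```python
import os


def find_punkt(search_dirs):
    """Return the first directory that contains tokenizers/punkt, or None."""
    for base in search_dirs:
        if os.path.isdir(os.path.join(base, "tokenizers", "punkt")):
            return base
    return None


# Typical use (requires nltk to be installed):
#   import nltk
#   print(find_punkt(nltk.data.path))
```

If the function returns `None`, the archive was most likely unpacked one level too high or too low.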

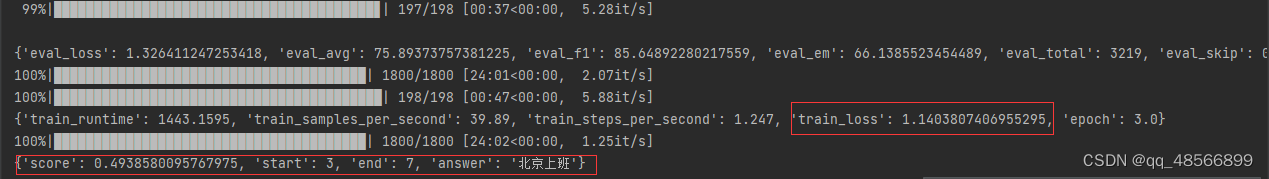

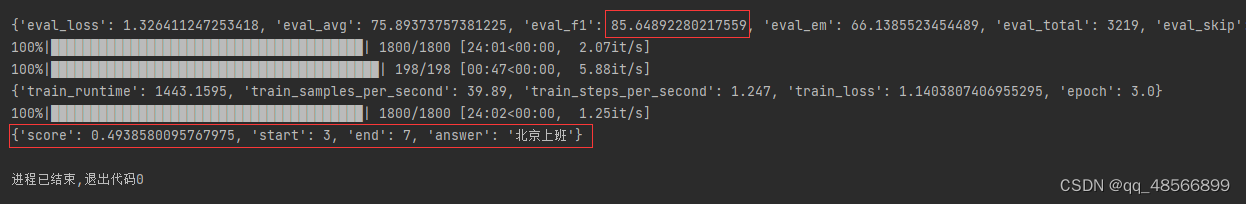

{'eval_loss': 1.326411247253418, 'eval_avg': 75.89373757381225, 'eval_f1': 85.64892280217559, 'eval_em': 66.1385523454489, 'eval_total': 3219, 'eval_skip': 0, 'eval_runtime': 47.4837, 'eval_samples_per_second': 133.246, 'eval_steps_per_second': 4.17, 'epoch': 3.0}

100%|███████████████████████████████████████| 1800/1800 [24:01<00:00, 2.07it/s]

100%|█████████████████████████████████████████| 198/198 [00:47<00:00, 5.88it/s]

{'train_runtime': 1443.1595, 'train_samples_per_second': 39.89, 'train_steps_per_second': 1.247, 'train_loss': 1.1403807406955295, 'epoch': 3.0}

100%|███████████████████████████████████████| 1800/1800 [24:02<00:00, 1.25it/s]

{'score': 0.4938580095767975, 'start': 3, 'end': 7, 'answer': '北京上班'}

Process finished with exit code 0
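
The `start` and `end` values in the pipeline output are character offsets into the context, so the answer can be recovered by slicing. A quick sanity check, using the prediction printed in the log above:

```python
context = "小明在北京上班"
prediction = {"score": 0.4938580095767975, "start": 3, "end": 7, "answer": "北京上班"}

# Slice the context with the reported character offsets.
span = context[prediction["start"]: prediction["end"]]
print(span == prediction["answer"])
```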
