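Learning goal:

Learn machine learning through a hands-on project (Part 1)

Steps:

1. Scrape job data from 51job (前程无忧)

2. Clean the scraped data

3. Visualize the data

4. Do feature engineering

5. Build a model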

–

What I did:

I. Scraping 51job with selenium + Python

1. Scraping data analyst job postings

1) Scrape the job title, location, and other fields from the first results page
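The search URL used in this section carries the keyword 数据分析师 (data analyst) in percent-encoded UTF-8 form. As a minimal sketch, the standard library's `urllib.parse.quote` can build that URL for any keyword. The helper name `build_search_url` is hypothetical and not part of the original script.

```python
from urllib.parse import quote

# Search endpoint as it appears in the script; only the keyword changes.
SEARCH_URL = "https://we.51job.com/pc/search?keyword={}"


def build_search_url(keyword: str) -> str:
    """Percent-encode the keyword as UTF-8 and insert it into the search URL."""
    return SEARCH_URL.format(quote(keyword))


print(build_search_url("数据分析师"))
# https://we.51job.com/pc/search?keyword=%E6%95%B0%E6%8D%AE%E5%88%86%E6%9E%90%E5%B8%88
```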

    from time import sleep

    from lxml import etree
    from selenium import webdriver


    # Reduce the chance that the site detects Selenium
    def get_browser():
        options = webdriver.ChromeOptions()
        options.add_experimental_option('excludeSwitches', ['enable-automation'])
        options.add_argument("--disable-blink-features=AutomationControlled")
        driver = webdriver.Chrome(options=options)
        # Hide navigator.webdriver before any page script runs
        driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
            "source": """
                Object.defineProperty(navigator, 'webdriver', {
                    get: () => undefined
                })
            """
        })
        return driver


    # Open the URL in a browser and return the rendered HTML
    def request_url(url):
        driver = get_browser()
        driver.get(url)  # send the request
        sleep(10)  # give the JavaScript-rendered job list time to load
        page_text = driver.page_source  # rendered HTML source
        driver.quit()  # close the browser once the HTML has been captured
        return page_text


    # Parse the results page and print the fields of each job card
    def any_firspage(page):
        tree = etree.HTML(page)  # parse the HTML source
        div_list = tree.xpath('//div[@class="j_joblist"]/div')  # one div per job card
        for div in div_list:
            job_name = div.xpath('./a/div[1]/span/text()')[0]
            job_money = div.xpath('./a/p/span/text()')
            job_location = div.xpath('./a/p[1]/span[2]/span[1]/text()')
            job_education = div.xpath('./a/p[1]/span[2]/span[5]/text()')
            detail_url = div.xpath('./a/@href')
            print(job_name, detail_url, job_money, job_location, job_education)


    if __name__ == "__main__":
        url = 'https://we.51job.com/pc/search?keyword=%E6%95%B0%E6%8D%AE%E5%88%86%E6%9E%90%E5%B8%88'
        # request_url opens its own browser, so no second driver is created here
        page_text = request_url(url)
        any_firspage(page_text)

The output looks like this:

行业数据分析师 ['https://jobs.51job.com/shanghai/107108862.html?s=sou_sou_soulb&t=0_0'] ['8千-1.2万'] ['上海·杨浦区'] ['本科']

数据分析师 ['https://jobs.51job.com/huizhou/142530342.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['惠州'] ['本科']

数据分析师 ['https://jobs.51job.com/suzhou/143615794.html?s=sou_sou_soulb&t=0_0'] ['8千-1.2万'] ['苏州·工业园区'] ['大专']

数据分析师(医疗健康投融资方向) ['https://jobs.51job.com/beijing/145281378.html?s=sou_sou_soulb&t=0_0'] ['8千-1.6万·14薪'] ['上海·浦东新区'] ['硕士']

运维数据分析师(环保行业) ['https://jobs.51job.com/jian/145004115.html?s=sou_sou_soulb&t=0_0'] ['5-7千·13薪'] ['吉安'] ['大专']

商城数据分析师 ['https://jobs.51job.com/shanghai/144105359.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['上海·徐汇区'] ['本科']

数据分析师 ['https://jobs.51job.com/shanghai/139598001.html?s=sou_sou_soulb&t=0_0'] ['1-2万'] ['上海·杨浦区'] ['本科']

数据分析师 ['https://jobs.51job.com/yingtan/145270409.html?s=sou_sou_soulb&t=0_0'] ['7-8千·13薪'] ['鹰潭'] ['本科']

水质数据分析师 ['https://jobs.51job.com/yichune/143741308.html?s=sou_sou_soulb&t=0_0'] ['1-1.1万·13薪'] ['宜春'] ['硕士']

商城数据分析师 ['https://jobs.51job.com/qingdao/144105360.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['青岛·市北区'] ['本科']

数据分析师(BI) ['https://jobs.51job.com/guangzhou/145226682.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['广州·海珠区'] ['大专']

数据分析师 ['https://jobs.51job.com/shanghai/132585002.html?s=sou_sou_soulb&t=0_0'] ['1-1.7万'] ['上海·长宁区'] ['本科']

闪购-高级数据分析师 ['https://jobs.51job.com/beijing/145247527.html?s=sou_sou_soulb&t=0_0'] [] ['北京·朝阳区'] []

数据分析师 Data Insight Analyst ['https://jobs.51job.com/shanghai/128266074.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['上海·徐汇区'] ['本科']

数据分析师 ['https://jobs.51job.com/guangzhou/142617621.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['广州·天河区'] ['本科']

数据分析师(月薪15K起) ['https://jobs.51job.com/guangzhou/145122652.html?s=sou_sou_soulb&t=0_0'] ['9千-1.8万·14薪'] ['广州·越秀区'] ['本科']

电商数据分析师(长沙) ['https://jobs.51job.com/shenzhen/128815506.html?s=sou_sou_soulb&t=0_0'] ['1.3-2万'] ['长沙·岳麓区'] ['本科']

数据分析师 ['https://jobs.51job.com/huizhou/142861453.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['惠州'] ['本科']

报价数据分析师 ['https://jobs.51job.com/suzhou/139535613.html?s=sou_sou_soulb&t=0_0'] ['5千-1万'] ['苏州·工业园区'] ['本科']

量化数据分析师 ['https://jobs.51job.com/xian/141765650.html?s=sou_sou_soulb&t=0_0'] ['8千-1.6万'] ['西安·高新技术产业开发区'] ['硕士']

临床数据分析师 ['https://jobs.51job.com/nanjing/133443581.html?s=sou_sou_soulb&t=0_0'] ['8千-1.3万'] ['南京·建邺区'] ['本科']

数据分析师助理 ['https://jobs.51job.com/chengdu/143276399.html?s=sou_sou_soulb&t=0_0'] ['5-8千'] ['成都·武侯区'] ['大专']

商业数据分析师 ['https://jobs.51job.com/shanghai/141293359.html?s=sou_sou_soulb&t=0_0'] ['2.5-4万·13薪'] ['上海·静安区'] ['硕士']

模型数据分析师 ['https://jobs.51job.com/shanghai/128862781.html?s=sou_sou_soulb&t=0_0'] ['1.5-2万'] ['上海·徐汇区'] ['本科']

数据分析师(管培生) ['https://jobs.51job.com/wuhan/131546222.html?s=sou_sou_soulb&t=0_0'] ['6.5-8千'] ['武汉·洪山区'] ['本科']

初级数据分析师 ['https://jobs.51job.com/chengdu/145235090.html?s=sou_sou_soulb&t=0_0'] ['7-9千'] ['成都·武侯区'] ['大专']

风险数据分析师 ['https://jobs.51job.com/chengdu/145225726.html?s=sou_sou_soulb&t=0_0'] ['6-8千'] ['成都·双流区'] ['大专']

高级数据分析师Sr. Data Analyst ['https://jobs.51job.com/shanghai/109058606.html?s=sou_sou_soulb&t=0_0'] ['1.5-2万'] ['上海·徐汇区'] ['本科']

数据分析师 (MJ001697) ['https://jobs.51job.com/guangzhou/145230930.html?s=sou_sou_soulb&t=0_0'] ['1-1.7万·15薪'] ['广州'] ['本科']

数据分析师 ['https://jobs.51job.com/xian/131096252.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['西安·未央区'] ['本科']

数据分析师 ['https://jobs.51job.com/xining/100858222.html?s=sou_sou_soulb&t=0_0'] ['6千-1万'] ['西宁'] ['本科']

大数据分析师 ['https://jobs.51job.com/chengdu/145226007.html?s=sou_sou_soulb&t=0_0'] ['7千-1万'] ['成都·双流区'] ['本科']

数据分析师 ['https://jobs.51job.com/guangzhou/145173164.html?s=sou_sou_soulb&t=0_0'] ['10-18万/年'] ['广州·天河区'] ['本科']
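Two things stand out in this output. Every field except the job title is printed as a list, because lxml's `xpath()` returns a list of matches, and a posting that shows no salary or education yields an empty list (see the 闪购-高级数据分析师 row). A small helper can collapse each result to a single string or `None`. This is only a sketch: `first_or_none` is a hypothetical name and not part of the original script.

```python
from typing import Optional, Sequence


def first_or_none(values: Sequence[str]) -> Optional[str]:
    """Return the first match with surrounding whitespace removed, or None if there is no match."""
    return values[0].strip() if values else None


print(first_or_none(['8千-1.2万']))  # 8千-1.2万
print(first_or_none([]))            # None
```

Applying it to each `div.xpath(...)` result would also guard the job title lookup, which currently uses `[0]` and would raise `IndexError` on a card without a title.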

2) Scrape multiple pages by clicking through the results

The modified code is as follows:

    from time import sleep

    from lxml import etree
    from selenium import webdriver
    from selenium.webdriver.common.by import By


    # Reduce the chance that the site detects Selenium
    def get_browser():
        options = webdriver.ChromeOptions()
        options.add_experimental_option('excludeSwitches', ['enable-automation'])
        options.add_argument("--disable-blink-features=AutomationControlled")
        driver = webdriver.Chrome(options=options)
        # Hide navigator.webdriver before any page script runs
        driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
            "source": """
                Object.defineProperty(navigator, 'webdriver', {
                    get: () => undefined
                })
            """
        })
        return driver


    # Parse one results page and print the fields of each job card
    def any_firspage(page):
        tree = etree.HTML(page)  # parse the HTML source
        div_list = tree.xpath('//div[@class="j_joblist"]/div')  # one div per job card
        for div in div_list:
            job_name = div.xpath('./a/div[1]/span/text()')[0]
            job_money = div.xpath('./a/p/span/text()')
            job_location = div.xpath('./a/p[1]/span[2]/span[1]/text()')
            job_education = div.xpath('./a/p[1]/span[2]/span[5]/text()')
            detail_url = div.xpath('./a/@href')
            print(job_name, detail_url, job_money, job_location, job_education)


    if __name__ == "__main__":
        url = 'https://we.51job.com/pc/search?keyword=%E6%95%B0%E6%8D%AE%E5%88%86%E6%9E%90%E5%B8%88'
        driver = get_browser()
        driver.get(url)  # send the request
        sleep(10)  # wait for the first page to render
        driver.implicitly_wait(20)  # find_element retries for up to 20 seconds
        for page in range(1, 10):
            page_text = driver.page_source  # HTML of the current page
            any_firspage(page_text)
            driver.find_element(By.CLASS_NAME, 'btn-next').click()  # go to the next page
            sleep(5)
            print("正在爬取第{}页".format(page))  # progress message: "scraping page N"

The output looks like this:

正在爬取第8页

游戏数据分析师 ['https://jobs.51job.com/guangzhou/98084548.html?s=sou_sou_soulb&t=0_0'] ['8千-1.5万'] ['广州·黄埔区'] ['本科']

业务数据分析师-黄岛 ['https://jobs.51job.com/qingdao/143546870.html?s=sou_sou_soulb&t=0_0'] ['6-8千'] ['青岛·黄岛区'] ['本科']

数据分析师 ['https://jobs.51job.com/chongqing/137747326.html?s=sou_sou_soulb&t=0_0'] ['5-9千'] ['重庆·江北区'] ['大专']

数据分析工程师 ['https://jobs.51job.com/shanghai/143685043.html?s=sou_sou_soulb&t=0_0'] ['1-1.6万'] ['上海·浦东新区'] ['大专']

数据分析师/数据统计专员(六险一金+双休) ['https://jobs.51job.com/wuhan/136653949.html?s=sou_sou_soulb&t=0_0'] ['8千-1.2万'] ['武汉·江汉区'] ['硕士']

数据分析师(BI方向) ['https://jobs.51job.com/wuxi/144214723.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['无锡'] ['本科']

数据分析师 ['https://jobs.51job.com/nanning/141561196.html?s=sou_sou_soulb&t=0_0'] ['5-7千'] ['南宁'] ['大专']

数据分析师 ['https://jobs.51job.com/shenzhen/126301768.html?s=sou_sou_soulb&t=0_0'] ['8-9千'] ['深圳·福田区'] ['本科']

数据分析师 ['https://jobs.51job.com/guangzhou/134894646.html?s=sou_sou_soulb&t=0_0'] ['5-8千'] ['广州·越秀区'] ['大专']

数据分析师 ['https://jobs.51job.com/shanghai/137306785.html?s=sou_sou_soulb&t=0_0'] ['2-2.5万'] ['上海·浦东新区'] ['本科']

大数据分析工程师 ['https://jobs.51job.com/wuhan/143863709.html?s=sou_sou_soulb&t=0_0'] ['1.2-2.2万'] ['武汉'] ['本科']

数据分析师 ['https://jobs.51job.com/shanghai/137276906.html?s=sou_sou_soulb&t=0_0'] ['1-2万'] ['上海·黄浦区'] ['本科']

呼叫中心数据分析师//排班专员 ['https://jobs.51job.com/chongqing/144892689.html?s=sou_sou_soulb&t=0_0'] ['5-6千·13薪'] ['重庆'] ['大专']

数据分析师 ['https://jobs.51job.com/wuxi/132769835.html?s=sou_sou_soulb&t=0_0'] ['4.5-6千'] ['无锡'] ['大专']

数据分析工程师 ['https://jobs.51job.com/shanghai/144229303.html?s=sou_sou_soulb&t=0_0'] ['1-2万'] ['上海·奉贤区'] ['本科']

数据分析师(经营分析) ['https://jobs.51job.com/wuhan/144006368.html?s=sou_sou_soulb&t=0_0'] ['1-2万'] ['湖北省'] ['大专']

数据分析师 ['https://jobs.51job.com/wuxi/143800368.html?s=sou_sou_soulb&t=0_0'] ['8千-1万'] ['无锡'] ['本科']

售后工程师(数据分析能力强) ['https://jobs.51job.com/dongguan/144376937.html?s=sou_sou_soulb&t=0_0'] ['8千-1万'] ['东莞·南城区'] ['本科']

游戏数据分析师 ['https://jobs.51job.com/shenzhen/143560035.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['深圳·龙岗区'] ['本科']

高级产品分析师(产品数据对接方向) ['https://jobs.51job.com/guangzhou/142833234.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['广州·增城区'] ['大专']

数据分析工程师 ['https://jobs.51job.com/shanghai/130191817.html?s=sou_sou_soulb&t=0_0'] ['5千-1万'] ['上海'] ['本科']

数据分析工程师(湖南-长沙-走航)(J10577) ['https://jobs.51job.com/changsha/143811185.html?s=sou_sou_soulb&t=0_0'] ['8千-1万'] ['长沙'] ['硕士']

数据分析师 ['https://jobs.51job.com/hefei/140460279.html?s=sou_sou_soulb&t=0_0'] ['8千-1.5万·14薪'] ['合肥·新站区'] ['本科']

数据分析师(周末双休) ['https://jobs.51job.com/guangzhou/144671068.html?s=sou_sou_soulb&t=0_0'] ['8千-1万'] ['广州·越秀区'] ['大专']

数据分析师 ['https://jobs.51job.com/shanghai/140404847.html?s=sou_sou_soulb&t=0_0'] ['1.4-1.5万·13薪'] ['上海·浦东新区'] ['本科']

数据分析助理工程师 ['https://jobs.51job.com/kunshan/143217033.html?s=sou_sou_soulb&t=0_0'] ['6-8千'] ['昆山'] ['本科']

中级产品分析师 (产品数据对接方向) ['https://jobs.51job.com/guangzhou/142835207.html?s=sou_sou_soulb&t=0_0'] ['7千-1万'] ['广州·增城区'] ['大专']

日语数据分析工程师(141672) ['https://jobs.51job.com/shenzhen/139532257.html?s=sou_sou_soulb&t=0_0'] ['2.5-5万'] ['深圳'] ['本科']

数据分析师 ['https://jobs.51job.com/guangzhou/141730972.html?s=sou_sou_soulb&t=0_0'] ['4-8千'] ['广州·白云区'] ['本科']

临床数据分析师 ['https://jobs.51job.com/beijing/145086288.html?s=sou_sou_soulb&t=0_0'] ['1.5-2.5万·14薪'] ['北京·昌平区'] ['硕士']

数据分析工程师(运维-数据)(J10579) ['https://jobs.51job.com/guangzhou/143811920.html?s=sou_sou_soulb&t=0_0'] ['6-9千'] ['长沙'] ['本科']

数据分析师(142465)餐补交通费 ['https://jobs.51job.com/shanghai/140194726.html?s=sou_sou_soulb&t=0_0'] ['1.2-1.5万'] ['上海'] ['大专']

数据运营分析师 ['https://jobs.51job.com/hangzhou/144233186.html?s=sou_sou_soulb&t=0_0'] ['7千-1万'] ['杭州·拱墅区'] ['大专']

数据分析师 ['https://jobs.51job.com/huangshi/143476198.html?s=sou_sou_soulb&t=0_0'] ['1-1.5万'] ['黄石'] ['本科']

数据分析师 ['https://jobs.51job.com/guangzhou/137623445.html?s=sou_sou_soulb&t=0_0'] ['9千-1.2万'] ['广州·天河区'] ['本科']

制造系数据分析师(144143)14薪起 ['https://jobs.51job.com/suzhou/141208877.html?s=sou_sou_soulb&t=0_0'] ['1.2-2.2万·14薪'] ['苏州'] ['本科']

医药学数据分析师 ['https://jobs.51job.com/wuhan/125764752.html?s=sou_sou_soulb&t=0_0'] ['6-8千'] ['武汉·武昌区'] ['本科']

初级数据开发工程师/数据分析师 ['https://jobs.51job.com/shanghai/144918779.html?s=sou_sou_soulb&t=0_0'] ['8千-1万'] ['上海·长宁区'] ['本科']

捷豹路虎技术数据分析师 ['https://jobs.51job.com/shanghai/138816632.html?s=sou_sou_soulb&t=0_0'] ['1-1.2万'] ['上海·浦东新区'] ['大专']

临床数据分析师 ['https://jobs.51job.com/shanghai/142054396.html?s=sou_sou_soulb&t=0_0'] ['2-3.5万·14薪'] ['上海'] ['本科']

数据分析师 ['https://jobs.51job.com/xiamen/135791088.html?s=sou_sou_soulb&t=0_0'] ['1.5-3万'] ['厦门·思明区'] ['本科']

数据分析师 ['https://jobs.51job.com/shenzhen/142233819.html?s=sou_sou_soulb&t=0_0'] ['1.6-3.2万'] ['深圳'] ['本科']

初级产品分析师 (产品数据对接方向) ['https://jobs.51job.com/guangzhou/142835981.html?s=sou_sou_soulb&t=0_0'] ['5-7千'] ['广州·增城区'] ['大专']

数据分析师/助理 ['https://jobs.51job.com/xian/139796685.html?s=sou_sou_soulb&t=0_0'] ['4-8千'] ['西安·高新技术产业开发区'] ['大专']

数据分析师 ['https://jobs.51job.com/nanjing/125771863.html?s=sou_sou_soulb&t=0_0'] ['4.5-6千'] ['南京·雨花台区'] ['大专']

电商数据分析师(144241)补贴奖金优 日语英语必须 ['https://jobs.51job.com/shanghai/141292343.html?s=sou_sou_soulb&t=0_0'] ['8千-1.5万'] ['上海'] ['大专']

数据分析工程师 ['https://jobs.51job.com/shanghai/143794908.html?s=sou_sou_soulb&t=0_0'] ['1.5-2.5万'] ['上海·闵行区'] ['本科']

金融数据分析师(双休、不加班) ['https://jobs.51job.com/guangzhou/143714318.html?s=sou_sou_soulb&t=0_0'] ['6-8千'] ['广州'] ['大专']

数据分析工程师 ['https://jobs.51job.com/shanghai/140661976.html?s=sou_sou_soulb&t=0_0'] ['7千-1.2万'] ['上海·普陀区'] ['本科']

初级数据分析师 500强16薪可能(148626) 日语英语必须 ['https://jobs.51job.com/shanghai/145143574.html?s=sou_sou_soulb&t=0_0'] ['7千-1万'] ['上海'] ['本科']

正在爬取第9页
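The loop in this step clicks the "next" button a fixed number of times, including once after the last page it parses, so it would fail if the search returned fewer pages than expected. One possible way to structure the paging is sketched here with plain callables, so that it runs without a browser. The names `iter_pages`, `get_source` and `go_next` are hypothetical and not part of the original script.

```python
from typing import Callable, Iterator, Tuple


def iter_pages(get_source: Callable[[], str],
               go_next: Callable[[], bool],
               max_pages: int) -> Iterator[Tuple[int, str]]:
    """Yield (page_number, html) for at most max_pages pages.

    go_next() should move to the next page and return False when there is
    none. It is not called after the last requested page.
    """
    for page in range(1, max_pages + 1):
        yield page, get_source()
        if page == max_pages or not go_next():
            break


# Stand-in for a browser: three fake pages and a "next" action that runs out.
fake_pages = ["<page 1>", "<page 2>", "<page 3>"]
position = {"index": 0}


def fake_source() -> str:
    return fake_pages[position["index"]]


def fake_next() -> bool:
    if position["index"] + 1 >= len(fake_pages):
        return False
    position["index"] += 1
    return True


for number, html in iter_pages(fake_source, fake_next, max_pages=9):
    print(number, html)
```

With Selenium, `get_source` could be `lambda: driver.page_source`, and `go_next` could wrap `driver.find_element(By.CLASS_NAME, 'btn-next').click()` and return `False` when `NoSuchElementException` is raised. That wiring is an assumption about how the site behaves on its last page and has not been checked against the live site.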

3) Save the scraped data as a CSV file

The final code after these changes:

    import csv
    from time import sleep

    from lxml import etree
    from selenium import webdriver
    from selenium.webdriver.common.by import By


    # Reduce the chance that the site detects Selenium
    def get_browser():
        options = webdriver.ChromeOptions()
        options.add_experimental_option('excludeSwitches', ['enable-automation'])
        options.add_argument("--disable-blink-features=AutomationControlled")
        driver = webdriver.Chrome(options=options)
        # Hide navigator.webdriver before any page script runs
        driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {
            "source": """
                Object.defineProperty(navigator, 'webdriver', {
                    get: () => undefined
                })
            """
        })
        return driver


    # Parse one results page and write one CSV row per job card
    def any_firspage(page, csvwriter):
        tree = etree.HTML(page)  # parse the HTML source
        div_list = tree.xpath('//div[@class="j_joblist"]/div')  # one div per job card
        for div in div_list:
            job_name = div.xpath('./a/div[1]/span/text()')[0]  # job title
            salary = div.xpath('./a/p/span/text()')
            company_name = div.xpath('./div[2]/a/text()')
            job_location = div.xpath('./a/p[1]/span[2]/span[1]/text()')
            job_education = div.xpath('./a/p[1]/span[2]/span[5]/text()')
            detail_url = div.xpath('./a/@href')
            # Every field except job_name is still a list, as returned by xpath()
            dit = {
                '工作名称': job_name,
                '薪资': salary,
                '公司名称': company_name,
                '工作地点': job_location,
                '学历': job_education,
                '详情页链接': detail_url
            }
            csvwriter.writerow(dit)  # append this job as one CSV row


    if __name__ == "__main__":
        # Create the CSV file that stores the results.
        # 'utf-8-sig' replaces the original 'ANSI', which is only available on Windows.
        with open('qiancheng.csv', 'w', encoding='utf-8-sig', newline='') as filename:
            csvwriter = csv.DictWriter(filename, fieldnames=[
                '工作名称',
                '薪资',
                '公司名称',
                '工作地点',
                '学历',
                '详情页链接',
            ])
            csvwriter.writeheader()  # write the header row
            url = 'https://we.51job.com/pc/search?keyword=%E6%95%B0%E6%8D%AE%E5%88%86%E6%9E%90%E5%B8%88'
            driver = get_browser()
            driver.get(url)  # send the request
            sleep(10)  # wait 10 seconds for the page to render
            driver.implicitly_wait(20)  # implicit wait: find_element retries for up to 20 seconds
            for page in range(1, 10):
                page_text = driver.page_source  # HTML of the current page
                any_firspage(page_text, csvwriter)  # parse the page and write its rows
                driver.find_element(By.CLASS_NAME, 'btn-next').click()  # click "next page"
                sleep(5)
                print("正在爬取第{}页".format(page))  # progress message: "scraping page N"

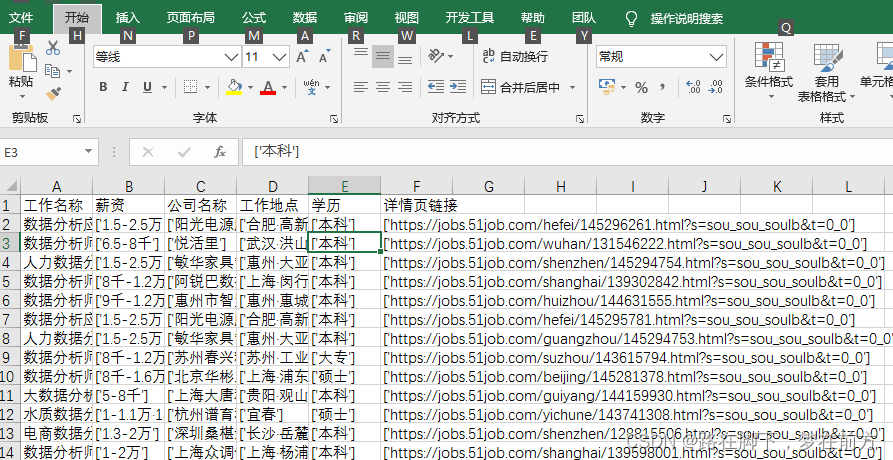

The result is shown in the figure above.
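The CSV-writing step can also be tried on its own, without a browser. The sketch here assumes each field has already been reduced to a plain string, and it uses the `utf-8-sig` encoding, which writes a byte-order mark that Excel uses to detect UTF-8. The helper name `write_jobs` is hypothetical. The example row reuses values from the sample output, with the company name left empty because that output does not show it.

```python
import csv
import os
import tempfile

# Column names taken from the script's DictWriter.
FIELDS = ['工作名称', '薪资', '公司名称', '工作地点', '学历', '详情页链接']


def write_jobs(path, rows):
    """Write a list of job dicts to a CSV file with a header row."""
    with open(path, 'w', encoding='utf-8-sig', newline='') as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


example_row = {
    '工作名称': '数据分析师',
    '薪资': '1-1.5万',
    '公司名称': '',  # not shown in the sample output, left blank
    '工作地点': '惠州',
    '学历': '本科',
    '详情页链接': 'https://jobs.51job.com/huizhou/142530342.html?s=sou_sou_soulb&t=0_0',
}

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, 'qiancheng_demo.csv')
    write_jobs(path, [example_row])
    with open(path, encoding='utf-8-sig', newline='') as f:
        for row in csv.DictReader(f):
            print(row['工作名称'], row['薪资'], row['工作地点'])
```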

That completes the data scraping stage. Data cleaning comes next.
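As a preview of what cleaning will involve, the salary strings in the scraped output come in several shapes: `8千-1.2万`, `1-1.5万`, `5-7千·13薪`, `10-18万/年`. A minimal sketch of turning them into numbers follows. It rests on several assumptions that are mine, not the site's documentation: 千 means 1,000 yuan and 万 means 10,000 yuan, a missing unit on the lower bound inherits the upper bound's unit, `/年` marks an annual figure that is divided by 12, and `N薪` is the number of monthly salaries paid per year (12 when absent). The function name `parse_salary` is hypothetical.

```python
import re
from typing import Optional, Tuple

UNIT = {'千': 1_000, '万': 10_000}

SALARY_PATTERN = re.compile(
    r'^(?P<low>\d+(?:\.\d+)?)(?P<low_unit>[千万])?'
    r'-(?P<high>\d+(?:\.\d+)?)(?P<high_unit>[千万])'
    r'(?P<per_year>/年)?'
    r'(?:·(?P<months>\d+)薪)?$'
)


def parse_salary(text: str) -> Optional[Tuple[int, int, int]]:
    """Return (monthly_low, monthly_high, months_per_year) in yuan, or None if unrecognized."""
    match = SALARY_PATTERN.match(text.strip())
    if match is None:
        return None
    high_unit = UNIT[match['high_unit']]
    # A lower bound without a unit shares the upper bound's unit, e.g. '1-1.5万'
    low_unit = UNIT[match['low_unit']] if match['low_unit'] else high_unit
    low = float(match['low']) * low_unit
    high = float(match['high']) * high_unit
    if match['per_year']:
        low, high = low / 12, high / 12  # annual figure converted to monthly
    months = int(match['months']) if match['months'] else 12
    return round(low), round(high), months


print(parse_salary('8千-1.2万'))    # (8000, 12000, 12)
print(parse_salary('5-7千·13薪'))   # (5000, 7000, 13)
print(parse_salary('10-18万/年'))   # (8333, 15000, 12)
```

Strings that do not match the pattern return `None`, so rows with a missing or unusual salary can be handled separately instead of being parsed incorrectly.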

This is my first hands-on web scraping project, so pointers from more experienced readers are welcome.

To be continued.
