Dashboard

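Every operation performed in Kubernetes so far has been done with the command-line tool kubectl. For a richer user experience, Kubernetes also provides a web-based user interface, the Dashboard. With the Dashboard, users can deploy containerized applications, monitor their status, troubleshoot problems, and manage the various resources in a Kubernetes cluster.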

I. Deploy the Dashboard

1) Download the YAML and run the Dashboard

# Download the YAML

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml -O dashboard.yml

[root@k8s-master ~]# ls

anaconda-ks.cfg dashboard.yml kube-flannel.yml

[root@k8s-master ~]# vim dashboard.yml

# Change the type of the kubernetes-dashboard Service

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort        # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001   # added
  selector:
    k8s-app: kubernetes-dashboard
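The same Service change can also be made after deployment, without editing the downloaded file, by applying a strategic merge patch to the running Service. A minimal sketch, assuming a hypothetical patch file named svc-nodeport-patch.yaml; the nodePort value is an assumption and must be a free port in the cluster's NodePort range (30000-32767 by default):

```yaml
# svc-nodeport-patch.yaml (hypothetical file name)
# Strategic merge patch for the kubernetes-dashboard Service.
# Entries under spec.ports are merged by their "port" key, so this
# updates the existing 443 entry instead of appending a second one.
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001   # assumption: any free port in the NodePort range
```

It would be applied with kubectl -n kubernetes-dashboard patch service kubernetes-dashboard --patch-file svc-nodeport-patch.yaml (strategic merge is the default patch type of kubectl patch).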

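- For reference, the file below is kube-flannel.yml (the flannel network add-on manifest listed by ls above), not dashboard.yml: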

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

# Deploy

[root@k8s-master ~]# kubectl apply -f dashboard.yml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

# View the resources in the kubernetes-dashboard namespace

[root@k8s-master ~]# kubectl get pod,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-64bcc67c9c-jpchc 0/1 Pending 0 106s

pod/kubernetes-dashboard-5c8bd6b59-gvpnl 0/1 Pending 0 106s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.106.233.243 <none> 8000/TCP 106s

service/kubernetes-dashboard NodePort 10.106.97.237 <none> 443:30001/TCP 107s

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-64bcc67c9c-jpchc 0/1 Pending 0 3m16s

kubernetes-dashboard-5c8bd6b59-gvpnl 0/1 Pending 0 3m16s

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-64bcc67c9c-jpchc 0/1 Pending 0 6m53s

kubernetes-dashboard-5c8bd6b59-gvpnl 0/1 Pending 0 6m53s

[root@k8s-master ~]# kubectl describe pod -n kubernetes-dashboard dashboard-metrics-scraper-64bcc67c9c-jpchc

Name: dashboard-metrics-scraper-64bcc67c9c-jpchc

Namespace: kubernetes-dashboard

Priority: 0

Service Account: kubernetes-dashboard

Node: <none>

Labels: k8s-app=dashboard-metrics-scraper

pod-template-hash=64bcc67c9c

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/dashboard-metrics-scraper-64bcc67c9c

Containers:

dashboard-metrics-scraper:

Image: kubernetesui/metrics-scraper:v1.0.8

Port: 8000/TCP

Host Port: 0/TCP

Liveness: http-get http://:8000/ delay=30s timeout=30s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/tmp from tmp-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-zxkr2 (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

tmp-volume:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

kube-api-access-zxkr2:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: kubernetes.io/os=linux

Tolerations: node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 8m9s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 node(s) had untolerated taint {node.kubernetes.io/unreachable: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

Warning FailedScheduling 2m51s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 node(s) had untolerated taint {node.kubernetes.io/unreachable: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-64bcc67c9c-jpchc 0/1 Pending 0 9m56s

kubernetes-dashboard-5c8bd6b59-gvpnl 0/1 Pending 0 9m56s

[root@k8s-master ~]# kubectl describe pod -n kubernetes-dashboard kubernetes-dashboard-5c8bd6b59-gvpnl

Name: kubernetes-dashboard-5c8bd6b59-gvpnl

Namespace: kubernetes-dashboard

Priority: 0

Service Account: kubernetes-dashboard

Node: <none>

Labels: k8s-app=kubernetes-dashboard

pod-template-hash=5c8bd6b59

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/kubernetes-dashboard-5c8bd6b59

Containers:

kubernetes-dashboard:

Image: kubernetesui/dashboard:v2.7.0

Port: 8443/TCP

Host Port: 0/TCP

Args:

--auto-generate-certificates

--namespace=kubernetes-dashboard

Liveness: http-get https://:8443/ delay=30s timeout=30s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/certs from kubernetes-dashboard-certs (rw)

/tmp from tmp-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-5znqq (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

kubernetes-dashboard-certs:

Type: Secret (a volume populated by a Secret)

SecretName: kubernetes-dashboard-certs

Optional: false

tmp-volume:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

kube-api-access-5znqq:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: kubernetes.io/os=linux

Tolerations: node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 10m default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 node(s) had untolerated taint {node.kubernetes.io/unreachable: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

Warning FailedScheduling 5m4s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 node(s) had untolerated taint {node.kubernetes.io/unreachable: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

[root@k8s-node1 ~]# docker pull kubernetesui/dashboard:v2.7.0

v2.7.0: Pulling from kubernetesui/dashboard

ee3247c7e545: Pull complete

8e052fd7e2d0: Pull complete

Digest: sha256:2e500d29e9d5f4a086b908eb8dfe7ecac57d2ab09d65b24f588b1d449841ef93

Status: Downloaded newer image for kubernetesui/dashboard:v2.7.0

docker.io/kubernetesui/dashboard:v2.7.0
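The FailedScheduling events above name two causes: the two worker nodes carry the node.kubernetes.io/unreachable taint (they are not reachable, which has to be fixed on the nodes themselves), and the control-plane node carries node-role.kubernetes.io/control-plane:NoSchedule, while the pods only tolerate node-role.kubernetes.io/master. If the Dashboard may also run on the control-plane node, one option is to add a matching toleration to the pod template of both Deployments in dashboard.yml. The fragment below is a sketch under that assumption and shows only the relevant path, not a complete Deployment:

```yaml
# Fragment of the kubernetes-dashboard Deployment in dashboard.yml;
# the same change would go into the dashboard-metrics-scraper Deployment.
spec:
  template:
    spec:
      tolerations:
        # already present in the upstream manifest
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        # assumption: added so the pod may also be scheduled on a node
        # tainted node-role.kubernetes.io/control-plane:NoSchedule
        - key: node-role.kubernetes.io/control-plane
          effect: NoSchedule
```

After editing, dashboard.yml would be applied again with kubectl apply. Leaving the control-plane taint untolerated keeps workloads off the control plane, which is usually the better choice once the worker nodes are Ready again.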

2) Create an access account and obtain a token

Create a service account

[root@k8s-master dashboard]# cat user.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kubernetes-dashboard

Create a ClusterRoleBinding

[root@k8s-master dashboard]# cat ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kubernetes-dashboard

[root@k8s-master dashboard]#

[root@k8s-master dashboard]# kubectl apply -f user.yml

serviceaccount/admin created

[root@k8s-master dashboard]# kubectl apply -f ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io/admin created

[root@k8s-master dashboard]#

[root@k8s-master dashboard]# kubectl get -f user.yml

NAME SECRETS AGE

admin 0 26s

[root@k8s-master dashboard]# kubectl get -f ClusterRoleBinding

NAME ROLE AGE

admin ClusterRole/cluster-admin 28s

[root@k8s-master dashboard]#
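The admin account is bound to cluster-admin, which grants full control over the entire cluster. For an account that should only browse resources in the Dashboard, a sketch that binds the built-in view ClusterRole instead could look like this; the name viewer is hypothetical:

```yaml
# Hypothetical read-only Dashboard account ("viewer" is an illustrative name).
apiVersion: v1
kind: ServiceAccount
metadata:
  name: viewer
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view            # built-in read-only ClusterRole
subjects:
- kind: ServiceAccount
  name: viewer
  namespace: kubernetes-dashboard
```

Its token would be created the same way, with kubectl -n kubernetes-dashboard create token viewer. The view role does not allow reading Secrets, so those pages stay inaccessible for this account.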

# Create a token for the admin account

[root@k8s-master dashboard]# kubectl -n kubernetes-dashboard create token admin

eyJhbGciOiJSUzI1NiIsImtpZCI6IkNCaENTR3BMRlhEckRlWmduYXNZaXktUVdqbWNOYWNTbnRVSTU2Q3RxWXcifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjYzNTk3MDAyLCJpYXQiOjE2NjM1OTM0MDIsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbiIsInVpZCI6IjI4YzZiNDhiLWY4MzEtNDYxNS1hNWIzLTc1MmVjYjZlYzIyZiJ9fSwibmJmIjoxNjYzNTkzNDAyLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4ifQ.QKJ4k1mWtFMCvrb3pg85Rzor3IAK9Y968sVu_Wg9cJ8DW6cKIDi3thFdvFWC3OmDHQMtxPrBwMRoKvCWlKpQ_kn4EU1eQGTNQJjStz9_J2N-thdpd1tROG-SSkUENWt173ob4bR_PPpKsEjske-kgtQEkuFbaXsq9wVXOMwIaFLixfRu5MqcaVSjbjMZDp2TYSCiG2PCA0Wqh8iD3fto9k9xinL-wMSxc-QU-lT_LU3oYOXxWUOd-klYPEm6jhimlxbfRy4UWDeZh9U4P_Lg9kKjgD7gvFkm0ReUZBKZshN50bBC0jWFCbbuZ1tbOvHZps57gK7tEQIqpHcDkiJsfw

[root@k8s-master dashboard]#
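The token printed by kubectl create token is short-lived: the exp and iat claims in the payload of the token above are 3600 seconds apart, so it expires after one hour and a new one is needed for the next login (kubectl create token also accepts --duration to request a different lifetime). If a non-expiring token is acceptable, for example in a lab cluster, Kubernetes can populate one into a Secret of type kubernetes.io/service-account-token that is annotated with the service account name. A minimal sketch, assuming the admin account created above; the Secret name admin-token is hypothetical:

```yaml
# Long-lived token for the admin ServiceAccount (sketch).
# "admin-token" is a hypothetical name; Kubernetes fills in data.token
# after the Secret has been created.
apiVersion: v1
kind: Secret
metadata:
  name: admin-token
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: admin
type: kubernetes.io/service-account-token
```

After applying it, the token can be read with kubectl -n kubernetes-dashboard get secret admin-token -o jsonpath='{.data.token}' | base64 -d. It stays valid until the Secret is deleted, which makes it better suited to test clusters than to production.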

[root@k8s-master ~]# kubectl get serviceaccount -n kubernetes-dashboard

NAME SECRETS AGE

dashboard-admin 0 5m20s

default 0 24m

kubernetes-dashboard 0 24m

[root@k8s-master ~]#

Delete a user (service account):

[root@k8s-master ~]# kubectl delete serviceaccount dashboard-admin -n kubernetes-dashboard

serviceaccount "dashboard-admin" deleted

[root@k8s-master ~]#

Delete its authorization (ClusterRoleBinding):

[root@k8s-master ~]# kubectl delete clusterrolebinding dashboard-admin-rb

clusterrolebinding.rbac.authorization.k8s.io "dashboard-admin-rb" deleted

[root@k8s-master ~]#

- To delete the admin ServiceAccount and ClusterRoleBinding created above (a ClusterRoleBinding is cluster-scoped, so it takes no namespace):

kubectl -n kubernetes-dashboard delete serviceaccount admin

kubectl delete clusterrolebinding admin

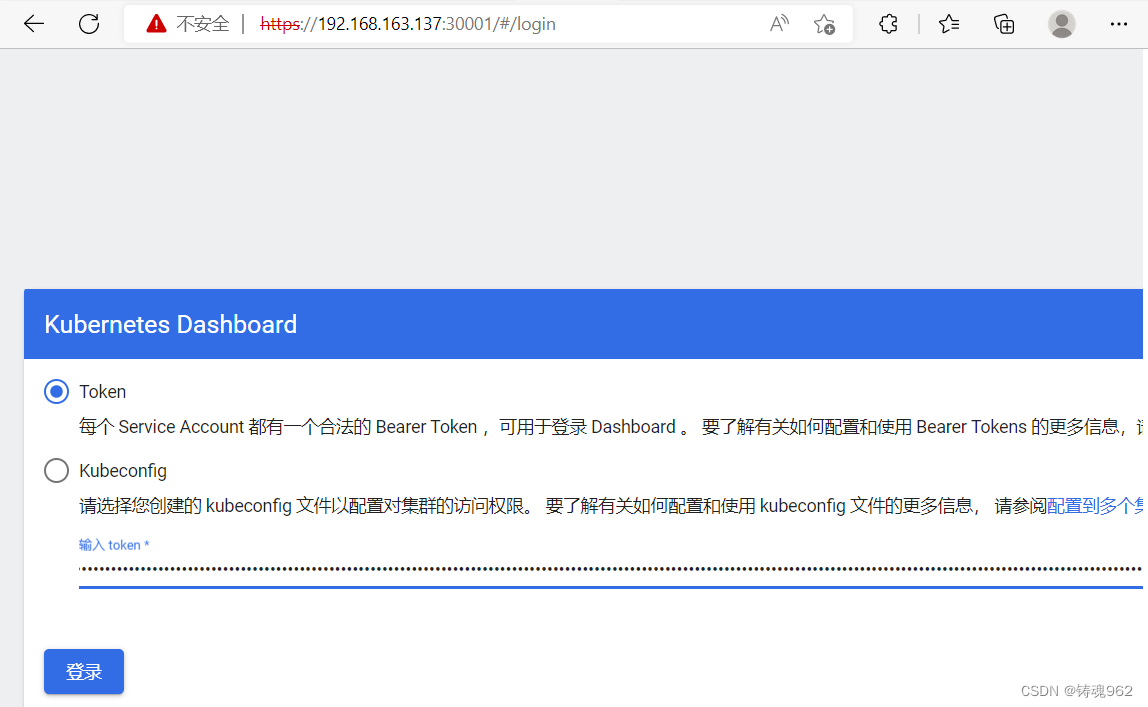

3) Access the Dashboard UI in a browser

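Open https://<node IP>:<nodePort> in a browser, where nodePort is the port set on the kubernetes-dashboard Service, and enter the token obtained above on the login page. Because the Dashboard serves a self-generated certificate (--auto-generate-certificates), the browser shows a certificate warning that has to be accepted first.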

II. Use the Dashboard

Create a pod

[root@k8s-master manifest]# cat httpd.yml

apiVersion: v1
kind: Pod
metadata:
  name: pod-pullimage
  namespace: dev
  labels:
    app: httpdlab
spec:
  nodeName: k8s-node2
  containers:
  - name: httpd
    image: httpd:latest
    imagePullPolicy: IfNotPresent

[root@k8s-master manifest]#
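httpd.yml places the pod in the dev namespace, and kubectl apply fails if that namespace does not exist yet. Assuming it has not been created earlier, a minimal manifest for it (equivalent to kubectl create namespace dev) is:

```yaml
# Namespace required by httpd.yml (metadata.namespace: dev).
apiVersion: v1
kind: Namespace
metadata:
  name: dev
```

It has to be applied before httpd.yml. Once the pod is running, it appears in the Dashboard after selecting dev in the namespace selector.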

[root@k8s-master manifest]# kubectl apply -f httpd.yml

pod/pod-pullimage created

[root@k8s-master manifest]# kubectl get -f httpd.yml

NAME READY STATUS RESTARTS AGE

pod-pullimage 1/1 Running 0 2s

[root@k8s-master manifest]# kubectl get pods

No resources found in default namespace.

[root@k8s-master manifest]# kubectl get pods -n dev

NAME READY STATUS RESTARTS AGE

pod-pullimage 1/1 Running 0 15s
