##

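RKE is a CNCF-certified open-source Kubernetes distribution that runs inside Docker containers. By removing most host dependencies and providing a stable path for deployment, upgrades, and rollbacks, it addresses the most common source of Kubernetes installation complexity.

With RKE, Kubernetes is independent of the operating system and platform it runs on, which makes automated Kubernetes operations straightforward. As long as a supported Docker version is running, RKE can deploy and run Kubernetes. RKE builds a cluster from a single command in a few minutes, and its declarative configuration makes Kubernetes upgrades atomic and safe.

Rancher documentation (Chinese): https://docs.rancher.cn/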

##Environment initialization

#Run on all hosts

#Configure the local hosts file

#Adjust to the actual host IP addresses

echo 192.168.12.21 app1-12.21 >> /etc/hosts

echo 192.168.12.22 app2-12.22 >> /etc/hosts

echo 192.168.12.23 app3-12.23 >> /etc/hosts

echo 192.168.12.24 app4-12.24 >> /etc/hosts

cat /etc/hosts
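Because `echo ... >> /etc/hosts` appends a new line on every run, repeating the setup leaves duplicate entries. A minimal sketch of an idempotent variant follows; the helper name `add_host_entry` is hypothetical and not part of the original steps.

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]  (hypothetical helper, not from the original post)
# Appends "IP NAME" to FILE (default /etc/hosts) only when that exact
# line is not already present, so re-running the setup is harmless.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
    if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
        echo "$ip $name" >> "$file"
    fi
}
```

For example, `add_host_entry 192.168.12.21 app1-12.21` can be run any number of times and leaves a single entry in /etc/hosts.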

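#Disable swap (swapoff takes effect immediately; the sed line keeps swap disabled after a reboot)

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab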

#Time synchronization

yum -y install ntpdate

#Run crontab -e and add the entry below (syncs once an hour)

crontab -e

0 * * * * /usr/sbin/ntpdate ntp.aliyun.com &>/dev/null
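`crontab -e` opens an editor, which is awkward when the same step has to be repeated on every host. The sketch below adds the entry non-interactively and only once; `with_cron_line` is a hypothetical helper that reads the current crontab on stdin and prints it with the line appended if it is missing.

```shell
#!/usr/bin/env bash
# with_cron_line LINE  (hypothetical helper, not from the original post)
# Reads an existing crontab on stdin and writes it to stdout,
# appending LINE only when an identical line is not already present.
with_cron_line() {
    local line="$1" current
    current="$(cat)"
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -x whole line, -F fixed string: the "*" fields are not treated as regex
    printf '%s\n' "$current" | grep -qxF "$line" || printf '%s\n' "$line"
}

# Usage (installs the result as the current user's crontab):
#   crontab -l 2>/dev/null \
#     | with_cron_line '0 * * * * /usr/sbin/ntpdate ntp.aliyun.com &>/dev/null' \
#     | crontab -
```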

#Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

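#Configure ip_forward and bridge netfilter

#Load br_netfilter first; the net.bridge.* keys only exist once the module is loaded

modprobe br_netfilter

#Append the following settings to /etc/sysctl.conf

cat >> /etc/sysctl.conf <<EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl -p /etc/sysctl.conf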
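A quick way to confirm that all three kernel parameters really ended up in the config file is to scan it for them. This is only a sketch; `missing_sysctl_keys` is a hypothetical helper, and it checks the file contents rather than the live kernel values.

```shell
#!/usr/bin/env bash
# missing_sysctl_keys [FILE]  (hypothetical helper, not from the original post)
# Prints every required key that is not set to 1 in FILE
# (default /etc/sysctl.conf). Prints nothing when all keys are present.
missing_sysctl_keys() {
    local file="${1:-/etc/sysctl.conf}" key escaped
    for key in net.ipv4.ip_forward \
               net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables; do
        # Escape the dots so they match literally in the regex.
        escaped="${key//./\\.}"
        # Allow optional spaces around "="; commented-out lines do not match.
        grep -Eq "^[[:space:]]*${escaped}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$file" \
            || echo "$key"
    done
}
```

Running `missing_sysctl_keys` with no arguments on a prepared host should print nothing.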

##Deploy Docker

#Install gcc and yum-utils with yum

yum -y install gcc gcc-c++ yum-utils

#Remove old versions

yum -y remove docker*

rm -rf /opt/docker

rm -rf /opt/containerd

rm -rf /var/lib/docker

rm -rf /etc/docker/daemon.json

#Configure a domestic (Aliyun) mirror as the Docker repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#List the versions available for installation

yum list docker-ce --showduplicates | sort -r

yum list docker-ce-cli --showduplicates | sort -r

yum list containerd.io --showduplicates | sort -r

#Install the chosen version

yum install docker-ce-20.10.18-3.el7 docker-ce-cli-20.10.18-3.el7 containerd.io -y

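#Change the Docker working directory

#By default Docker stores its data in /var/lib/docker; change the working directory to /opt/docker

vim /usr/lib/systemd/system/docker.service

#Comment out

#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

#Add (--graph is the deprecated alias of --data-root)

ExecStart=/usr/bin/dockerd --graph /opt/docker

###/opt/docker is the directory where Docker should store its data

#Save the file with :wq

#Create the /opt/docker directory

mkdir -p /opt/docker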

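#Configure registry mirrors (use > rather than >> so that re-running does not produce invalid JSON)

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF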

{

"oom-score-adjust": -1000,

"max-concurrent-downloads": 3,

"max-concurrent-uploads": 10,

"registry-mirrors": ["https://1ybmbiq3.mirror.aliyuncs.com","https://7bezldxe.mirror.aliyuncs.com/"],

"insecure-registries":["125.71.215.213:5000","docker.funi.local:5000"] ,

"storage-driver": "overlay2",

"storage-opts": ["overlay2.override_kernel_check=true"],

"log-driver": "json-file",

"log-opts": { "max-size": "100m", "max-file": "3" },

"data-root": "/opt/docker"

}

EOF

#Reload systemd and restart the Docker service

systemctl daemon-reload

systemctl restart docker
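After the restart it is worth confirming that Docker actually picked up the new data directory. The sketch below compares the `DockerRootDir` value reported by `docker info` with the expected path; `check_docker_root` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_docker_root [EXPECTED_DIR]  (hypothetical helper, not from the original post)
# Returns 0 when the running daemon reports EXPECTED_DIR (default /opt/docker)
# as its root directory, 1 when it reports something else,
# and 2 when `docker info` itself fails.
check_docker_root() {
    local expected="${1:-/opt/docker}" actual
    actual="$(docker info --format '{{.DockerRootDir}}')" || return 2
    [ "$actual" = "$expected" ]
}

# Usage:
#   check_docker_root /opt/docker && echo "data-root OK"
```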

##Configure passwordless SSH

#Add the rancher user

useradd rancher

usermod -aG docker rancher

echo 123 | passwd --stdin rancher

#Log in as the rancher user and generate an SSH key pair

su - rancher

ssh-keygen

ssh-copy-id rancher@192.168.12.21

ssh-copy-id rancher@192.168.12.22

ssh-copy-id rancher@192.168.12.23

ssh-copy-id rancher@192.168.12.24 ##Note: the local host itself also needs passwordless access!

#Test and verify

ssh rancher@192.168.12.23
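Testing a single host by hand does not show whether every node is reachable, and RKE needs all of them. The sketch below loops over the hosts with `BatchMode=yes`, so ssh fails instead of prompting for a password; `check_ssh_hosts` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_ssh_hosts USER HOST...  (hypothetical helper, not from the original post)
# Tries a key-only login to every host and prints OK/FAIL per host.
# Returns 1 if at least one host could not be reached without a password.
check_ssh_hosts() {
    local user="$1" host failed=0
    shift
    for host in "$@"; do
        # BatchMode=yes disables password prompts; ConnectTimeout avoids hanging.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$user@$host" true; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (as the rancher user):
#   check_ssh_hosts rancher 192.168.12.21 192.168.12.22 192.168.12.23 192.168.12.24
```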

##Deploy the rke tool

wget https://github.com/rancher/rke/releases/download/v1.3.19/rke_linux-amd64

mv /home/rancher/rke_linux-amd64 /usr/local/bin/rke

chmod +x /usr/local/bin/rke

rke --version

#Generate the deployment config file (make sure to switch to the unprivileged deployment user)

rke config --name cluster.yml

[+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]: #SSH private key location

[+] Number of Hosts [1]: 4 #number of nodes

[+] SSH Address of host (1) [none]: 192.168.12.24 #node IP

[+] SSH Port of host (1) [22]: #SSH port

[+] SSH Private Key Path of host (192.168.12.24) [none]: ~/.ssh/id_rsa #private key path for this host

[+] SSH User of host (192.168.12.24) [ubuntu]: rancher #user for logging in to the host

[+] Is host (192.168.12.24) a Control Plane host (y/n)? [y]: y #whether to deploy the Control Plane role

[+] Is host (192.168.12.24) a Worker host (y/n)? [n]: y #whether to deploy the Worker role

[+] Is host (192.168.12.24) an etcd host (y/n)? [n]: y #whether to deploy the etcd role

[+] Override Hostname of host (192.168.12.24) [none]: #whether to override the existing hostname

[+] Internal IP of host (192.168.12.24) [none]: #LAN IP address of the host

[+] Docker socket path on host (192.168.12.24) [/var/run/docker.sock]: #path to docker.sock on the host

[+] SSH Address of host (2) [none]: 192.168.12.23 ##same as above for the remaining hosts; answer according to your setup

[+] SSH Port of host (2) [22]:

[+] SSH Private Key Path of host (192.168.12.23) [none]: ~/.ssh/id_rsa

[+] SSH User of host (192.168.12.23) [ubuntu]: rancher

[+] Is host (192.168.12.23) a Control Plane host (y/n)? [y]: y

[+] Is host (192.168.12.23) a Worker host (y/n)? [n]: y

[+] Is host (192.168.12.23) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (192.168.12.23) [none]:

[+] Internal IP of host (192.168.12.23) [none]:

[+] Docker socket path on host (192.168.12.23) [/var/run/docker.sock]:

[+] SSH Address of host (3) [none]: 192.168.12.22

[+] SSH Port of host (3) [22]:

[+] SSH Private Key Path of host (192.168.12.22) [none]: ~/.ssh/id_rsa

[+] SSH User of host (192.168.12.22) [ubuntu]: rancher

[+] Is host (192.168.12.22) a Control Plane host (y/n)? [y]: n

[+] Is host (192.168.12.22) a Worker host (y/n)? [n]: y

[+] Is host (192.168.12.22) an etcd host (y/n)? [n]: n

[+] Override Hostname of host (192.168.12.22) [none]:

[+] Internal IP of host (192.168.12.22) [none]:

[+] Docker socket path on host (192.168.12.22) [/var/run/docker.sock]:

[+] SSH Address of host (4) [none]: 192.168.12.21

[+] SSH Port of host (4) [22]:

[+] SSH Private Key Path of host (192.168.12.21) [none]: ~/.ssh/id_rsa

[+] SSH User of host (192.168.12.21) [ubuntu]: rancher

[+] Is host (192.168.12.21) a Control Plane host (y/n)? [y]: n

[+] Is host (192.168.12.21) a Worker host (y/n)? [n]: y

[+] Is host (192.168.12.21) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (192.168.12.21) [none]:

[+] Internal IP of host (192.168.12.21) [none]:

[+] Docker socket path on host (192.168.12.21) [/var/run/docker.sock]:

[+] Network Plugin Type (flannel, calico, weave, canal, aci) [canal]: flannel #network plugin to use

[+] Authentication Strategy [x509]: #authentication strategy; the default is fine

[+] Authorization Mode (rbac, none) [rbac]: #authorization mode

[+] Kubernetes Docker image [rancher/hyperkube:v1.24.10-rancher4]: rancher/hyperkube:v1.20.15-rancher1 #Kubernetes image for the cluster

[+] Cluster domain [cluster.local]: #cluster domain

[+] Service Cluster IP Range [10.43.0.0/16]: #Service IP address range in the cluster

[+] Enable PodSecurityPolicy [n]: #whether to enable Pod security policy

[+] Cluster Network CIDR [10.42.0.0/16]: #cluster Pod network

[+] Cluster DNS Service IP [10.43.0.10]: #cluster DNS Service IP address

[+] Add addon manifest URLs or YAML files [no]: #whether to add addon manifest URLs or config files

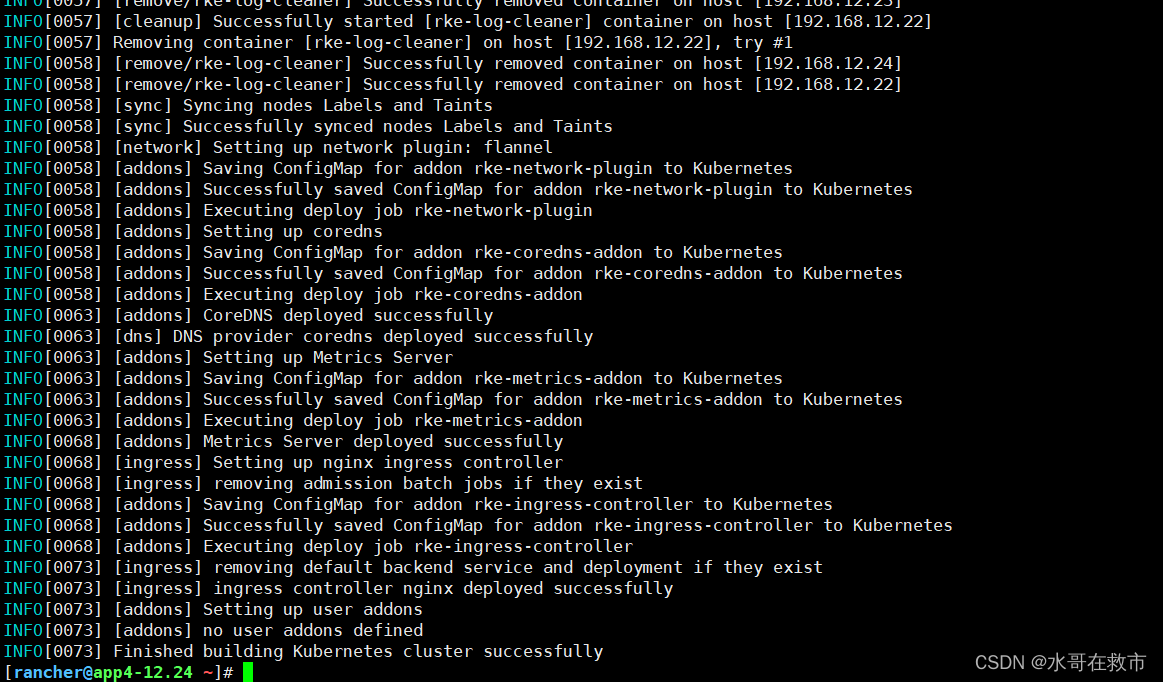
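Before running the deployment, it can help to count how many nodes carry the etcd role. etcd relies on a majority quorum, so an odd member count (1, 3, 5) is the usual recommendation. The sketch below assumes the role entries in cluster.yml look like `- etcd` on their own line, as `rke config` writes them; both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# count_etcd_roles [FILE]  (hypothetical helper, not from the original post)
# Counts lines of the form "- etcd" in FILE (default cluster.yml).
# The "etcd:" key under services does not match this pattern.
count_etcd_roles() {
    local file="${1:-cluster.yml}"
    # grep -c prints the count; "|| true" keeps a count of 0 from being an error.
    grep -cE '^[[:space:]]*-[[:space:]]*etcd[[:space:]]*$' "$file" || true
}

# etcd_count_is_odd [FILE]  (hypothetical helper)
# Returns 0 when the number of etcd nodes is odd.
etcd_count_is_odd() {
    local n
    n="$(count_etcd_roles "${1:-cluster.yml}")"
    [ $((n % 2)) -eq 1 ]
}

# Usage:
#   etcd_count_is_odd cluster.yml || echo "warning: even number of etcd nodes"
```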

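#Run the deployment

rke up --config cluster.yml

##Pitfall, verified firsthand: the ingress image in the config file was changed by hand. A newer version broke the ingress service, so nginx-1.2.1-rancher1 is used.

##To update the cluster: rke up --update-only --config cluster.yml

#The config file is shown below; adjust it to your environment before using it directly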

cat > cluster.yml <<'EOF'
# If you intended to deploy Kubernetes in an air-gapped environment,
# please consult the documentation on how to configure custom RKE images.
nodes:
- address: 192.168.12.24
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 192.168.12.23
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 192.168.12.22
  port: "22"
  internal_address: ""
  role:
  - worker
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: 192.168.12.21
  port: "22"
  internal_address: ""
  role:
  - worker
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
services:
  etcd:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
network:
  plugin: flannel
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.5.4
  alpine: rancher/rke-tools:v0.1.88
  nginx_proxy: rancher/rke-tools:v0.1.88
  cert_downloader: rancher/rke-tools:v0.1.88
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.88
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.21.1
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.21.1
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.21.1
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  coredns: rancher/mirrored-coredns-coredns:1.9.3
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.21.1
  kubernetes: rancher/hyperkube:v1.20.15-rancher1
  flannel: rancher/mirrored-coreos-flannel:v0.15.1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.22.5
  calico_cni: rancher/calico-cni:v3.22.5-rancher1
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.22.5
  calico_ctl: rancher/mirrored-calico-ctl:v3.22.5
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.5
  canal_node: rancher/mirrored-calico-node:v3.22.5
  canal_cni: rancher/calico-cni:v3.22.5-rancher1
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.22.5
  canal_flannel: rancher/mirrored-flannelcni-flannel:v0.17.0
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.5
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.6
  ingress: rancher/nginx-ingress-controller:nginx-1.2.1-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.1.1
  metrics_server: rancher/mirrored-metrics-server:v0.6.2
  windows_pod_infra_container: rancher/mirrored-pause:3.6
  aci_cni_deploy_container: noiro/cnideploy:5.2.3.5.1d150da
  aci_host_container: noiro/aci-containers-host:5.2.3.5.1d150da
  aci_opflex_container: noiro/opflex:5.2.3.5.1d150da
  aci_mcast_container: noiro/opflex:5.2.3.5.1d150da
  aci_ovs_container: noiro/openvswitch:5.2.3.5.1d150da
  aci_controller_container: noiro/aci-containers-controller:5.2.3.5.1d150da
  aci_gbp_server_container: noiro/gbp-server:5.2.3.5.1d150da
  aci_opflex_server_container: noiro/opflex-server:5.2.3.5.1d150da
ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
enable_cri_dockerd: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
  default_ingress_class: null
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false
dns: null
EOF

##Install the kubectl client

#Configure the Kubernetes repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

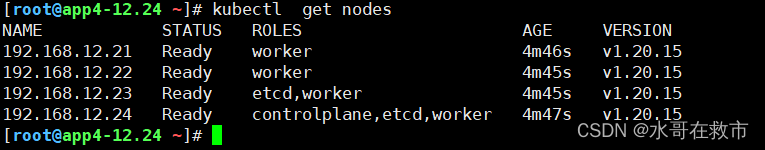

yum install -y kubectl-1.20.15

su - root

mkdir -p /root/.kube

cp -a /home/rancher/kube_config_cluster.yml /root/.kube/config

kubectl get nodes
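Right after `rke up`, some nodes may still be starting. The sketch below filters the output of `kubectl get nodes --no-headers` and prints only the nodes whose STATUS column is not exactly `Ready` (a cordoned node showing `Ready,SchedulingDisabled` is therefore listed as well). `not_ready_nodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# not_ready_nodes  (hypothetical helper, not from the original post)
# Prints the name of every node whose STATUS is anything other than "Ready".
# Column 1 of `kubectl get nodes --no-headers` is NAME, column 2 is STATUS.
not_ready_nodes() {
    kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}

# Usage:
#   [ -z "$(not_ready_nodes)" ] && echo "all nodes Ready"
```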

##The highly available k8s cluster deployed with rke is now complete.

##Add kubectl command completion

yum install -y bash-completion bash-completion-extras

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo 'source <(kubectl completion bash)' >/etc/profile.d/k8s.sh && source /etc/profile

kubectl completion bash >/etc/bash_completion.d/kubectl

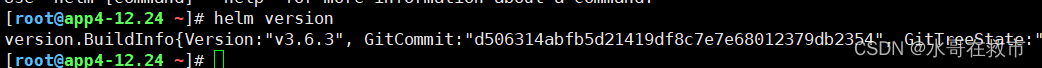

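###Deploy helm 3.6.3

Helm concepts

Helm 3 consists of a single component, the helm client; the Tiller server used by Helm 2 was removed in Helm 3:

helm: a command-line tool for developing and managing charts locally, managing chart repositories, and so on. In Helm 3 it talks to the k8s apiserver directly, renders a chart into a release, and manages that release

Tiller: the server side of Helm 2, which received requests from the helm client and talked to the k8s apiserver. It does not exist in Helm 3 and does not need to be installed

chart: Helm's packaging format. A chart is a set of files that describes a group of related k8s cluster resources

release: a chart deployed into a Kubernetes cluster with the helm install command is called a release

Repository: a Helm chart repository. The Helm client accesses the repository's chart index file and archives over HTTP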

#Download the package

wget https://get.helm.sh/helm-v3.6.3-linux-amd64.tar.gz

tar xf helm-v3.6.3-linux-amd64.tar.gz

mv linux-amd64/helm /usr/bin

helm version

version.BuildInfo{Version:"v3.6.3", GitCommit:"d506314abfb5d21419df8c7e7e68012379db2354", GitTreeState:"clean", GoVersion:"go1.16.5"}

#Add the Rancher stable chart repository

helm repo add rancher-stable https://releases.rancher.com/server-charts/stable

#Update the helm repository index

helm repo update

#List all chart repositories added to the local Helm environment

helm repo list

##Deploy rancher 2.5.16

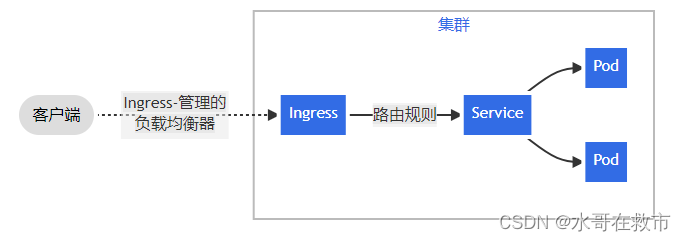

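Ingress provides HTTP and HTTPS routing from outside the cluster to services inside the cluster. Traffic routing is controlled by rules defined on the Ingress resource. All traffic can also be sent to a single Service.

#Create the k8s namespace:

kubectl create namespace cattle-system

#Install rancher. --version sets the rancher version, --namespace sets the namespace to run in, --set hostname sets the access domain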

helm install rancher rancher-stable/rancher --version v2.5.16 --namespace cattle-system --set hostname=bengbu.rancher.com --set ingress.tls.source=secret

#Add the SSL certificate (a self-signed certificate script is given here for reference)

#The quoted 'EOF' keeps the shell from expanding the script's variables while the file is written

cat > ssl_make.sh << 'EOF'
#!/bin/bash -e

help ()
{
    echo ' ================================================================ '
    echo ' --ssl-domain: main domain for the SSL certificate; defaults to www.rancher.local if not given; can be ignored if the service is accessed by IP;'
    echo ' --ssl-trusted-ip: SSL certificates normally only trust requests made by domain name; if the server must be reached by IP, add extension IPs to the certificate, separated by commas;'
    echo ' --ssl-trusted-domain: to allow access through several domains, add extension domains (SSL_TRUSTED_DOMAIN), separated by commas;'
    echo ' --ssl-size: SSL key size in bits, default 2048;'
    echo ' --ssl-cn: country code (2-letter code), default CN;'
    echo ' Usage example:'
    echo ' ./ssl_make.sh --ssl-domain=www.test.com --ssl-trusted-domain=www.test2.com \ '
    echo ' --ssl-trusted-ip=1.1.1.1,2.2.2.2,3.3.3.3 --ssl-size=2048 --ssl-date=3650'
    echo ' ================================================================'
}

case "$1" in
    -h|--help) help; exit;;
esac

if [[ $1 == '' ]];then
    help;
    exit;
fi

CMDOPTS="$*"
for OPTS in $CMDOPTS;
do
    key=$(echo ${OPTS} | awk -F"=" '{print $1}' )
    value=$(echo ${OPTS} | awk -F"=" '{print $2}' )
    case "$key" in
        --ssl-domain) SSL_DOMAIN=$value ;;
        --ssl-trusted-ip) SSL_TRUSTED_IP=$value ;;
        --ssl-trusted-domain) SSL_TRUSTED_DOMAIN=$value ;;
        --ssl-size) SSL_SIZE=$value ;;
        --ssl-date) SSL_DATE=$value ;;
        --ca-date) CA_DATE=$value ;;
        --ssl-cn) CN=$value ;;
    esac
done

# CA settings
CA_DATE=${CA_DATE:-3650}
CA_KEY=${CA_KEY:-cakey.pem}
CA_CERT=${CA_CERT:-cacerts.pem}
CA_DOMAIN=cattle-ca

# SSL settings
SSL_CONFIG=${SSL_CONFIG:-$PWD/openssl.cnf}
SSL_DOMAIN=${SSL_DOMAIN:-'www.rancher.local'}
SSL_DATE=${SSL_DATE:-3650}
SSL_SIZE=${SSL_SIZE:-2048}

## Country code (2-letter code), default CN
CN=${CN:-CN}

SSL_KEY=$SSL_DOMAIN.key
SSL_CSR=$SSL_DOMAIN.csr
SSL_CERT=$SSL_DOMAIN.crt

echo -e "\033[32m ---------------------------- \033[0m"
echo -e "\033[32m | Generate SSL Cert | \033[0m"
echo -e "\033[32m ---------------------------- \033[0m"

if [[ -e ./${CA_KEY} ]]; then
    echo -e "\033[32m ====> 1. Found an existing CA private key; backing up ${CA_KEY} as ${CA_KEY}-bak, then recreating it \033[0m"
    mv ${CA_KEY} "${CA_KEY}"-bak
    openssl genrsa -out ${CA_KEY} ${SSL_SIZE}
else
    echo -e "\033[32m ====> 1. Generating a new CA private key ${CA_KEY} \033[0m"
    openssl genrsa -out ${CA_KEY} ${SSL_SIZE}
fi

if [[ -e ./${CA_CERT} ]]; then
    echo -e "\033[32m ====> 2. Found an existing CA certificate; backing up ${CA_CERT} as ${CA_CERT}-bak, then recreating it \033[0m"
    mv ${CA_CERT} "${CA_CERT}"-bak
    openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_DATE} -out ${CA_CERT} -subj "/C=${CN}/CN=${CA_DOMAIN}"
else
    echo -e "\033[32m ====> 2. Generating a new CA certificate ${CA_CERT} \033[0m"
    openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_DATE} -out ${CA_CERT} -subj "/C=${CN}/CN=${CA_DOMAIN}"
fi

echo -e "\033[32m ====> 3. Generating the OpenSSL config file ${SSL_CONFIG} \033[0m"
cat > ${SSL_CONFIG} <<EOM
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
extendedKeyUsage = clientAuth, serverAuth
EOM

# Always emit subjectAltName (SSL_DOMAIN is included in the check): modern TLS
# clients, including Go 1.15+, validate the hostname against SAN rather than CN.
if [[ -n ${SSL_TRUSTED_IP} || -n ${SSL_TRUSTED_DOMAIN} || -n ${SSL_DOMAIN} ]]; then
cat >> ${SSL_CONFIG} <<EOM
subjectAltName = @alt_names
[alt_names]
EOM
    IFS=","
    dns=(${SSL_TRUSTED_DOMAIN})
    dns+=(${SSL_DOMAIN})
    for i in "${!dns[@]}"; do
        echo DNS.$((i+1)) = ${dns[$i]} >> ${SSL_CONFIG}
    done

    if [[ -n ${SSL_TRUSTED_IP} ]]; then
        ip=(${SSL_TRUSTED_IP})
        for i in "${!ip[@]}"; do
            echo IP.$((i+1)) = ${ip[$i]} >> ${SSL_CONFIG}
        done
    fi
fi

echo -e "\033[32m ====> 4. Generating the service SSL KEY ${SSL_KEY} \033[0m"
openssl genrsa -out ${SSL_KEY} ${SSL_SIZE}

echo -e "\033[32m ====> 5. Generating the service SSL CSR ${SSL_CSR} \033[0m"
openssl req -sha256 -new -key ${SSL_KEY} -out ${SSL_CSR} -subj "/C=${CN}/CN=${SSL_DOMAIN}" -config ${SSL_CONFIG}

echo -e "\033[32m ====> 6. Generating the service SSL CERT ${SSL_CERT} \033[0m"
openssl x509 -sha256 -req -in ${SSL_CSR} -CA ${CA_CERT} \
    -CAkey ${CA_KEY} -CAcreateserial -out ${SSL_CERT} \
    -days ${SSL_DATE} -extensions v3_req \
    -extfile ${SSL_CONFIG}

echo -e "\033[32m ====> 7. Certificate creation finished \033[0m"
echo
echo -e "\033[32m ====> 8. Printing the results in YAML format \033[0m"
echo "----------------------------------------------------------"
echo "ca_key: |"
cat $CA_KEY | sed 's/^/ /'
echo
echo "ca_cert: |"
cat $CA_CERT | sed 's/^/ /'
echo
echo "ssl_key: |"
cat $SSL_KEY | sed 's/^/ /'
echo
echo "ssl_csr: |"
cat $SSL_CSR | sed 's/^/ /'
echo
echo "ssl_cert: |"
cat $SSL_CERT | sed 's/^/ /'
echo

echo -e "\033[32m ====> 9. Appending the CA certificate to the cert file \033[0m"
cat ${CA_CERT} >> ${SSL_CERT}
echo "ssl_cert: |"
cat $SSL_CERT | sed 's/^/ /'
echo

echo -e "\033[32m ====> 10. Renaming the service certificate \033[0m"
echo "cp ${SSL_DOMAIN}.key tls.key"
cp ${SSL_DOMAIN}.key tls.key
echo "cp ${SSL_DOMAIN}.crt tls.crt"
cp ${SSL_DOMAIN}.crt tls.crt
EOF

#Make ssl_make.sh executable

chmod +x ssl_make.sh

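#Following the script's help text, choose the parameters and generate the SSL certificate

./ssl_make.sh --ssl-domain=bengbu.rancher.com --ssl-size=2048 --ssl-date=3650

#Create the secret resources from the SSL certificate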

kubectl -n cattle-system create secret tls tls-rancher-ingress --cert=tls.crt --key=tls.key

kubectl -n cattle-system create secret generic tls-ca --from-file=cacerts.pem
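A secret built from a certificate and key that do not belong together only shows up later as a TLS error in the ingress. As a sketch, the pair can be checked with openssl by comparing the public key embedded in the certificate with the one derived from the private key; `cert_matches_key` is a hypothetical helper name, and it assumes `openssl` is installed.

```shell
#!/usr/bin/env bash
# cert_matches_key CERT KEY  (hypothetical helper, not from the original post)
# Returns 0 when the public key in CERT equals the public key of KEY,
# 1 when they differ, and 2 when a file cannot be read.
# Only the first certificate in CERT is inspected, so a tls.crt with the
# CA certificate appended still works.
cert_matches_key() {
    local cert="$1" key="$2" cert_pub key_pub
    cert_pub="$(openssl x509 -in "$cert" -noout -pubkey 2>/dev/null)" || return 2
    key_pub="$(openssl pkey -in "$key" -pubout 2>/dev/null)" || return 2
    [ "$cert_pub" = "$key_pub" ]
}

# Usage:
#   cert_matches_key tls.crt tls.key && echo "certificate and key match"
```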

#View the details of the ingress resource

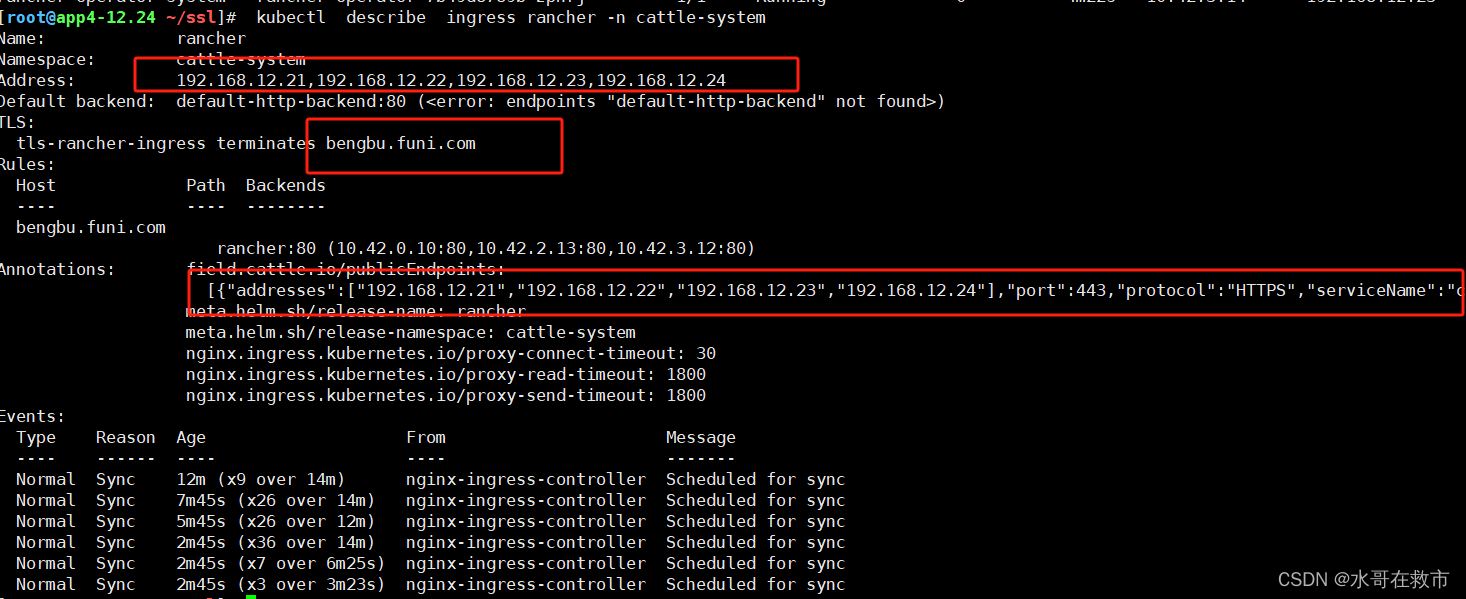

kubectl describe ingress rancher -n cattle-system

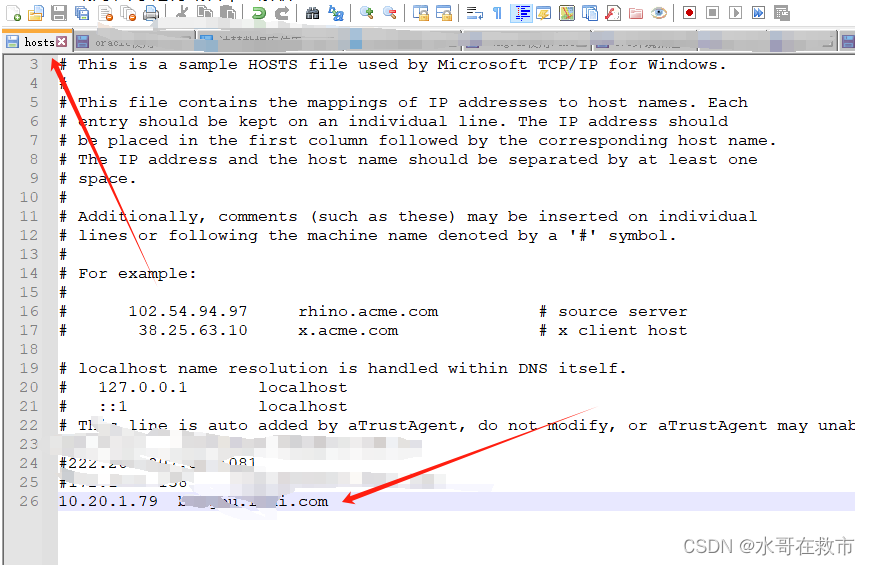

#Add a local hosts entry for testing

echo 192.168.12.24 bengbu.rancher.com >> /etc/hosts

##To test from Windows, add the host IP to the Windows hosts file

#To expose the ingress externally it has to be proxied once more; the nginx config file is as follows

cat > rancher.conf << 'EOF'
upstream rancherhttps {
    server 192.168.12.21:443;
    server 192.168.12.22:443;
    server 192.168.12.23:443;
    server 192.168.12.24:443;
    keepalive 60;
}

upstream rancherhttp {
    server 192.168.12.21:80;
    server 192.168.12.22:80;
    server 192.168.12.23:80;
    server 192.168.12.24:80;
    keepalive 60;
}

server {
    listen 443 ssl;
    server_name bengbu.rancher.com;
    ssl_certificate /etc/nginx/conf.d/ssl/tls.crt;
    ssl_certificate_key /etc/nginx/conf.d/ssl/tls.key;
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers HIGH:!aNULL:!MD5;
    charset utf-8;
    access_log /var/log/nginx/rancherhttps.log;

    location / {
        proxy_pass https://rancherhttps;
        add_header X-Xss-Protection "1;mode=block";
        add_header X-Content-Type-Options nosniff;
        proxy_http_version 1.1;
        proxy_redirect off;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "Upgrade";
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;
    }
}
EOF

#Check the configuration syntax, then reload nginx

nginx -t

systemctl reload nginx

##Note: for access from the LAN, remember to resolve the domain locally!!

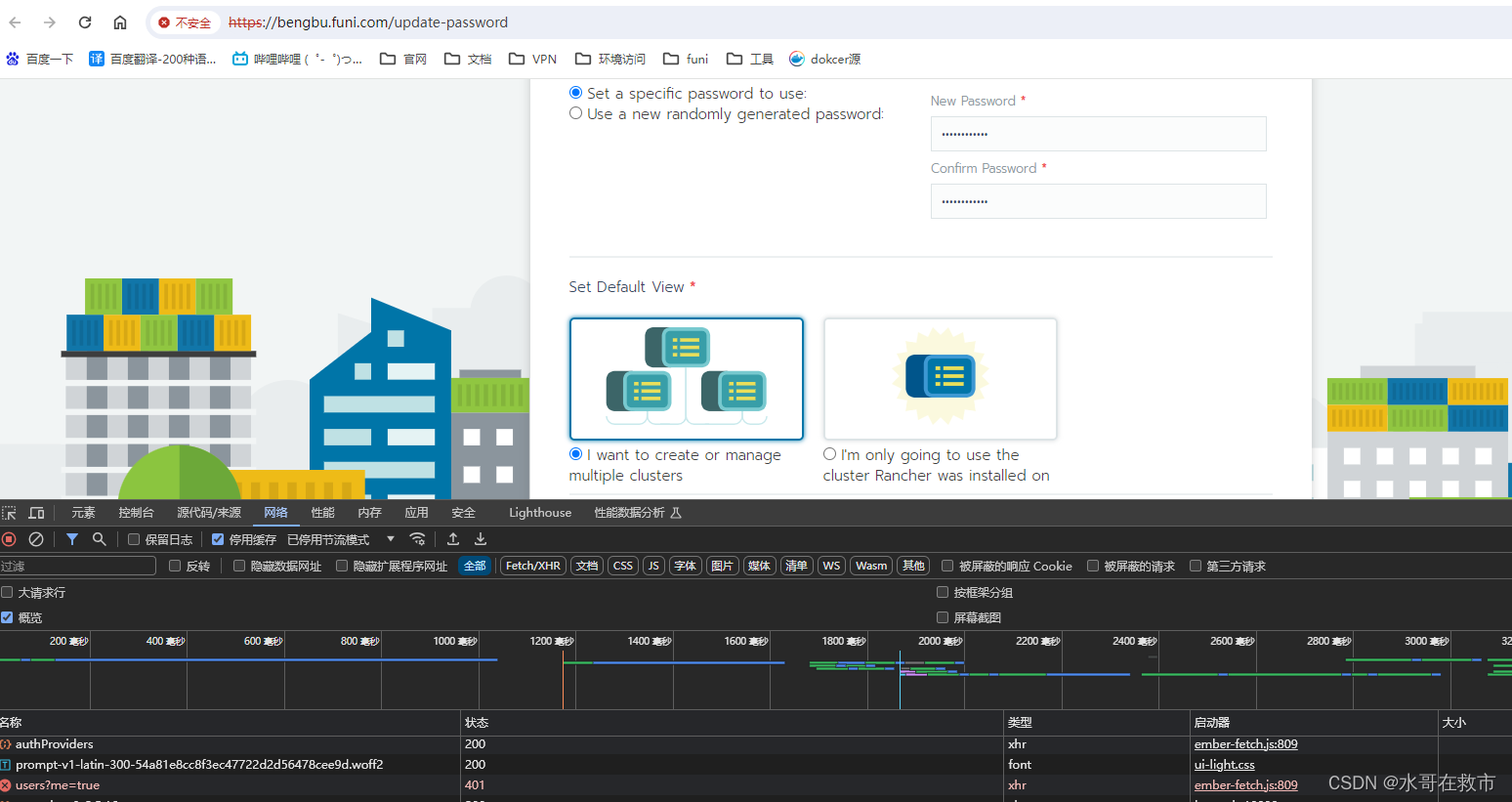

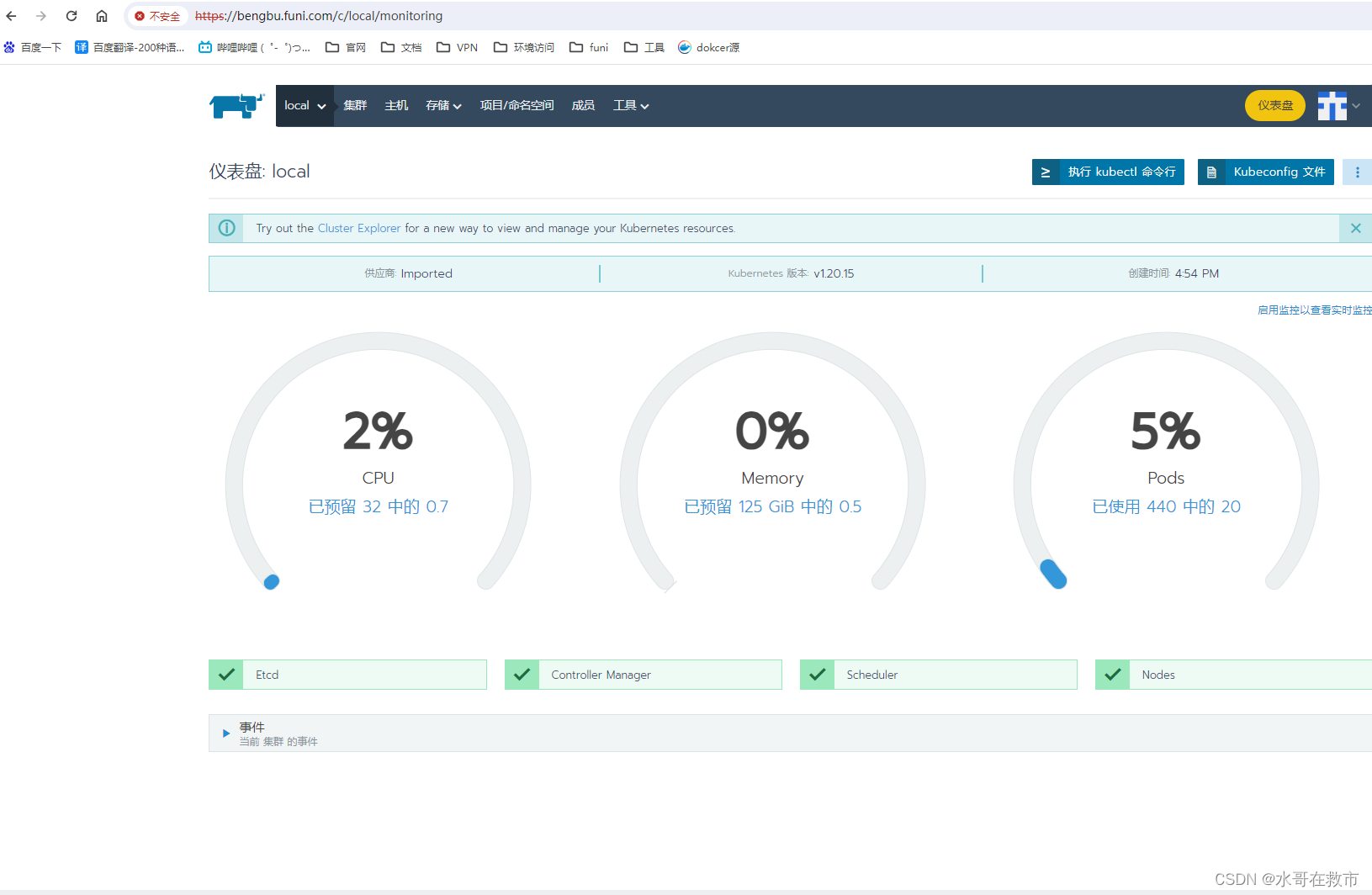

#The rancher deployment is now complete
