
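1 Dataset Processing: IMDB Movie Review Dataset

1.1 About the Dataset

The IMDB movie review dataset is a classic binary sentiment classification dataset. Reviews were selected by rating: a review with a rating ≥ 7 is treated as positive, and a review with a rating ≤ 4 is treated as negative.

The dataset contains a training set and a test set of 25,000 reviews each. Every sample is a real user review of a movie together with the sentiment (positive or negative) that the reviewer expressed.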
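The rating rule above can be written down as a tiny function. This is only an illustrative sketch: `label_from_rating` is a hypothetical helper name, and the loading code in this post does not need it because the reviews are already sorted into `pos` and `neg` folders.

```python
from typing import Optional


def label_from_rating(rating: int) -> Optional[str]:
    """Map a 1-10 rating to 'pos' / 'neg'; ratings in between are not used."""
    if rating >= 7:
        return "pos"
    if rating <= 4:
        return "neg"
    return None  # neutral ratings (5 and 6) are left out of the dataset


print([label_from_rating(r) for r in (10, 7, 5, 4, 1)])
# ['pos', 'pos', None, 'neg', 'neg']
```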

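Download: IMDB dataset

1.2 Loading the Data

The original test set is split evenly into two halves, one used as the validation set and the other as the test set. The data is stored under the ./dataset directory.

The code is as follows: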

import os
import random

import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


# Load the IMDB dataset
def load_imdb_data(path):
    assert os.path.exists(path)
    # Initialize the dataset lists
    trainset, devset, testset = [], [], []
    # Load the training set
    for label in ['pos', 'neg']:
        label_path = os.path.join(path, 'train', label)
        for filename in os.listdir(label_path):
            if filename.endswith('.txt'):
                with open(os.path.join(label_path, filename), 'r', encoding='utf-8') as f:
                    sentence = f.read().strip().lower()  # read one review and lowercase it
                    trainset.append((sentence, label))
    # Load the test set
    for label in ['pos', 'neg']:
        label_path = os.path.join(path, 'test', label)
        for filename in os.listdir(label_path):
            if filename.endswith('.txt'):
                with open(os.path.join(label_path, filename), 'r', encoding='utf-8') as f:
                    sentence = f.read().strip().lower()  # read one review and lowercase it
                    testset.append((sentence, label))
    # Randomly split off half of the test set as the validation set
    random.shuffle(testset)  # shuffle the test set
    split_index = len(testset) // 2  # index at which to split
    devset = testset[:split_index]  # first half: validation set
    testset = testset[split_index:]  # second half: test set
    return trainset, devset, testset


# Load the IMDB dataset
train_data, dev_data, test_data = load_imdb_data("./dataset/")
# Print one loaded sample to see what it looks like
print(train_data[4])  # the 5th sample of the training set

('this is not the typical mel brooks film. it was much less slapstick than most of his movies and actually had a plot that was followable. leslie ann warren made the movie, she is such a fantastic, under-rated actress. there were some moments that could have been fleshed out a bit more, and some scenes that could probably have been cut to make the room to do so, but all in all, this is worth the price to rent and see it. the acting was good overall, brooks himself did a good job without his characteristic speaking to directly to the audience. again, warren was the best actor in the movie, but "fume" and "sailor" both played their parts well.', 'pos')

As the output shows, each loaded sample has two parts: the review text and its label.
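In the loading code, `random.shuffle` runs on the global random state before any seed is set, so the validation/test split can differ between runs. Below is a minimal sketch of a repeatable split, assuming that reproducibility is wanted; `split_half` and the toy data are hypothetical names and values, not part of the original code.

```python
import random


def split_half(samples, seed=0):
    """Shuffle a copy of `samples` with a private RNG and cut it into two halves."""
    rng = random.Random(seed)   # local generator, leaves the global random state alone
    shuffled = list(samples)    # copy, so the caller's list keeps its order
    rng.shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


# Toy stand-in for the 25,000 (text, label) pairs of the test set
toy_test = [(f"review {i}", "pos" if i % 2 else "neg") for i in range(10)]
dev, test = split_half(toy_test, seed=0)
print(len(dev), len(test))  # 5 5
```

Calling `split_half` again with the same seed returns the same two halves, so dev and test accuracy stay comparable across runs.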

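1.3 Building the Dataset Class

The IMDBDataset class manages the data. Its input is a text sequence. Each word is first converted to its ID in the vocabulary (this is what the words_to_id method of IMDBDataset does), and that ID is later used to look up the word's embedding vector.

The vocabulary maps every word of a sequence to an integer ID, which can then be turned into a word vector. A word that does not appear in the vocabulary is replaced by [UNK]. words_to_id performs the conversion with a hash table (a Python dict). The vocabulary used in this experiment is the imdb.vocab file that ships with the dataset. Note that the original imdb.vocab contains no [UNK] or [PAD] entries, so they have to be added manually.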
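As a rough illustration of the mapping, the sketch below builds a word-to-ID dict with the two special tokens placed first and converts a sentence. The helper names and the toy vocabulary are assumptions for illustration only. Putting [PAD] at ID 0 is likewise an assumption, chosen so that it agrees with the `pad_val=0` default and `padding_idx=0` used later in this post.

```python
def build_word2id(vocab_words, specials=("[PAD]", "[UNK]")):
    """Return a word -> ID dict with the special tokens placed first."""
    word2id = {}
    for word in list(specials) + list(vocab_words):
        if word not in word2id:          # keep the first ID if a word repeats
            word2id[word] = len(word2id)
    return word2id


def words_to_ids(text, word2id):
    """Split on spaces and map each word to its ID, falling back to [UNK]."""
    unk_id = word2id["[UNK]"]
    return [word2id.get(word, unk_id) for word in text.split(" ")]


toy_vocab = ["the", "and", "movie", "great"]   # stand-in for the lines of imdb.vocab
word2id = build_word2id(toy_vocab)
print(word2id["[PAD]"], word2id["[UNK]"])             # 0 1
print(words_to_ids("the movie was great", word2id))   # [2, 4, 1, 5]
```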

from torch.utils.data import Dataset


class IMDBDataset(Dataset):
    def __init__(self, examples, word2id_dict):
        super(IMDBDataset, self).__init__()
        self.word2id_dict = word2id_dict
        self.examples = self.words_to_id(examples)

    def words_to_id(self, examples):
        tmp_examples = []
        for idx, example in enumerate(examples):
            seq, label = example
            # Map each word to its ID in the dict; words missing from the dict get the ID of [UNK]
            seq = [self.word2id_dict.get(word, self.word2id_dict['[UNK]']) for word in seq.split(" ")]
            # Map the label: 'pos' -> 1, 'neg' -> 0
            label = 1 if label == 'pos' else 0
            tmp_examples.append([seq, label])
        return tmp_examples

    def __getitem__(self, idx):
        seq, label = self.examples[idx]
        return seq, label

    def __len__(self):
        return len(self.examples)


def load_vocab(path):
    assert os.path.exists(path)  # make sure the vocabulary file exists
    words = []  # list of the words in the vocabulary
    with open(path, "r", encoding="utf-8") as f:
        words = f.readlines()  # read all lines of the file
        words = [word.strip() for word in words if word.strip()]  # strip whitespace and drop empty lines
        word2id = dict(zip(words, range(len(words))))  # map each word to its line index
    return word2id


# Load the vocabulary
word2id_dict = load_vocab("./dataset/imdb.vocab")
# Instantiate the datasets
train_set = IMDBDataset(train_data, word2id_dict)
dev_set = IMDBDataset(dev_data, word2id_dict)
test_set = IMDBDataset(test_data, word2id_dict)
print('Number of training samples:', len(train_set))
print('Sample:', train_set[4])

Output:

Number of training samples: 25000

Sample: ([11, 7, 21, 2, 764, 3633, 2822, 0, 8, 13, 74, 324, 2706, 72, 89, 5, 25, 101, 3, 161, 67, 4, 113, 12, 13, 0, 2750, 1900, 3725, 92, 2, 0, 53, 7, 138, 4, 0, 13617, 0, 39, 69, 49, 369, 12, 97, 26, 75, 7239, 46, 4, 221, 0, 3, 49, 137, 12, 97, 234, 26, 75, 644, 6, 96, 2, 667, 6, 83, 0, 18, 30, 9, 0, 11, 7, 283, 2, 1766, 6, 859, 3, 66, 0, 2, 111, 13, 50, 0, 2822, 302, 119, 4, 50, 284, 202, 25, 7517, 1409, 6, 2475, 6, 2, 0, 0, 3725, 13, 2, 117, 266, 9, 2, 0, 18, 0, 3, 0, 192, 248, 65, 512, 0], 1)

So the sample train_set[4], whose raw form is:

('this is not the typical mel brooks film. it was much less slapstick than most of his movies and actually had a plot that was followable. leslie ann warren made the movie, she is such a fantastic, under-rated actress. there were some moments that could have been fleshed out a bit more, and some scenes that could probably have been cut to make the room to do so, but all in all, this is worth the price to rent and see it. the acting was good overall, brooks himself did a good job without his characteristic speaking to directly to the audience. again, warren was the best actor in the movie, but "fume" and "sailor" both played their parts well.', 'pos')

has been converted to the vocabulary IDs of its words:

([11, 7, 21, 2, 764, 3633, 2822, 0, 8, 13, 74, 324, 2706, 72, 89, 5, 25, 101, 3, 161, 67, 4, 113, 12, 13, 0, 2750, 1900, 3725, 92, 2, 0, 53, 7, 138, 4, 0, 13617, 0, 39, 69, 49, 369, 12, 97, 26, 75, 7239, 46, 4, 221, 0, 3, 49, 137, 12, 97, 234, 26, 75, 644, 6, 96, 2, 667, 6, 83, 0, 18, 30, 9, 0, 11, 7, 283, 2, 1766, 6, 859, 3, 66, 0, 2, 111, 13, 50, 0, 2822, 302, 119, 4, 50, 284, 202, 25, 7517, 1409, 6, 2475, 6, 2, 0, 0, 3725, 13, 2, 117, 266, 9, 2, 0, 18, 0, 3, 0, 192, 248, 65, 512, 0], 1)

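1.4 Wrapping a DataLoader

Next, build the corresponding DataLoader for iterating over the data in batches.

Main functions:

- Length limit: sequence lengths must be kept within a certain range so that a few very long samples do not hurt training as a whole. The max_seq_len parameter truncates texts that are too long.

- Length padding: a neural network usually needs all sequences in a batch to have the same length, but batching typically puts sequences of different lengths together, so they have to be padded. The maximum sequence length in the batch is computed first, and shorter sequences are then filled with the meaningless placeholder [PAD] up to that length.

1.4.1 The collate_fn Function

Define a collate_fn function that truncates and pads the data. It can be passed to the DataLoader as a callback: before returning a batch, the DataLoader calls it to process the data, and it returns the processed sequences together with their labels.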

def collate_fn(batch_data, pad_val=0, max_seq_len=256):
    seqs, seq_lens, labels = [], [], []
    max_len = 0
    for example in batch_data:
        seq, label = example
        # Truncate the sequence
        seq = seq[:max_seq_len]
        # Store the truncated sequence, its length and its label
        seqs.append(seq)
        seq_lens.append(len(seq))
        labels.append(label)
        # Track the longest sequence in the batch
        max_len = max(max_len, len(seq))
    # Pad every sequence to the maximum length of the batch
    for i in range(len(seqs)):
        seqs[i] = seqs[i] + [pad_val] * (max_len - len(seqs[i]))
    # seq_lens stays on the CPU; the sequences and labels are moved to `device`
    return (torch.tensor(seqs).to(device), torch.tensor(seq_lens)), torch.tensor(labels).to(device)

To test collate_fn, set max_seq_len to 5, define two samples with sequence lengths 6 and 3, and pass them to the function:

# ======= Test =======
max_seq_len = 5
batch_data = [[[1, 2, 3, 4, 5, 6], 1], [[2, 4, 6], 0]]
(seqs, seq_lens), labels = collate_fn(batch_data, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)
print("seqs: ", seqs)
print("seq_lens: ", seq_lens)
print("labels: ", labels)

Output:

seqs: tensor([[1, 2, 3, 4, 5],
[2, 4, 6, 0, 0]], device='cuda:0')

seq_lens: tensor([5, 3])

labels: tensor([1, 0], device='cuda:0')

The sequence of length 6 was truncated to 5 and the sequence of length 3 was padded to 5. The function also returns the length of each sequence without its [PAD] positions.

1.4.2 Wrapping the DataLoader

Pass collate_fn to the DataLoader as a callback, so that every batch is processed by collate_fn before it is returned:

from functools import partial

max_seq_len = 256
batch_size = 128
# Bind the padding ID and the maximum length once, so the DataLoader only has to pass the batch
collate_fn = partial(collate_fn, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)
train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size,
                                           shuffle=True, drop_last=False, collate_fn=collate_fn)
dev_loader = torch.utils.data.DataLoader(dev_set, batch_size=batch_size,
                                         shuffle=False, drop_last=False, collate_fn=collate_fn)
test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size,
                                          shuffle=False, drop_last=False, collate_fn=collate_fn)

2 Model Construction

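The overall model used in this experiment consists of four layers:

(1) Embedding layer: vectorizes the input number sequence (the IDs that the words were mapped to), i.e. maps every ID to a vector. Implemented with the PyTorch API torch.nn.Embedding.

(2) Bidirectional LSTM layer: takes the vector sequence and updates the recurrent units in both the forward and the backward direction. Implemented with the PyTorch API torch.nn.LSTM (setting bidirectional=True when defining the LSTM makes it bidirectional).

(3) Pooling layer: averages the hidden states of the bidirectional LSTM over all positions and uses the result as the representation of the whole sentence.

(4) Output layer: outputs the classification scores (logits) for each class. Implemented with torch.nn.Linear.

2.1 Pooling Layer Operator

The AveragePooling operator aggregates the hidden states. It first builds a mask matrix from the sequence-length vector and uses it to mask out the vectors at [PAD] positions in the text sequence, then sums the vectors of the sequence and divides by the true length. When the LSTM is given the true lengths of the batch, it already returns zero vectors at [PAD] positions; the pooling layer applies its own mask anyway so that it stays decoupled from whichever sequence model comes before it.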
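To make the arithmetic of the masked average visible, here is a framework-free sketch on plain Python lists. `masked_mean` and the toy batch are hypothetical; the PyTorch operator in this post does the same thing with broadcasting instead of loops.

```python
def masked_mean(sequence_output, sequence_length):
    """Average each sequence's vectors over its first `length` positions only."""
    pooled = []
    for vectors, length in zip(sequence_output, sequence_length):
        # 1.0 for real positions, 0.0 for padded positions
        mask = [1.0 if t < length else 0.0 for t in range(len(vectors))]
        dim = len(vectors[0])
        summed = [sum(m * vec[d] for m, vec in zip(mask, vectors)) for d in range(dim)]
        pooled.append([s / length for s in summed])   # divide by the true length
    return pooled


batch = [
    [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]],   # true length 2, the 3rd vector is padding
    [[2.0, 0.0], [4.0, 2.0], [6.0, 4.0]],   # true length 3
]
print(masked_mean(batch, [2, 3]))   # [[2.0, 3.0], [4.0, 2.0]]
```

The padded vector `[9.0, 9.0]` has no effect on the first result because its mask entry is 0 and the divisor is the true length 2, not the padded length 3.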

The implementation is as follows:

import torch.nn as nn


class AveragePooling(nn.Module):
    def __init__(self):
        super(AveragePooling, self).__init__()

    def forward(self, sequence_output, sequence_length):
        # sequence_length is a tensor of true lengths with shape [batch_size].
        # Use the device of sequence_output instead of hard-coding 'cuda',
        # so the layer also runs on a CPU-only machine.
        device = sequence_output.device
        sequence_length = sequence_length.unsqueeze(-1).to(device=device, dtype=torch.float32)
        # Build the mask from sequence_length; it hides the padded positions
        max_len = sequence_output.shape[1]
        mask = torch.arange(max_len, device=device) < sequence_length
        mask = mask.to(torch.float32).unsqueeze(-1)
        # Zero out the padded part of the sequence
        sequence_output = torch.multiply(sequence_output, mask)
        # Average the vectors over the true length of each sequence
        batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length)
        return batch_mean_hidden

2.2 Full Model

# =================== Full model ===================
class Model_BiLSTM_FC(nn.Module):
    def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):
        super(Model_BiLSTM_FC, self).__init__()
        # Vocabulary size
        self.num_embeddings = num_embeddings
        # Dimension of the word vectors
        self.input_size = input_size
        # Number of LSTM hidden units
        self.hidden_size = hidden_size
        # Number of sentiment classes
        self.num_classes = num_classes
        # Embedding layer (padding_idx=0 assumes that [PAD] has ID 0 in the vocabulary)
        self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)
        # Bidirectional LSTM layer
        self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True, bidirectional=True)
        # Pooling layer
        self.average_layer = AveragePooling()
        # Output layer; the forward and backward hidden states are concatenated, hence hidden_size * 2
        self.output_layer = nn.Linear(hidden_size * 2, num_classes)

    def forward(self, inputs):
        # Split the model input into the token IDs and the true sequence lengths
        input_ids, sequence_length = inputs
        # Look up the word vectors
        inputs_emb = self.embedding_layer(input_ids)
        # Pack the padded batch so the LSTM skips the [PAD] positions (lengths must be on the CPU)
        packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,
                                                         enforce_sorted=False)
        # Run the LSTM
        packed_output, _ = self.lstm_layer(packed_input)
        # Unpack the output back into a padded tensor
        sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)
        # Aggregate sequence_output with the pooling layer
        batch_mean_hidden = self.average_layer(sequence_output, sequence_length)
        # Output the classification logits
        logits = self.output_layer(batch_mean_hidden)
        return logits

3 Model Training

import random
import time

import numpy as np

# Runner is a local helper module whose code is not listed in this post
from Runner import RunnerV3, Accuracy, plot_training_loss_acc

# Fix the random seeds
np.random.seed(0)
random.seed(0)
torch.manual_seed(0)  # torch.seed() would pick a non-deterministic seed instead
# Number of training epochs
num_epochs = 1
# Learning rate
learning_rate = 0.001
# Number of embeddings = vocabulary size
num_embeddings = len(word2id_dict)
# Dimension of the embedding vectors
input_size = 256
# Dimension of the LSTM hidden state
hidden_size = 256
# Path for saving the best model
save_dir = "./checkpoints/best.pdparams"
# Instantiate the model
model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to(device)
# Optimizer
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))
# Loss function
loss_fn = nn.CrossEntropyLoss()
# Evaluation metric
metric = Accuracy()
# Instantiate the Runner
runner = RunnerV3(model, optimizer, loss_fn, metric)
# Train the model
start_time = time.time()
runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10,
             save_path=save_dir)
end_time = time.time()
print("time: ", (end_time - start_time))
# ===== Plot the training and validation loss curves and the validation accuracy curve =====
# sample_step: sampling step for the training loss, i.e. plot one point out of every sample_step points
# loss_legend_loc: legend position of the loss plot
# acc_legend_loc: legend position of the accuracy plot
plot_training_loss_acc(runner, fig_size=(16, 6), sample_step=10, loss_legend_loc="lower left",
                       acc_legend_loc="lower right")

Output with num_epochs = 1:

[Evaluate] best accuracy performence has been updated: 0.81120 --> 0.81880

[Train] epoch: 0/1, step: 190/196, loss: 0.31320

[Evaluate] dev score: 0.81912, dev loss: 0.40167

[Evaluate] best accuracy performence has been updated: 0.81880 --> 0.81912

[Evaluate] dev score: 0.81728, dev loss: 0.40298

[Train] Training done!

time: 124.63010001182556

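The model had not converged yet: both the training loss and the validation loss were still decreasing, so I increased the number of epochs to 3.

Output with num_epochs = 3: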

[Train] epoch: 0/3, step: 0/588, loss: 0.69394

[Train] epoch: 0/3, step: 10/588, loss: 0.70491

[Evaluate] dev score: 0.52592, dev loss: 0.68407

[Evaluate] best accuracy performence has been updated: 0.00000 --> 0.52592

[Train] epoch: 0/3, step: 20/588, loss: 0.66260

[Evaluate] dev score: 0.62080, dev loss: 0.66250

[Evaluate] best accuracy performence has been updated: 0.52592 --> 0.62080

[Train] epoch: 0/3, step: 30/588, loss: 0.61762

[Evaluate] dev score: 0.62880, dev loss: 0.64522

......

.....

[Train] epoch: 1/3, step: 370/588, loss: 0.31063

[Evaluate] dev score: 0.84656, dev loss: 0.36641

[Evaluate] best accuracy performence has been updated: 0.84272 --> 0.84656

[Train] epoch: 1/3, step: 380/588, loss: 0.30818

[Evaluate] dev score: 0.84264, dev loss: 0.37259

[Train] epoch: 1/3, step: 390/588, loss: 0.19482

[Evaluate] dev score: 0.84600, dev loss: 0.35535

......

[Evaluate] best accuracy performence has been updated: 0.85048 --> 0.85088

[Train] epoch: 2/3, step: 560/588, loss: 0.25688

[Evaluate] dev score: 0.84792, dev loss: 0.37273

[Train] epoch: 2/3, step: 570/588, loss: 0.12472

[Evaluate] dev score: 0.84856, dev loss: 0.36705

[Train] epoch: 2/3, step: 580/588, loss: 0.11621

[Evaluate] dev score: 0.84848, dev loss: 0.38805

[Evaluate] dev score: 0.84976, dev loss: 0.37620

[Train] Training done!

time: 356.31542706489563

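The validation loss has levelled off (around 0.37) while the training loss keeps falling, which is an early sign of overfitting, so there is no need to increase the number of epochs further. Over the three epochs the validation accuracy kept rising, with the best value reaching 0.85088.

4 Model Evaluation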

# ======== Model evaluation ========
model_path = "./checkpoints/best.pdparams"
runner.load_model(model_path)
accuracy, _ = runner.evaluate(test_loader)
print(f"Evaluate on test set, Accuracy: {accuracy:.5f}")

Output with num_epochs = 1:

Evaluate on test set, Accuracy: 0.81352

Output with num_epochs = 3:

Evaluate on test set, Accuracy: 0.84704

Comparing the two runs, the model trained with num_epochs = 3 performs better, reaching an accuracy of 0.847 on the test set.

5 Model Prediction

# ======= Model prediction =======
id2label = {0: "negative", 1: "positive"}
text = "this movie is so great. I watched it three times already"
# Process a single text
sentence = text.split(" ")
words = [word2id_dict[word] if word in word2id_dict else word2id_dict['[UNK]'] for word in sentence]
words = words[:max_seq_len]
sequence_length = torch.tensor([len(words)], dtype=torch.int64)
words = torch.tensor(words, dtype=torch.int64).unsqueeze(0)
# Predict with the model
logits = runner.predict((words.to(device), sequence_length.to(device)))
max_label_id = torch.argmax(logits, dim=-1).cpu().numpy()[0]
pred_label = id2label[max_label_id]
print("Label: ", pred_label)

Output:

Label: positive

The output shows that the model's prediction is correct.
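The training reviews were lowercased when they were loaded, whereas the prediction snippet feeds the text as typed. Below is a minimal sketch of an encoding helper that applies the same steps as training, assuming that is what is wanted; `encode` and `toy_word2id` are hypothetical stand-ins for the real vocabulary.

```python
def encode(text, word2id, max_seq_len=256):
    """Lowercase, split on spaces, map to IDs (unknown -> [UNK]) and truncate."""
    unk_id = word2id["[UNK]"]
    ids = [word2id.get(word, unk_id) for word in text.strip().lower().split(" ")]
    ids = ids[:max_seq_len]
    return ids, len(ids)


# Toy vocabulary for illustration only
toy_word2id = {"[PAD]": 0, "[UNK]": 1, "this": 2, "movie": 3, "is": 4, "so": 5, "i": 6}
ids, length = encode("this movie is so great. I watched it", toy_word2id)
print(ids, length)   # [2, 3, 4, 5, 1, 6, 1, 1] 8
```

Splitting on spaces leaves punctuation attached, so a token such as `great.` falls back to [UNK] unless that exact string is in the vocabulary.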

6 Complete Code

'''
@Function: Text classification with a bidirectional LSTM
@Author: lxy
@Date: 2024/12/12
'''
import os
import torch
import torch.nn as nn
from torch.utils.data import Dataset
from functools import partial
import random
import numpy as np
import time

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

'''
Dataset processing
'''


# Load the IMDB dataset
def load_imdb_data(path):
    assert os.path.exists(path)
    # Initialize the dataset lists
    trainset, devset, testset = [], [], []
    # Load the training set
    for label in ['pos', 'neg']:
        label_path = os.path.join(path, 'train', label)
        for filename in os.listdir(label_path):
            if filename.endswith('.txt'):
                with open(os.path.join(label_path, filename), 'r', encoding='utf-8') as f:
                    sentence = f.read().strip().lower()  # read one review and lowercase it
                    trainset.append((sentence, label))
    # Load the test set
    for label in ['pos', 'neg']:
        label_path = os.path.join(path, 'test', label)
        for filename in os.listdir(label_path):
            if filename.endswith('.txt'):
                with open(os.path.join(label_path, filename), 'r', encoding='utf-8') as f:
                    sentence = f.read().strip().lower()  # read one review and lowercase it
                    testset.append((sentence, label))
    # Randomly split off half of the test set as the validation set
    random.shuffle(testset)  # shuffle the test set
    split_index = len(testset) // 2  # index at which to split
    devset = testset[:split_index]  # first half: validation set
    testset = testset[split_index:]  # second half: test set
    return trainset, devset, testset


# Load the IMDB dataset
train_data, dev_data, test_data = load_imdb_data("./dataset/")
# # Print one loaded sample to see what it looks like
# print(train_data[4])  # the 5th sample of the training set

class IMDBDataset(Dataset):
    def __init__(self, examples, word2id_dict):
        super(IMDBDataset, self).__init__()
        self.word2id_dict = word2id_dict
        self.examples = self.words_to_id(examples)

    def words_to_id(self, examples):
        tmp_examples = []
        for idx, example in enumerate(examples):
            seq, label = example
            # Map each word to its ID in the dict; words missing from the dict get the ID of [UNK]
            seq = [self.word2id_dict.get(word, self.word2id_dict['[UNK]']) for word in seq.split(" ")]
            # Map the label: 'pos' -> 1, 'neg' -> 0
            label = 1 if label == 'pos' else 0
            tmp_examples.append([seq, label])
        return tmp_examples

    def __getitem__(self, idx):
        seq, label = self.examples[idx]
        return seq, label

    def __len__(self):
        return len(self.examples)


# =============== ID mapping ===============
def load_vocab(path):
    assert os.path.exists(path)  # make sure the vocabulary file exists
    words = []  # list of the words in the vocabulary
    with open(path, "r", encoding="utf-8") as f:
        words = f.readlines()  # read all lines of the file
        words = [word.strip() for word in words if word.strip()]  # strip whitespace and drop empty lines
        word2id = dict(zip(words, range(len(words))))  # map each word to its line index
    return word2id


# Load the vocabulary
word2id_dict = load_vocab("./dataset/imdb.vocab")
# Instantiate the datasets
train_set = IMDBDataset(train_data, word2id_dict)
dev_set = IMDBDataset(dev_data, word2id_dict)
test_set = IMDBDataset(test_data, word2id_dict)
# print('Number of training samples:', len(train_set))
# print('Sample:', train_set[4])

def collate_fn(batch_data, pad_val=0, max_seq_len=256):
    seqs, seq_lens, labels = [], [], []
    max_len = 0
    for example in batch_data:
        seq, label = example
        # Truncate the sequence
        seq = seq[:max_seq_len]
        # Store the truncated sequence, its length and its label
        seqs.append(seq)
        seq_lens.append(len(seq))
        labels.append(label)
        # Track the longest sequence in the batch
        max_len = max(max_len, len(seq))
    # Pad every sequence to the maximum length of the batch
    for i in range(len(seqs)):
        seqs[i] = seqs[i] + [pad_val] * (max_len - len(seqs[i]))
    # seq_lens stays on the CPU; the sequences and labels are moved to `device`
    return (torch.tensor(seqs).to(device), torch.tensor(seq_lens)), torch.tensor(labels).to(device)


# ======= Test =======
# max_seq_len = 5
# batch_data = [[[1, 2, 3, 4, 5, 6], 1], [[2, 4, 6], 0]]
# (seqs, seq_lens), labels = collate_fn(batch_data, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)
# print("seqs: ", seqs)
# print("seq_lens: ", seq_lens)
# print("labels: ", labels)

# =============== Wrap the DataLoaders ===============
max_seq_len = 256
batch_size = 128
# Bind the padding ID and the maximum length once, so the DataLoader only has to pass the batch
collate_fn = partial(collate_fn, pad_val=word2id_dict["[PAD]"], max_seq_len=max_seq_len)
train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size,
                                           shuffle=True, drop_last=False, collate_fn=collate_fn)
dev_loader = torch.utils.data.DataLoader(dev_set, batch_size=batch_size,
                                         shuffle=False, drop_last=False, collate_fn=collate_fn)
test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size,
                                          shuffle=False, drop_last=False, collate_fn=collate_fn)

'''
End of dataset processing
'''

'''
Model construction
'''


# ====================== Pooling layer ======================
class AveragePooling(nn.Module):
    def __init__(self):
        super(AveragePooling, self).__init__()

    def forward(self, sequence_output, sequence_length):
        # sequence_length is a tensor of true lengths with shape [batch_size].
        # Use the device of sequence_output instead of hard-coding 'cuda',
        # so the layer also runs on a CPU-only machine.
        device = sequence_output.device
        sequence_length = sequence_length.unsqueeze(-1).to(device=device, dtype=torch.float32)
        # Build the mask from sequence_length; it hides the padded positions
        max_len = sequence_output.shape[1]
        mask = torch.arange(max_len, device=device) < sequence_length
        mask = mask.to(torch.float32).unsqueeze(-1)
        # Zero out the padded part of the sequence
        sequence_output = torch.multiply(sequence_output, mask)
        # Average the vectors over the true length of each sequence
        batch_mean_hidden = torch.divide(torch.sum(sequence_output, dim=1), sequence_length)
        return batch_mean_hidden


# =================== Full model ===================
class Model_BiLSTM_FC(nn.Module):
    def __init__(self, num_embeddings, input_size, hidden_size, num_classes=2):
        super(Model_BiLSTM_FC, self).__init__()
        # Vocabulary size
        self.num_embeddings = num_embeddings
        # Dimension of the word vectors
        self.input_size = input_size
        # Number of LSTM hidden units
        self.hidden_size = hidden_size
        # Number of sentiment classes
        self.num_classes = num_classes
        # Embedding layer (padding_idx=0 assumes that [PAD] has ID 0 in the vocabulary)
        self.embedding_layer = nn.Embedding(num_embeddings, input_size, padding_idx=0)
        # Bidirectional LSTM layer
        self.lstm_layer = nn.LSTM(input_size, hidden_size, batch_first=True, bidirectional=True)
        # Pooling layer
        self.average_layer = AveragePooling()
        # Output layer; the forward and backward hidden states are concatenated, hence hidden_size * 2
        self.output_layer = nn.Linear(hidden_size * 2, num_classes)

    def forward(self, inputs):
        # Split the model input into the token IDs and the true sequence lengths
        input_ids, sequence_length = inputs
        # Look up the word vectors
        inputs_emb = self.embedding_layer(input_ids)
        # Pack the padded batch so the LSTM skips the [PAD] positions (lengths must be on the CPU)
        packed_input = nn.utils.rnn.pack_padded_sequence(inputs_emb, sequence_length.cpu(), batch_first=True,
                                                         enforce_sorted=False)
        # Run the LSTM
        packed_output, _ = self.lstm_layer(packed_input)
        # Unpack the output back into a padded tensor
        sequence_output, _ = nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)
        # Aggregate sequence_output with the pooling layer
        batch_mean_hidden = self.average_layer(sequence_output, sequence_length)
        # Output the classification logits
        logits = self.output_layer(batch_mean_hidden)
        return logits

'''
End of model construction
'''

'''
Model training
'''
# =============== Model training ===============
# Runner is a local helper module whose code is not listed in this post
from Runner import RunnerV3, Accuracy, plot_training_loss_acc

# Fix the random seeds
np.random.seed(0)
random.seed(0)
torch.manual_seed(0)  # torch.seed() would pick a non-deterministic seed instead
# Number of training epochs
num_epochs = 1
# Learning rate
learning_rate = 0.001
# Number of embeddings = vocabulary size
num_embeddings = len(word2id_dict)
# Dimension of the embedding vectors
input_size = 256
# Dimension of the LSTM hidden state
hidden_size = 256
# Path for saving the best model
save_dir = "./checkpoints/best.pdparams"
# Instantiate the model
model = Model_BiLSTM_FC(num_embeddings, input_size, hidden_size).to(device)
# Optimizer
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))
# Loss function
loss_fn = nn.CrossEntropyLoss()
# Evaluation metric
metric = Accuracy()
# Instantiate the Runner
runner = RunnerV3(model, optimizer, loss_fn, metric)
# Train the model
start_time = time.time()
runner.train(train_loader, dev_loader, num_epochs=num_epochs, eval_steps=10, log_steps=10,
             save_path=save_dir)
end_time = time.time()
print("time: ", (end_time - start_time))
# ===== Plot the training and validation loss curves and the validation accuracy curve =====
# sample_step: sampling step for the training loss, i.e. plot one point out of every sample_step points
# loss_legend_loc: legend position of the loss plot
# acc_legend_loc: legend position of the accuracy plot
plot_training_loss_acc(runner, fig_size=(16, 6), sample_step=10, loss_legend_loc="lower left",
                       acc_legend_loc="lower right")

'''
End of model training
'''
# ==================== Model evaluation ====================
model_path = "./checkpoints/best.pdparams"
runner.load_model(model_path)
accuracy, _ = runner.evaluate(test_loader)
print(f"Evaluate on test set, Accuracy: {accuracy:.5f}")
# ==================== Model prediction ====================
id2label = {0: "negative", 1: "positive"}
text = "this movie is so great. I watched it three times already"
# Process a single text
sentence = text.split(" ")
words = [word2id_dict[word] if word in word2id_dict else word2id_dict['[UNK]'] for word in sentence]
words = words[:max_seq_len]
sequence_length = torch.tensor([len(words)], dtype=torch.int64)
words = torch.tensor(words, dtype=torch.int64).unsqueeze(0)
# Predict with the model
logits = runner.predict((words.to(device), sequence_length.to(device)))
max_label_id = torch.argmax(logits, dim=-1).cpu().numpy()[0]
pred_label = id2label[max_label_id]
print("Label: ", pred_label)

7 Extended Experiments

8 总结

- 基于双向LSTM模型完成文本分类任务

- 在这次实验中又了解到一个新的库函数为from functools import partial,当我们需要经常调用某个函数时,但是其中某些参数是已知的固定值,这个时候就可以考虑使用partial函数,而不用每次都重复传入那些固定的参数值。感觉有点抽象,举个例子:

如果有函数def add(x, y): return x + y,使用partial(add, x=3)就会创建一个新函数,这个新函数调用时只需要传入y的值,相当于预先给add函数的x参数固定为了3。

在本次实验中的collate_fn函数,就将其中的pad_val参数绑定为word 2id_dict ["[PAD]"]这个填充值,将max_seq_len参数绑定为max_seq_len变量所代表的最大序列长度值。之后的代码流程中,调用这个新的collate_fn时,就不需要再重复传入这两个已经固定好的参数值了,只需完成其他必要的参数传入操作。

- 实验中我印象最为深刻的操作是掩码的实现,具体的实现步骤我在汇聚层流程图中也详细写出,这里再简单总结一下:为了方便以批量的形式将数据输入模型,需要把不同长度的序列统一成相同长度。实验采用的做法是对较短的序列进行[PAD]填充,将其补到和该批次中最长序列一样长。解耦在这里就是让汇聚层区分出从输出的包含 [PAD] 位置的向量,防止因为这些无意义的填充位置输出而影响汇聚效果。所有就有了掩码操作,采用一种标记机制,对应 [PAD] 位置的元素和原始有效数据位置的元素进行不同的标记来明确区分[PAD] 位置和真实数据位置(实验用的true和false)。在汇聚层进行具体运算时,可以依据这个掩码忽略掉 [PAD] 位置的数据。

- 实验还是比较简单的,嵌入层和BiLSTM都是调用的API,主要是数据的处理和汇聚层的实现,IMDB数据集直接在官网下载的,划分过程和paddle是不一样的,需要注意的一点就是在单词映射的哈希表中添加[PAD]和[UNK]cv,原始的映射中是没有的!!

4、另外再说我实验中遇到的一个很头疼的问题,先说挂Gpu上运行这一步,一直报错,显示模型和数据不在同一设备,最后暴力解决添加了全局配置torch.set _default_tens or_type('torch.cuda.FloatTensor') ,只要是tensor类型的我就给转移到gpu上了,然而并不是我想的那么顺利,这次实验的数据集相对较大,如下:

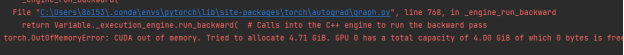

导致我挂GPU上一直爆内存,如下:

无奈之下只能去用服务器远程连接,因为之前zc学长培训教过,很顺利,数据一会就跑完了,比自己的GPU好使,但是在远程服务器上不能做图形可视化,只能跑数据,所以我就改了代码,记录每一步的loss,将其保存到了loss.txt文件,最后利用chatgpt做了一下loss的可视化。
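The `functools.partial` behaviour described in the summary above can be tried out with a few lines. This is only an illustrative sketch: `pad_batch` is a hypothetical toy stand-in for `collate_fn` that works on plain lists and has no tensors or labels.

```python
from functools import partial


def add(x, y):
    return x + y


add3 = partial(add, x=3)   # x is now fixed to 3
print(add3(y=4))           # 7 (y must be passed by keyword because x was bound by keyword)


def pad_batch(batch, pad_val=0, max_seq_len=256):
    """Truncate every sequence to max_seq_len, then pad to the longest one."""
    batch = [seq[:max_seq_len] for seq in batch]
    width = max(len(seq) for seq in batch)
    return [seq + [pad_val] * (width - len(seq)) for seq in batch]


# Bind the two fixed arguments once; afterwards only the batch is passed
pad_to_4 = partial(pad_batch, pad_val=-1, max_seq_len=4)
print(pad_to_4([[1, 2, 3, 4, 5], [6, 7]]))   # [[1, 2, 3, 4], [6, 7, -1, -1]]
```

This is the same pattern the DataLoader relies on: it calls the bound function with the batch as its only argument.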

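- Text classification with a bidirectional LSTM and attention model

- In this experiment it was again the mask that I found interesting. Unlike the previous experiment, which marks padded positions with False, this one sets the scores at [PAD] positions to -1e9, so that their Softmax outputs become 0. This effectively blocks out the meaningless [PAD] tokens, and the attention distribution is computed over the remaining elements only, which reduces the influence of these meaningless units on the attention computation. This is the same way the mask is implemented in the Transformer model.

- With the dataset and code from the previous experiment in place, this one went smoothly. The main work was implementing the attention layer. The results were a bit odd, though: the Paddle documentation says that dot-product attention works better (the attention paper also uses dot-product attention), but in my experiments the additive attention model clearly performed better (possibly because of the dataset). I also tuned the hyperparameters: because the model is fairly complex, it starts to overfit once the number of epochs exceeds 5, and even epoch=1 gives decent results. One more thing to note: the Paddle code does not shuffle the training set, so remember to shuffle it when writing your own code.

- Masks have been showing up in many places lately. While reading the ViT paper I learned a little about BERT, which also uses a masking mechanism, although I have not yet studied that model in detail. Trying to read papers really does teach me all sorts of odd bits of knowledge, and it is quite fun.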
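The -1e9 masking trick mentioned in the attention summary above can be checked numerically with a short, framework-free sketch. `masked_softmax` and the example scores are hypothetical and do not come from the attention experiment's code.

```python
import math


def masked_softmax(scores, length, neg_inf=-1e9):
    """Softmax over `scores` where positions >= length are treated as padding."""
    masked = [s if t < length else neg_inf for t, s in enumerate(scores)]
    peak = max(masked)                           # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in masked]  # exp of about -1e9 underflows to exactly 0.0
    total = sum(exps)
    return [e / total for e in exps]


# Four positions, of which only the first two are real tokens
weights = masked_softmax([2.0, 1.0, 0.5, 0.5], length=2)
print([round(w, 4) for w in weights])   # [0.7311, 0.2689, 0.0, 0.0]
```

The padded positions receive zero weight and the remaining weights still sum to 1, so the attention distribution is spread over real tokens only.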

9 References

BiLSTM references:

- Sentiment analysis: dataset source

BiLSTM + attention references:

- aistudio.baidu.com/projectdetail/8666087 (original project)

- An Easy-to-Understand Explanation of the Attention Mechanism, CSDN blog (highly recommended, I learned a lot from it)

- Two Common Forms of the Attention Mechanism: Additive Attention and Dot-product Attention
