本文来自:公众号 量化投资与机器学习

gcForest Algorithm

对于周志华教授的文章,网上已经有人做出很详细的解释啦。我们对论文进行简单描述之后,然后直接从策略开始讲起。

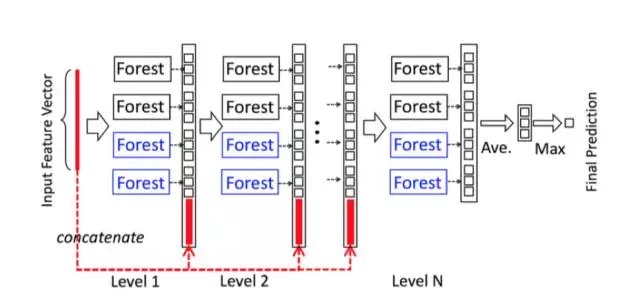

gcForest(multi-Grained Cascade forest 多粒度级联森林)是周志华教授最新提出的新的决策树集成方法。这种方法生成一个深度树集成方法(deep forest ensemble method),使用级联结构让gcForest学习。gcForest模型把训练分成两个阶段:Multi-Grained Scanning和Cascade Forest。Multi-Grained Scanning生成特征,Cascade Forest经过多个森林多层级联得出预测结果。

它的表征学习能力可以通过对高维输入数据的多粒度扫描而进行加强。串联的层数也可以通过自适应的决定从而使得模型复杂度不需要成为一个自定义的超参数,而是一个根据数据情况而自动设定的参数。值得注意的是,gcForest会比DNN有更少的超参数,更好的一点在于gcForest对参数是有非常好的鲁棒性,哪怕用默认参数也可以获得很棒的结果。

级联森林(Cascade Forest)

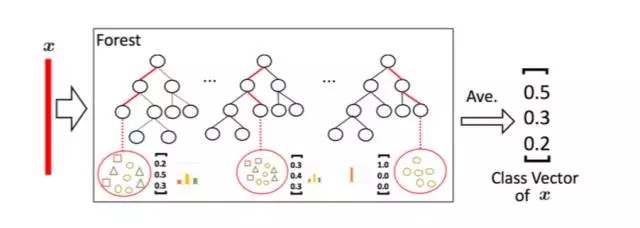

因为决策树其实是在特征空间中不断划分子空间,并且给每个子空间打上标签(分类问题就是一个类别,回归问题就是一个目标值),所以给予一条测试样本,每棵树会根据样本所在的子空间中训练样本的类别占比生成一个类别的概率分布,然后对森林内所有树的各类比例取平均,输出整个森林对各类的比例。例如下图所示,这是根据图1的三分类问题的一个简化森林,每个样本在每棵树中都会找到一条路径去找到自己对应的叶节点,而同样在这个叶节点中的训练数据很可能是有不同类别的,我们可以对不同类别进行统计获取各类的比例,然后通过对所有树的比例进行求均值生成整个森林的概率分布。

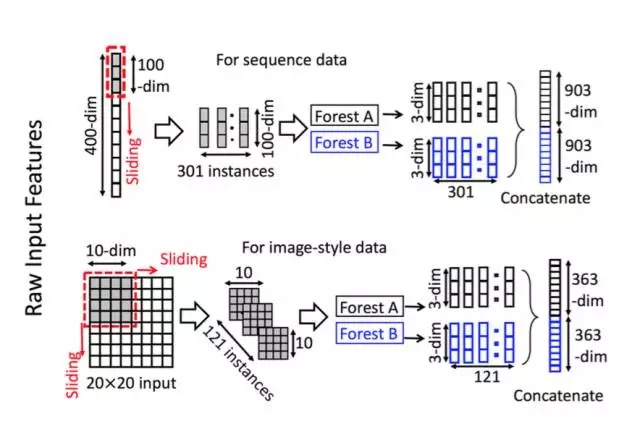

多粒度扫描

多粒度扫描其实是引用了类似CNN的一个滑动窗口,例如说我们现在有一个400维的样本输入,现在设定采样窗口是100维的,那我们可以通过逐步的采样,最终获得301个子样本(因此这里默认的采样步长是1,所以得到的子样本个数 = (400-100)/1 + 1)。如果输入的是一个20*20的图片,利用一个10*10的采样窗口,就可以获得121个子样本(对每行和每列都是 (20-10)/1 + 1 = 11,11*11 = 121)。所以,整个多粒度扫描过程就是:先输入一个完整的P维样本,然后通过一个长度为k的采样窗口进行滑动采样,得到S = (P - K)/1+1 个k维特征子样本向量,接着每个子样本都用于完全随机森林和普通随机森林的训练并在每个森林都获得一个长度为C的概率向量,这样每个森林会产生长度为S*C的表征向量(就是经过随机森林转换并拼接的概率向量),最后把每层的F个森林的结果拼接在一起得到本层输出。

算法实现

鉴于此,在Github上,已经有人实现了算法代码。在这里我们提供一个基于python3的代码实现方法。选择采用scikit学习语法以方便使用,下面将介绍如何使用它。

GCForest.py源码如下,首先需要将此模块导入到根目录并命名为GCForest.py,当然最好是从github克隆下来。

gcForest in Python

Status : under development

gcForest is an algorithm suggested in Zhou and Feng 2017. It uses a multi-grain scanning approach for data slicing and a cascade structure of multiple random forests layers (see paper for details).

gcForest has been first developed as a Classifier and designed such that the multi-grain scanning module and the cascade structure can be used separately. During development I’ve paid special attention to write the code in the way that future parallelization should be pretty straightforward to implement.

Prerequisites

The present code has been developed under python3.x. You will need to have the following installed on your computer to make it work :

- Python 3.x

- Numpy >= 1.12.0

- Scikit-learn >= 0.18.1

- jupyter >= 1.0.0 (only useful to run the tuto notebook)

You can install all of them using pip install :

$ pip3 install requirements.txt

Using gcForest

The syntax uses the scikit learn style with a .fit() function to train the algorithm and a .predict() function to predict new values class. You can find two examples in the jupyter notebook included in the repository.

from GCForest import *

gcf = gcForest( **kwargs )

gcf.fit(X_train, y_train)

gcf.predict(X_test)Notes

I wrote the code from scratch in two days and even though I have tested it on several cases I cannot certify that it is a 100% bug free obviously. Feel free to test it and send me your feedback about any improvement and/or modification!

Known Issues

Memory comsuption when slicing data There is now a short naive calculation illustrating the issue in the notebook. So far the input data slicing is done all in a single step to train the Random Forest for the Multi-Grain Scanning. The problem is that it might requires a lot of memory depending on the size of the data set and the number of slices asked resulting in memory crashes (at least on my Intel Core 2 Duo).

I have recently improved the memory usage (from version 0.1.4) when slicing the data but will keep looking at ways to optimize the code.

OOB score error During the Random Forests training the Out-Of-Bag (OOB) technique is used for the prediction probabilities. It was found that this technique can sometimes raises an error when one or several samples is/are used for all trees training.

A potential solution consists in using cross validation instead of OOB score although it slows down the training. Anyway, simply increasing the number of trees and re-running the training (and crossing fingers) is often enough.

Built With

- PyCharm community edition

- memory_profiler libra

License

This project is licensed under the MIT License (see LICENSE for details)

Early Results

(will be updated as new results come out)

- Scikit-learn handwritten digits classification :

training time ~ 5min

accuracy ~ 98%

部分代码:

import itertools

import numpy as np

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

__author__ = "Pierre-Yves Lablanche"

__email__ = "plablanche@aims.ac.za"

__license__ = "MIT"

__version__ = "0.1.3"

__status__ = "Development"

# noinspection PyUnboundLocalVariable

class gcForest(object):

def __init__(self, shape_1X=None, n_mgsRFtree=30, window=None, stride=1,

cascade_test_size=0.2, n_cascadeRF=2, n_cascadeRFtree=101, cascade_layer=np.inf,

min_samples_mgs=0.1, min_samples_casca

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

1013

1013

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?