
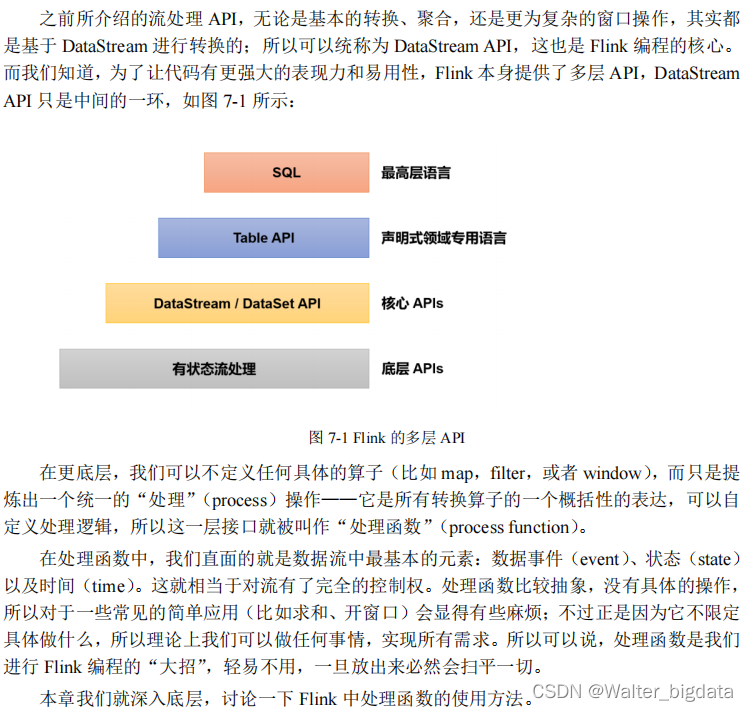

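Chapter 7: Process Functions

7.1 The Basic Process Function (ProcessFunction)

A process function mainly defines a transformation on a data stream, so it can also be counted among the transformation operators. In Flink, almost every transformation operator has a corresponding function class interface, and process functions are no exception: the corresponding function class is called ProcessFunction.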

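7.1.1 What Process Functions Do and How to Use Them

The transformation operators covered so far are each defined for one specific kind of operation, and the information they can access is fairly limited. Take the map operator: the MapFunction we implement can only see the current element and define what it looks like after the transformation. For a more complex operation such as window aggregation, AggregateFunction can access the current state (in the form of an accumulator) in addition to the data. We also introduced rich function classes such as RichMapFunction, which provides getRuntimeContext() for obtaining the runtime context; through it we can access state as well as runtime information such as the parallelism and the task name. A process function goes one step further: as the examples in this chapter show, its context also exposes time information such as the element timestamp and the current watermark.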

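7.1.2 Anatomy of ProcessFunction

7.1.3 Types of Process Functions

Process functions in Flink are really a large family, and ProcessFunction is just one member.

A DataStream may turn into a different stream type after certain transformation methods are called: calling .keyBy() yields a KeyedStream, and then calling .window() yields a WindowedStream. Each of these stream types can call .process() directly for custom processing, and the argument passed in is in every case called a process function. They are all essentially the same thing, a low-level API with access to state and time information, yet they differ from one another in the details.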

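Flink provides 8 different process functions:

- ProcessFunction

The most basic process function, passed as the argument when .process() is called directly on a DataStream.

- KeyedProcessFunction

The process function for a stream partitioned by key, passed as the argument when .process() is called on a KeyedStream. Timers can only be used on a KeyedStream.

- ProcessWindowFunction

The process function used after windowing, and the representative full-window function. Passed as the argument when .process() is called on a WindowedStream.

- ProcessAllWindowFunction

Also a process function used after windowing, passed as the argument when .process() is called on an AllWindowedStream.

- CoProcessFunction

The process function used after connecting (connect) two streams, passed as the argument when .process() is called on ConnectedStreams. Connecting and merging streams is covered in detail in a later chapter.

- ProcessJoinFunction

The process function used after an interval join of two streams, passed as the argument when .process() is called on IntervalJoined.

- BroadcastProcessFunction

The process function for a broadcast connected stream, passed as the argument when .process() is called on a BroadcastConnectedStream. The broadcast connected stream (BroadcastConnectedStream) here is the result of connecting (connect) a plain DataStream that has not been keyed with a broadcast stream (BroadcastStream). Broadcast streams are covered in detail in a later chapter.

- KeyedBroadcastProcessFunction

The process function for a keyed broadcast connected stream, likewise passed as the argument when .process() is called on a BroadcastConnectedStream. Unlike BroadcastProcessFunction, the broadcast connected stream here is the result of connecting a KeyedStream with a broadcast stream (BroadcastStream).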

Example:

package com.scy.chapter07;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class ProcessFunctionTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

}));

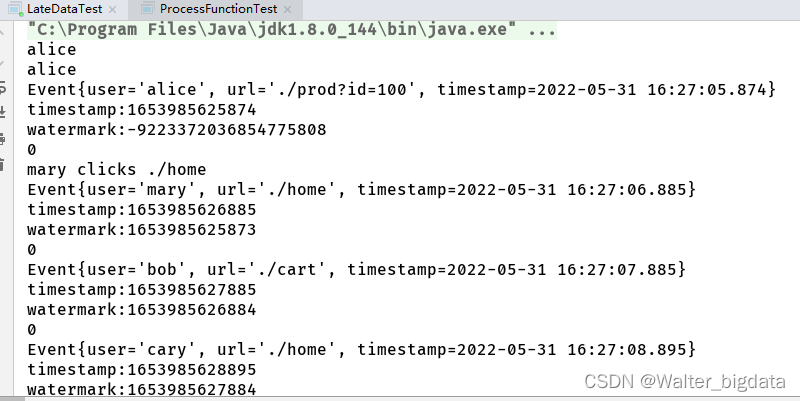

stream.process(new ProcessFunction<Event, String>() {

@Override

public void processElement(Event value, Context ctx, Collector<String> out) throws Exception {

if (value.user.equals("mary")){

out.collect(value.user + " clicks " + value.url);

}else if (value.user.equals("alice")){

out.collect(value.user);

out.collect(value.user);

}

out.collect(value.toString());

System.out.println("timestamp:" + ctx.timestamp());

System.out.println("watermark:" + ctx.timerService().currentWatermark());

System.out.println(getRuntimeContext().getIndexOfThisSubtask());

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

super.onTimer(timestamp, ctx, out);

}

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

}

}).print();

env.execute();

}

}

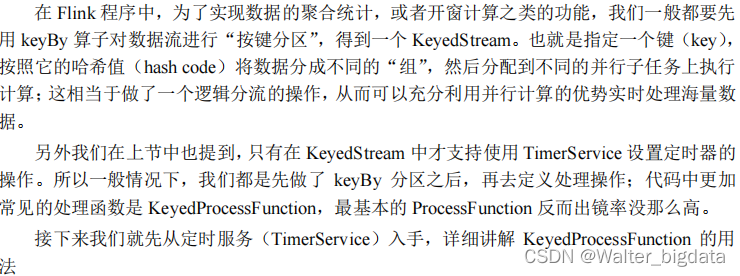

7.2 The Keyed Process Function (KeyedProcessFunction)

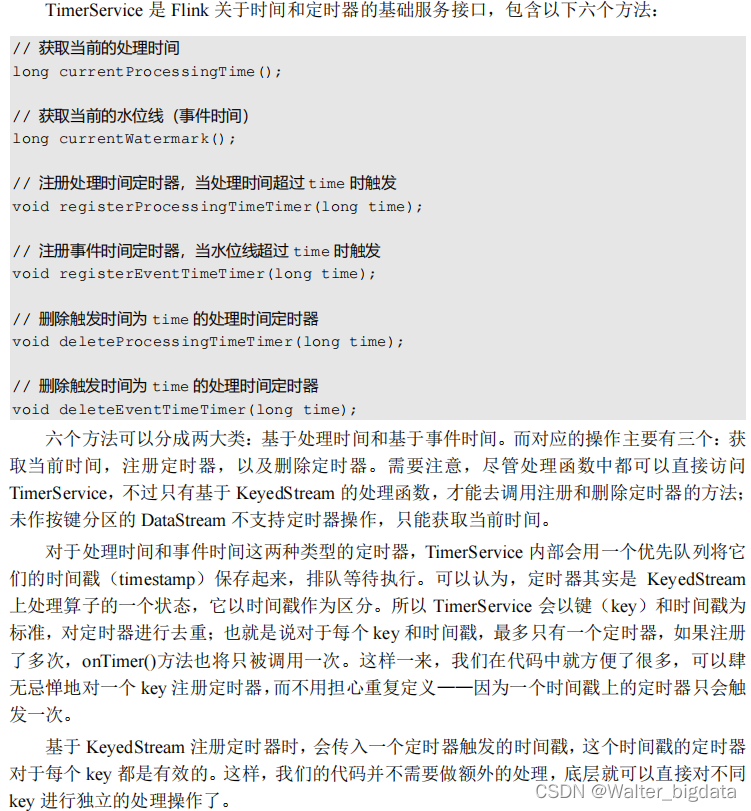

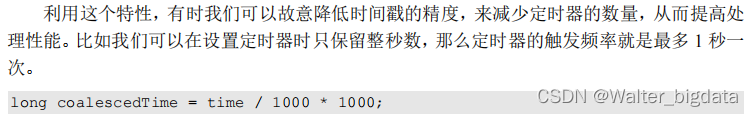

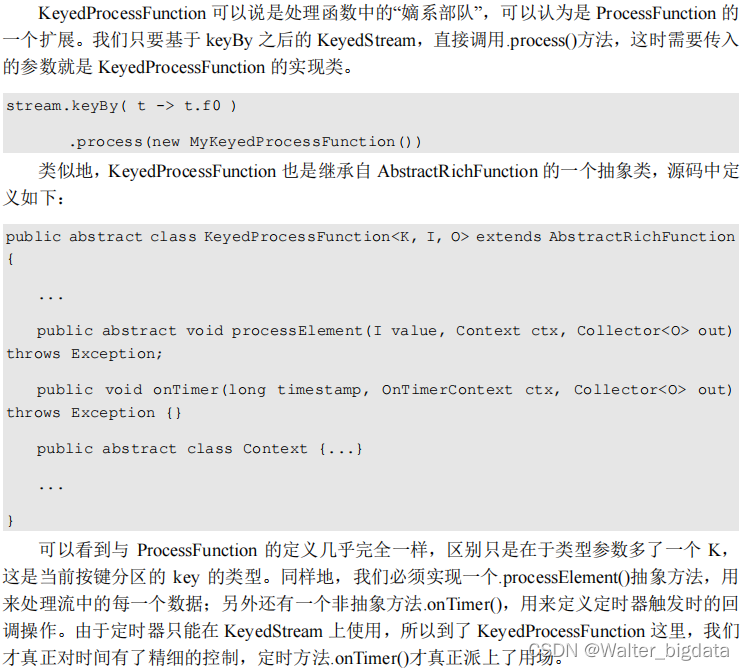

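7.2.1 Timers (Timer) and the Timer Service (TimerService)

One distinguishing feature of KeyedProcessFunction is its flexible use of timers.

Timers are the main mechanism for time-related operations in a process function. The logic to run at a scheduled time goes in the .onTimer() method, which is only triggered if a timer was registered earlier and its trigger time has now been reached. Timers are registered through the timer service (TimerService) provided by the context.

The timer service depends on the current runtime environment. As introduced earlier, the context (Context) of ProcessFunction provides the .timerService() method, which directly returns a TimerService object: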

public abstract TimerService timerService();
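The trigger condition described above can be sketched in plain Java. This is not Flink code: the class TimerQueueSketch and its method pendingCount() are hypothetical names made up for illustration, and the sketch models the timers of a single key only. It assumes the two behaviors Flink documents for event-time timers: registering the same timestamp twice for a key leaves a single timer, and a timer fires once the watermark reaches or passes its timestamp.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

// Plain-Java sketch (not Flink code): models the event-time timers of one key.
final class TimerQueueSketch {
    // Sorted, duplicate-free set of pending timer timestamps.
    private final TreeSet<Long> pendingTimers = new TreeSet<>();
    private long currentWatermark = Long.MIN_VALUE;

    // Registering the same timestamp twice keeps a single timer.
    void registerEventTimeTimer(long timestamp) {
        pendingTimers.add(timestamp);
    }

    // Advances the watermark (it never moves backwards) and returns
    // the timers that fire as a result, in ascending order.
    List<Long> advanceWatermark(long watermark) {
        currentWatermark = Math.max(currentWatermark, watermark);
        List<Long> fired = new ArrayList<>();
        while (!pendingTimers.isEmpty() && pendingTimers.first() <= currentWatermark) {
            fired.add(pendingTimers.pollFirst());
        }
        return fired;
    }

    // Number of timers that have been registered but have not fired yet.
    int pendingCount() {
        return pendingTimers.size();
    }
}
```

In this model, each timestamp returned by advanceWatermark() corresponds to one call of .onTimer() in a real process function.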

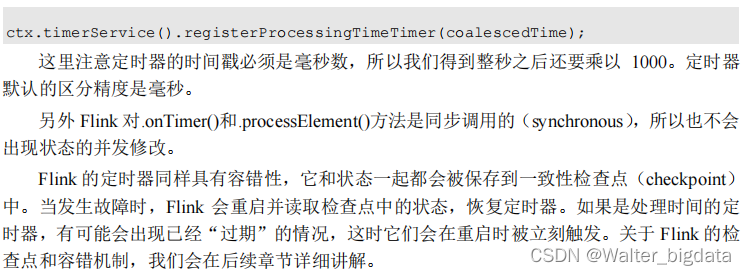

7.2.2 Using KeyedProcessFunction

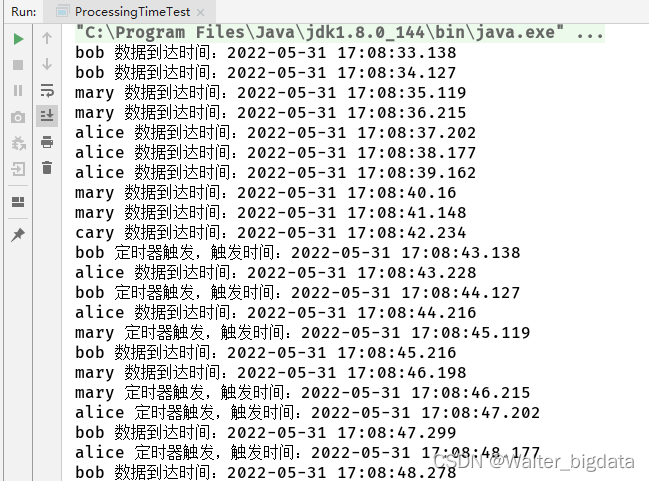

ProcessingTimeTest example:

package com.scy.chapter07;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.sql.Timestamp;

public class ProcessingTimeTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource());

stream.keyBy(data -> data.user)

.process(new KeyedProcessFunction<String, Event, String>() {

@Override

public void processElement(Event value, Context ctx, Collector<String> out) throws Exception {

Long currTs = ctx.timerService().currentProcessingTime();

out.collect(ctx.getCurrentKey() + " data arrival time: " + new Timestamp(currTs));

// Register a timer that fires 10 seconds from now

ctx.timerService().registerProcessingTimeTimer(currTs+10 *1000L);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

out.collect(ctx.getCurrentKey() + " timer fired, trigger time: " + new Timestamp(timestamp));

}

}).print();

env.execute();

}

}

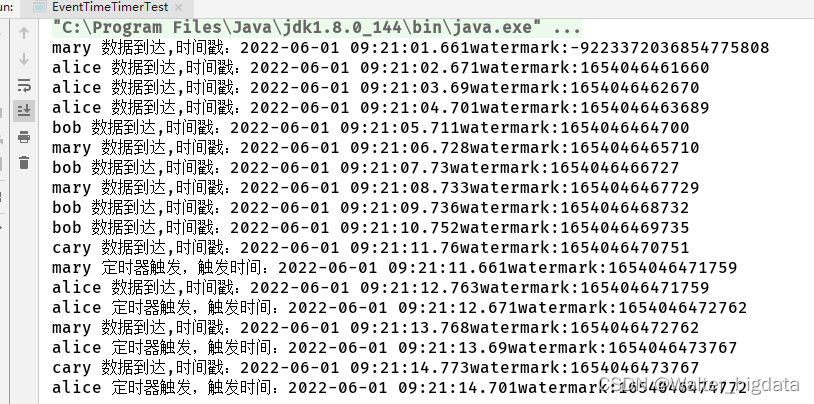

EventTimeTimerTest example:

package com.scy.chapter07;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.util.Collector;

import java.sql.Timestamp;

import java.time.Duration;

public class EventTimeTimerTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

}));

// Event-time timer

stream.keyBy(data -> data.user)

.process(new KeyedProcessFunction<String, Event, String>() {

@Override

public void processElement(Event value, Context ctx, Collector<String> out) throws Exception {

Long currTs = ctx.timestamp();

out.collect(ctx.getCurrentKey() + " data arrived, timestamp: " + new Timestamp(currTs) + " watermark: " + ctx.timerService().currentWatermark());

// Register a timer 10 seconds after the element's timestamp

ctx.timerService().registerEventTimeTimer(currTs+10 *1000L);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

out.collect(ctx.getCurrentKey() + " timer fired, trigger time: " + new Timestamp(timestamp) + " watermark: " + ctx.timerService().currentWatermark());

}

}).print();

env.execute();

}

}

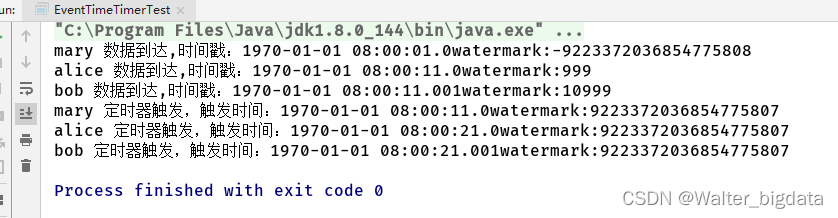

package com.scy.chapter07;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import org.apache.flink.util.Collector;

import java.sql.Timestamp;

import java.time.Duration;

public class EventTimeTimerTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Event> stream = env.addSource(new CustomSource())

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

}));

// Event-time timer

stream.keyBy(data -> data.user)

.process(new KeyedProcessFunction<String, Event, String>() {

@Override

public void processElement(Event value, Context ctx, Collector<String> out) throws Exception {

Long currTs = ctx.timestamp();

out.collect(ctx.getCurrentKey() + " data arrived, timestamp: " + new Timestamp(currTs) + " watermark: " + ctx.timerService().currentWatermark());

// Register a timer 10 seconds after the element's timestamp

ctx.timerService().registerEventTimeTimer(currTs+10 *1000L);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

out.collect(ctx.getCurrentKey() + " timer fired, trigger time: " + new Timestamp(timestamp) + " watermark: " + ctx.timerService().currentWatermark());

}

}).print();

env.execute();

}

// Custom test data source

public static class CustomSource implements SourceFunction<Event>{

@Override

public void run(SourceContext<Event> ctx) throws Exception {

// Emit the test data directly

ctx.collect(new Event("mary", "./home", 1000L));

Thread.sleep(5000L);

ctx.collect(new Event("alice", "./home", 11000L));

Thread.sleep(5000L);

ctx.collect(new Event("bob", "./home", 11001L));

}

@Override

public void cancel() {

}

}

}

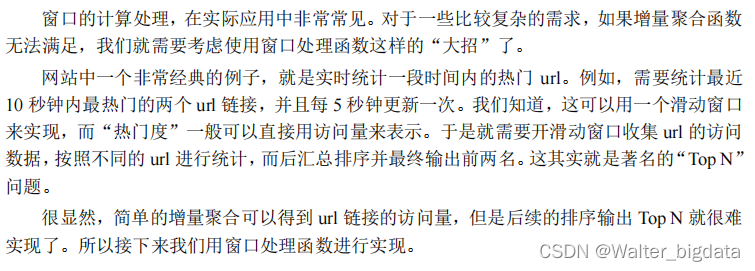
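To see when the timers in the custom-source example fire, it may help to work through the watermark arithmetic by hand. The following is a plain-Java sketch, not Flink code; the class WatermarkSketch and its methods are hypothetical. It assumes the rule used by the bounded-out-of-orderness strategy, where the watermark is the largest timestamp seen so far, minus the allowed delay, minus 1 ms, and it assumes each watermark has actually been emitted before the next event arrives (the 5-second pauses in the source leave ample time for that).

```java
// Plain-Java sketch (not Flink code) of the watermark arithmetic behind the example.
final class WatermarkSketch {
    // Watermark after the largest timestamp seen so far:
    // watermark = maxTimestamp - maxOutOfOrderness - 1 ms.
    static long watermarkAfter(long maxTimestampSoFar, long maxOutOfOrdernessMs) {
        return maxTimestampSoFar - maxOutOfOrdernessMs - 1;
    }

    // An event-time timer fires once the watermark reaches or passes its timestamp.
    static boolean timerFires(long timerTimestamp, long watermark) {
        return watermark >= timerTimestamp;
    }
}
```

With a delay of zero, the event at 1000 registers a timer for 11000 and moves the watermark to 999. The event at 11000 moves the watermark only to 10999, so that timer does not fire yet; the event at 11001 moves it to 11000, which triggers it. When the source finishes, Flink emits a final watermark of Long.MAX_VALUE, which fires the timers that are still pending.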

7.4 Application Example: Top N

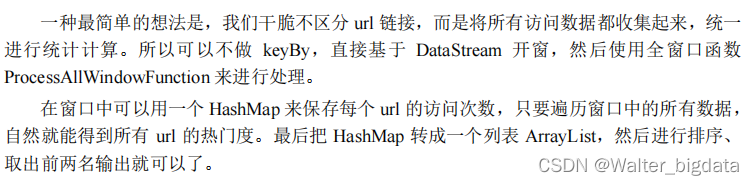

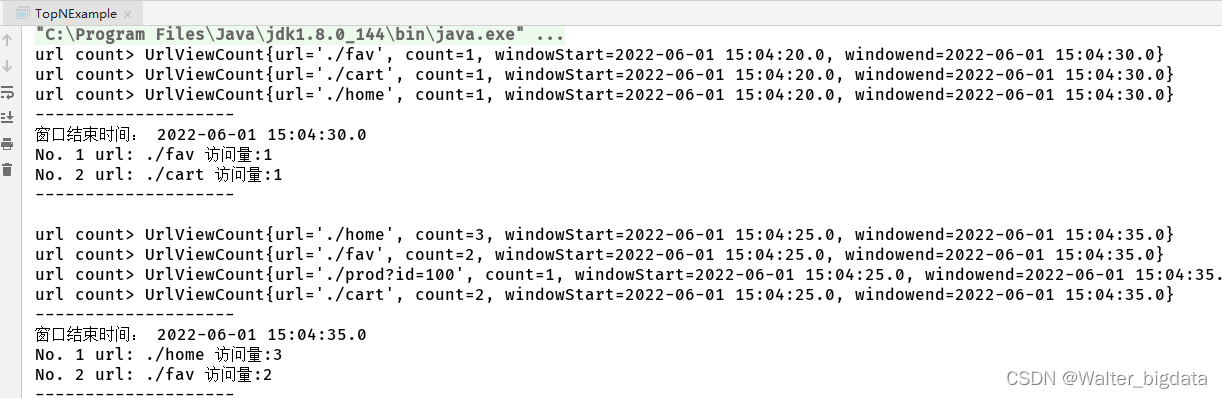

7.4.1 Implementation with ProcessAllWindowFunction

package com.scy.chapter07;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import com.scy.chapter06.UrlCountViewExample;

import com.scy.chapter06.UrlViewCount;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.windowing.ProcessAllWindowFunction;

import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.sql.Timestamp;

import java.time.Duration;

import java.util.ArrayList;

import java.util.Comparator;

import java.util.HashMap;

public class TopNExample_ProcessAllWindowFunction {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Read the data

SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO) // watermark = max timestamp - 1 ms

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

})

);

// 1. Open a window over all the data, collect it, then sort it

stream.map(data -> data.url)

.windowAll(SlidingEventTimeWindows.of(Time.seconds(10),Time.seconds(5)))

.aggregate(new UrlHashMapCountAgg(), new UrlAllWindowResult())

.print();

env.execute();

}

// Custom incremental aggregate function

private static class UrlHashMapCountAgg implements AggregateFunction<String, HashMap<String,Long>, ArrayList<Tuple2<String,Long>>> {

@Override

public HashMap<String, Long> createAccumulator() {

return new HashMap<String, Long>();

}

@Override

public HashMap<String, Long> add(String value, HashMap<String, Long> accumulator) {

if (accumulator.containsKey(value)){

Long count = accumulator.get(value);

accumulator.put(value,count+1);

}

else {

accumulator.put(value,1L);

}

return accumulator;

}

@Override

public ArrayList<Tuple2<String, Long>> getResult(HashMap<String, Long> accumulator) {

ArrayList<Tuple2<String, Long>> result = new ArrayList<>();

for (String key : accumulator.keySet()){

result.add(Tuple2.of(key,accumulator.get(key)));

}

result.sort(new Comparator<Tuple2<String, Long>>() {

@Override

public int compare(Tuple2<String, Long> o1, Tuple2<String, Long> o2) {

return Long.compare(o2.f1, o1.f1); // descending by count, without int truncation or overflow

}

});

return result;

}

@Override

public HashMap<String, Long> merge(HashMap<String, Long> stringLongHashMap, HashMap<String, Long> acc1) {

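// merge() is only called for merging windows (such as session windows), so it is left unimplemented here

return null;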

}

}

// Custom full-window function that formats the result for output

private static class UrlAllWindowResult extends ProcessAllWindowFunction<ArrayList<Tuple2<String, Long>>,String, TimeWindow> {

@Override

public void process(Context context, Iterable<ArrayList<Tuple2<String, Long>>> elements, Collector<String> out) throws Exception {

ArrayList<Tuple2<String, Long>> list = elements.iterator().next();

StringBuffer result = new StringBuffer();

result.append("--------------------\n");

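result.append("Window end time: " + new Timestamp(context.window().getEnd()) + "\n");

// Format and output the first two entries of the list (fewer if the window saw fewer URLs)

for (int i = 0; i < Math.min(2, list.size()); i++){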

Tuple2<String, Long> currTuple = list.get(i);

String info = "No. " + (i+1) + " "

+ "url: " + currTuple.f0 + " "

+ "views: " + currTuple.f1 + "\n";

result.append(info);

}

result.append("--------------------\n");

out.collect(result.toString());

}

}

}
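The count-then-sort logic of this example can be tried out without a Flink cluster. The following is a minimal plain-Java sketch under that assumption; the class TopNSketch and the method topN are hypothetical names, not part of Flink or of the example above. It uses Long.compare for the descending sort and caps the result at the number of distinct URLs, so a window with fewer than n URLs does not cause an IndexOutOfBoundsException.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java sketch (not Flink code) of the counting and Top N selection.
final class TopNSketch {
    // Counts how often each URL occurs and returns up to n entries, highest count first.
    static List<Map.Entry<String, Long>> topN(List<String> urls, int n) {
        Map<String, Long> counts = new HashMap<>();
        for (String url : urls) {
            counts.merge(url, 1L, Long::sum); // insert 1 or add 1 to the existing count
        }
        List<Map.Entry<String, Long>> sorted = new ArrayList<>(counts.entrySet());
        // Long.compare avoids the truncation and overflow risk of subtracting int values.
        sorted.sort((a, b) -> Long.compare(b.getValue(), a.getValue()));
        // Never read past the end of the list when there are fewer than n URLs.
        return new ArrayList<>(sorted.subList(0, Math.min(n, sorted.size())));
    }
}
```

The order of URLs with equal counts is unspecified in this sketch, because HashMap does not guarantee an iteration order.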

7.4.2 Implementation with KeyedProcessFunction

package com.scy.chapter07;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import com.scy.chapter06.UrlCountViewExample;

import com.scy.chapter06.UrlViewCount;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.state.ListState;

import org.apache.flink.api.common.state.ListStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.util.Collector;

import java.sql.Timestamp;

import java.time.Duration;

import java.util.ArrayList;

import java.util.Comparator;

public class TopNExample {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Read the data

SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO) // watermark = max timestamp - 1 ms

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

})

);

// 1. Key by url and count the views of each url within the window

SingleOutputStreamOperator<UrlViewCount> urlCountStream = stream.keyBy(data -> data.url)

.window(SlidingEventTimeWindows.of(Time.seconds(10), Time.seconds(5)))

.aggregate(new UrlCountViewExample.UrlViewCountAgg(), new UrlCountViewExample.UrlViewCountResult());

urlCountStream.print("url count");

// 2. Collect and sort the view counts that belong to the same window

urlCountStream.keyBy(data -> data.windowend)

.process(new TopNProcessResult(2))

.print();

env.execute();

}

private static class TopNProcessResult extends KeyedProcessFunction<Long,UrlViewCount,String> {

// Number of top entries to output

private Integer n;

// List state that holds the counts of one window

private ListState<UrlViewCount> urlViewCountListState;

public TopNProcessResult(Integer n) {

this.n = n;

}

// Obtain the state from the runtime context in the open() lifecycle method

@Override

public void open(Configuration parameters) throws Exception {

urlViewCountListState = getRuntimeContext().getListState(

new ListStateDescriptor<UrlViewCount>("url-count-list", Types.POJO(UrlViewCount.class))

);

}

@Override

public void processElement(UrlViewCount value, Context ctx, Collector<String> out) throws Exception {

// Save the element in the state

urlViewCountListState.add(value);

// Register a timer for windowend + 1 ms

ctx.timerService().registerEventTimeTimer(ctx.getCurrentKey() + 1);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

ArrayList<UrlViewCount> urlViewCountArrayList = new ArrayList<>();

for (UrlViewCount urlViewCount: urlViewCountListState.get()){

urlViewCountArrayList.add(urlViewCount);

}

// Sort by count in descending order

urlViewCountArrayList.sort(new Comparator<UrlViewCount>() {

@Override

public int compare(UrlViewCount o1, UrlViewCount o2) {

return Long.compare(o2.count, o1.count); // avoids int truncation and overflow

}

});

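// Format the result for output

StringBuffer result = new StringBuffer();

result.append("--------------------\n");

result.append("Window end time: " + new Timestamp(ctx.getCurrentKey()) + "\n");

// Format and output the first n entries of the list (fewer if the window saw fewer URLs)

for (int i = 0; i < Math.min(n, urlViewCountArrayList.size()); i++){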

UrlViewCount currTuple = urlViewCountArrayList.get(i);

String info = "No. " + (i+1) + " "

+ "url: " + currTuple.url + " "

+ "views: " + currTuple.count + "\n";

result.append(info);

}

result.append("--------------------\n");

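out.collect(result.toString());

// The result for this window has been emitted, so its state can be released

urlViewCountListState.clear();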

}

}

}

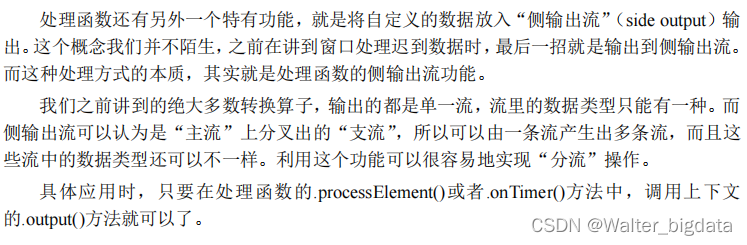

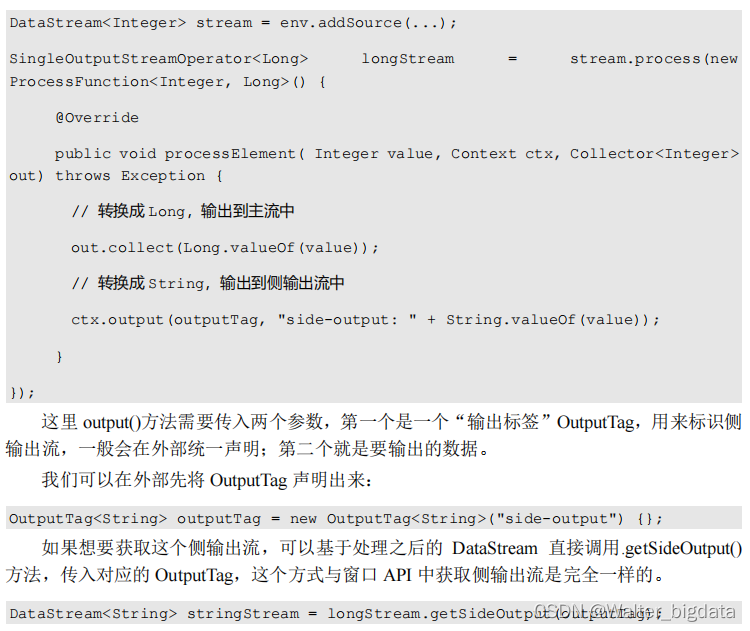

7.5 Side Output

7.6 Chapter Summary

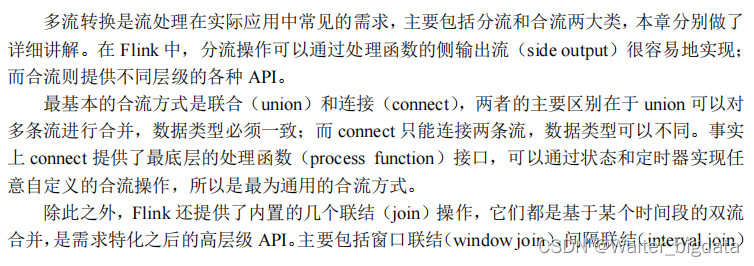

Chapter 8: Multi-Stream Transformations

8.1 Splitting Streams

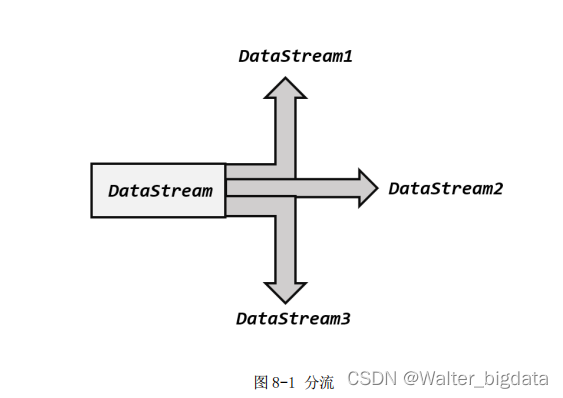

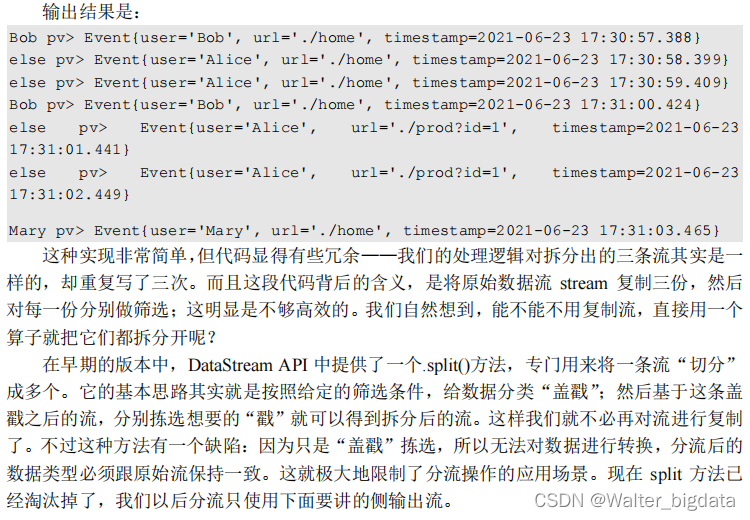

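Splitting means dividing one data stream into two or more completely independent streams. In other words, starting from one DataStream we obtain several child DataStreams of equal standing, as shown in Figure 8-1. Typically we define some filter conditions and route the matching data into the corresponding stream.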

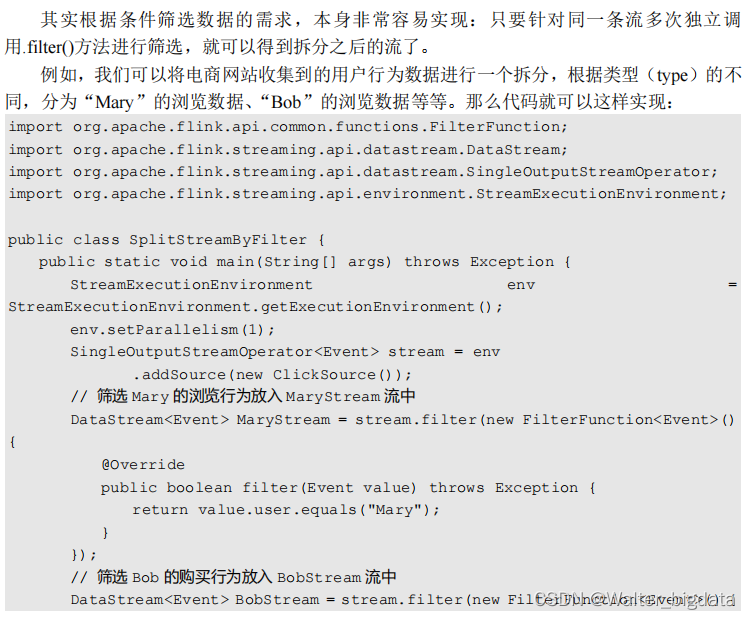

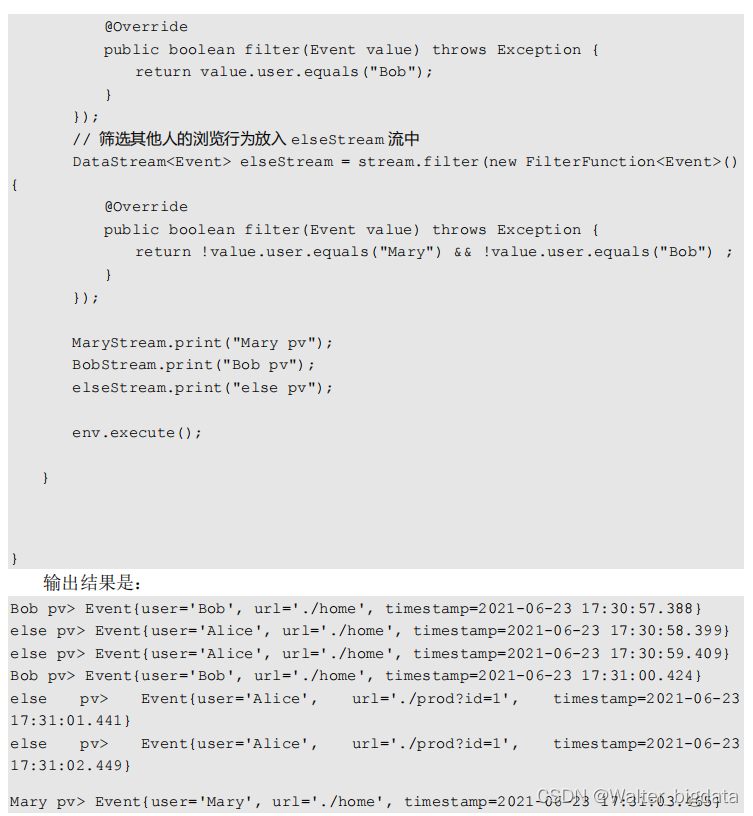

8.1.1 Simple Implementation

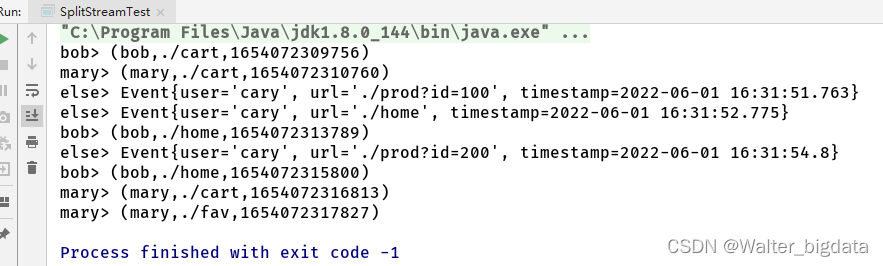

8.1.2 Using Side Outputs

Using side outputs, the splitting code from the previous subsection can be rewritten as follows:

package com.scy.chapter08;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.util.Collector;

import org.apache.flink.util.OutputTag;

import java.time.Duration;

public class SplitStreamTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Event> stream = env.addSource(new ClickSource())

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

}));

// Define the tags for the side outputs

OutputTag<Tuple3<String, String, Long>> maryTag = new OutputTag<Tuple3<String, String, Long>>("mary") {};

OutputTag<Tuple3<String, String, Long>> bobTag = new OutputTag<Tuple3<String, String, Long>>("bob") {};

SingleOutputStreamOperator<Event> processedStream = stream.process(new ProcessFunction<Event, Event>() {

@Override

public void processElement(Event value, Context ctx, Collector<Event> out) throws Exception {

if (value.user.equals("mary"))

ctx.output(maryTag, Tuple3.of(value.user, value.url, value.timestamp));

else if (value.user.equals("bob"))

ctx.output(bobTag, Tuple3.of(value.user, value.url, value.timestamp));

else

out.collect(value);

}

});

processedStream.print("else");

processedStream.getSideOutput(maryTag).print("mary");

processedStream.getSideOutput(bobTag).print("bob");

env.execute();

}

}

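8.2 Basic Stream Merging Operations

Since one stream can be split, multiple streams can naturally be merged. In practice we often encounter several streams from different sources whose data needs to be processed together. Merging is therefore the more common operation in Flink, and the corresponding APIs are richer.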

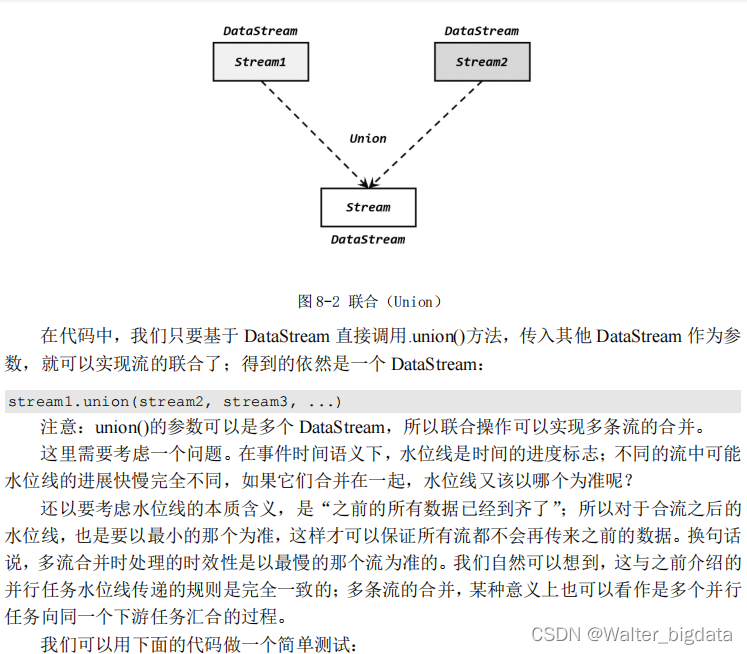

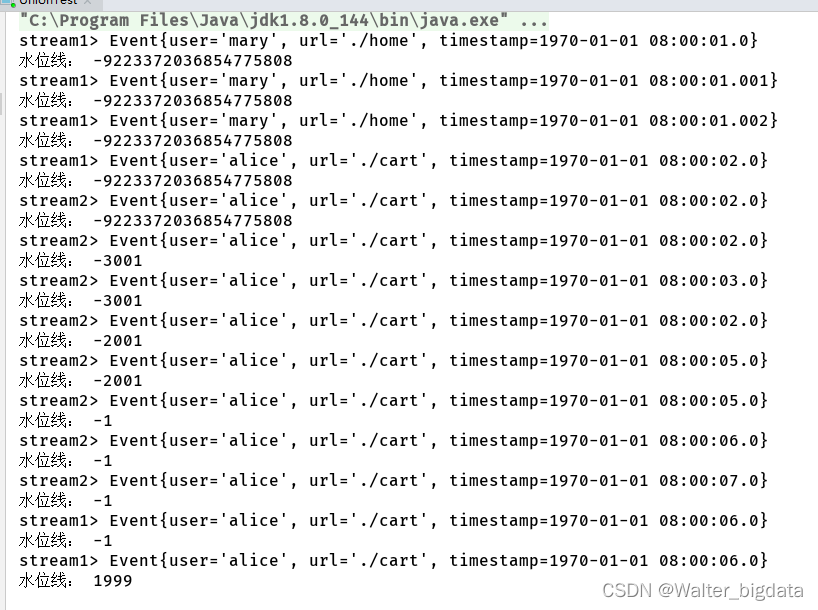

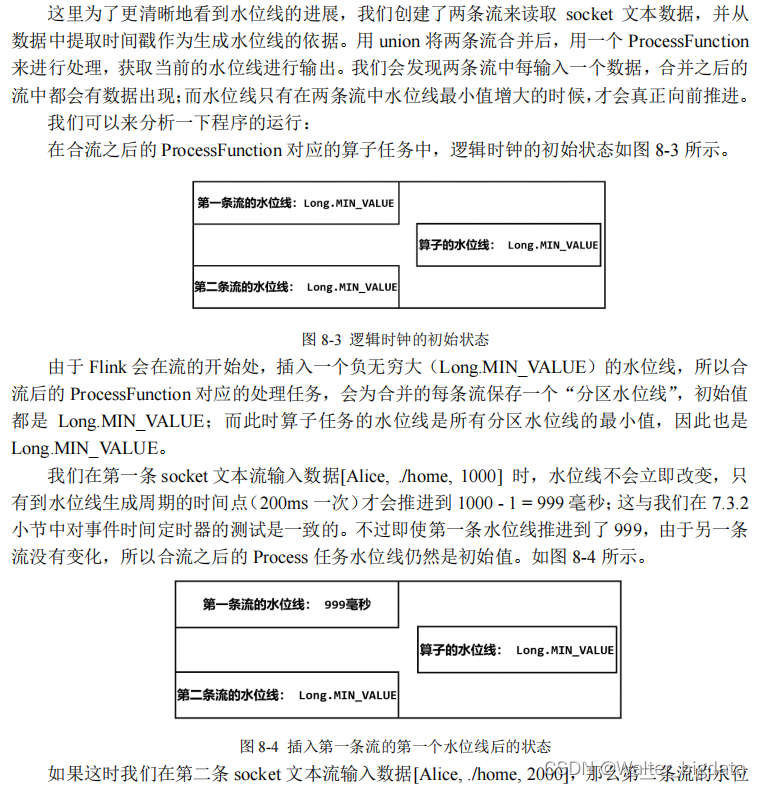

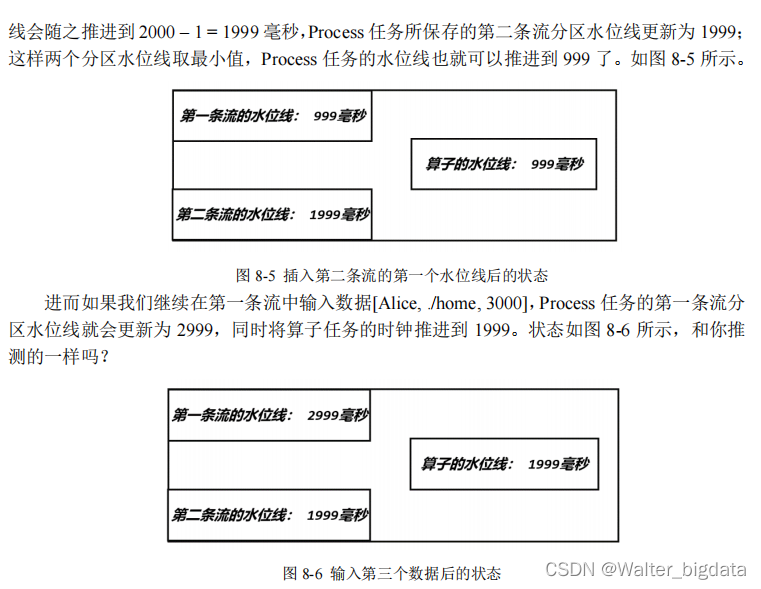

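8.2.1 Union (data types must be identical)

The simplest merging operation is to put multiple streams directly together, called a union of the streams, as shown in Figure 8-2. A union requires all the streams to have the same data type; the merged stream contains every element of all the input streams, and the data type is unchanged. This way of merging is simple and blunt, much like several lanes of a road merging into one.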

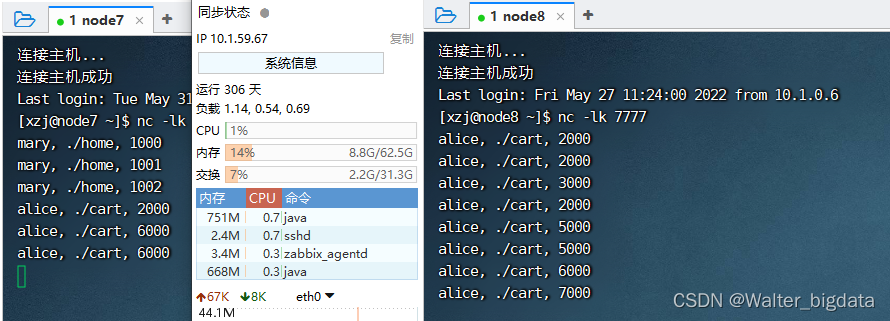

package com.scy.chapter08;

import com.scy.chapter01.ClickSource;

import com.scy.chapter01.Event;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class UnionTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Event> stream1 = env.socketTextStream("node7",7777)

.map(data -> {

String[] field = data.split(",");

return new Event(field[0].trim(),field[1].trim(),Long.valueOf(field[2].trim()));

})

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ofSeconds(2))

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

}));

stream1.print("stream1");

SingleOutputStreamOperator<Event> stream2 = env.socketTextStream("node8",7777)

.map(data -> {

String[] field = data.split(",");

return new Event(field[0].trim(),field[1].trim(),Long.valueOf(field[2].trim()));

})

.assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ofSeconds(5))

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event event, long l) {

return event.timestamp;

}

}));

stream2.print("stream2");

// Union the two streams

stream1.union(stream2)

.process(new ProcessFunction<Event, String>() {

@Override

public void processElement(Event value, Context ctx, Collector<String> out) throws Exception {

out.collect("watermark: " + ctx.timerService().currentWatermark());

}

}).print();

env.execute();

}

}
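The watermark printed by the union example follows the general rule for operators with several inputs: the operator's event-time clock is the minimum of the latest watermarks received from all of its inputs. The following plain-Java sketch illustrates that rule; it is not Flink code, and the class UnionWatermarkSketch and the method combinedWatermark are hypothetical names.

```java
// Plain-Java sketch (not Flink code) of how watermarks combine after a union.
final class UnionWatermarkSketch {
    // The downstream watermark is the minimum of the latest watermark of each input.
    // With no inputs at all, this sketch simply returns Long.MAX_VALUE.
    static long combinedWatermark(long... inputWatermarks) {
        long min = Long.MAX_VALUE;
        for (long watermark : inputWatermarks) {
            min = Math.min(min, watermark);
        }
        return min;
    }
}
```

For instance, if stream1 (2-second delay) has seen an event with timestamp 5000, its watermark is 5000 - 2000 - 1 = 2999. As long as stream2 has received no data, its watermark is still Long.MIN_VALUE, and so is the watermark of the merged stream. Once stream2 (5-second delay) sees an event with timestamp 6000, its watermark becomes 999 and the merged watermark advances to 999.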

8.2.2 Connect

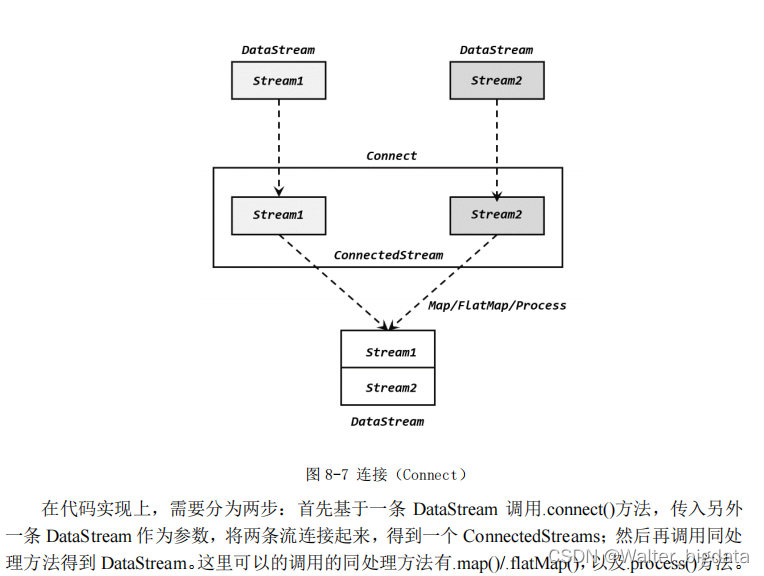

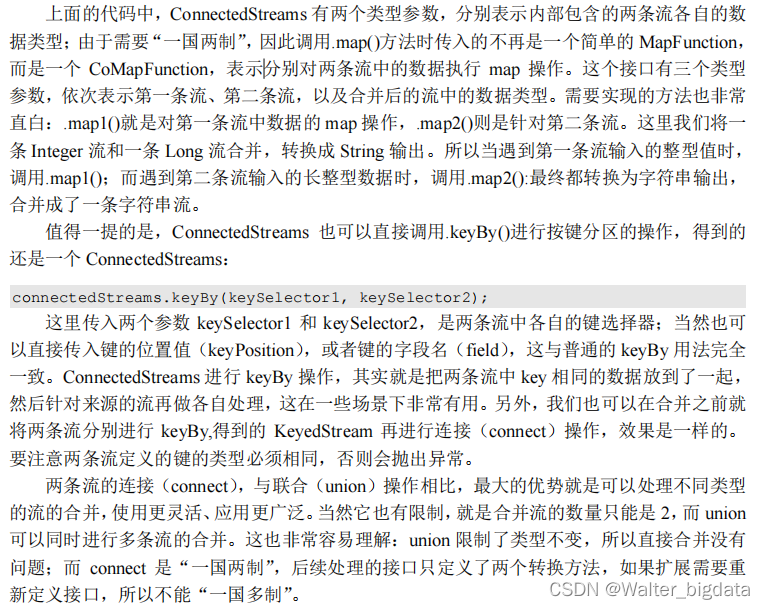

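A union is simple, but because the data type cannot change, its flexibility is limited, so it appears less often in practice. Besides union, Flink offers another convenient merging operation: connect. As the name suggests, it joins two streams together directly, like splicing two wires.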

1. 连接流(ConnectedStreams)

为了处理更加灵活,连接操作允许流的数据类型不同。但我们知道一个 DataStream 中的

数据只能有唯一的类型,所以连接得到的并不是 DataStream,而是一个“连接流”

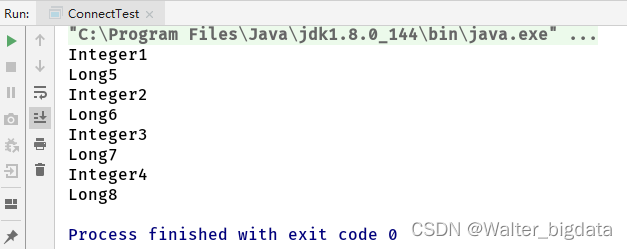

(ConnectedStreams)。连接流可以看成是两条流形式上的“统一”,被放在了一个同一个流中;事实上内部仍保持各自的数据形式不变,彼此之间是相互独立的。要想得到新的DataStream,还需要进一步定义一个“同处理”(co-process)转换操作,用来说明对于不同来源、不同类型的数据,怎样分别进行处理转换、得到统一的输出类型。所以整体上来,两条流的连接就像是“一国两制”,两条流可以保持各自的数据类型、处理方式也可以不同,不过最终还是会统一到同一个 DataStream 中,如图 8-7 所示。

package com.scy.chapter08;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoMapFunction;

public class ConnectTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

DataStreamSource<Integer> stream1 = env.fromElements(1, 2, 3, 4);

DataStreamSource<Long> stream2 = env.fromElements(5L, 6L, 7L, 8L);

stream1.connect(stream2)

.map(new CoMapFunction<Integer, Long, String>() {

@Override

public String map1(Integer value) throws Exception {

return "Integer" + value.toString();

}

@Override

public String map2(Long value) throws Exception {

return "Long" + value.toString();

}

}).print();

env.execute();

}

}

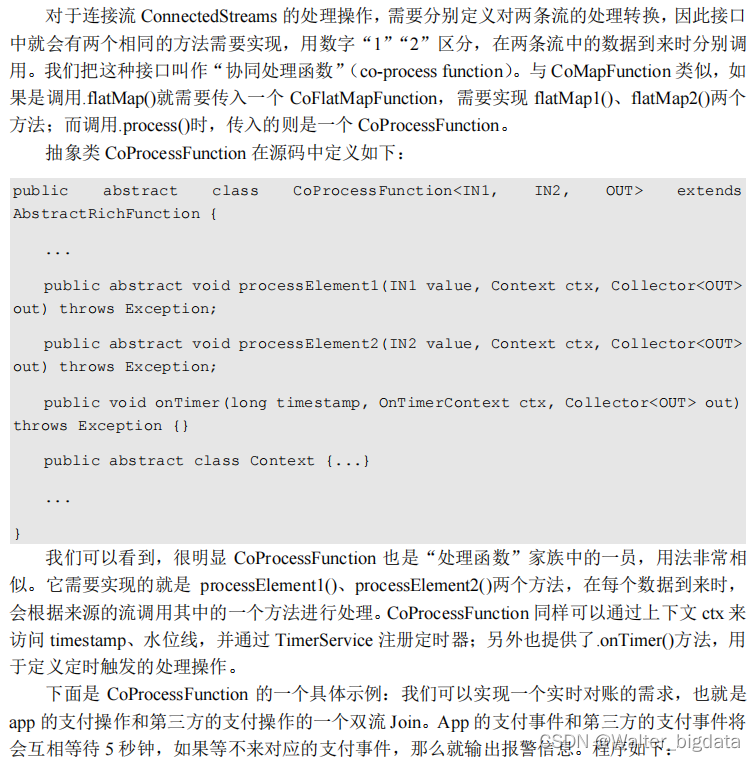

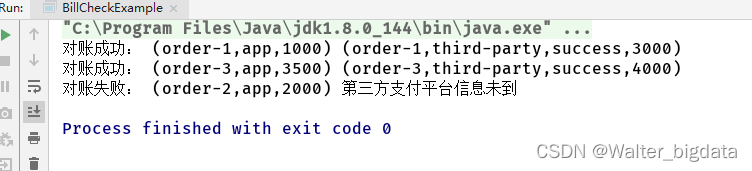

2. CoProcessFunction

package com.scy.chapter08;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.api.java.tuple.Tuple4;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoProcessFunction;

import org.apache.flink.util.Collector;

import java.time.Duration;

public class BillCheckExample {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Payment log from the app

SingleOutputStreamOperator<Tuple3<String, String, Long>> appstream = env.fromElements(

Tuple3.of("order-1", "app", 1000L),

Tuple3.of("order-2", "app", 2000L),

Tuple3.of("order-3", "app", 3500L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple3<String, String, Long>>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple3<String, String, Long>>() {

@Override

public long extractTimestamp(Tuple3<String, String, Long> stringStringLongTuple3, long l) {

return stringStringLongTuple3.f2;

}

}));

// Payment log from the third-party payment platform

SingleOutputStreamOperator<Tuple4<String, String, String, Long>> thirdpartstream = env.fromElements(

Tuple4.of("order-1", "third-party", "success", 3000L),

Tuple4.of("order-3", "third-party", "success", 4000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple4<String, String, String, Long>>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple4<String, String, String, Long>>() {

@Override

public long extractTimestamp(Tuple4<String, String, String, Long> stringStringStringLongTuple4, long l) {

return stringStringStringLongTuple4.f3;

}

}));

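// Check whether the same order appears in both streams; raise an alert if it does not

// Option 1: key each stream first, then connect (shown for comparison; the result is not used)

appstream.keyBy(data -> data.f0)

.connect(thirdpartstream.keyBy(data -> data.f0));

// Option 2: connect first, then key both inputs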

appstream.connect(thirdpartstream)

.keyBy(data -> data.f0,data -> data.f0)

.process(new OrderMatchResult())

.print();

env.execute();

}

// Custom CoProcessFunction implementation

private static class OrderMatchResult extends CoProcessFunction<Tuple3<String,String,Long>,Tuple4<String,String,String,Long>,String> {

// State variables that hold the events that have already arrived

private ValueState<Tuple3<String,String,Long>> appEventState;

private ValueState<Tuple4<String,String,String,Long>> thirdPartyEventState;

@Override

public void open(Configuration parameters) throws Exception {

appEventState = getRuntimeContext().getState(

new ValueStateDescriptor<Tuple3<String, String, Long>>("app-event", Types.TUPLE(Types.STRING,Types.STRING,Types.LONG))

);

thirdPartyEventState = getRuntimeContext().getState(

new ValueStateDescriptor<Tuple4<String, String,String, Long>>("thirdparty-event", Types.TUPLE(Types.STRING,Types.STRING,Types.STRING,Types.LONG))

);

}

@Override

public void processElement1(Tuple3<String, String, Long> value, Context ctx, Collector<String> out) throws Exception {

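// An app event arrived; check whether the event from the other stream has already arrived

if (thirdPartyEventState.value() != null){

out.collect("Reconciliation succeeded: " + value + " " + thirdPartyEventState.value());

// Clear the state

thirdPartyEventState.clear();

}else {

// Update the state

appEventState.update(value);

// Register a timer 5 seconds later and start waiting for the event from the other stream

ctx.timerService().registerEventTimeTimer(value.f2+5000L);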

}

}

@Override

public void processElement2(Tuple4<String, String, String, Long> value, Context ctx, Collector<String> out) throws Exception {

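// A third-party event arrived; check whether the event from the other stream has already arrived

if (appEventState.value() != null){

out.collect("Reconciliation succeeded: " + appEventState.value() + " " + value);

// Clear the state

appEventState.clear();

}else {

// Update the state

thirdPartyEventState.update(value);

// Register a timer 5 seconds later and start waiting for the event from the other stream

ctx.timerService().registerEventTimeTimer(value.f3+5000L);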

}

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

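// The timer fired; a state that is still non-empty means the matching event from the other stream never arrived

if(appEventState.value() !=null){

out.collect("Reconciliation failed: " + appEventState.value() + " " + "no record from the third-party payment platform");

}

if(thirdPartyEventState.value() !=null){

out.collect("Reconciliation failed: " + thirdPartyEventState.value() + " " + "no record from the app");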

}

appEventState.clear();

thirdPartyEventState.clear();

}

}

}

3. Broadcast Connected Streams (BroadcastConnectedStream)

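8.3 Time-Based Merging: Two-Stream Joins (Join)

8.3.1 Window Join (produces a Cartesian product)

The most basic time-based operation is of course the time window. The Window API introduced earlier mainly deals with processing a single data stream over certain time periods. What if we want to merge the data of two streams and likewise process and aggregate it over a time period?

Flink provides a dedicated window join operator for this scenario: it defines a time window and pairs up, within that window, the data from the two streams that share a common key.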

1. Calling a Window Join

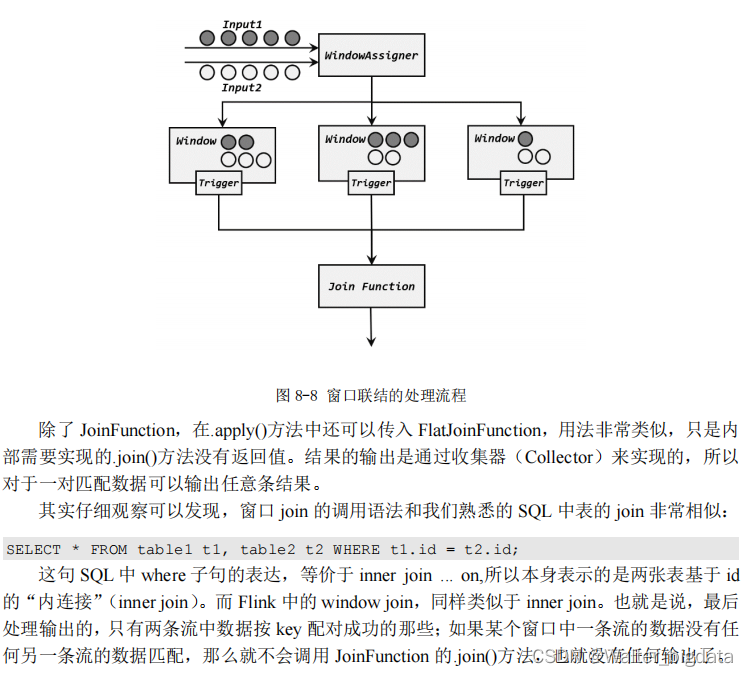

2. How a Window Join Is Processed

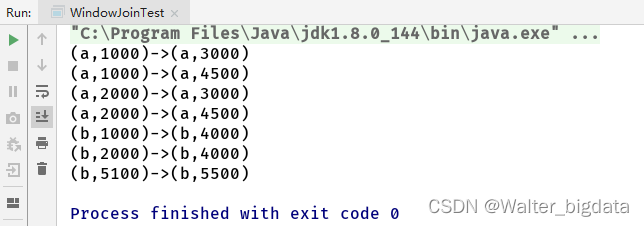

3. Window Join Example

package com.scy.chapter08;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.JoinFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import java.time.Duration;

public class WindowJoinTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Tuple2<String,Long>> stream1 = env.fromElements(

Tuple2.of("a", 1000L),

Tuple2.of("b", 1000L),

Tuple2.of("a", 2000L),

Tuple2.of("b", 2000L),

Tuple2.of("b", 5100L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}));

SingleOutputStreamOperator<Tuple2<String,Integer>> stream2 = env.fromElements(

Tuple2.of("a", 3000),

Tuple2.of("b", 4000),

Tuple2.of("a", 4500),

Tuple2.of("b", 5500)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple2<String, Integer>>forBoundedOutOfOrderness(Duration.ZERO)

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Integer>>() {

@Override

public long extractTimestamp(Tuple2<String, Integer> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}}));

stream1.join(stream2)

.where(data -> data.f0)

.equalTo(data -> data.f0)

.window(TumblingEventTimeWindows.of(Time.seconds(5)))

.apply(new JoinFunction<Tuple2<String, Long>, Tuple2<String, Integer>, String>() {

@Override

public String join(Tuple2<String, Long> first, Tuple2<String, Integer> second) throws Exception {

return first + "->" + second;

}

}).print();

env.execute();

}

}

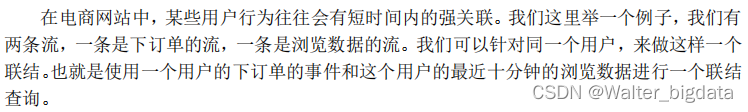
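The Cartesian-product behavior of the window join can be checked by hand with a small plain-Java simulation. This is a sketch rather than Flink code: the class WindowJoinSketch, its nested class Rec, and the method join are hypothetical names, and it assumes non-negative timestamps and tumbling windows aligned at 0, so the window of an element is its timestamp divided by the window size.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch (not Flink code) of a tumbling-window join.
final class WindowJoinSketch {
    // A keyed record with an event timestamp in milliseconds.
    static final class Rec {
        final String key;
        final long ts;

        Rec(String key, long ts) {
            this.key = key;
            this.ts = ts;
        }
    }

    // Pairs every left record with every right record that has the same key
    // and falls into the same tumbling window of the given size.
    static List<String> join(List<Rec> left, List<Rec> right, long windowSizeMs) {
        List<String> out = new ArrayList<>();
        for (Rec l : left) {
            for (Rec r : right) {
                boolean sameWindow = l.ts / windowSizeMs == r.ts / windowSizeMs;
                if (l.key.equals(r.key) && sameWindow) {
                    out.add("(" + l.key + "," + l.ts + ")->(" + r.key + "," + r.ts + ")");
                }
            }
        }
        return out;
    }
}
```

Applied to the data of the example above with a 5-second window, key a yields 2 x 2 = 4 pairs in the window [0, 5000), key b yields 2 x 1 = 2 pairs in the same window, and key b yields one more pair in [5000, 10000), for 7 pairs in total. The order of the pairs in this sketch need not match the order in which Flink prints them.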

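8.3.2 Interval Join (equivalent to an inner join)

In some scenarios the time span we need to handle is not fixed. In a trading system, for example, every transaction must be verified in real time to ensure that the amount transferred out of one account equals the amount transferred into the other; this is known as real-time reconciliation. The two transfer records may be written to different log streams, and their timestamps should be close together, so we can check whether matching debit and credit records appear within a certain period. Tumbling or sliding windows are clearly the wrong tool here, because the two matching records may fall on either side of a window boundary, leaving neither window with a match. Session windows have no fixed length, but they are clearly unsuitable for this scenario as well. Window joins based on time windows cannot solve this problem.

To address this need, Flink provides a merging operation called the interval join. As the name suggests, for each element of one stream it opens up an interval of time before and after that element's timestamp and checks whether matching data from the other stream arrives within it.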
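The matching condition of an interval join can be written down in a few lines of plain Java. This is only a sketch of the rule, not Flink code: the class IntervalJoinSketch and the method matches are hypothetical names, and the bounds used in the note below (5 seconds before, 10 seconds after) are illustrative assumptions. It assumes Flink's default behavior, in which both bounds are inclusive, and it leaves out the check that both elements have the same key.

```java
// Plain-Java sketch (not Flink code) of the interval join matching condition.
final class IntervalJoinSketch {
    // A right-side element matches a left-side element with the same key when
    // leftTs + lowerBound <= rightTs <= leftTs + upperBound (both bounds inclusive).
    static boolean matches(long leftTs, long rightTs, long lowerBoundMs, long upperBoundMs) {
        return rightTs >= leftTs + lowerBoundMs && rightTs <= leftTs + upperBoundMs;
    }
}
```

With bounds of -5000 ms and +10000 ms, a left element at 5000 matches right elements with timestamps from 0 to 15000, so a right element at 1000 matches while one at 25000 does not. Only elements that find a partner produce output, which is why the result corresponds to an inner join.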

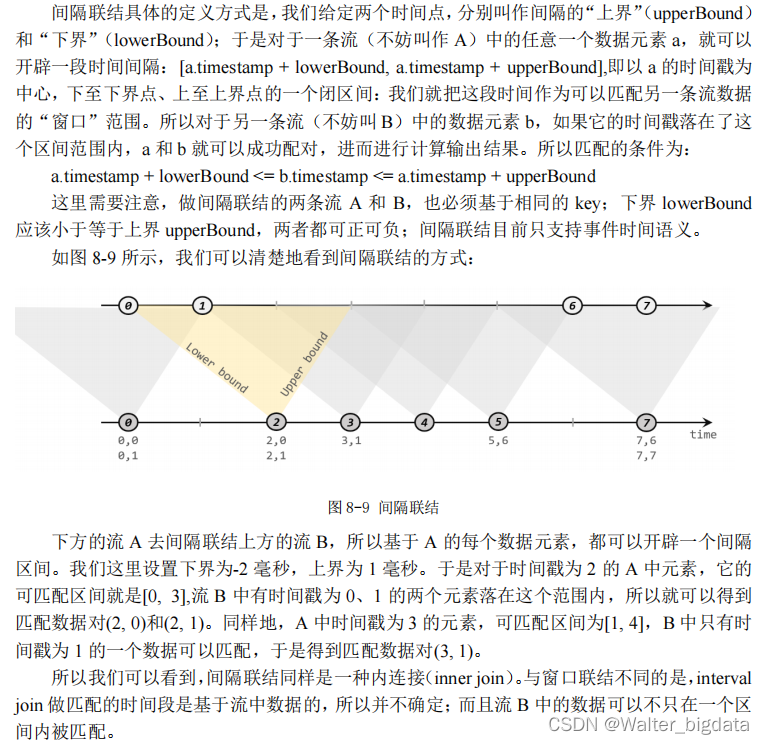

1. How an interval join works

2. Calling an interval join

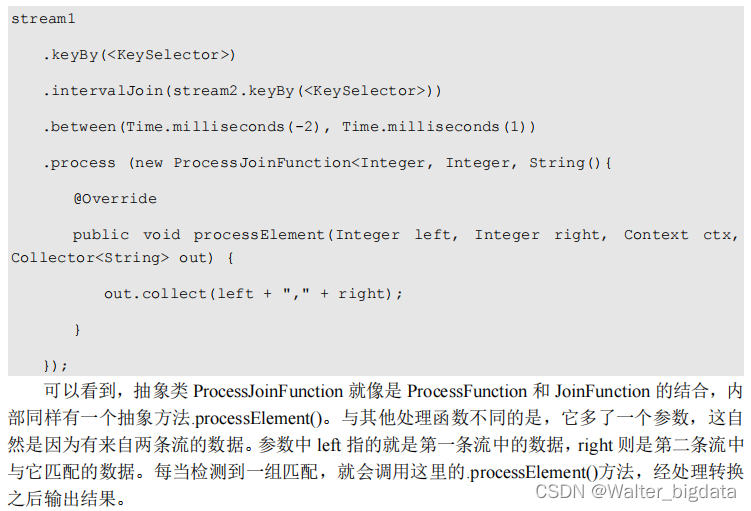

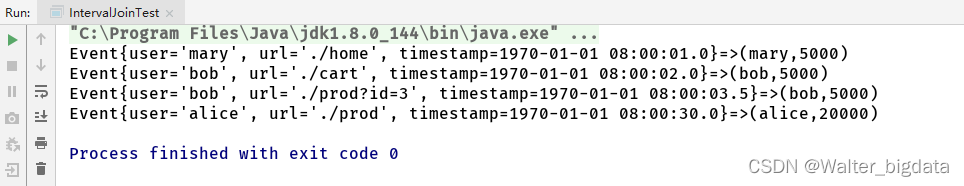

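In code, an interval join is a join operation on a KeyedStream. Once a DataStream has been turned into a KeyedStream with keyBy, you can call .intervalJoin() to combine it with another stream. The argument is also a KeyedStream, and the two streams must have the same key type. The call returns a KeyedStream.IntervalJoin. The steps that follow are again fixed: first call .between() to set the lower and upper bounds of the interval, which yields an IntervalJoined, then call .process() to define how each matched pair is handled. The .process() call takes a process function, the last member of the process function family: ProcessJoinFunction.

The general call pattern is stream1.keyBy(...).intervalJoin(stream2.keyBy(...)).between(lowerBound, upperBound).process(new ProcessJoinFunction<...>() { ... }).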

3. An interval join example

```java
package com.scy.chapter08;

import com.scy.chapter01.Event;
import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.co.ProcessJoinFunction;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

import java.time.Duration;

public class IntervalJoinTest {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Order stream: (user, order timestamp in ms)
        SingleOutputStreamOperator<Tuple2<String, Long>> orderStream = env.fromElements(
                Tuple2.of("mary", 5000L),
                Tuple2.of("bob", 5000L),
                Tuple2.of("alice", 20000L),
                Tuple2.of("cary", 51000L)
        ).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ZERO)
                .withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {
                    @Override
                    public long extractTimestamp(Tuple2<String, Long> element, long recordTimestamp) {
                        return element.f1;
                    }
                }));

        // Click stream: (user, url, click timestamp in ms)
        SingleOutputStreamOperator<Event> clickStream = env.fromElements(
                new Event("alice", "./home", 1000L),
                new Event("mary", "./home", 1000L),
                new Event("bob", "./cart", 2000L),
                new Event("scy", "./first", 5000L),
                new Event("bob", "./prod?id=1", 25000L),
                new Event("bob", "./prod?id=2", 30000L),
                new Event("mary", "./cart", 20000L),
                new Event("bob", "./prod?id=3", 3500L),
                new Event("bob", "./home", 40000L),
                new Event("scy", "./home", 60000L),
                new Event("alice", "./prod", 30000L)
        ).assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ZERO)
                .withTimestampAssigner(new SerializableTimestampAssigner<Event>() {
                    @Override
                    public long extractTimestamp(Event event, long recordTimestamp) {
                        return event.timestamp;
                    }
                }));

        // For each order, match the same user's clicks from 5 s before to 10 s after it
        orderStream.keyBy(data -> data.f0)
                .intervalJoin(clickStream.keyBy(data -> data.user))
                .between(Time.seconds(-5), Time.seconds(10))
                .process(new ProcessJoinFunction<Tuple2<String, Long>, Event, String>() {
                    @Override
                    public void processElement(Tuple2<String, Long> left, Event right, Context ctx, Collector<String> out) throws Exception {
                        out.collect(right + "=>" + left);
                    }
                }).print();

        env.execute();
    }
}
```

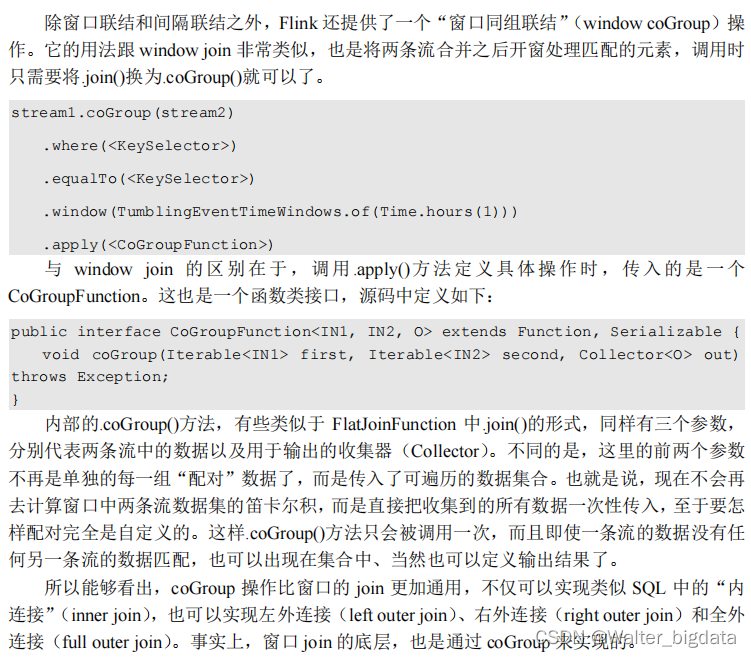
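As a cross-check of the program above, here is a plain-Java sketch (no Flink dependency) that applies the interval condition to the same test data: a click matches an order of the same user when its timestamp lies between 5 seconds before and 10 seconds after the order timestamp, both bounds inclusive. The class and helper names are hypothetical, and the sketch ignores watermarks and late data, so it only shows which pairs satisfy the condition, not the order or format in which Flink would print them.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: replays the example's test data in plain Java, without Flink.
// All names here are hypothetical; watermarks and late data are ignored.
final class IntervalJoinReplay {

    // Emits one line per (click, order) pair with the same user whose click
    // timestamp lies in [orderTs + lower, orderTs + upper], bounds inclusive.
    static List<String> join(String[] orderUsers, long[] orderTs,
                             String[][] clicks, long[] clickTs,
                             long lower, long upper) {
        List<String> result = new ArrayList<>();
        for (int i = 0; i < orderUsers.length; i++) {
            for (int j = 0; j < clicks.length; j++) {
                boolean sameKey = orderUsers[i].equals(clicks[j][0]);
                boolean inRange = clickTs[j] >= orderTs[i] + lower
                        && clickTs[j] <= orderTs[i] + upper;
                if (sameKey && inRange) {
                    result.add(clicks[j][0] + " " + clicks[j][1] + "@" + clickTs[j]
                            + " => " + orderUsers[i] + "@" + orderTs[i]);
                }
            }
        }
        return result;
    }

    // The order and click data from IntervalJoinTest, with bounds of -5 s and +10 s.
    static List<String> replayExample() {
        String[] orderUsers = {"mary", "bob", "alice", "cary"};
        long[] orderTs = {5000L, 5000L, 20000L, 51000L};
        String[][] clicks = {
            {"alice", "./home"}, {"mary", "./home"}, {"bob", "./cart"},
            {"scy", "./first"}, {"bob", "./prod?id=1"}, {"bob", "./prod?id=2"},
            {"mary", "./cart"}, {"bob", "./prod?id=3"}, {"bob", "./home"},
            {"scy", "./home"}, {"alice", "./prod"}
        };
        long[] clickTs = {1000L, 1000L, 2000L, 5000L, 25000L, 30000L,
                          20000L, 3500L, 40000L, 60000L, 30000L};
        return join(orderUsers, orderTs, clicks, clickTs, -5000L, 10000L);
    }

    public static void main(String[] args) {
        for (String line : replayExample()) {
            System.out.println(line);
        }
    }
}
```

Under these assumptions four pairs qualify: mary's ./home click, bob's ./cart and ./prod?id=3 clicks, and alice's ./prod click, which sits exactly on the upper bound (20000 + 10000). The order from cary has no clicks at all, so it produces nothing, which is the inner-join behavior named in the section heading.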

8.3.3 Window CoGroup (can also implement a window join)

Below is a coGroup example:

```java
package com.scy.chapter08;

import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.CoGroupFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

import java.time.Duration;

public class CoGroupTest {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // First stream: (key, timestamp in ms)
        SingleOutputStreamOperator<Tuple2<String, Long>> stream1 = env.fromElements(
                Tuple2.of("a", 1000L),
                Tuple2.of("b", 1000L),
                Tuple2.of("a", 2000L),
                Tuple2.of("b", 2000L),
                Tuple2.of("b", 5100L)
        ).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ZERO)
                .withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {
                    @Override
                    public long extractTimestamp(Tuple2<String, Long> element, long recordTimestamp) {
                        return element.f1;
                    }
                }));

        // Second stream: (key, timestamp in ms), stored as Integer
        SingleOutputStreamOperator<Tuple2<String, Integer>> stream2 = env.fromElements(
                Tuple2.of("a", 3000),
                Tuple2.of("b", 4000),
                Tuple2.of("a", 4500),
                Tuple2.of("b", 5500)
        ).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple2<String, Integer>>forBoundedOutOfOrderness(Duration.ZERO)
                .withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Integer>>() {
                    @Override
                    public long extractTimestamp(Tuple2<String, Integer> element, long recordTimestamp) {
                        return element.f1;
                    }
                }));

        // Group both streams by key within 5-second tumbling event-time windows
        stream1.coGroup(stream2)
                .where(data -> data.f0)
                .equalTo(data -> data.f0)
                .window(TumblingEventTimeWindows.of(Time.seconds(5)))
                .apply(new CoGroupFunction<Tuple2<String, Long>, Tuple2<String, Integer>, String>() {
                    @Override
                    public void coGroup(Iterable<Tuple2<String, Long>> first, Iterable<Tuple2<String, Integer>> second, Collector<String> collector) throws Exception {
                        // Each call receives the whole group from each stream, not a single pair
                        collector.collect(first + "=>" + second);
                    }
                }).print();

        env.execute();
    }
}
```
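The heading's claim that a window coGroup can reproduce a window join can be illustrated without Flink. In the sketch below, a hypothetical innerJoin method plays the role of the body of CoGroupFunction.coGroup: it receives all elements of one key and one window from each stream and emits every pair, which is what a window join produces. The class name, the method name, and the use of java.util.function.Consumer in place of Flink's Collector are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.function.Consumer;

// Sketch only: plain Java stand-in for the body of a CoGroupFunction.
final class CoGroupAsJoinSketch {

    // Emits the Cartesian product of the two groups; emits nothing if either is empty.
    static <L, R> void innerJoin(Iterable<L> first, Iterable<R> second, Consumer<String> out) {
        for (L left : first) {
            for (R right : second) {
                out.accept(left + "->" + right);
            }
        }
    }

    public static void main(String[] args) {
        List<String> results = new ArrayList<>();
        // Timestamps of key "a" in window [0, 5000) from the example data.
        innerJoin(Arrays.asList(1000L, 2000L), Arrays.asList(3000, 4500), results::add);
        // A group with no partner on the other side produces no pairs.
        innerJoin(Arrays.asList(5100L), Collections.<Integer>emptyList(), results::add);
        System.out.println(results);
    }
}
```

Running it prints [1000->3000, 1000->4500, 2000->3000, 2000->4500]. Because the function sees both groups in full, the same hook could also emit elements that have no partner, which is why coGroup is the more general of the two operations.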

8.4 Chapter Summary
