
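1 Introduction

Hi everyone, this is Dancheng (丹成学长). Today I'd like to introduce

Shopping item association analysis based on Apriori

You can use it for your graduation project.

🧿 Topic guidance and project sharing: see the end of the article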

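1.1 Purpose

Association analysis discovers associations and correlations between the different items that users buy, for example that item A and item B are strongly correlated. It is commonly used in recommendation systems for physical stores and online e-commerce: if a customer buys item A, they are likely to buy item B as well. Mining large volumes of sales data for item combinations that are frequently bought together helps to understand users' purchasing behavior, to recommend related items based on what is sold, and to find new growth points for sales.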

2 Dataset

The dataset was collected with a web crawler; I only crawled a small portion of it.

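The columns are:

- user_id: user identifier
- item_id: item ID
- behavior_type: type of user behavior (click, favorite, add to cart, purchase, encoded as 1, 2, 3, 4 respectively)
- user_geohash: geographic location (contains null values)
- item_category: category ID (the category the item belongs to)
- time: time at which the behavior occurred

Only the user_id and item_id fields are used in this analysis.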
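As a rough illustration of how these two fields might be turned into the transactions that the algorithms below consume, here is a minimal sketch. It assumes the data is stored as CSV with the columns listed above, that only purchase rows (behavior_type 4) are relevant, and that everything one user bought counts as one transaction. The sample rows and the helper name `build_transactions` are made up for illustration and are not part of the original project.

```python
import csv
import io
from collections import defaultdict

# Made-up sample rows that follow the column layout described above.
SAMPLE_CSV = """user_id,item_id,behavior_type,user_geohash,item_category,time
u1,100,4,,10,2014-12-01 10
u1,200,4,,11,2014-12-01 11
u1,300,1,,12,2014-12-01 12
u2,100,4,,10,2014-12-02 09
u2,200,4,,11,2014-12-02 09
u3,100,3,,10,2014-12-03 20
"""

def build_transactions(csv_file, purchase_code="4"):
    """Group purchased item_ids by user_id (assumption: one basket per user)."""
    baskets = defaultdict(set)
    for row in csv.DictReader(csv_file):
        # Keep purchase rows only; clicks, favorites and cart adds are skipped.
        if row["behavior_type"] == purchase_code:
            baskets[row["user_id"]].add(row["item_id"])
    return [frozenset(items) for items in baskets.values()]

transactions = build_transactions(io.StringIO(SAMPLE_CSV))
print(transactions)
```

Grouping by user is only one possible choice; grouping by user and day would yield smaller baskets.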

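3 Goals of the analysis

1. Which item do users purchase most often?

2. Among the purchased items, which item combinations are most strongly associated?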
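The first question boils down to a frequency count. A minimal sketch, assuming the purchases are available as (user_id, item_id) pairs; the records below are hypothetical:

```python
from collections import Counter

# Hypothetical (user_id, item_id) purchase records.
purchases = [
    ("u1", "A"), ("u1", "B"),
    ("u2", "A"), ("u2", "B"),
    ("u3", "A"), ("u3", "C"),
]

# Count how many times each item was purchased.
item_counts = Counter(item for _, item in purchases)
top_item, top_count = item_counts.most_common(1)[0]
print(top_item, top_count)
```

The second question is what the association-rule algorithms in the following sections address.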

4 Data cleaning and model building

The most commonly used association analysis algorithms are Apriori and FP-growth.

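5 Introduction to the Apriori algorithm

5.1 Basic principle of Apriori

Apriori is a classic data mining algorithm for finding frequent itemsets and association rules; it can uncover hidden relationships between items in large datasets. Its core idea is to generate candidate itemsets by joining and compute their support, then prune the candidates to obtain the frequent itemsets.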

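- Itemset: a set containing zero or more items. In market-basket transactions, every product is an item; one purchase contains several items, and any combination of those items forms an itemset.
- Support count: the number of transactions in which an itemset appears.
- Frequent itemset: a set of items that often appear together, i.e. an itemset whose support reaches the minimum support threshold.
- Association rule: suggests that a strong relationship may exist between two items.
- Support: the proportion of all transactions that contain both A and B. If P(A) denotes the proportion of transactions containing A, then Support = P(A & B).
- Confidence: among the transactions containing A, the proportion that also contain B, i.e. the number of transactions containing both A and B divided by the number containing A. As a formula: Confidence = P(A & B) / P(A).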
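To make the two formulas concrete, here is a small worked example on toy data. The transactions and the function names `support` and `confidence` are illustrative assumptions, not taken from the project code.

```python
# Toy transactions, invented for illustration.
transactions = [
    {"milk", "bread"},
    {"milk", "bread", "eggs"},
    {"milk"},
    {"bread"},
    {"milk", "eggs"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item of `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Support(A and B) divided by Support(A)."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)

s = support({"milk", "bread"}, transactions)          # 2 of 5 transactions
c = confidence({"milk"}, {"bread"}, transactions)     # 2 of the 4 milk transactions
print(s, c)
```

Here milk and bread appear together in 2 of 5 transactions (support 0.4), and 2 of the 4 transactions containing milk also contain bread (confidence 0.5).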

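Two important laws underlie Apriori:

- Law 1: if a set is a frequent itemset, then all of its subsets are frequent itemsets.
- Law 2: if a set is not a frequent itemset, then none of its supersets is a frequent itemset.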
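Law 2 is what makes pruning possible: a candidate k-itemset can be discarded without counting its support as soon as one of its (k-1)-subsets is known to be infrequent. The sketch below illustrates that check; the helper name `has_infrequent_subset` and the sample sets are assumptions made for this example, and the project code in this article does not perform this extra check.

```python
from itertools import combinations

def has_infrequent_subset(candidate, frequent_prev):
    """Return True if any (k-1)-subset of the k-item candidate is not frequent."""
    k = len(candidate)
    return any(
        frozenset(subset) not in frequent_prev
        for subset in combinations(candidate, k - 1)
    )

# Hypothetical frequent 2-itemsets.
frequent_2 = {frozenset("AB"), frozenset("AC"), frozenset("BC"), frozenset("BD")}

keep_abc = not has_infrequent_subset(frozenset("ABC"), frequent_2)
keep_abd = not has_infrequent_subset(frozenset("ABD"), frequent_2)
print(keep_abc, keep_abd)
```

{A, B, C} survives because AB, AC and BC are all frequent, while {A, B, D} is pruned because AD is not.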

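The basic steps of an Apriori implementation are:

1) Create the initial candidate set, i.e. the candidate 1-itemsets

2) Select the frequent itemsets that meet the minimum support from the candidates, and compute their support

3) From the frequent itemsets, generate new candidate itemsets containing one more item (candidate k-itemsets)

4) Repeat candidate generation and support counting until no new frequent itemsets are found

5) Mine association rules from the frequent itemsets and keep those that meet the minimum confidence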

```python
# -*- coding: utf-8 -*-
# numpy and pandas are not used by the functions below; kept from the original script.
import numpy as np
import pandas as pd


# Method 1: Apriori
def create_c1(data_set):
    """Create the initial candidate set: all candidate 1-itemsets."""
    print('Creating initial candidate 1-itemsets')
    c1 = set()
    for items in data_set:
        for item in items:
            # frozenset() returns an immutable set, which is hashable and can
            # therefore be stored in a set or used as a dictionary key
            item_set = frozenset([item])
            c1.add(item_set)
    return c1


def generate_freq_supports(data_set, item_set, min_support):
    """Select the frequent itemsets from the candidates and compute their support."""
    print('Filtering frequent itemsets and computing support')
    freq_set = set()   # frequent itemsets
    item_count = {}    # occurrence count per candidate, used to compute support
    supports = {}      # support per frequent itemset
    # Count a candidate whenever all of its items occur in a record
    for record in data_set:
        for item in item_set:
            # issubset() checks whether every element of the set is contained in the record
            if item.issubset(record):
                if item not in item_count:
                    item_count[item] = 1
                else:
                    item_count[item] += 1
    data_len = float(len(data_set))
    # Compute the support of each candidate and keep the frequent ones
    for item in item_count:
        if (item_count[item] / data_len) >= min_support:
            freq_set.add(item)
            supports[item] = item_count[item] / data_len
    return freq_set, supports


def generate_new_combinations(freq_set, k):
    """
    Generate new candidate k-itemsets from the frequent (k-1)-itemsets.
    Parameters: the frequent itemsets freq_set and the target itemset size k
    """
    print('Generating new combinations')
    new_combinations = set()        # new candidate itemsets
    sets_len = len(freq_set)        # number of frequent itemsets, used for pairwise iteration
    freq_set_list = list(freq_set)  # convert to a list so it can be indexed
    for i in range(sets_len):
        for j in range(i + 1, sets_len):
            l1 = list(freq_set_list[i])
            l2 = list(freq_set_list[j])
            l1.sort()
            l2.sort()
            # Merge two itemsets when their first k-2 items are identical
            if l1[0:k-2] == l2[0:k-2]:
                freq_item = freq_set_list[i] | freq_set_list[j]
                new_combinations.add(freq_item)
    return new_combinations


def apriori(data_set, min_support, max_len=None):
    """Repeatedly generate candidate sets and compute their support."""
    print('Generating candidate sets in a loop')
    max_items = 2   # size of the itemsets generated in the next round
    freq_sets = []  # frequent itemsets, one set per itemset size
    supports = {}   # support of every frequent itemset
    # Candidate 1-itemsets
    c1 = create_c1(data_set)
    # Frequent 1-itemsets and their support
    l1, support1 = generate_freq_supports(data_set, c1, min_support)
    freq_sets.append(l1)
    supports.update(support1)
    if max_len is None:
        max_len = float('inf')
    while max_items and max_items <= max_len:
        # Generate candidates
        ci = generate_new_combinations(freq_sets[-1], max_items)
        # Frequent itemsets and their support
        li, support = generate_freq_supports(data_set, ci, min_support)
        # Continue with the next size only if frequent itemsets were found
        if li:
            freq_sets.append(li)
            supports.update(support)
            max_items += 1
        else:
            max_items = 0
    return freq_sets, supports


def association_rules(freq_sets, supports, min_conf):
    """Generate association rules."""
    print('Generating association rules')
    rules = []
    max_len = len(freq_sets)
    # Pair each frequent k-itemset with its frequent (k+1)-supersets, so every
    # rule has a single-item consequent; keep rules that meet min_conf
    for k in range(max_len - 1):
        for freq_set in freq_sets[k]:
            for sub_set in freq_sets[k + 1]:
                if freq_set.issubset(sub_set):
                    frq = supports[sub_set]
                    conf = supports[sub_set] / supports[freq_set]
                    rule = (freq_set, sub_set - freq_set, frq, conf)
                    if conf >= min_conf:
                        print(freq_set, "-->", sub_set - freq_set, 'frq:', frq, 'conf:', conf)
                        rules.append(rule)
    return rules
```

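5.2 The FP-growth algorithm

5.2.1 Basic principle of FP-growth

FP-growth builds on the ideas behind Apriori but uses a more advanced data structure, the FP-tree, to reduce the number of dataset scans, which speeds the algorithm up considerably. FP-growth needs only two scans of the database, whereas Apriori rescans the dataset to check whether each potential frequent itemset is actually frequent. FP-growth is therefore generally faster than Apriori.

Advantage: usually faster than Apriori.

Disadvantages: harder to implement, and performance degrades on some datasets.

Applicable data type: discrete (nominal) data.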
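The two scans can be sketched as follows: the first pass counts items, the second pass filters and reorders each transaction and inserts it into a prefix tree so that shared prefixes are stored once. This is a simplified illustration using nested dictionaries, under the assumption that the minimum support is an absolute count; the function name `build_prefix_tree` is hypothetical, and the node links needed for actual mining are left out.

```python
from collections import Counter

def build_prefix_tree(transactions, min_count):
    """Two-pass construction of a simplified FP-tree as nested dicts."""
    # Scan 1: count in how many transactions each item occurs.
    counts = Counter(item for t in transactions for item in set(t))
    frequent = {item: c for item, c in counts.items() if c >= min_count}

    # Scan 2: drop infrequent items, order by descending count
    # (ties broken by item name), then insert along a shared path.
    root = {}
    for t in transactions:
        ordered = sorted(
            (item for item in set(t) if item in frequent),
            key=lambda item: (-frequent[item], item),
        )
        node = root
        for item in ordered:
            entry = node.setdefault(item, {"count": 0, "children": {}})
            entry["count"] += 1
            node = entry["children"]
    return root, frequent

transactions = [["a", "b", "c"], ["a", "b"], ["a", "d"], ["b", "c"]]
tree, frequent = build_prefix_tree(transactions, min_count=2)
print(tree["a"]["count"], tree["a"]["children"]["b"]["count"])
```

Three of the four transactions share the prefix `a`, so they occupy a single branch whose counters record how many transactions pass through each node.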

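One more metric is relevant here: lift.

Lift: the ratio of "the proportion of transactions containing A that also contain B" to "the proportion of transactions containing B". As a formula: Lift = ( P(A & B) / P(A) ) / P(B) = P(A & B) / ( P(A) · P(B) ).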
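A quick numeric check of the formula, using made-up counts (100 transactions, 20 containing A, 25 containing B, 10 containing both); the function name `lift` and its parameters are chosen for this example only:

```python
def lift(n_total, n_a, n_b, n_ab):
    """Lift of the rule A -> B computed from raw transaction counts."""
    p_a = n_a / n_total
    p_b = n_b / n_total
    p_ab = n_ab / n_total
    return p_ab / (p_a * p_b)

value = lift(n_total=100, n_a=20, n_b=25, n_ab=10)
print(value)
```

With these numbers the confidence of A → B is 10 / 20 = 0.5, while B occurs in only 25% of all transactions, so the lift is about 2. A lift above 1 suggests that buying A makes buying B more likely, a lift of 1 suggests independence, and a lift below 1 suggests that the two items tend not to be bought together.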

5.2.2 Basic implementation of FP-growth

```python
# encoding: utf-8
"""
FP-growth is an algorithm for generating frequent itemsets. It relies mainly on
the FP-tree data structure, and the whole process needs only two passes over
the dataset.
"""

from collections import defaultdict, namedtuple

# defaultdict is a dict subclass, and namedtuple creates tuple subclasses.
# A defaultdict behaves like a dict with a default value: looking up a missing
# key in a plain dict raises KeyError, whereas a defaultdict creates the key
# with the default value instead.
# namedtuple produces objects whose elements can be accessed by name, which
# usually makes code more readable.


def find_frequent_itemsets(transactions, minimum_support, include_support=False):
    """
    Mine frequent itemsets; yields each frequent itemset, together with its
    support (an absolute count) when include_support is True.

    `transactions` is iterated twice, so it must be a re-iterable collection
    such as a list, not a one-shot generator.
    """
    items = defaultdict(lambda: 0)  # mapping from items to their supports

    # Load the passed-in transactions and count the support that individual
    # items have.
    for transaction in transactions:
        for item in transaction:
            items[item] += 1

    # Remove infrequent items from the item support dictionary.
    items = dict((item, support) for item, support in items.items()
                 if support >= minimum_support)

    # Build our FP-tree. Before any transactions can be added to the tree, they
    # must be stripped of infrequent items and their surviving items must be
    # sorted in decreasing order of frequency.
    def clean_transaction(transaction):
        transaction = filter(lambda v: v in items, transaction)
        # Work on a temporary list so the caller's transaction is not modified
        transaction_list = list(transaction)
        transaction_list.sort(key=lambda v: items[v], reverse=True)
        return transaction_list

    master = FPTree()
    for transaction in map(clean_transaction, transactions):
        master.add(transaction)

    def find_with_suffix(tree, suffix):
        for item, nodes in tree.items():
            support = sum(n.count for n in nodes)
            if support >= minimum_support and item not in suffix:
                # New winner!
                found_set = [item] + suffix
                yield (found_set, support) if include_support else found_set

                # Build a conditional tree and recursively search for frequent
                # itemsets within it.
                cond_tree = conditional_tree_from_paths(tree.prefix_paths(item))
                for s in find_with_suffix(cond_tree, found_set):
                    yield s  # pass along the good news to our caller

    # Search for frequent itemsets, and yield the results we find.
    for itemset in find_with_suffix(master, []):
        yield itemset
```

```python
class FPTree(object):
    """
    An FP-tree.

    Every item must be hashable, i.e. usable as a dictionary key or a set member.
    """

    Route = namedtuple('Route', 'head tail')

    def __init__(self):
        # The root node of the tree.
        self._root = FPNode(self, None, None)

        # A dictionary mapping items to the head and tail of a path of
        # "neighbors" that will hit every node containing that item.
        self._routes = {}

    @property
    def root(self):
        """The root node of the tree."""
        return self._root

    def add(self, transaction):
        """Add a transaction to the tree."""
        point = self._root

        for item in transaction:
            next_point = point.search(item)
            if next_point:
                # There is already a node in this tree for the current
                # transaction item; reuse it.
                next_point.increment()
            else:
                # Create a new point and add it as a child of the point we're
                # currently looking at.
                next_point = FPNode(self, item)
                point.add(next_point)

                # Update the route of nodes that contain this item to include
                # our new node.
                self._update_route(next_point)

            point = next_point

    def _update_route(self, point):
        """Add the given node to the route through all nodes for its item."""
        assert self is point.tree

        try:
            route = self._routes[point.item]
            route[1].neighbor = point  # route[1] is the tail
            self._routes[point.item] = self.Route(route[0], point)
        except KeyError:
            # First node for this item; start a new route.
            self._routes[point.item] = self.Route(point, point)

    def items(self):
        """
        Generate one 2-tuple for each item represented in the tree. The first
        element of the tuple is the item itself, and the second element is a
        generator that will yield the nodes in the tree that belong to the item.
        """
        for item in self._routes.keys():
            yield (item, self.nodes(item))

    def nodes(self, item):
        """
        Generate the sequence of nodes that contain the given item.
        """
        try:
            node = self._routes[item][0]
        except KeyError:
            return

        while node:
            yield node
            node = node.neighbor

    def prefix_paths(self, item):
        """Generate the prefix paths that end with the given item."""

        def collect_path(node):
            path = []
            while node and not node.root:
                path.append(node)
                node = node.parent
            path.reverse()
            return path

        return (collect_path(node) for node in self.nodes(item))

    def inspect(self):
        print('Tree:')
        self.root.inspect(1)

        print()  # blank line (the original bare `print` is a no-op in Python 3)
        print('Routes:')
        for item, nodes in self.items():
            print(' %r' % item)
            for node in nodes:
                print(' %r' % node)
```

```python
def conditional_tree_from_paths(paths):
    """Build a conditional FP-tree from the given prefix paths."""
    tree = FPTree()
    condition_item = None
    items = set()

    # Import the nodes in the paths into the new tree. Only the counts of the
    # leaf nodes matter; the remaining counts will be reconstructed from the
    # leaf counts.
    for path in paths:
        if condition_item is None:
            condition_item = path[-1].item

        point = tree.root
        for node in path:
            next_point = point.search(node.item)
            if not next_point:
                # Add a new node to the tree.
                items.add(node.item)
                count = node.count if node.item == condition_item else 0
                next_point = FPNode(tree, node.item, count)
                point.add(next_point)
                tree._update_route(next_point)
            point = next_point

    assert condition_item is not None

    # Calculate the counts of the non-leaf nodes.
    for path in tree.prefix_paths(condition_item):
        count = path[-1].count
        for node in reversed(path[:-1]):
            node._count += count

    return tree
```

```python
class FPNode(object):
    """A node in an FP-tree."""

    def __init__(self, tree, item, count=1):
        self._tree = tree
        self._item = item
        self._count = count
        self._parent = None
        self._children = {}
        self._neighbor = None

    def add(self, child):
        """Add the given FPNode `child` as a child of this node."""
        if not isinstance(child, FPNode):
            raise TypeError("Can only add other FPNodes as children")

        if child.item not in self._children:
            self._children[child.item] = child
            child.parent = self

    def search(self, item):
        """
        Check whether this node contains a child node for the given item.
        If so, that node is returned; otherwise, `None` is returned.
        """
        try:
            return self._children[item]
        except KeyError:
            return None

    def __contains__(self, item):
        return item in self._children

    @property
    def tree(self):
        """The tree in which this node appears."""
        return self._tree

    @property
    def item(self):
        """The item contained in this node."""
        return self._item

    @property
    def count(self):
        """The count associated with this node's item."""
        return self._count

    def increment(self):
        """Increment the count associated with this node's item."""
        if self._count is None:
            raise ValueError("Root nodes have no associated count.")
        self._count += 1

    @property
    def root(self):
        """True if this node is the root of a tree; false if otherwise."""
        return self._item is None and self._count is None

    @property
    def leaf(self):
        """True if this node is a leaf in the tree; false if otherwise."""
        return len(self._children) == 0

    @property
    def parent(self):
        """The node's parent"""
        return self._parent

    @parent.setter
    def parent(self, value):
        if value is not None and not isinstance(value, FPNode):
            raise TypeError("A node must have an FPNode as a parent.")
        if value and value.tree is not self.tree:
            raise ValueError("Cannot have a parent from another tree.")
        self._parent = value

    @property
    def neighbor(self):
        """
        The node's neighbor; the one with the same value that is "to the right"
        of it in the tree.
        """
        return self._neighbor

    @neighbor.setter
    def neighbor(self, value):
        if value is not None and not isinstance(value, FPNode):
            raise TypeError("A node must have an FPNode as a neighbor.")
        if value and value.tree is not self.tree:
            raise ValueError("Cannot have a neighbor from another tree.")
        self._neighbor = value

    @property
    def children(self):
        """The nodes that are children of this node."""
        # dict.itervalues() exists only in Python 2; values() works in Python 3
        return tuple(self._children.values())

    def inspect(self, depth=0):
        print((' ' * depth) + repr(self))
        for child in self.children:
            child.inspect(depth + 1)

    def __repr__(self):
        if self.root:
            return "<%s (root)>" % type(self).__name__
        return "<%s %r (%r)>" % (type(self).__name__, self.item, self.count)
```
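The FP-growth code above only produces frequent itemsets with their support counts; the rules, confidence and lift reported in the next section still have to be derived from them. One possible way to do that is sketched below. It assumes the itemsets have been collected into a dictionary that maps each `frozenset` to its absolute count (for instance from `find_frequent_itemsets(..., include_support=True)`), and that the items are sortable. The function name `rules_from_counts` and the sample counts are hypothetical.

```python
from itertools import combinations

def rules_from_counts(counts, n_transactions, min_conf):
    """Derive (antecedent, consequent, support, confidence, lift) tuples.

    `counts` maps frozenset itemsets to absolute support counts.
    """
    rules = []
    for itemset, count in counts.items():
        if len(itemset) < 2:
            continue
        # Try every non-empty proper subset as the antecedent.
        for size in range(1, len(itemset)):
            for combo in combinations(sorted(itemset), size):
                antecedent = frozenset(combo)
                consequent = itemset - antecedent
                if antecedent not in counts or consequent not in counts:
                    continue  # support of one side is unknown
                conf = count / counts[antecedent]
                if conf >= min_conf:
                    lift = conf / (counts[consequent] / n_transactions)
                    rules.append((antecedent, consequent,
                                  count / n_transactions, conf, lift))
    return rules

# Hypothetical counts from 5 transactions.
counts = {frozenset(["A"]): 4, frozenset(["B"]): 3, frozenset(["A", "B"]): 2}
rules = rules_from_counts(counts, n_transactions=5, min_conf=0.6)
print(rules)
```

With these counts only B → A passes the threshold (confidence 2/3), while A → B is dropped (confidence 2/4).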

5.3 Association analysis results for the item data

Partial screenshots of the output are shown below.

Frequent itemsets:

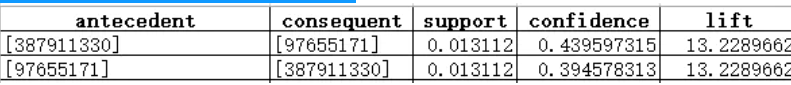

Association rules:

5.4 结论

1、 频繁项集我按支持度进行了排序,商品编号112921337最受欢迎,远远高于其他商品;

2、 从总体上看,所有组合商品中支持度数值偏低,这是由于平台销售的商品种类繁多;

3、 所有商品组合按支持度从高到低排序,

商品组合中 [387911330] à [97655171] 和 [97655171] à [387911330] 支持度最高,但是商品组合[387911330] à [97655171]的置信度最高,表示购买商品编号387911330的用户中有44%会购买商品编号97655171,可以对这两种商品进行捆绑销售;

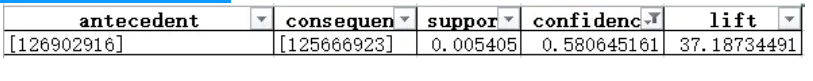

4、 置信度最高的商品组合是

购买商品126902916的用户最大可能会购买商品125666923,但出现的概率偏低;

6 Finally: graduation project help

**Graduation project help, topic guidance, project sharing:** https://gitee.com/yaa-dc/warehouse-1/blob/master/python/README.md

