For the theory, see: http://blog.csdn.net/sadfasdgaaaasdfa/article/details/46850803

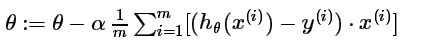

Gradient formula:
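For reference, here are the hypothesis, the squared-error cost, its gradient, the update that the gradient-descent branch of the code performs, and the closed form that the normal-equation branch computes. Here m is the number of samples, α is the learning rate, and each x^{(i)} includes a leading 1 so that θ_0 acts as the intercept. This is a sketch reconstructed from the code, not the original figure:

```latex
h_\theta(x) = \theta^T x = \theta_0 + \theta_1 x_1 + \cdots + \theta_n x_n \\
J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2 \\
\frac{\partial J}{\partial \theta_j} = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)} \\
\theta_j := \theta_j - \alpha \frac{\partial J}{\partial \theta_j}, \qquad j = 0, 1, \ldots, n \\
\theta = (X^T X)^{-1} X^T y
```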

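```python
__author__ = 'Chen'

import numpy as np


def linearRegresion(x, y, type=True, alpha=0.01):
    """Fit y = theta0 + theta1*x1 + ... + thetan*xn.

    type=True  -> normal equation (returns a column vector)
    type=False -> batch gradient descent (returns a row vector)
    """
    xrow = np.shape(x)[0]   # number of samples m
    xcol = np.shape(x)[1]   # number of features n

    x = np.matrix(x)
    Y = np.matrix(y)

    # fill ones: prepend a column of ones for the intercept theta0
    xone = np.ones((xrow, 1))
    X = np.matrix(np.hstack((xone, x)))

    if type:
        # normal equation: theta = (X^T X)^-1 X^T Y
        theta = (X.T * X).I * X.T * Y
        return theta
    else:
        # gradient descent, starting from a random 1 x (n+1) row vector
        theta = np.matrix(np.random.random(xcol + 1))
        # iterations
        for iteration in range(1, 10000):
            sums = 0
            # accumulate (h_theta(x_i) - y_i) * x_i over all samples
            for i in range(xrow):
                sums += (theta * X[i, :].T - Y[i, :]) * X[i, :]
            theta -= alpha * sums / xrow
        return theta
```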
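The inner loop over samples can also be replaced by one matrix-vector product per iteration. The following is a minimal sketch, not the author's code: the helper name `gradient_descent`, the `iterations` parameter and the zero starting point (instead of a random one) are assumptions made for illustration.

```python
import numpy as np


def gradient_descent(x, y, alpha=0.01, iterations=10000):
    """Vectorized batch gradient descent for linear regression (hypothetical helper).

    Same update rule as the loop above, but the sum over samples is computed
    with matrix-vector products. Returns a 1-D array [theta0, ..., thetan].
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float).ravel()   # accept [[1], [2], ...] or [1, 2, ...]
    m = x.shape[0]
    X = np.hstack((np.ones((m, 1)), x))      # prepend the intercept column
    theta = np.zeros(X.shape[1])             # deterministic start (assumption)
    for _ in range(iterations):
        residual = X.dot(theta) - y          # h_theta(x_i) - y_i for every sample
        theta -= alpha * X.T.dot(residual) / m
    return theta


x = [[0, 1, 0], [0, 0, 1], [0, 1, 1], [1, 1, 1]]
y = [[1], [2], [3], [4]]
print(gradient_descent(x, y))
```

With the default 10000 iterations the result is close to, but not exactly, the normal-equation solution (0, 1, 1, 2); increasing `iterations` tightens it, because at `alpha=0.01` the error along the smallest-eigenvalue direction of XᵀX shrinks slowly.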

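```python
x = [[0, 1, 0], [0, 0, 1], [0, 1, 1], [1, 1, 1]]
y = [[1], [2], [3], [4]]

# calculate linear regression by the normal equation
theta1 = linearRegresion(x, y)
print(theta1)

# calculate linear regression by gradient descent
theta2 = linearRegresion(x, y, False)
print(theta2)
```

Output:

```
C:\Python27\python.exe C:/Users/Chen/PycharmProjects/mypython/courseraML/LinearRegression.py
[[ 0.]
 [ 1.]
 [ 1.]
 [ 2.]]
[[ 0.00709026 1.00390811 0.99480722 1.99480246]]

Process finished with exit code 0
```

The normal equation returns the exact solution θ = (0, 1, 1, 2) as a column vector, while gradient descent returns a row vector close to it. Because θ starts from random values, the trailing digits of the gradient-descent result differ from run to run.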
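To cross-check the normal-equation result without inverting XᵀX explicitly, NumPy's `np.linalg.lstsq` and `np.linalg.solve` can be used. This is a sketch that is not part of the original post and assumes NumPy 1.14 or newer (for `rcond=None`):

```python
import numpy as np

x = np.array([[0, 1, 0], [0, 0, 1], [0, 1, 1], [1, 1, 1]], dtype=float)
y = np.array([1, 2, 3, 4], dtype=float)

# design matrix: a column of ones (intercept) followed by the features
X = np.hstack((np.ones((x.shape[0], 1)), x))

# least squares without forming the inverse of X^T X
theta, residuals, rank, sv = np.linalg.lstsq(X, y, rcond=None)

# normal equation solved as a linear system instead of via .I
theta_ne = np.linalg.solve(X.T.dot(X), X.T.dot(y))

print(theta)
print(theta_ne)
```

Solving the system is generally more numerically stable than computing `(X.T * X).I`, and `lstsq` also handles the case where XᵀX is singular.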
