Contents

Fine-Grained Image Quality Assessment: A Revisit and Further Thinking

GraphIQA: Learning Distortion Graph Representations for Blind Image Quality Assessment

Hallucinated-IQA: No-Reference Image Quality Assessment via Adversarial Learning

No-Reference Image Quality Assessment: An Attention Driven Approach

VCRNet: Visual Compensation Restoration Network for No-Reference Image Quality Assessment

-

Fine-Grained Image Quality Assessment: A Revisit and Further Thinking

Source: IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS FOR VIDEO TECHNOLOGY, VOL. 32, NO. 5, MAY 2022

Viewpoint: the coarse-grained statistical results reported on existing databases mask fine-grained differences in quality.

Challenges: 1. the lack of large-scale databases; 2. the influence of the reference image.

Possible methods: 1. using image pairs; 2. combining IQA with other possible processing tasks (multi-task learning).
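To make the image-pair idea concrete: one common way to learn from pairs is a margin ranking loss that only asks the model to put two images in the right order. This is my own illustration, not a method from the paper; the function name `pairwise_hinge_loss`, the `margin` value and the use of MOS values to define the order are all assumptions.

```python
def pairwise_hinge_loss(score_a, score_b, mos_a, mos_b, margin=0.1):
    """Margin ranking loss for one image pair (hypothetical helper).

    score_a, score_b: predicted quality scores of the two images.
    mos_a, mos_b: ground-truth opinion scores; only their order is used.
    The loss is zero once the better image is predicted higher by at least `margin`.
    """
    if mos_a == mos_b:
        return 0.0  # a tie carries no ordering information
    sign = 1.0 if mos_a > mos_b else -1.0
    return max(0.0, margin - sign * (score_a - score_b))


# Correct order with a large gap: no penalty.
print(pairwise_hinge_loss(0.8, 0.3, mos_a=4.0, mos_b=2.0))
# Wrong order: penalised by the margin plus the size of the mistake.
print(pairwise_hinge_loss(0.3, 0.8, mos_a=4.0, mos_b=2.0))
```

Because only the order matters, even two images whose quality is very close can still provide a training signal, which is what fine-grained assessment needs.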

-

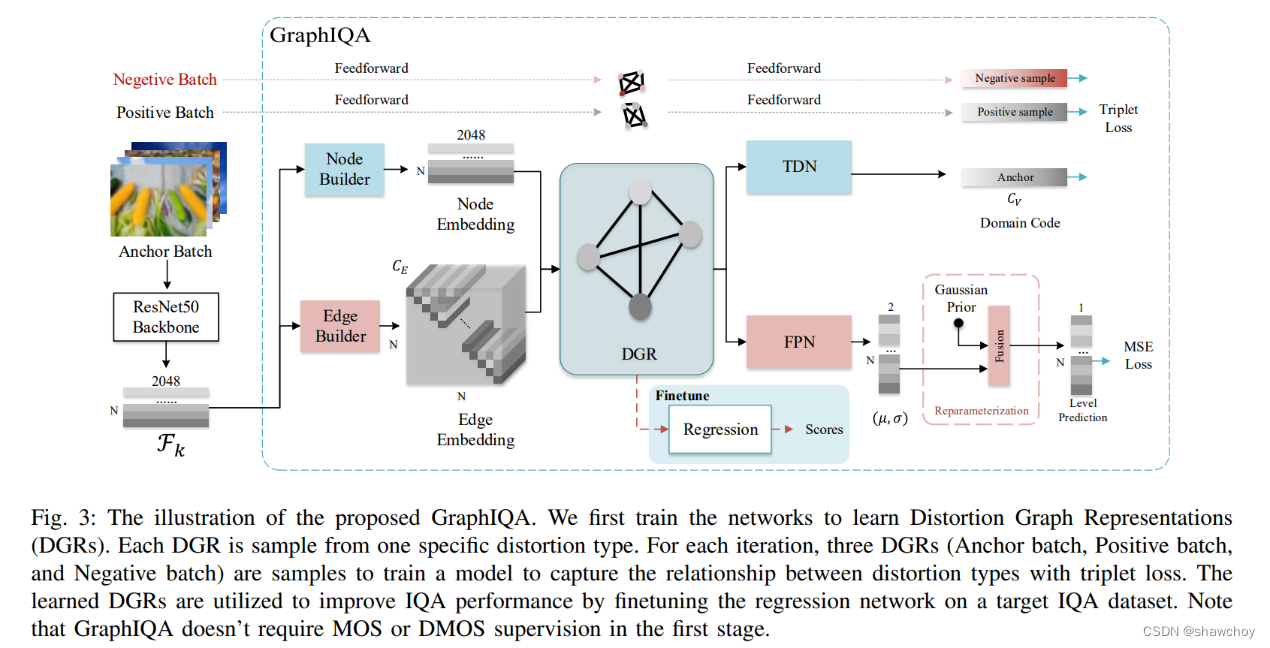

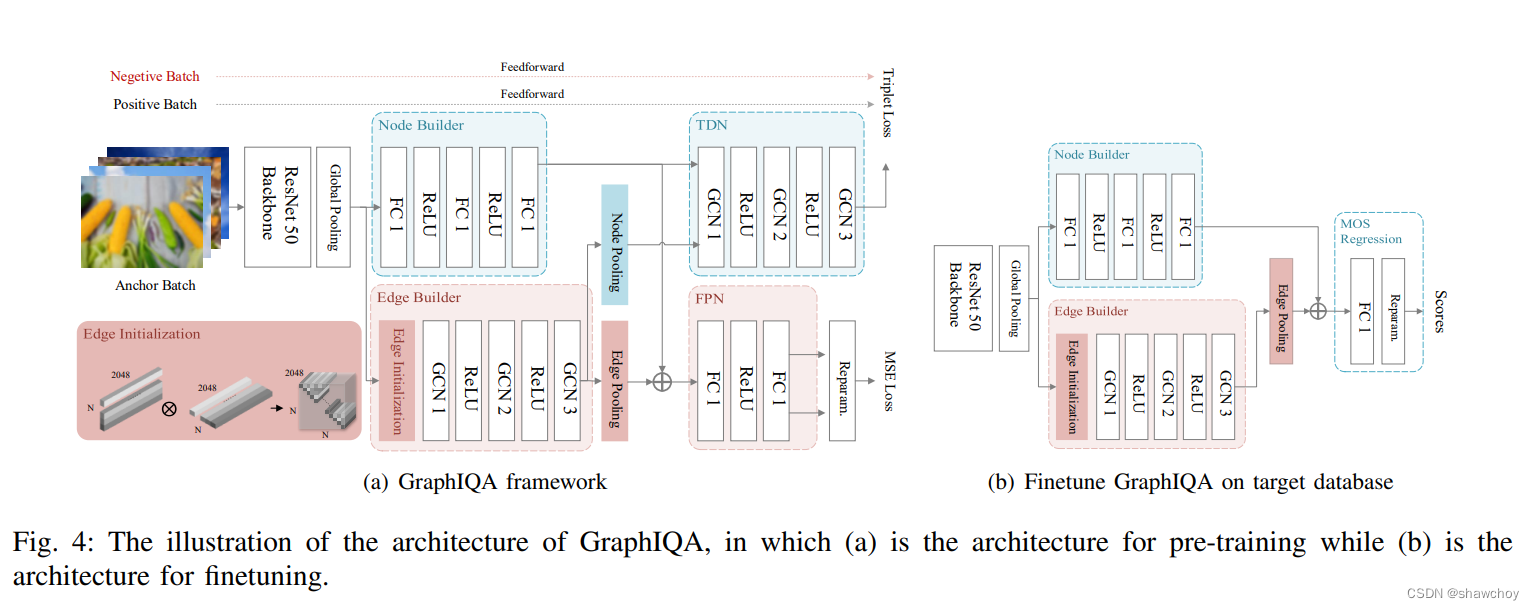

GraphIQA: Learning Distortion Graph Representations for Blind Image Quality Assessment

Source: IEEE TRANSACTIONS ON MULTIMEDIA, VOL. 14, NO. 8, MARCH 2021

Contributions: 1. Investigate the inherent relationship between distortion-related factors and their effects on perceptual quality, and propose an effective Distortion Graph Representation (DGR) learning framework, dubbed GraphIQA, for the general-purpose BIQA task. 2. Design a Type Discrimination Network (TDN) and a Fuzzy Prediction Network (FPN) to learn the proposed DGR.

Method:

-

Hallucinated-IQA: No-Reference Image Quality Assessment via Adversarial Learning ("hallucinated IQA", quite a trendy name)

Source: CVPR 2018

Contributions: 1. A Hallucination-Guided Quality Regression Network (combines the distorted image with a discrepancy map to assess quality; the discrepancy map is the difference between the distorted image and the hallucinated image) 2. A Quality-Aware Generative Network together with a quality-aware perceptual loss (generates the hallucinated image) 3. An IQA-Discriminator and an implicit ranking relationship fusion scheme (guide the generation of the hallucinated image)
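A minimal sketch of how the regression input could be built from the distorted image and the discrepancy map. I'm assuming the discrepancy map is the signed pixel-wise difference and that it is simply stacked with the distorted image along the channel axis; the function name `regression_input` is hypothetical, and the real network may combine them differently.

```python
import numpy as np

def regression_input(distorted, hallucinated):
    """Stack a distorted image with its discrepancy map (hypothetical helper).

    distorted, hallucinated: float arrays of shape (H, W, C) on the same scale.
    The discrepancy map is assumed here to be the signed pixel-wise difference.
    Returns an (H, W, 2C) array that a quality regressor could take as input.
    """
    distorted = np.asarray(distorted, dtype=float)
    hallucinated = np.asarray(hallucinated, dtype=float)
    if distorted.shape != hallucinated.shape:
        raise ValueError("images must have the same shape")
    discrepancy = distorted - hallucinated
    return np.concatenate([distorted, discrepancy], axis=-1)


rng = np.random.default_rng(0)
distorted = rng.random((32, 32, 3))
hallucinated = np.clip(distorted + 0.05, 0.0, 1.0)  # stand-in for a generator output
x = regression_input(distorted, hallucinated)
print(x.shape)  # (32, 32, 6)
```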

Approach:

-

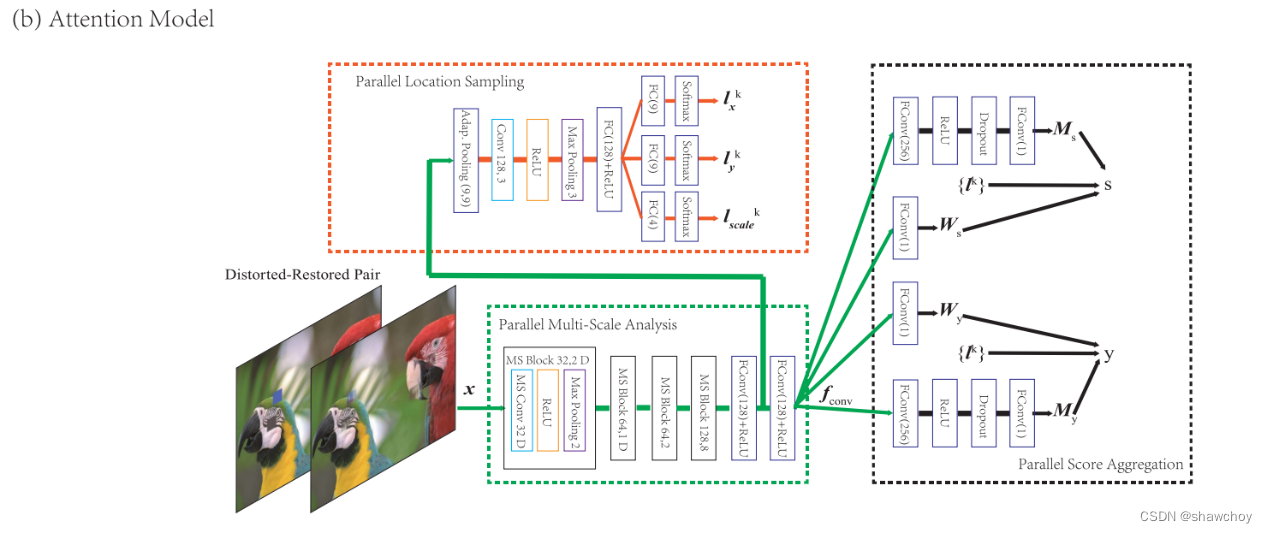

No-Reference Image Quality Assessment: An Attention Driven Approach

Source: IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 29, 2020

Approach:

The Restorative Adversarial Nets (RAN): generate a restored image from the distorted image

Parallel multi-scale image analysis module: takes the pair of distorted and restored images as input and extracts features

Parallel location sampling module: finds the regions that attract attention

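Parallel score aggregation module: combines the quality score map with the quality weight map to compute the quality score, and combines the distortion-type map with the weighted distortion-type map to determine the distortion type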
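The aggregation step can be sketched as a weighted average over spatial locations. I'm assuming the weight maps act as plain spatial weights and that the distortion type is the argmax of the weighted per-type averages; the function names are hypothetical, and the type branch may well use its own weight map rather than the quality one.

```python
import numpy as np

def aggregate_quality(score_map, weight_map, eps=1e-8):
    """Weighted average of a per-location quality score map -> one score."""
    score_map = np.asarray(score_map, dtype=float)
    weight_map = np.asarray(weight_map, dtype=float)
    return float((score_map * weight_map).sum() / (weight_map.sum() + eps))

def aggregate_distortion_type(class_maps, weight_map, eps=1e-8):
    """class_maps: (K, H, W) per-location scores for K distortion types.

    Each type's map is averaged with the spatial weights in weight_map (H, W);
    returns the index of the largest weighted average and all K averages.
    """
    class_maps = np.asarray(class_maps, dtype=float)
    weight_map = np.asarray(weight_map, dtype=float)
    per_type = (class_maps * weight_map).sum(axis=(1, 2)) / (weight_map.sum() + eps)
    return int(np.argmax(per_type)), per_type


score_map = [[1.0, 2.0], [3.0, 4.0]]
weight_map = [[0.0, 0.0], [0.0, 1.0]]  # only the bottom-right location is attended
print(aggregate_quality(score_map, weight_map))  # close to 4.0
class_maps = [[[0.9, 0.9], [0.9, 0.1]], [[0.1, 0.1], [0.1, 0.9]]]
print(aggregate_distortion_type(class_maps, weight_map)[0])  # 1
```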

This method is somewhat similar to Hallucinated-IQA above: both generate a restored image from the distorted image.

-

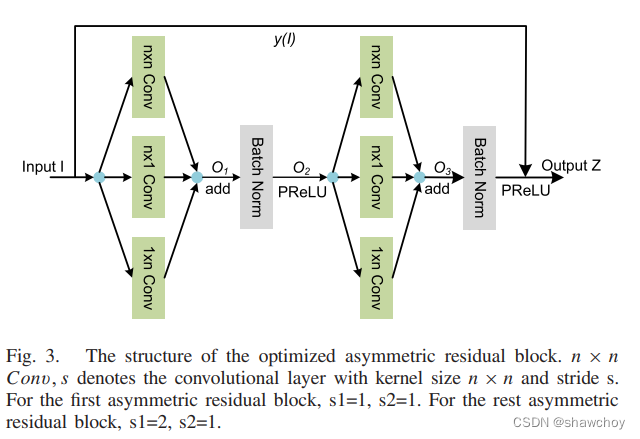

VCRNet: Visual Compensation Restoration Network for No-Reference Image Quality Assessment

Source: IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 31, 2022

Code: https://github.com/NUIST-Videocoding/VCRNet

GAN-based NR-IQA methods have three problems: 1. training instability; 2. fake information, e.g., artificial edges, fake textures, and false colors; 3. when the distorted image is severely degraded, GAN-based NR-IQA methods cannot restore it.

Contributions: 1. A VCRNet-based NR-IQA method is proposed, which uses a non-adversarial model to handle the distorted image restoration task. 2. A visual compensation module, an optimized asymmetric residual block, and an error map-based mixed loss function. 3. Multi-level restoration features are used for mapping to an image quality score. 4. SOTA

Method:

Visual Restoration Network: generates the restored image

Visual Compensation Module: enhances the feature representation ability and generates three feature maps, V1, V2 and V3

Optimized Asymmetric Residual Block (the pink blocks in the figure): extracts features; see the figure for the detailed operations
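I haven't checked the exact layer order inside VCRNet's block, so the sketch below only illustrates the general idea of an asymmetric residual block as I understand it: a 1×k convolution followed by a k×1 convolution (covering a k×k receptive field with 2k instead of k² weights per channel pair), plus a skip connection. It is single-channel and NumPy-only; the ReLU placement, the layer order and the function names are assumptions, not the paper's design.

```python
import numpy as np

def conv2d_same(x, kernel):
    """2-D cross-correlation with zero padding, so the output keeps x's shape.

    Assumes odd kernel sizes.
    """
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(x, ((ph, ph), (pw, pw)))  # zero padding by default
    out = np.zeros_like(x, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + x.shape[0], j:j + x.shape[1]]
    return out

def asymmetric_residual_block(x, k_row, k_col):
    """Hypothetical single-channel asymmetric residual block.

    k_row: (1, k) kernel, k_col: (k, 1) kernel, with k odd.
    y = x + ReLU(conv_{k x 1}(ReLU(conv_{1 x k}(x))))
    """
    h = np.maximum(conv2d_same(x, k_row), 0.0)  # horizontal 1 x k conv + ReLU
    h = np.maximum(conv2d_same(h, k_col), 0.0)  # vertical k x 1 conv + ReLU
    return x + h  # residual (skip) connection


x = np.arange(25, dtype=float).reshape(5, 5)
k_row = np.array([[0.0, 1.0, 0.0]])      # 1x3 identity kernel
k_col = np.array([[0.0], [1.0], [0.0]])  # 3x1 identity kernel
y = asymmetric_residual_block(x, k_row, k_col)
print(np.array_equal(y, 2 * x))  # True: identity branch plus skip connection
```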

EfficientNet: I'm not familiar with its detailed network structure and didn't dig into it; it comes from this reference: M. Tan and Q. Le, "EfficientNet: Rethinking model scaling for convolutional neural networks," in Proc. Int. Conf. Mach. Learn., Long Beach, CA, USA, 2019, vol. 97, pp. 6105–6114.

UNet: a network mentioned in the paper; the Visual Restoration Network is probably based on it with some improvements. I'm not familiar with it either. It comes from: O. Ronneberger, P. Fischer, and T. Brox, "U-Net: Convolutional networks for biomedical image segmentation," in Proc. Int. Conf. Med. Image Comput.-Assist. Intervent (MICCAI), Munich, Germany, 2015, pp. 234–241.

-

From Patches to Pictures (PaQ-2-PiQ): Mapping the Perceptual Space of Picture Quality

Source: CVPR 2020

Contributions: 1. built the largest picture quality database in existence 2. conducted the largest subjective picture quality study to date 3. collected both picture and patch quality labels to relate local and global picture quality 4. SOTA

Method:

- Baseline Model: ResNet-18 with a modified head trained on pictures

- RoIPool Model: trained on both picture and patch qualities

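The RoIPool model's idea comes from Faster R-CNN, but differs from it in three ways. First, the model regresses quality scores for the whole picture and for patches, whereas Faster R-CNN regresses bounding boxes. Second, Faster R-CNN uses two-head multi-task learning, with one head for classification and the other for detection, while the model has only one head that learns the whole picture and the patches jointly. Third, both heads of Faster R-CNN use RoI-pooled region features, while the model also uses global features.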
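A minimal RoI max-pooling sketch of the operator borrowed from Faster R-CNN: any box on the feature map, whether it covers the whole picture or a single patch, is max-pooled into a fixed grid, so a single head can score both. The box format `(y0, x0, y1, x1)`, the default 2×2 grid and the function name are assumptions for illustration.

```python
import numpy as np

def roi_max_pool(feature_map, box, output_size=(2, 2)):
    """Max-pool one region of a (C, H, W) feature map into a (C, oh, ow) grid.

    box = (y0, x0, y1, x1) in feature-map coordinates, end-exclusive.
    The region must be at least oh x ow so every grid cell is non-empty.
    """
    c = feature_map.shape[0]
    y0, x0, y1, x1 = box
    oh, ow = output_size
    region = feature_map[:, y0:y1, x0:x1]
    h, w = region.shape[1:]
    if h < oh or w < ow:
        raise ValueError("region is smaller than the output grid")
    # Split the region into an oh x ow grid of (roughly equal) cells.
    ys = np.linspace(0, h, oh + 1).astype(int)
    xs = np.linspace(0, w, ow + 1).astype(int)
    out = np.empty((c, oh, ow), dtype=feature_map.dtype)
    for i in range(oh):
        for j in range(ow):
            cell = region[:, ys[i]:ys[i + 1], xs[j]:xs[j + 1]]
            out[:, i, j] = cell.max(axis=(1, 2))  # max over each cell, per channel
    return out


fm = np.arange(16, dtype=float).reshape(1, 4, 4)
print(roi_max_pool(fm, (0, 0, 4, 4))[0])  # whole picture -> [[5, 7], [13, 15]]
print(roi_max_pool(fm, (0, 0, 2, 2), output_size=(1, 1))[0])  # one 2x2 patch -> [[5]]
```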

- Feedback Model: where the local quality predictions are fed back to improve global quality predictions

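The backbone splits into two branches: one applies RoI pooling followed by FC layers to predict the quality of local patches and of the whole picture, and the other applies global pooling.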
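The two branches and the feedback idea can be sketched roughly as follows. How PaQ-2-PiQ actually feeds the local predictions back is not described in these notes, so appending the mean patch score to the globally pooled features before a linear head is purely my assumption, as are the function names and the weights.

```python
import numpy as np

def global_avg_pool(feature_map):
    """Global branch: (C, H, W) -> (C,) by averaging over all spatial positions."""
    return feature_map.mean(axis=(1, 2))

def feedback_global_score(feature_map, patch_scores, w, b=0.0):
    """Hypothetical feedback head.

    feature_map: (C, H, W) backbone features.
    patch_scores: local quality predictions from the RoI branch.
    w: (C + 1,) weights of a linear layer; b: its bias.
    The mean patch score is appended to the pooled features, so the global
    prediction can depend on the local ones.
    """
    pooled = global_avg_pool(np.asarray(feature_map, dtype=float))
    z = np.concatenate([pooled, [float(np.mean(patch_scores))]])
    w = np.asarray(w, dtype=float)
    if w.shape != z.shape:
        raise ValueError(f"expected {z.shape[0]} weights, got shape {w.shape}")
    return float(z @ w + b)


fm = np.ones((2, 3, 3))
print(feedback_global_score(fm, [2.0, 4.0], w=[0.5, 0.5, 1.0]))  # 4.0
```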

-

Incorporating Semi-Supervised and Positive-Unlabeled Learning for Boosting Full Reference Image Quality Assessment

Source: CVPR 2022

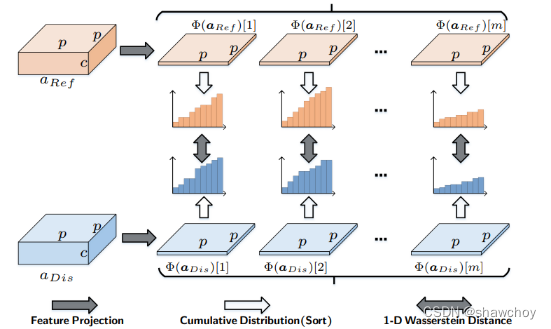

Contributions: 1. A joint semi-supervised and PU learning (JSPL) method is presented. 2. Spatial attention and local sliced Wasserstein distance are deployed in computing the difference map, to emphasize informative regions and suppress the effect of misalignment between the distorted and pristine images. 3. SOTA

Method:

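LocalSW (local sliced Wasserstein) distance: measures the difference between the reference and distorted feature maps. Unlike the previously proposed sliced Wasserstein loss, LocalSW divides the whole feature map into many non-overlapping patches, computes the difference between each pair of distorted and reference patches, and finally obtains the difference for the whole image.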
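A rough NumPy sketch of a local sliced Wasserstein distance as described above: within each non-overlapping patch, the feature vectors of the reference and distorted maps are treated as two point sets, projected onto random unit directions, sorted, and compared. Sorting makes the value ignore where inside a patch a feature sits, which is one way to see why it tolerates small misalignment. The patch size, number of directions, squared distance and function names are my assumptions; the paper's exact formulation may differ.

```python
import numpy as np

def sliced_wasserstein(a, b, directions):
    """Squared 1-D Wasserstein distance averaged over projection directions.

    a, b: (N, C) point sets of equal size; directions: (K, C) unit vectors.
    """
    pa = np.sort(a @ directions.T, axis=0)  # (N, K) sorted projections
    pb = np.sort(b @ directions.T, axis=0)
    return float(np.mean((pa - pb) ** 2))

def local_sw_map(ref_feat, dist_feat, patch=4, num_dirs=16, seed=0):
    """Difference map between two (C, H, W) feature maps, one value per patch."""
    c, h, w = ref_feat.shape
    rng = np.random.default_rng(seed)
    dirs = rng.normal(size=(num_dirs, c))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)  # random unit directions
    out = np.zeros((h // patch, w // patch))
    for i in range(h // patch):
        for j in range(w // patch):
            sl = (slice(None),
                  slice(i * patch, (i + 1) * patch),
                  slice(j * patch, (j + 1) * patch))
            a = ref_feat[sl].reshape(c, -1).T   # (patch*patch, C) reference points
            b = dist_feat[sl].reshape(c, -1).T  # (patch*patch, C) distorted points
            out[i, j] = sliced_wasserstein(a, b, dirs)
    return out


rng = np.random.default_rng(1)
ref = rng.normal(size=(8, 8, 8))  # (C, H, W)
dist = ref.copy()
dist[:, 4:8, 4:8] += 1.0  # distort only the bottom-right patch
print(local_sw_map(ref, dist, patch=4))  # only entry [1, 1] is non-zero
```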

Spatial Attention: computes the salient regions
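One simple way to combine a spatial attention map with a difference map is softmax-normalised weighted pooling, so salient regions dominate the final score. This is only my illustration: how JSPL computes and applies its attention is not covered by these notes, and `attn_logits` and the function name are assumptions.

```python
import numpy as np

def attention_pooled_difference(diff_map, attn_logits):
    """Collapse an (H, W) difference map to one number with spatial attention.

    attn_logits: (H, W) unnormalised saliency scores; a softmax over all
    positions turns them into weights that sum to 1.
    """
    diff_map = np.asarray(diff_map, dtype=float)
    logits = np.asarray(attn_logits, dtype=float)
    weights = np.exp(logits - logits.max())  # subtract the max for numerical stability
    weights /= weights.sum()
    return float((weights * diff_map).sum())


diff = [[1.0, 3.0]]
print(attention_pooled_difference(diff, [[0.0, 0.0]]))   # 2.0: uniform weights
print(attention_pooled_difference(diff, [[0.0, 10.0]]))  # close to 3.0: right cell dominates
```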
