新手小白深度学习第P6周

⭐本文为365天深度学习训练营的学习记录博客

⭐原作者为K同学啊

基础配置

🏡 我的环境:

● 语言环境:Python3.8

● 编译器:jupyter notebook

● 深度学习环境:Pytorch

一、前期准备

1.设置GPU

import torch

import torch.nn as nn

import torchvision.transforms as transforms

import torchvision

from torchvision import transforms, datasets

import os,PIL,pathlib,warnings

warnings.filterwarnings("ignore") #忽略警告信息

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

device

输出:

device(type='cpu')

2.导入数据

import os,PIL,random,pathlib

data_dir = './48-data/'

data_dir = pathlib.Path(data_dir)

data_paths = list(data_dir.glob('*'))

classeNames = [str(path).split("\\")[1] for path in data_paths]

classeNames

输出:

['Angelina Jolie',

'Brad Pitt',

'Denzel Washington',

'Hugh Jackman',

'Jennifer Lawrence',

'Johnny Depp',

'Kate Winslet',

'Leonardo DiCaprio',

'Megan Fox',

'Natalie Portman',

'Nicole Kidman',

'Robert Downey Jr',

'Sandra Bullock',

'Scarlett Johansson',

'Tom Cruise',

'Tom Hanks',

'Will Smith']

# 关于transforms.Compose的更多介绍可以参考:https://blog.csdn.net/qq_38251616/article/details/124878863

train_transforms = transforms.Compose([

transforms.Resize([224, 224]), # 将输入图片resize成统一尺寸

# transforms.RandomHorizontalFlip(), # 随机水平翻转

transforms.ToTensor(), # 将PIL Image或numpy.ndarray转换为tensor,并归一化到[0,1]之间

transforms.Normalize( # 标准化处理-->转换为标准正太分布(高斯分布),使模型更容易收敛

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]与std=[0.229,0.224,0.225] 从数据集中随机抽样计算得到的。

])

total_data = datasets.ImageFolder("./6-data/",transform=train_transforms)

total_data

输出:

Dataset ImageFolder

Number of datapoints: 1800

Root location: ./48-data/

StandardTransform

Transform: Compose(

Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=None)

ToTensor()

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

)```

```python

total_data.class_to_idx

输出:

{'Angelina Jolie': 0,

'Brad Pitt': 1,

'Denzel Washington': 2,

'Hugh Jackman': 3,

'Jennifer Lawrence': 4,

'Johnny Depp': 5,

'Kate Winslet': 6,

'Leonardo DiCaprio': 7,

'Megan Fox': 8,

'Natalie Portman': 9,

'Nicole Kidman': 10,

'Robert Downey Jr': 11,

'Sandra Bullock': 12,

'Scarlett Johansson': 13,

'Tom Cruise': 14,

'Tom Hanks': 15,

'Will Smith': 16}

3.划分数据集

train_size = int(0.8 * len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])

train_dataset, test_dataset

输出:

(<torch.utils.data.dataset.Subset at 0x2570a8b6680>,

<torch.utils.data.dataset.Subset at 0x2570a8b67a0>)

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=1)

for X, y in test_dl:

print("Shape of X [N, C, H, W]: ", X.shape)

print("Shape of y: ", y.shape, y.dtype)

break

输出:

Shape of X [N, C, H, W]: torch.Size([32, 3, 224, 224])

Shape of y: torch.Size([32]) torch.int64

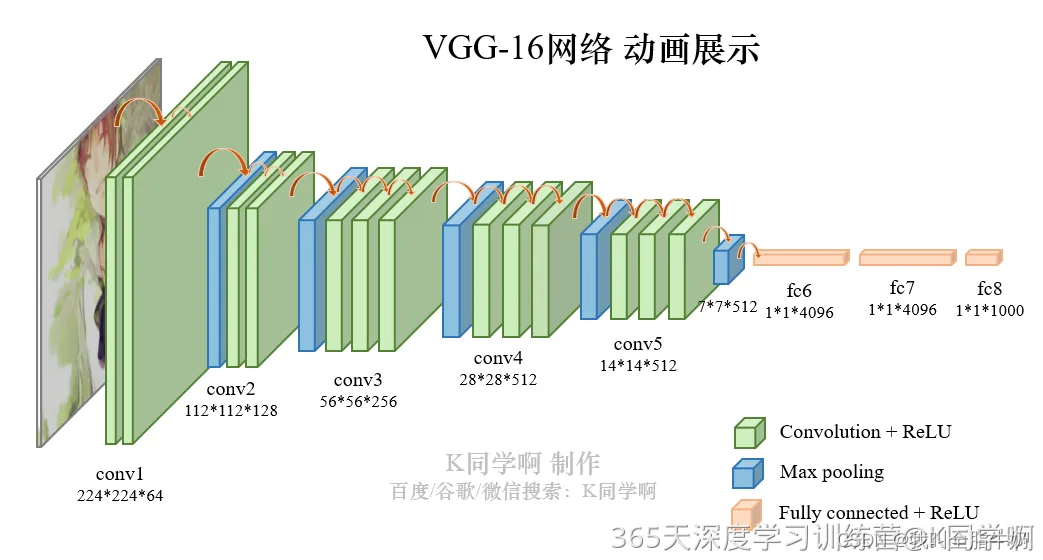

二、调用官方的VVG-16模型

VGG-16(Visual Geometry Group-16)是由牛津大学视觉几何组(Visual Geometry Group)提出的一种深度卷积神经网络架构,用于图像分类和对象识别任务。VGG-16在2014年被提出,是VGG系列中的一种。VGG-16之所以备受关注,是因为它在ImageNet图像识别竞赛中取得了很好的成绩,展示了其在大规模图像识别任务中的有效性。

以下是VGG-16的主要特点:

- 深度:VGG-16由16个卷积层和3个全连接层组成,因此具有相对较深的网络结构。这种深度有助于网络学习到更加抽象和复杂的特征。

- 卷积层的设计:VGG-16的卷积层全部采用3x3的卷积核和步长为1的卷积操作,同时在卷积层之后都接有ReLU激活函数。这种设计的好处在于,通过堆叠多个较小的卷积核,可以提高网络的非线性建模能力,同时减少了参数数量,从而降低了过拟合的风险。

- 池化层:在卷积层之后,VGG-16使用最大池化层来减少特征图的空间尺寸,帮助提取更加显著的特征并减少计算量。

- 全连接层:VGG-16在卷积层之后接有3个全连接层,最后一个全连接层输出与类别数相对应的向量,用于进行分类。

VGG-16结构说明:

● 13个卷积层(Convolutional Layer),分别用blockX_convX表示;

● 3个全连接层(Fully connected Layer),用classifier表示;

● 5个池化层(Pool layer)。

VGG-16包含了16个隐藏层(13个卷积层和3个全连接层),故称为VGG-16

from torchvision.models import vgg16

device = "cuda" if torch.cuda.is_available() else "cpu"

print("Using {} device".format(device))

# 加载预训练模型,并且对模型进行微调

model = vgg16(pretrained = True).to(device) # 加载预训练的vgg16模型

for param in model.parameters():

param.requires_grad = False # 冻结模型的参数,这样子在训练的时候只训练最后一层的参数

# 修改classifier模块的第6层(即:(6): Linear(in_features=4096, out_features=2, bias=True))

# 注意查看我们下方打印出来的模型

model.classifier._modules['6'] = nn.Linear(4096,len(classeNames)) # 修改vgg16模型中最后一层全连接层,输出目标类别个数

model.to(device)

model

输出:

Using cpu device

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=17, bias=True)

)

)

三、训练模型

1.编写训练函数

# 训练循环

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset) # 训练集的大小

num_batches = len(dataloader) # 批次数目, (size/batch_size,向上取整)

train_loss, train_acc = 0, 0 # 初始化训练损失和正确率

for X, y in dataloader: # 获取图片及其标签

X, y = X.to(device), y.to(device)

# 计算预测误差

pred = model(X) # 网络输出

loss = loss_fn(pred, y) # 计算网络输出和真实值之间的差距,targets为真实值,计算二者差值即为损失

# 反向传播

optimizer.zero_grad() # grad属性归零

loss.backward() # 反向传播

optimizer.step() # 每一步自动更新

# 记录acc与loss

train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()

train_loss += loss.item()

train_acc /= size

train_loss /= num_batches

return train_acc, train_loss

2.编写测试函数

def test (dataloader, model, loss_fn):

size = len(dataloader.dataset) # 测试集的大小

num_batches = len(dataloader) # 批次数目, (size/batch_size,向上取整)

test_loss, test_acc = 0, 0

# 当不进行训练时,停止梯度更新,节省计算内存消耗

with torch.no_grad():

for imgs, target in dataloader:

imgs, target = imgs.to(device), target.to(device)

# 计算loss

target_pred = model(imgs)

loss = loss_fn(target_pred, target)

test_loss += loss.item()

test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

test_acc /= size

test_loss /= num_batches

return test_acc, test_loss

3.设置动态学习率

# 调用官方动态学习率接口时使用

lambda1 = lambda epoch: 0.92 ** (epoch // 4)

learn_rate = 1e-4 # 初始学习率

optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1) #选定调整方法

4.正式训练

import copy

loss_fn = nn.CrossEntropyLoss() # 创建损失函数

epochs = 40

train_loss = []

train_acc = []

test_loss = []

test_acc = []

best_acc = 0 # 设置一个最佳准确率,作为最佳模型的判别指标

for epoch in range(epochs):

# 更新学习率(使用自定义学习率时使用)

# adjust_learning_rate(optimizer, epoch, learn_rate)

model.train()

epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)

scheduler.step() # 更新学习率(调用官方动态学习率接口时使用)

model.eval()

epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

# 保存最佳模型到 best_model

if epoch_test_acc > best_acc:

best_acc = epoch_test_acc

best_model = copy.deepcopy(model)

train_acc.append(epoch_train_acc)

train_loss.append(epoch_train_loss)

test_acc.append(epoch_test_acc)

test_loss.append(epoch_test_loss)

# 获取当前的学习率

lr = optimizer.state_dict()['param_groups'][0]['lr']

template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')

print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss,

epoch_test_acc*100, epoch_test_loss, lr))

# 保存最佳模型到文件中

PATH = './best_model.pth' # 保存的参数文件名

torch.save(model.state_dict(), PATH)

print('Done')

输出:

Epoch: 1, Train_acc:5.6%, Train_loss:2.948, Test_acc:4.4%, Test_loss:2.852, Lr:1.00E-04

Epoch: 2, Train_acc:6.5%, Train_loss:2.918, Test_acc:6.9%, Test_loss:2.810, Lr:1.00E-04

Epoch: 3, Train_acc:7.4%, Train_loss:2.890, Test_acc:11.1%, Test_loss:2.780, Lr:1.00E-04

Epoch: 4, Train_acc:9.9%, Train_loss:2.840, Test_acc:13.9%, Test_loss:2.765, Lr:9.20E-05

Epoch: 5, Train_acc:8.5%, Train_loss:2.832, Test_acc:16.9%, Test_loss:2.732, Lr:9.20E-05

Epoch: 6, Train_acc:10.6%, Train_loss:2.786, Test_acc:18.1%, Test_loss:2.711, Lr:9.20E-05

Epoch: 7, Train_acc:11.7%, Train_loss:2.782, Test_acc:18.6%, Test_loss:2.681, Lr:9.20E-05

Epoch: 8, Train_acc:13.1%, Train_loss:2.736, Test_acc:19.2%, Test_loss:2.656, Lr:8.46E-05

Epoch: 9, Train_acc:13.9%, Train_loss:2.723, Test_acc:19.4%, Test_loss:2.643, Lr:8.46E-05

Epoch:10, Train_acc:15.3%, Train_loss:2.714, Test_acc:19.4%, Test_loss:2.641, Lr:8.46E-05

Epoch:11, Train_acc:14.4%, Train_loss:2.698, Test_acc:20.6%, Test_loss:2.610, Lr:8.46E-05

Epoch:12, Train_acc:14.8%, Train_loss:2.696, Test_acc:20.8%, Test_loss:2.596, Lr:7.79E-05

Epoch:13, Train_acc:15.1%, Train_loss:2.654, Test_acc:21.4%, Test_loss:2.592, Lr:7.79E-05

Epoch:14, Train_acc:15.6%, Train_loss:2.664, Test_acc:21.7%, Test_loss:2.576, Lr:7.79E-05

Epoch:15, Train_acc:14.9%, Train_loss:2.649, Test_acc:21.9%, Test_loss:2.575, Lr:7.79E-05

Epoch:16, Train_acc:15.3%, Train_loss:2.628, Test_acc:21.9%, Test_loss:2.556, Lr:7.16E-05

Epoch:17, Train_acc:16.0%, Train_loss:2.619, Test_acc:21.9%, Test_loss:2.541, Lr:7.16E-05

Epoch:18, Train_acc:17.4%, Train_loss:2.607, Test_acc:22.2%, Test_loss:2.554, Lr:7.16E-05

Epoch:19, Train_acc:15.8%, Train_loss:2.586, Test_acc:22.5%, Test_loss:2.532, Lr:7.16E-05

Epoch:20, Train_acc:17.2%, Train_loss:2.592, Test_acc:22.5%, Test_loss:2.531, Lr:6.59E-05

Epoch:21, Train_acc:17.8%, Train_loss:2.561, Test_acc:22.5%, Test_loss:2.523, Lr:6.59E-05

Epoch:22, Train_acc:16.7%, Train_loss:2.577, Test_acc:22.5%, Test_loss:2.516, Lr:6.59E-05

Epoch:23, Train_acc:18.5%, Train_loss:2.549, Test_acc:22.5%, Test_loss:2.485, Lr:6.59E-05

Epoch:24, Train_acc:18.2%, Train_loss:2.550, Test_acc:21.9%, Test_loss:2.477, Lr:6.06E-05

Epoch:25, Train_acc:17.0%, Train_loss:2.551, Test_acc:21.9%, Test_loss:2.492, Lr:6.06E-05

Epoch:26, Train_acc:16.8%, Train_loss:2.552, Test_acc:21.9%, Test_loss:2.483, Lr:6.06E-05

Epoch:27, Train_acc:17.7%, Train_loss:2.515, Test_acc:21.9%, Test_loss:2.477, Lr:6.06E-05

Epoch:28, Train_acc:18.4%, Train_loss:2.519, Test_acc:21.9%, Test_loss:2.465, Lr:5.58E-05

Epoch:29, Train_acc:17.9%, Train_loss:2.524, Test_acc:22.5%, Test_loss:2.454, Lr:5.58E-05

Epoch:30, Train_acc:19.8%, Train_loss:2.507, Test_acc:23.1%, Test_loss:2.464, Lr:5.58E-05

Epoch:31, Train_acc:17.4%, Train_loss:2.511, Test_acc:23.6%, Test_loss:2.451, Lr:5.58E-05

Epoch:32, Train_acc:18.9%, Train_loss:2.490, Test_acc:23.6%, Test_loss:2.423, Lr:5.13E-05

Epoch:33, Train_acc:20.6%, Train_loss:2.483, Test_acc:23.6%, Test_loss:2.438, Lr:5.13E-05

Epoch:34, Train_acc:19.0%, Train_loss:2.490, Test_acc:24.2%, Test_loss:2.447, Lr:5.13E-05

Epoch:35, Train_acc:19.2%, Train_loss:2.471, Test_acc:24.2%, Test_loss:2.428, Lr:5.13E-05

Epoch:36, Train_acc:19.9%, Train_loss:2.464, Test_acc:24.2%, Test_loss:2.428, Lr:4.72E-05

Epoch:37, Train_acc:19.8%, Train_loss:2.457, Test_acc:24.2%, Test_loss:2.411, Lr:4.72E-05

Epoch:38, Train_acc:19.3%, Train_loss:2.461, Test_acc:24.7%, Test_loss:2.404, Lr:4.72E-05

Epoch:39, Train_acc:20.4%, Train_loss:2.456, Test_acc:24.7%, Test_loss:2.416, Lr:4.72E-05

Epoch:40, Train_acc:18.2%, Train_loss:2.469, Test_acc:24.7%, Test_loss:2.407, Lr:4.34E-05

Done

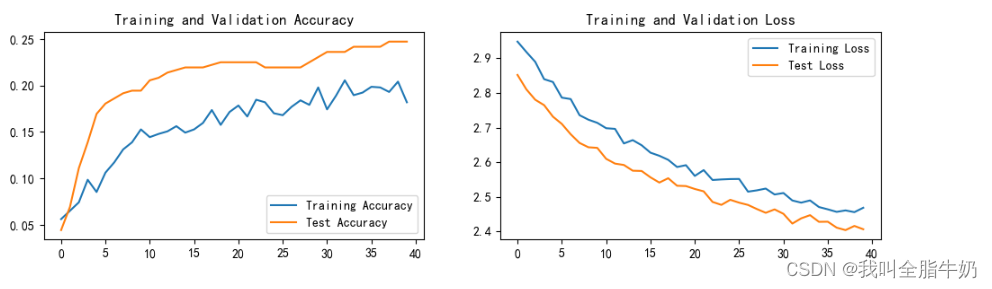

四、结果可视化

1.Loss与Accuracy图

import matplotlib.pyplot as plt

#隐藏警告

import warnings

warnings.filterwarnings("ignore") #忽略警告信息

plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号

plt.rcParams['figure.dpi'] = 100 #分辨率

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

输出:

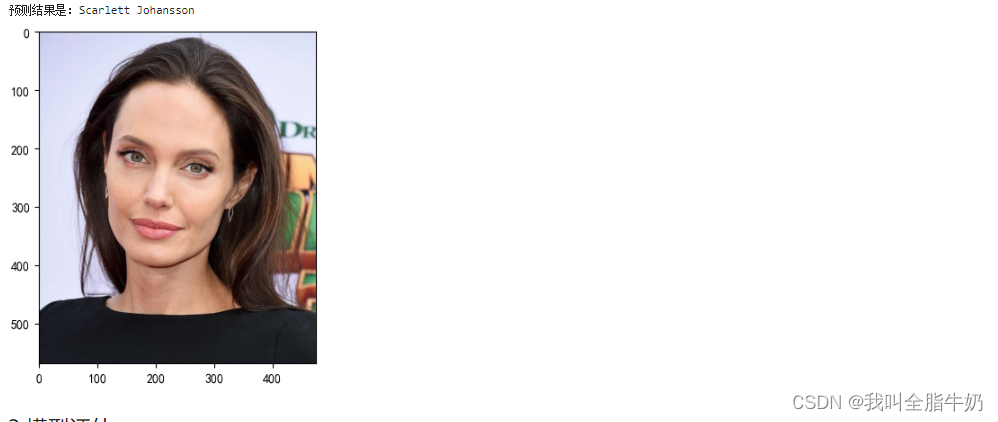

2.指定图片预测

from PIL import Image

classes = list(total_data.class_to_idx)

def predict_one_image(image_path, model, transform, classes):

test_img = Image.open(image_path).convert('RGB')

plt.imshow(test_img) # 展示预测的图片

test_img = transform(test_img)

img = test_img.to(device).unsqueeze(0)

model.eval()

output = model(img)

_,pred = torch.max(output,1)

pred_class = classes[pred]

print(f'预测结果是:{pred_class}')

# 预测训练集中的某张照片

predict_one_image(image_path='./6-data/Angelina Jolie/001_fe3347c0.jpg',

model=model,

transform=train_transforms,

classes=classes)

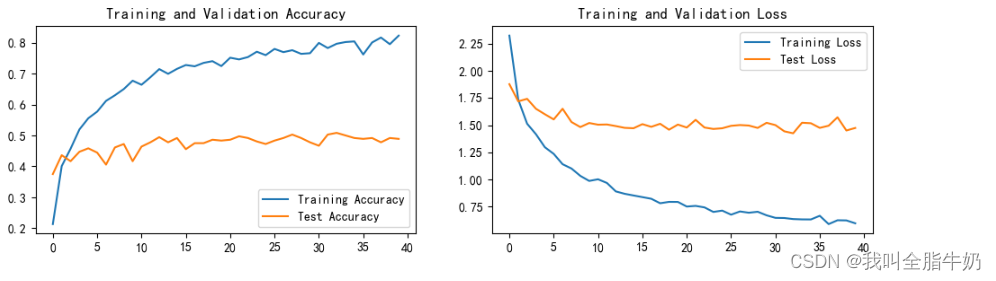

五、个人总结

将优化器从SGD调整为Adam,准确率获得了显著增长,将学习率调成1e-3后准确率进一步提高,但是始终达不到百分之60。

Epoch: 1, Train_acc:21.3%, Train_loss:2.325, Test_acc:37.5%, Test_loss:1.878, Lr:1.00E-03

Epoch: 2, Train_acc:40.1%, Train_loss:1.735, Test_acc:43.6%, Test_loss:1.720, Lr:1.00E-03

Epoch: 3, Train_acc:45.7%, Train_loss:1.514, Test_acc:41.7%, Test_loss:1.743, Lr:1.00E-03

Epoch: 4, Train_acc:51.9%, Train_loss:1.417, Test_acc:44.7%, Test_loss:1.651, Lr:9.20E-04

Epoch: 5, Train_acc:55.6%, Train_loss:1.298, Test_acc:45.8%, Test_loss:1.601, Lr:9.20E-04

Epoch: 6, Train_acc:57.7%, Train_loss:1.236, Test_acc:44.4%, Test_loss:1.554, Lr:9.20E-04

Epoch: 7, Train_acc:61.2%, Train_loss:1.141, Test_acc:40.6%, Test_loss:1.652, Lr:9.20E-04

Epoch: 8, Train_acc:63.0%, Train_loss:1.102, Test_acc:46.1%, Test_loss:1.530, Lr:8.46E-04

Epoch: 9, Train_acc:65.0%, Train_loss:1.034, Test_acc:47.2%, Test_loss:1.483, Lr:8.46E-04

Epoch:10, Train_acc:67.7%, Train_loss:0.988, Test_acc:41.7%, Test_loss:1.521, Lr:8.46E-04

Epoch:11, Train_acc:66.4%, Train_loss:1.003, Test_acc:46.4%, Test_loss:1.505, Lr:8.46E-04

Epoch:12, Train_acc:68.8%, Train_loss:0.969, Test_acc:47.8%, Test_loss:1.507, Lr:7.79E-04

Epoch:13, Train_acc:71.5%, Train_loss:0.891, Test_acc:49.4%, Test_loss:1.492, Lr:7.79E-04

Epoch:14, Train_acc:69.9%, Train_loss:0.869, Test_acc:47.8%, Test_loss:1.476, Lr:7.79E-04

Epoch:15, Train_acc:71.5%, Train_loss:0.854, Test_acc:49.2%, Test_loss:1.472, Lr:7.79E-04

Epoch:16, Train_acc:72.8%, Train_loss:0.839, Test_acc:45.6%, Test_loss:1.510, Lr:7.16E-04

Epoch:17, Train_acc:72.4%, Train_loss:0.824, Test_acc:47.5%, Test_loss:1.485, Lr:7.16E-04

Epoch:18, Train_acc:73.5%, Train_loss:0.782, Test_acc:47.5%, Test_loss:1.513, Lr:7.16E-04

Epoch:19, Train_acc:74.0%, Train_loss:0.795, Test_acc:48.6%, Test_loss:1.459, Lr:7.16E-04

Epoch:20, Train_acc:72.4%, Train_loss:0.795, Test_acc:48.3%, Test_loss:1.506, Lr:6.59E-04

Epoch:21, Train_acc:75.1%, Train_loss:0.752, Test_acc:48.6%, Test_loss:1.479, Lr:6.59E-04

Epoch:22, Train_acc:74.6%, Train_loss:0.758, Test_acc:49.7%, Test_loss:1.550, Lr:6.59E-04

Epoch:23, Train_acc:75.3%, Train_loss:0.744, Test_acc:49.2%, Test_loss:1.479, Lr:6.59E-04

Epoch:24, Train_acc:77.1%, Train_loss:0.702, Test_acc:48.1%, Test_loss:1.466, Lr:6.06E-04

Epoch:25, Train_acc:76.0%, Train_loss:0.715, Test_acc:47.2%, Test_loss:1.472, Lr:6.06E-04

Epoch:26, Train_acc:78.0%, Train_loss:0.677, Test_acc:48.3%, Test_loss:1.495, Lr:6.06E-04

Epoch:27, Train_acc:76.9%, Train_loss:0.707, Test_acc:49.2%, Test_loss:1.502, Lr:6.06E-04

Epoch:28, Train_acc:77.6%, Train_loss:0.695, Test_acc:50.3%, Test_loss:1.497, Lr:5.58E-04

Epoch:29, Train_acc:76.4%, Train_loss:0.704, Test_acc:49.2%, Test_loss:1.475, Lr:5.58E-04

Epoch:30, Train_acc:76.6%, Train_loss:0.671, Test_acc:47.8%, Test_loss:1.523, Lr:5.58E-04

Epoch:31, Train_acc:79.9%, Train_loss:0.648, Test_acc:46.7%, Test_loss:1.501, Lr:5.58E-04

Epoch:32, Train_acc:78.3%, Train_loss:0.646, Test_acc:50.3%, Test_loss:1.444, Lr:5.13E-04

Epoch:33, Train_acc:79.7%, Train_loss:0.637, Test_acc:50.8%, Test_loss:1.426, Lr:5.13E-04

Epoch:34, Train_acc:80.2%, Train_loss:0.634, Test_acc:50.0%, Test_loss:1.523, Lr:5.13E-04

Epoch:35, Train_acc:80.4%, Train_loss:0.633, Test_acc:49.2%, Test_loss:1.518, Lr:5.13E-04

Epoch:36, Train_acc:76.2%, Train_loss:0.667, Test_acc:48.9%, Test_loss:1.476, Lr:4.72E-04

Epoch:37, Train_acc:80.1%, Train_loss:0.590, Test_acc:49.2%, Test_loss:1.496, Lr:4.72E-04

Epoch:38, Train_acc:81.7%, Train_loss:0.626, Test_acc:47.8%, Test_loss:1.573, Lr:4.72E-04

Epoch:39, Train_acc:79.5%, Train_loss:0.624, Test_acc:49.2%, Test_loss:1.452, Lr:4.72E-04

Epoch:40, Train_acc:82.3%, Train_loss:0.598, Test_acc:48.9%, Test_loss:1.475, Lr:4.34E-04

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?