A basic understanding of naive Bayes classification:

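Naive Bayes is a classification method based on Bayes' theorem together with the assumption that features are conditionally independent. Put simply, a naive Bayes classifier assumes that, given the class, each feature of a sample is independent of every other feature. For example, a fruit that is red, round, and about 4 inches in diameter can be judged to be an apple. Even if these features depend on one another, or some of them are determined by others, the classifier treats each one as contributing independently to the probability that the fruit is an apple. Despite this naive and oversimplified assumption, naive Bayes classifiers still perform quite well in many complex real-world situations. One advantage of the method is that the necessary parameters can be estimated from a small amount of training data: the prior and class-conditional probabilities for discrete variables, and the per-class mean and variance for continuous variables.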
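The independence assumption above can be written out as a short derivation. The symbols are my own notation, not taken from the original post: $y$ is the class and $x_1, \dots, x_n$ are the feature values. Bayes' theorem gives

$$
P(y \mid x_1, \dots, x_n) = \frac{P(y)\, P(x_1, \dots, x_n \mid y)}{P(x_1, \dots, x_n)}
$$

and under the naive assumption the class-conditional term factorizes:

$$
P(x_1, \dots, x_n \mid y) = \prod_{i=1}^{n} P(x_i \mid y)
$$

Since the denominator does not depend on $y$, the predicted class is

$$
\hat{y} = \arg\max_{y} \; P(y) \prod_{i=1}^{n} P(x_i \mid y)
$$

In the apple example, this means the probabilities of "red", "round", and "about 4 inches" given "apple" are simply multiplied together, as if none of them told us anything about the others.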

For concrete use cases of NaiveBayes and the theory behind it, see: NLP系列(4)_朴素贝叶斯实战与进阶

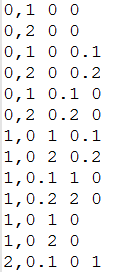

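Data used by the Spark MLlib Java program:

Training data: C:\hello\trainData.txt

In each line, the value before the comma is the class label, and the values after the comma are the feature vector (space-separated).

Test data: C:\hello\testData.txt

Each line is a feature vector, space-separated.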
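To make the two file formats concrete, here is a minimal plain-Java sketch of how one line of each kind could be parsed. The class `LineFormatDemo`, its method names, and the sample lines are hypothetical and made up for illustration; they are not the contents of the actual files and not part of Spark.

```java
import java.util.Arrays;

// Hypothetical helper for illustration only; not a Spark class.
final class LineFormatDemo {

    /** Parses space-separated feature values, e.g. "0 2 0". */
    static double[] parseFeatures(String text) {
        String[] tokens = text.trim().split("\\s+");
        double[] features = new double[tokens.length];
        for (int i = 0; i < tokens.length; i++) {
            features[i] = Double.parseDouble(tokens[i]);
        }
        return features;
    }

    /** Returns the label of a training line of the form "label,f1 f2 f3". */
    static double parseLabel(String trainingLine) {
        return Double.parseDouble(trainingLine.split(",")[0].trim());
    }

    /** Returns the feature values of a training line of the form "label,f1 f2 f3". */
    static double[] parseTrainingFeatures(String trainingLine) {
        return parseFeatures(trainingLine.split(",")[1]);
    }

    public static void main(String[] args) {
        String trainingLine = "0,1 0 0"; // made-up sample: label 0, three features
        String testLine = "0 2 0";       // made-up sample: features only, no label

        System.out.println("label    = " + parseLabel(trainingLine));
        System.out.println("features = " + Arrays.toString(parseTrainingFeatures(trainingLine)));
        System.out.println("test     = " + Arrays.toString(parseFeatures(testLine)));
    }
}
```

Unlike the program in this post, which reads exactly three feature values per line, this sketch accepts any number of features.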

Spark MLlib NaiveBayes Java program:

package MLlibTest;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.api.java.function.Function;
import org.apache.spark.api.java.function.PairFunction;
import org.apache.spark.mllib.classification.NaiveBayes;
import org.apache.spark.mllib.classification.NaiveBayesModel;
import org.apache.spark.mllib.linalg.Vector;
import org.apache.spark.mllib.linalg.Vectors;
import org.apache.spark.mllib.regression.LabeledPoint;
import scala.Tuple2;

public class NaiveBayesTest {

    public static void main(String[] args) {
        SparkConf conf = new SparkConf().setAppName("NaiveBayesTest").setMaster("local[*]");
        JavaSparkContext jsc = new JavaSparkContext(conf);

        // Read each line of the training file into an RDD.
        JavaRDD<String> lines = jsc.textFile("C://hello//trainData.txt");

        // Split each line and wrap it in a LabeledPoint.
        // Line format: "label,f1 f2 f3" (exactly three features are expected).
        JavaRDD<LabeledPoint> data = lines.map(new Function<String, LabeledPoint>() {
            private static final long serialVersionUID = 1L;

            @Override
            public LabeledPoint call(String str) throws Exception {
                String[] t1 = str.split(",");
                String[] t2 = t1[1].split(" ");
                return new LabeledPoint(Double.parseDouble(t1[0]),
                        Vectors.dense(Double.parseDouble(t2[0]), Double.parseDouble(t2[1]),
                                Double.parseDouble(t2[2])));
            }
        });

        // Randomly split the LabeledPoints into two parts at a 7:3 ratio (seed 11).
        // When practicing on a small data set, this step can be skipped and all
        // of the data used for training.
        JavaRDD<LabeledPoint>[] splits = data.randomSplit(new double[] { 0.7, 0.3 }, 11L);
        JavaRDD<LabeledPoint> traindata = splits[0];
        JavaRDD<LabeledPoint> testdata = splits[1];

        // Train the naive Bayes model with smoothing parameter 1.0.
        // The "multinomial" model type requires non-negative feature values.
        final NaiveBayesModel model = NaiveBayes.train(traindata.rdd(), 1.0, "multinomial");

        // Evaluate the model on the held-out part; skip this if the data was not split.
        JavaPairRDD<Double, Double> predictionAndLabel = testdata
                .mapToPair(new PairFunction<LabeledPoint, Double, Double>() {
                    private static final long serialVersionUID = 1L;

                    @Override
                    public Tuple2<Double, Double> call(LabeledPoint p) {
                        return new Tuple2<Double, Double>(model.predict(p.features()), p.label());
                    }
                });

        // Accuracy = number of correct predictions / number of test points.
        double accuracy = predictionAndLabel.filter(new Function<Tuple2<Double, Double>, Boolean>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Boolean call(Tuple2<Double, Double> pl) {
                return pl._1().equals(pl._2());
            }
        }).count() / (double) testdata.count();
        System.out.println("Model accuracy: " + accuracy);

        // Option 1: use the trained model directly to predict the unlabeled test file.
        JavaRDD<String> testData = jsc.textFile("C://hello//testData.txt");
        JavaPairRDD<String, Double> res = testData.mapToPair(new PairFunction<String, String, Double>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Tuple2<String, Double> call(String line) throws Exception {
                String[] t2 = line.split(" ");
                Vector v = Vectors.dense(Double.parseDouble(t2[0]), Double.parseDouble(t2[1]),
                        Double.parseDouble(t2[2]));
                double prediction = model.predict(v);
                return new Tuple2<String, Double>(line, prediction);
            }
        });
        // Writes (line, predicted label) pairs; the output directory must not already exist.
        res.saveAsTextFile("C://hello//res");

        // Option 2: save the model, load it back, and predict with the loaded model.
        model.save(jsc.sc(), "C://hello//BayesModel");
        NaiveBayesModel sameModel = NaiveBayesModel.load(jsc.sc(), "C://hello//BayesModel");
        // The prediction code is the same as in Option 1, with sameModel in place of model.

        jsc.stop();
    }
}
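To give a feel for what a multinomial naive Bayes model with a smoothing parameter of 1.0 computes, here is a small plain-Java sketch of the textbook formulation with additive (Laplace) smoothing. It is not Spark's implementation, and Spark may differ in details. The class `MultinomialNbSketch`, its fields, and the toy data in `main` are hypothetical and only for illustration.

```java
import java.util.Arrays;

// Illustrative sketch only; the names here are hypothetical, not Spark APIs.
final class MultinomialNbSketch {
    final double[] logPrior;        // log P(class)
    final double[][] logLikelihood; // log P(feature j | class)

    /** Estimates smoothed log-probabilities from non-negative feature counts. */
    MultinomialNbSketch(double[][] features, int[] labels, int numClasses, double lambda) {
        int numFeatures = features[0].length;
        double[] classCounts = new double[numClasses];
        double[][] featureSums = new double[numClasses][numFeatures];
        for (int i = 0; i < features.length; i++) {
            classCounts[labels[i]] += 1.0;
            for (int j = 0; j < numFeatures; j++) {
                featureSums[labels[i]][j] += features[i][j];
            }
        }
        logPrior = new double[numClasses];
        logLikelihood = new double[numClasses][numFeatures];
        for (int c = 0; c < numClasses; c++) {
            // Smoothed class prior.
            logPrior[c] = Math.log((classCounts[c] + lambda)
                    / (features.length + numClasses * lambda));
            // Smoothed per-class feature probabilities.
            double total = Arrays.stream(featureSums[c]).sum();
            for (int j = 0; j < numFeatures; j++) {
                logLikelihood[c][j] = Math.log((featureSums[c][j] + lambda)
                        / (total + numFeatures * lambda));
            }
        }
    }

    /** Returns the class with the highest log-posterior score for x. */
    int predict(double[] x) {
        int best = 0;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int c = 0; c < logPrior.length; c++) {
            double score = logPrior[c];
            for (int j = 0; j < x.length; j++) {
                score += x[j] * logLikelihood[c][j];
            }
            if (score > bestScore) {
                bestScore = score;
                best = c;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up toy data: each class "uses" a different feature.
        double[][] x = { {1, 0, 0}, {2, 0, 0}, {0, 1, 0}, {0, 2, 0}, {0, 0, 1}, {0, 0, 2} };
        int[] y = { 0, 0, 1, 1, 2, 2 };
        MultinomialNbSketch model = new MultinomialNbSketch(x, y, 3, 1.0);
        System.out.println(model.predict(new double[] {3, 0, 0}));
    }
}
```

Working in log space turns the product of probabilities into a sum, which avoids numerical underflow when there are many features. The smoothing term keeps a feature that never appeared with a class from forcing that class's score to negative infinity.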

Conclusion:

This was put together in a hurry. If you spot any mistakes, please point them out so we can learn from each other.
